Understanding LiteLLM Pricing For 2026

LiteLLM is an open source proxy that's free to use and community-maintained. It is best suited to teams with strong DevOps expertise who want complete infrastructure control and can handle the complexity of self-hosting without enterprise SLAs or dedicated support.

What Is LiteLLM AI Gateway?

LiteLLM AI gateway is an open source Python SDK and proxy server that provides a unified interface to call 100+ LLM APIs using an OpenAI-compatible format. The project started as a simple wrapper library to standardize LLM calls across different LLM providers like OpenAI, Anthropic, Azure, Vertex AI, Bedrock, and others.

Unlike managed AI gateways that offer hosted infrastructure and enterprise support, LiteLLM AI gateway operates on a fundamentally different model. You download the open source code, deploy it on your own infrastructure, and maintain it yourself. There are no usage-based fees, no log limits, and no request quotas imposed by LiteLLM AI gateway itself.

However, this "free" approach comes with hidden costs that many teams underestimate during evaluation.

How LiteLLM Approaches Pricing Overall

LiteLLM's pricing philosophy is straightforward: the software is free (MIT licensed), but you own the entire operational burden.

The Three Cost Layers

1. LiteLLM Software License

The proxy server software itself is $0. You can fork it, modify it, and use it commercially without any licensing fees.

2. Infrastructure Costs

You pay for servers, databases, monitoring tools, load balancing, and all supporting infrastructure. For a production deployment handling moderate traffic, typical infrastructure costs range from $200-$500 monthly depending on traffic volume, redundancy requirements, and cloud provider.

3. LLM Provider Costs

You pay LLM providers (OpenAI, Anthropic, etc.) directly at their standard API rates. LiteLLM doesn't add any markup or transaction fees.

Optional Enterprise Tier

In 2024, LiteLLM introduced commercial enterprise offerings for teams that want additional features and support:

- Enterprise Basic: $250/month with Prometheus metrics, LLM guardrails, JWT authentication, SSO, and audit logs

- Enterprise Premium: $30,000/year for organizations with substantial token usage or strict compliance requirements

Most teams evaluating LiteLLM are considering the free open source version, not these enterprise tiers.

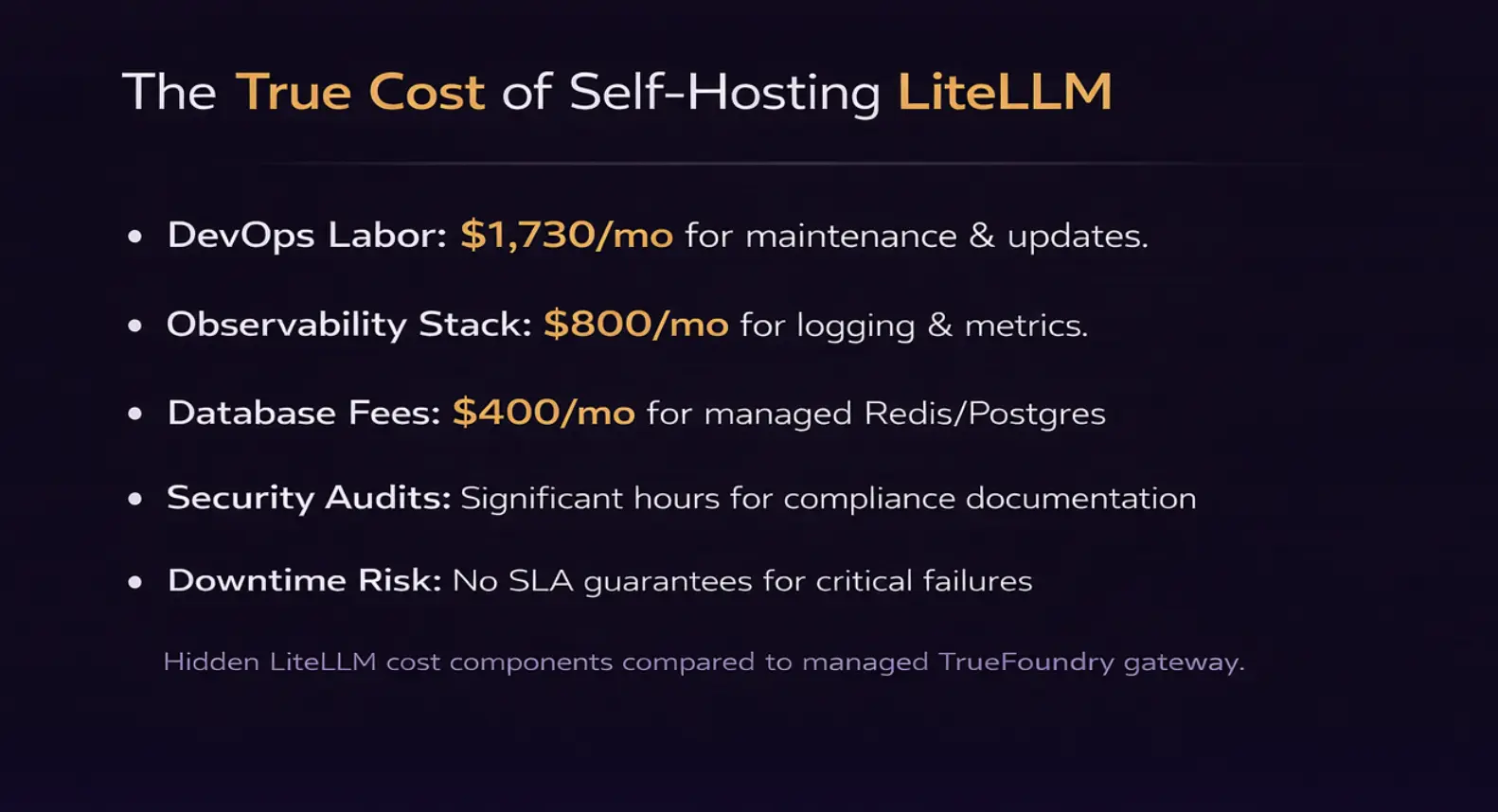

The Hidden Costs of "Free" Open Source Proxies

When engineering teams evaluate LiteLLM pricing, they often focus on the $0 price tag without accounting for total cost of ownership (TCO). Here are the hidden costs that emerge in production:

1. DevOps and Infrastructure Management

Running LiteLLM gateway in production requires dedicated engineering time for:

- Initial deployment: Setting up Kubernetes clusters, configuring load balancers, establishing CI/CD pipelines, and integrating with monitoring systems typically takes 2-4 weeks of senior DevOps time

- Ongoing maintenance: Security patches, dependency updates, scaling adjustments, and infrastructure troubleshooting require 10-20 hours monthly

- Incident response: When the proxy server goes down at 2 AM, your on-call engineer handles it, not a vendor's support team.

For a senior DevOps engineer at a $150K annual salary, 20 hours of maintenance per month translates to approximately $1,730 in labor costs per month (about $86.50/hour once benefits and overhead are added to salary).
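The $1,730 figure only works out once the salary is converted into a loaded hourly rate. A minimal sketch of that arithmetic, assuming a 2,080-hour work year and a 1.2× loaded-cost multiplier for benefits and payroll taxes (both are assumptions, not stated in the original):

```python
# Sketch: convert an annual salary into a monthly maintenance labor cost.
# Assumptions (not from the source): 2,080 paid hours per year and a
# 1.2x loaded-cost multiplier covering benefits and payroll taxes.
HOURS_PER_YEAR = 2080


def loaded_hourly_rate(annual_salary: float, loaded_multiplier: float = 1.2) -> float:
    """Hourly cost of an engineer including overhead."""
    return annual_salary * loaded_multiplier / HOURS_PER_YEAR


def monthly_labor_cost(annual_salary: float, hours_per_month: float,
                       loaded_multiplier: float = 1.2) -> float:
    """Labor cost of spending `hours_per_month` on proxy maintenance."""
    return loaded_hourly_rate(annual_salary, loaded_multiplier) * hours_per_month


if __name__ == "__main__":
    for hours in (10, 15, 20):
        print(f"{hours} h/month -> ${monthly_labor_cost(150_000, hours):,.0f}")
```

With these assumptions, 20 hours comes to roughly $1,731, and 10-15 hours lands near the $865-$1,300 range used for Enterprise Premium later in this article.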

2. Monitoring and Observability Stack

The open source version of LiteLLM doesn't include production-grade observability out of the box. You need to integrate:

- Logging infrastructure: ELK stack, Splunk, or CloudWatch for centralized logs

- Metrics collection: Prometheus + Grafana for performance monitoring

- Alerting systems: PagerDuty or similar for incident management

- Tracing: Distributed tracing with OpenTelemetry for debugging multi-model workflows

Setting up and maintaining this observability stack adds another $200-$800 monthly in infrastructure costs, plus engineering time for configuration and tuning.

3. Database and State Management

The LiteLLM proxy requires a database (typically PostgreSQL, often paired with Redis for state shared across replicas) for:

- Virtual key management (managing every API key).

- Per-key and per-user budget tracking for precise cost control.

- Rate limits state management.

- Request logs and analytics.
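To make the database's role concrete, here is a minimal in-memory sketch of the state listed above: virtual keys, per-key budgets, per-user spend, and a request log. The class and field names are hypothetical and do not mirror LiteLLM's actual schema; in production this state lives in the managed database so that every proxy replica sees the same numbers.

```python
# Hypothetical sketch of per-key state a LiteLLM-style proxy persists.
# Names are illustrative only; this is not LiteLLM's real schema or API.
from dataclasses import dataclass, field


@dataclass
class VirtualKey:
    key_id: str
    user_id: str
    max_budget_usd: float                      # hard spend cap for this key
    spend_usd: float = 0.0                     # running total, updated per call
    request_log: list = field(default_factory=list)


class KeyStore:
    """In-memory stand-in for the database tables behind virtual keys."""

    def __init__(self) -> None:
        self._keys: dict[str, VirtualKey] = {}

    def create_key(self, key_id: str, user_id: str, max_budget_usd: float) -> VirtualKey:
        key = VirtualKey(key_id, user_id, max_budget_usd)
        self._keys[key_id] = key
        return key

    def within_budget(self, key_id: str) -> bool:
        """Checked before forwarding a request upstream."""
        key = self._keys[key_id]
        return key.spend_usd < key.max_budget_usd

    def record_usage(self, key_id: str, model: str, cost_usd: float) -> None:
        """Called after the provider responds, with the computed request cost."""
        key = self._keys[key_id]
        key.spend_usd += cost_usd
        key.request_log.append((model, cost_usd))

    def spend_by_user(self, user_id: str) -> float:
        return sum(k.spend_usd for k in self._keys.values() if k.user_id == user_id)
```

Because the budget check and the spend update happen on different requests, a multi-replica deployment needs this state in a shared store rather than in process memory, which is why the database has to be highly available.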

For production LLM deployments, you need managed database services with backups, replication, and high availability. Expect $100-$400 monthly depending on scale.

4. Security and Compliance Overhead

Without a vendor managing security updates, your team is responsible for:

- Vulnerability scanning: Regular dependency audits using tools like Snyk or Dependabot.

- Patch management: Testing and deploying security updates promptly.

- Compliance documentation: For SOC 2, HIPAA, or ISO 27001 audits, you document your self-hosted proxy's security controls.

- Access controls: Implementing and maintaining RBAC, SSO, and audit logging.

For enterprises with compliance requirements, the lack of vendor-provided security certifications and SLAs creates significant audit friction.

5. Community Support Limitations

LiteLLM is community-maintained, which means:

- No SLA guarantees: If the proxy has a critical bug affecting your production traffic, you rely on GitHub issues and community contributors to fix it.

- Documentation gaps: Community docs are often incomplete or outdated for edge cases.

- Feature requests: New capabilities depend on maintainer priorities, not your business needs.

- Breaking changes: Open source projects sometimes introduce breaking changes that require refactoring your integration code.

For startups and small teams, this community-driven model can work well. For enterprises running mission-critical AI applications serving millions of users, the lack of dedicated support is a significant risk.

LiteLLM Pricing Plan Breakdown

Open Source (Free)

Price: $0 for software license | Infrastructure: $200-$500/month typical

Best For: Teams with strong DevOps capabilities who need complete infrastructure control and can handle self-hosting complexity.

The open source version includes unified API access to 100+ LLM providers, virtual key management, budget tracking per key/user, load balancing and fallback routing, rate limiting (RPM/TPM), and integrations with Langfuse, LangSmith, and OpenTelemetry logging.
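The fallback routing listed above boils down to trying deployments in priority order until one succeeds. A minimal sketch, with hypothetical provider callables standing in for real API clients; LiteLLM's own router is considerably more involved (retries, cooldowns, load balancing), so treat this as the idea rather than its implementation:

```python
# Minimal sketch of ordered fallback routing across providers.
# Provider callables are hypothetical stand-ins for real API clients.
from typing import Callable, Sequence

Provider = tuple[str, Callable[[str], str]]  # (deployment name, call function)


class AllProvidersFailed(Exception):
    pass


def complete_with_fallback(prompt: str, providers: Sequence[Provider]) -> tuple[str, str]:
    """Try each provider in order; return (provider_name, response) from the first success."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real router would separate retryable errors
            errors.append(f"{name}: {exc!r}")
    raise AllProvidersFailed("; ".join(errors))
```

For example, if the primary deployment raises a timeout, `complete_with_fallback("hi", [("primary", primary_call), ("backup", backup_call)])` returns the backup's answer tagged with `"backup"`, which is also useful for logging how often fallbacks fire.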

What You Manage:

- Server provisioning and scaling.

- Database setup and maintenance.

- Monitoring and alerting config.

- Security patches and updates.

- Backup and disaster recovery.

- Incident response and on-call.

Real-World TCO Example:

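For a mid-sized team running LiteLLM Gateway in production on AWS with moderate traffic (1-5M requests/month), typical monthly costs combine the line items described above: core infrastructure, the observability stack, a managed database, and DevOps labor.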

This doesn't include initial setup time (2-4 weeks) or incident response costs.
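Adding up the monthly line items quoted earlier in this article gives a rough range. This sketch treats them as additive, which is an assumption: in practice "infrastructure" may already include some database or monitoring spend.

```python
# Sums the monthly line items quoted earlier in this article (USD).
# Assumption: the items do not overlap, so they can simply be added.
LINE_ITEMS_USD: dict[str, tuple[int, int]] = {
    "core infrastructure": (200, 500),
    "observability stack": (200, 800),
    "managed database": (100, 400),
    "DevOps labor (20 h/month)": (1730, 1730),
}


def monthly_tco_range(items: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Return (low, high) monthly total cost of ownership."""
    low = sum(lo for lo, _ in items.values())
    high = sum(hi for _, hi in items.values())
    return low, high


if __name__ == "__main__":
    low, high = monthly_tco_range(LINE_ITEMS_USD)
    print(f"Estimated monthly TCO: ${low:,} to ${high:,}")
```

Under that assumption the total lands at roughly $2,230 to $3,430 per month, in line with the $2,000-$3,500 range cited in the conclusion.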

Enterprise Basic ($250/month)

Price: $250/month | Deployment: Cloud or self-hosted

Best For: Teams who want enterprise features but still manage infrastructure

Enterprise Basic adds Prometheus metrics and custom callbacks, LLM guardrails for content filtering, JWT authorization for API security, SSO integration (Okta, Azure AD), and audit logs for compliance.

What You Still Manage:

- All infrastructure provisioning and scaling.

- Database management.

- Incident response and on-call.

- Security patch deployment.

The $250/month fee covers software licensing and access to LiteLLM gateway features, but you still handle all operational aspects. Total TCO is $250 + infrastructure costs ($300-$700) + DevOps time ($1,730) = approximately $2,280-$2,680/month.

Enterprise Premium ($30,000/year)

Price: $30,000 annually ($2,500/month) | Deployment: Cloud or self-hosted

Best For: Large organizations with substantial token usage who need advanced compliance features and priority support

Enterprise Premium includes all Enterprise Basic features plus priority support with faster response times, dedicated account management, custom feature development, and assistance with compliance certifications (SOC 2, HIPAA).

What You Still Manage:

- Infrastructure provisioning and scaling.

- Day-to-day operational maintenance.

- Incident response (though with priority support).

Total TCO is $2,500 + infrastructure costs ($300-$700) + reduced DevOps time (10-15 hrs, approximately $865-$1,300) = approximately $3,665-$4,500/month.
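The Enterprise Basic and Premium totals follow the same formula: license fee plus infrastructure plus DevOps hours at a loaded rate. A sketch reproducing both, where license fees, infrastructure ranges, and hours come from the text and the $86.50 hourly rate is an assumption implied by "$1,730 for 20 hours":

```python
# Sketch reproducing the Enterprise tier TCO arithmetic (USD per month).
# The $86.50 loaded hourly rate is an assumption implied by the article.
HOURLY_RATE_USD = 86.5


def tier_tco(license_monthly: float,
             infra_range: tuple[float, float],
             devops_hours_range: tuple[float, float],
             hourly_rate: float = HOURLY_RATE_USD) -> tuple[int, int]:
    """Return the (low, high) monthly TCO rounded to whole dollars."""
    low = license_monthly + infra_range[0] + devops_hours_range[0] * hourly_rate
    high = license_monthly + infra_range[1] + devops_hours_range[1] * hourly_rate
    return round(low), round(high)


basic = tier_tco(250, (300, 700), (20, 20))            # (2280, 2680)
premium = tier_tco(30_000 / 12, (300, 700), (10, 15))  # (3665, 4498)
```

The Premium upper bound comes out at $4,498 rather than $4,500 only because the text rounds 15 hours of labor ($1,297.50) up to $1,300.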

LiteLLM Pricing vs. Competitors (2026)

Here's how LiteLLM pricing compares to managed AI gateway alternatives across pricing models and operational burden:

Core Philosophical Differences

Cost Comparison at Different Scales

Key Insight: LiteLLM's TCO remains relatively flat because labor costs dominate. At low volumes (<500K requests/month), LiteLLM is actually more expensive than managed alternatives once you account for DevOps time. LiteLLM only becomes cost-competitive at very high scales (>50M requests/month), where its $2,500-$3,500 monthly TCO is significantly less than enterprise pricing from managed vendors.

When LiteLLM AI Gateway Pricing Makes Sense

LiteLLM's self-hosted model is ideal for specific use cases where operational control justifies the DevOps burden:

1. You Have Strong In-House DevOps Expertise

If your team already runs complex infrastructure (Kubernetes, observability stacks, CI/CD pipelines) and has dedicated platform teams, the incremental cost of managing LiteLLM AI gateway is relatively low. Your DevOps team can integrate LiteLLM into existing infrastructure-as-code workflows without significant overhead.

Ideal Profile:

- ✅ Dedicated platform engineering team (3+ engineers)

- ✅ Existing Kubernetes clusters with spare capacity

- ✅ Mature observability stack (Prometheus, Grafana, ELK)

- ✅ Established on-call rotation for infrastructure incidents

2. You Need Complete Infrastructure Control

For teams with strict data residency requirements, air-gapped environments, or regulatory constraints that prohibit third-party SaaS vendors, self-hosting is often the only option. LiteLLM provides a production-ready proxy that you can deploy entirely within your controlled environment.

Use Cases:

- Government or defense contractors with FedRAMP requirements.

- Financial services with data residency mandates.

- Healthcare organizations under strict HIPAA interpretations.

- Companies operating in China, Russia, or other jurisdictions with data sovereignty laws.

3. You Are Building a Multi-Tenant Platform

If you're building an AI application platform that serves other businesses (B2B2C model), you may want to manage the gateway infrastructure yourself to:

- Customize billing and quota logic per customer

- Implement proprietary rate limiting algorithms

- Build white-label observability dashboards

- Integrate deeply with your existing platform architecture

Self-hosting LiteLLM gateway gives you complete control to modify the proxy code for your specific platform requirements.
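As an example of the custom quota logic a multi-tenant platform might add to a self-hosted proxy, here is a hypothetical per-tenant sliding-window requests-per-minute limiter. Tenant names and limits are illustrative, and this is not a LiteLLM API; a multi-replica deployment would keep these windows in a shared store such as Redis rather than in memory.

```python
# Hypothetical per-tenant sliding-window RPM limiter (not a LiteLLM API).
import time
from collections import defaultdict, deque
from typing import Callable

WINDOW_SECONDS = 60.0


class TenantRateLimiter:
    def __init__(self, rpm_limits: dict[str, int],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rpm_limits = rpm_limits      # per-tenant requests per minute
        self.clock = clock                # injectable for testing
        self._windows: dict[str, deque] = defaultdict(deque)

    def allow(self, tenant: str) -> bool:
        """Record and allow the request if the tenant is under its RPM limit."""
        now = self.clock()
        window = self._windows[tenant]
        # Evict timestamps that have slid out of the last 60 seconds.
        while window and now - window[0] >= WINDOW_SECONDS:
            window.popleft()
        # Unknown tenants get a limit of 0, so they are always rejected.
        if len(window) >= self.rpm_limits.get(tenant, 0):
            return False
        window.append(now)
        return True
```

A sliding window avoids the burst that fixed per-minute buckets allow at minute boundaries, at the cost of storing one timestamp per recent request.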

4. You Are Operating at Massive Scale (>50M Requests/Month)

At extremely high request volumes, the fixed costs of DevOps labor become a smaller percentage of total spend. A $3,500/month TCO for infrastructure and maintenance is attractive when managed vendor pricing reaches $20,000-$50,000/month at equivalent scale.

Breakeven Analysis:

- Below 5M requests/month: Managed solutions often cheaper when factoring in labor.

- 5M-20M requests/month: Cost-competitive depending on feature requirements.

- Above 50M requests/month: LiteLLM TCO becomes significantly lower than managed vendors.
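The breakeven logic can be made explicit: self-hosting wins once a managed gateway's usage-based bill exceeds LiteLLM's roughly flat TCO. A sketch, assuming managed pricing scales linearly per million requests; the $400-per-million figure is illustrative, derived from the "$20,000/month at 50M requests" mentioned above, and real vendor price sheets are usually tiered.

```python
# Breakeven volume where a flat self-hosted TCO equals linear managed pricing.
# Assumption: managed pricing is linear per million requests (illustrative).


def breakeven_requests(flat_monthly_tco: float, managed_price_per_million: float) -> float:
    """Monthly request volume above which self-hosting is cheaper."""
    return flat_monthly_tco / managed_price_per_million * 1_000_000


if __name__ == "__main__":
    price_per_million = 20_000 / 50  # $400 per million requests (assumed)
    for tco in (2_000, 3_500):
        volume = breakeven_requests(tco, price_per_million)
        print(f"TCO ${tco:,}/month breaks even at ~{volume / 1e6:.1f}M requests/month")
```

Under these assumptions, breakeven falls between 5M and roughly 9M requests/month, which is consistent with the "cost-competitive" 5M-20M band above; a cheaper managed price per million pushes the breakeven point higher.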

5. You Don't Need Enterprise Features

If your use case is straightforward (basic load balancing, simple fallback routing, minimal observability), the open source feature set may suffice. Teams that don't require semantic caching, prompt registries, advanced RBAC, or compliance certifications can avoid paying for enterprise features they won't use.

Why High-Scale Teams Look Beyond LiteLLM

Despite the $0 software license, many enterprises and high-growth startups choose managed AI gateways over LiteLLM for several reasons:

1. Time-to-Market Pressure

Deploying and configuring LiteLLM for production takes 2-4 weeks of engineering time. For startups racing to launch new AI features or enterprises with aggressive roadmaps, this setup time represents opportunity cost. Managed gateways like TrueFoundry or Portkey run on production-grade infrastructure and can be set up in minutes, not weeks.

Example Scenario: A fintech startup is launching an AI-powered financial advisor chatbot. Delaying launch by 3 weeks to set up LiteLLM infrastructure means lost revenue, competitive disadvantage, and missed investor milestones. The team opts for TrueFoundry's managed gateway to launch in 2 days instead of 3 weeks.

2. Engineering Focus on Core Product

Every hour your DevOps team spends managing LiteLLM infrastructure is an hour not spent building product features that differentiate your business. For most companies, the AI gateway is critical infrastructure but not a competitive advantage in itself.

Opportunity Cost Calculation:

- 20 hours/month managing LiteLLM × $150/hour loaded cost = $3,000/month in labor.

- Those same 20 hours could build 1-2 new product features per month.

- At a $10M ARR SaaS company, 2 extra features/month could drive 5-10% faster revenue growth.

3. Lack of SLA Guarantees

Community-maintained open source projects don't provide uptime SLAs or legally binding support commitments. If a critical bug in LiteLLM causes your production AI application to fail, you're dependent on GitHub issues and community response times.

Risk Scenario: Your AI customer support chatbot (serving 100K users daily) goes down due to a LiteLLM proxy bug. Without vendor SLA commitments, you have no recourse for damages, no guaranteed fix timeline, and no dedicated support engineer to investigate. Your reputation and customer trust suffer.

Managed vendors typically provide uptime SLAs (commonly 99.9%) backed by service credits or other financial penalties if they miss those commitments.

4. Missing Enterprise Features for Agentic AI

LiteLLM focuses on basic proxy functionality (unified API, load balancing, rate limiting). It lacks advanced capabilities that modern AI applications need:

- Model Context Protocol (MCP): LiteLLM doesn't support MCP for agentic AI workflows where models interact with external tools and APIs.

- Prompt Registry: No centralized repository for versioning, testing, and deploying prompts across teams.

- Semantic Caching: No intelligent caching that recognizes semantically similar queries to reduce LLM costs.

- Advanced Observability: DIY observability requires significant additional tooling and configuration.

For teams building sophisticated agentic AI applications, these missing features force additional engineering work or push teams toward managed platforms.
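To illustrate what semantic caching means in practice: instead of requiring an exact prompt match, the cache returns a stored response when a new prompt is close enough to a previous one in embedding space. In this minimal sketch, the word-count "embedding" and the 0.8 cosine threshold are toy assumptions; production systems use a real embedding model and a vector store.

```python
# Minimal semantic-cache sketch. The bag-of-words "embedding" is a toy
# stand-in for a real embedding model; the 0.8 threshold is arbitrary.
import math
import re
from collections import Counter
from typing import Optional


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self._entries: list[tuple[Counter, str]] = []

    def get(self, prompt: str) -> Optional[str]:
        """Return the most similar cached response if it clears the threshold."""
        query = embed(prompt)
        best_score, best_response = 0.0, None
        for vector, response in self._entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, prompt: str, response: str) -> None:
        self._entries.append((embed(prompt), response))
```

After `put("What is the capital of France?", "Paris")`, a rephrased query such as "What's the capital of France?" scores about 0.83 and is served from cache without an LLM call, while an unrelated question returns `None`. The threshold trades cost savings against the risk of returning a cached answer to a question that only looks similar.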

5. Compliance and Audit Friction

During SOC 2, ISO 27001, or HIPAA audits, self-hosted infrastructure creates documentation overhead. You must demonstrate:

- Security patch processes and response times

- Vulnerability management procedures

- Access control implementation

- Audit logging completeness

- Disaster recovery testing

Managed vendors provide pre-certified infrastructure and audit support, reducing compliance burden significantly.

How TrueFoundry Provides a Production-Grade Managed Alternative

TrueFoundry offers a fully managed AI gateway that eliminates LiteLLM's operational burden while providing enterprise-grade features for agentic AI applications.

Key Advantages Over Self-Hosted LiteLLM

1. Zero Infrastructure Management

TrueFoundry handles all server provisioning, scaling, monitoring, security patches, and incident response. Your team deploys AI applications in minutes without touching Kubernetes, databases, or Docker containers.

2. Built for Agentic AI with MCP

TrueFoundry natively supports Model Context Protocol (MCP), enabling sophisticated agentic workflows where AI models interact with external tools, databases, and APIs. This is critical for modern AI applications that go beyond simple chat interfaces.
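Under the hood, MCP is built on JSON-RPC 2.0: when a model decides to use a tool, the client sends a `tools/call` request naming the tool and its arguments, and the gateway handles authentication, routing, and logging around that exchange. A minimal sketch of the request message, where the tool name and arguments are hypothetical:

```python
# Sketch of the JSON-RPC 2.0 message an MCP client sends to invoke a tool.
# The tool name and arguments used below are hypothetical.
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC request ids must be unique per session


def mcp_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


request = mcp_tool_call("get_account_balance", {"account_id": "acct-123"})
```

The tool's result comes back as a JSON-RPC response carrying the same `id`, which the gateway can log and feed back to the model.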

3. Better Cost Structure for Growth

While LiteLLM's TCO remains flat at $2,000-$3,500/month regardless of usage, TrueFoundry offers:

- Free tier: 50,000 requests/month (10x Portkey's free tier logs)

- Pro tier: $499/month for up to 1M requests with all enterprise features included

- Predictable scaling: No surprise DevOps labor costs as traffic grows

4. Enterprise Governance from Day One

Unlike LiteLLM, which requires Enterprise Premium ($30K/year) for compliance features, TrueFoundry Pro ($499/month) includes:

- Granular RBAC with team-based access controls

- Complete audit logging for compliance requirements

- Guardrails and content filtering

- SOC 2 Type II certified infrastructure

- 24/7 dedicated support with <4 hour response times

5. VPC and On-Premises Deployment

For enterprises with data residency requirements, TrueFoundry offers VPC and on-premises deployment at Enterprise tier (similar to Portkey), but without requiring you to manage the underlying infrastructure. You get the control benefits of self-hosting without the operational burden.

When TrueFoundry Wins Over LiteLLM

Scenario 1: Fast-Growing AI Startup

A Series A startup building an AI coding assistant needs to launch quickly, scale unpredictably, and focus engineering resources on product differentiation rather than infrastructure management. TrueFoundry's managed platform lets them go from zero to production in 2 days with built-in observability, guardrails, and MCP support for agentic workflows.

Scenario 2: Enterprise with Compliance Requirements

A healthcare company building AI-powered clinical decision support needs HIPAA compliance, audit logs, and guaranteed uptime SLAs. Self-hosting LiteLLM creates significant audit overhead and support risk. TrueFoundry provides pre-certified infrastructure with BAAs (Business Associate Agreements) and dedicated compliance support.

Scenario 3: Multi-Model Agentic Application

A fintech company is building an AI financial advisor that uses multiple models (GPT-4 for conversation, Claude for analysis, Gemini for multimodal, and open source models for specialized tasks) and needs to orchestrate tool calls, maintain conversation context, and implement semantic caching. LiteLLM provides basic load balancing but lacks MCP support and semantic caching. TrueFoundry's purpose-built agentic AI platform handles the complexity natively.

Conclusion

LiteLLM pricing and its "free and open source" promise are compelling, but the reality is more nuanced. While the software license costs $0, total cost of ownership (infrastructure, labor, monitoring, support) typically ranges from $2,000-$3,500/month for production deployments. This makes LiteLLM more expensive than managed alternatives at low-to-medium request volumes (<5M requests/month).

LiteLLM makes sense for teams with strong DevOps expertise who need complete infrastructure control for data residency, air-gapped environments, or highly customized platform requirements. It can also be cost-effective at massive scale (>50M requests/month) where fixed DevOps costs become a smaller percentage of total spend.

However, for most teams evaluating AI gateways in 2026, the operational burden of self-hosting LiteLLM outweighs the licensing cost savings. Key disadvantages include:

- 2-4 weeks of setup time delaying time-to-market

- Ongoing DevOps labor (10-20 hours/month) diverting engineering focus from product development

- No SLA guarantees or dedicated support for production incidents

- Missing enterprise features like MCP for agentic AI, semantic caching, and prompt registries

- Compliance overhead for SOC 2, HIPAA, or ISO 27001 audits

TrueFoundry provides a managed alternative that eliminates operational burden while offering superior capabilities for modern AI applications. With native MCP support for agentic workflows, semantic caching, comprehensive observability, and enterprise governance features from Pro tier ($499/month), TrueFoundry delivers better value for teams focused on building AI products rather than managing infrastructure.

If your team has dedicated platform engineers, operates in strictly regulated environments requiring self-hosting, or runs traffic exceeding 50 million requests monthly, LiteLLM is worth evaluating. For everyone else, managed platforms like TrueFoundry offer faster deployment, lower TCO at typical scales, and enterprise capabilities that LiteLLM doesn't provide.

The right choice depends on your team's strengths. If infrastructure operations are a core competency and competitive advantage, self-host LiteLLM. If AI product development is your focus, choose a managed platform and invest engineering time in features that differentiate your business.

Frequently Asked Questions

Is LiteLLM really free if I self-host it?

The software license is free, but total cost of ownership includes infrastructure ($200-$500/month), DevOps labor ($1,500-$2,000/month), monitoring tools ($200-$800/month), and incident response costs. Real-world TCO for production deployments typically ranges from $2,000-$3,500/month, which is higher than managed alternatives at low-to-medium request volumes.

Can LiteLLM handle enterprise-scale production traffic?

Yes, LiteLLM can scale to handle high request volumes if you architect the infrastructure properly with load balancing, database replication, and horizontal scaling. However, you're responsible for all capacity planning, performance tuning, and incident response. Managed vendors handle this complexity for you.

Does LiteLLM support Model Context Protocol (MCP) for agentic AI?

No, LiteLLM does not currently support MCP natively. It focuses on proxying requests to LLM providers with basic routing and observability. For sophisticated agentic AI workflows, you need a platform like TrueFoundry with native MCP support.

How does LiteLLM's security compare to managed gateways?

LiteLLM's open source code is auditable, which is a security advantage for teams that can conduct thorough code reviews. However, you're responsible for all security operations: vulnerability patching, dependency updates, access controls, secrets management, and audit logging. Managed vendors provide SOC 2 certified infrastructure, dedicated security teams, and automated patch management, reducing your security operational burden significantly.

What happens if LiteLLM has a critical bug in production?

You rely on community response via GitHub issues. There's no guaranteed fix timeline, no dedicated support engineer, and no SLA commitment. For mission-critical applications, this support risk can be unacceptable. LiteLLM Enterprise Premium ($30K/year) provides priority support but still requires you to manage infrastructure. Managed vendors provide 24/7 support with guaranteed response times.

Can I migrate from LiteLLM to a managed gateway later?

Yes, but migration complexity depends on how deeply you've customized LiteLLM. If you're using standard features (unified API, basic routing), migration to TrueFoundry or Portkey is straightforward since they offer OpenAI-compatible APIs. If you've heavily modified LiteLLM's code or built custom integrations, migration requires more engineering effort. Starting with a managed platform reduces future migration risk.
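Because LiteLLM and the managed gateways discussed here expose OpenAI-compatible endpoints, a migration that uses only standard features is mostly a change of base URL and credential. A sketch using only the standard library; the URLs, keys, and model name are placeholders, and model identifiers may differ between gateways:

```python
# Sketch: an OpenAI-style chat request where only base_url and api_key
# change between gateways. URLs, keys, and model names are placeholders.
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-compatible chat completions request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


self_hosted = build_chat_request("http://localhost:4000/v1", "sk-litellm-example", "gpt-4o", "Hello")
managed = build_chat_request("https://gateway.example.com/v1", "sk-managed-example", "gpt-4o", "Hello")
```

Sending either request with `urllib.request.urlopen` (or pointing an OpenAI SDK client at the same base URL) is where the two paths converge; custom callbacks, virtual-key logic, or code changes made inside LiteLLM are what add migration effort.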

Built for Speed: ~10ms Latency, Even Under Load


- Handles 350+ RPS on just 1 vCPU — no tuning needed

- Production-ready with full enterprise support

TrueFoundry AI Gateway delivers ~3–4 ms latency, handles 350+ RPS on 1 vCPU, scales horizontally with ease, and is production-ready, while LiteLLM suffers from high latency, struggles beyond moderate RPS, lacks built-in scaling, and is best for light or prototype workloads.
