How TrueFoundry Integrates with AWS: The Architecture of a Control Plane

For cloud architects and DevOps engineers, the primary challenge in scaling Generative AI is often not the availability of compute resources, but the orchestration of those resources. AWS provides powerful primitives: Amazon EKS, Spot Instances, IAM roles, and Amazon Bedrock. Combining them into a cohesive internal developer platform, however, often involves complex configuration and ongoing maintenance.

TrueFoundry acts as that orchestration layer: an infrastructure overlay whose compute components run directly inside your AWS account. Below, we break down how the platform integrates with AWS, covering security boundaries, identity federation, networking, and compute optimization.

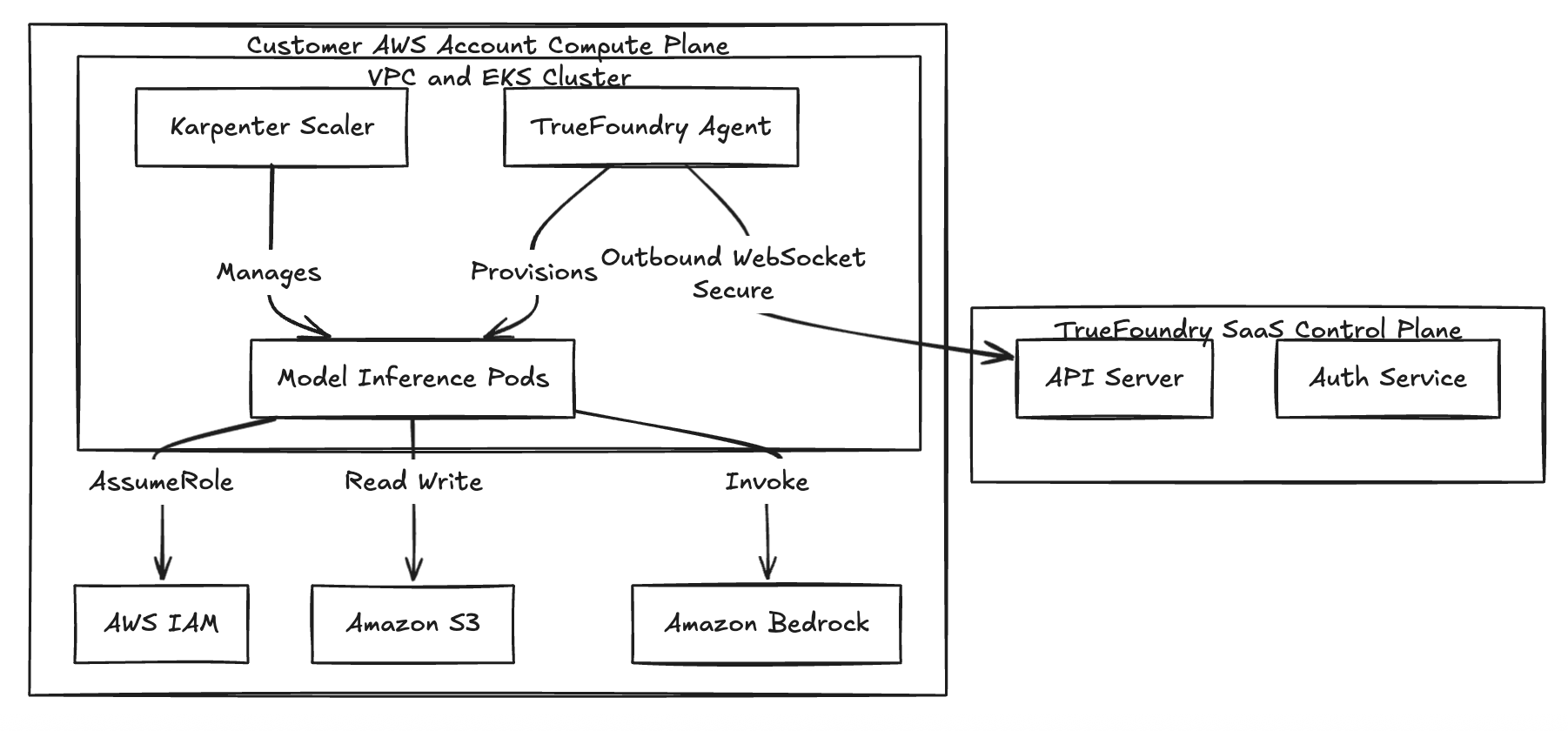

The Deployment Model: Control Plane vs. Compute Plane

We use a split-plane architecture to decouple management from execution.

- The Control Plane: This is the management layer (API and dashboard). It handles metadata, user authentication and role-based access control (RBAC), and job scheduling.

- The Compute Plane: This is where the actual work happens. It consists of agents and controllers running on your Amazon EKS cluster, handling model weights, customer data, and GPU processing.

Connectivity relies on a secure, outbound-only WebSocket or gRPC stream. The agent running in your cluster initiates the connection to the Control Plane to fetch new deployment manifests and push status updates (such as pod health). Because the connection is always initiated from inside the cluster, you don't need to open any inbound ports on your VPC security groups for the Control Plane, and the cluster can stay in private subnets.
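The pull model can be pictured as a reconcile step the in-cluster agent runs after each outbound fetch: compare the desired manifests with what is running, act locally, then report status upstream. This is a minimal sketch, not TrueFoundry's agent; `DesiredApp`, `plan_actions`, `status_report`, and the manifest shape are hypothetical, and the WebSocket/gRPC transport is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesiredApp:
    """Hypothetical manifest entry fetched from the Control Plane."""
    name: str
    image: str
    replicas: int


def plan_actions(desired: list[DesiredApp], running: dict[str, DesiredApp]) -> list[tuple[str, str]]:
    """Diff desired state (pulled over the outbound stream) against the cluster.

    Returns (action, app_name) pairs that the agent would apply locally.
    """
    actions: list[tuple[str, str]] = []
    desired_by_name = {app.name: app for app in desired}
    for name, app in desired_by_name.items():
        current = running.get(name)
        if current is None:
            actions.append(("create", name))
        elif current != app:
            actions.append(("update", name))
    for name in running:
        if name not in desired_by_name:
            actions.append(("delete", name))
    return actions


def status_report(running: dict[str, DesiredApp]) -> dict[str, int]:
    """Status payload the agent pushes back upstream (here: replica counts)."""
    return {name: app.replicas for name, app in sorted(running.items())}
```

Because the agent only ever dials out, the Control Plane never needs a network route into the VPC; it sees only what the agent chooses to report.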

Architecture Diagram: Split-Plane Security

Fig 1: The Split-Plane Architecture ensures data residency remains within the customer VPC.

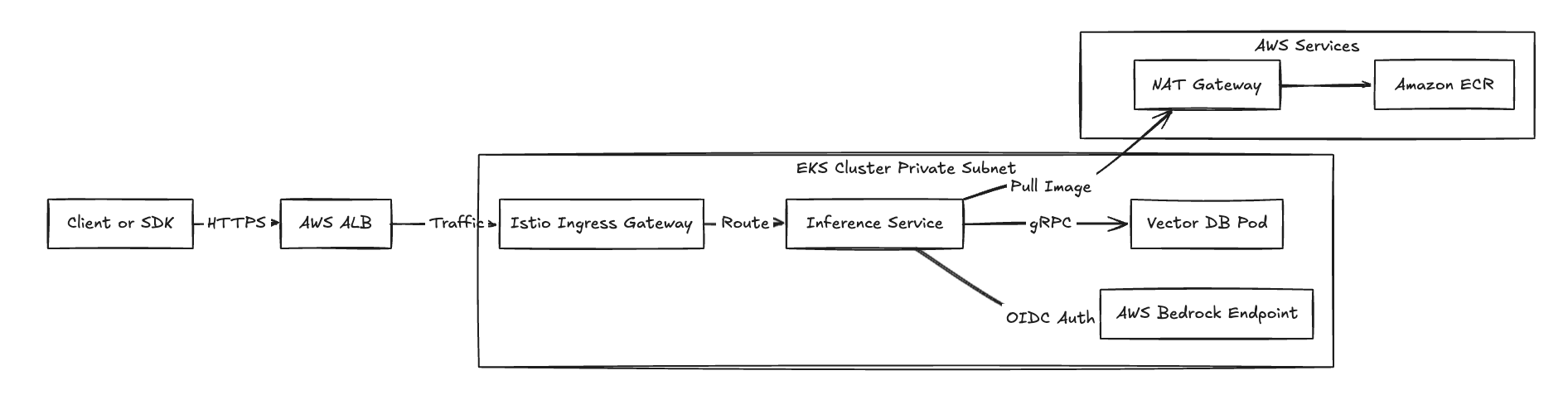

Networking Topology and Traffic Flow

When deploying TrueFoundry into an AWS environment, the networking configuration leverages the AWS VPC CNI plugin for Kubernetes. Compute resources operate within private subnets, ensuring that inference endpoints are not exposed to the public internet by default.

Ingress and Egress Patterns

- Inbound Traffic (Inference Requests): Application traffic enters the VPC through an AWS Application Load Balancer (ALB), managed by the AWS Load Balancer Controller. The ALB terminates TLS connections and forwards requests to the Istio Ingress Gateway running within the EKS cluster.

- Outbound Traffic (Registry & APIs): EKS worker nodes require outbound network access to pull container images from Amazon ECR and communicate with the Control Plane API. This traffic is routed through a NAT Gateway associated with the private subnets.
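A common layout behind this pattern places the ALB and NAT Gateway in small public subnets and the EKS nodes in larger private subnets, one of each per Availability Zone. The sketch below carves such a plan out of a VPC CIDR with Python's `ipaddress` module; the `10.0.0.0/16` range, the /24 and /19 sizes, and the AZ names are illustrative assumptions, not values taken from TrueFoundry's modules.

```python
import ipaddress


def plan_subnets(vpc_cidr: str, azs: list[str],
                 public_prefix: int = 24, private_prefix: int = 19) -> dict[str, dict[str, str]]:
    """Assign one private and one public subnet per AZ.

    Private subnets (EKS nodes and, via the VPC CNI, pod IPs) are taken from the
    start of the VPC range; the last private-sized block is reserved and sliced
    into small public subnets for the ALB and NAT Gateway.
    """
    vpc = ipaddress.ip_network(vpc_cidr)
    private_blocks = list(vpc.subnets(new_prefix=private_prefix))
    if len(private_blocks) < len(azs) + 1:
        raise ValueError("VPC too small for the requested layout")
    public_pool = private_blocks[-1].subnets(new_prefix=public_prefix)
    plan = {}
    for az, private in zip(azs, private_blocks):
        plan[az] = {"private": str(private), "public": str(next(public_pool))}
    return plan
```

In this layout the private subnets' default route points at the NAT Gateway (for ECR pulls and the Control Plane connection), while only the public subnets route to an Internet Gateway.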

Service Mesh Integration

TrueFoundry deploys Istio to manage east-west traffic between microservices. For example, in a RAG pipeline where an embedding service communicates with a vector database, Istio manages routing and enforces mutual TLS (mTLS). This ensures internal cluster traffic is encrypted in transit without requiring application-level changes.
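Namespace-wide strict mTLS in Istio is typically expressed with a `PeerAuthentication` resource. The helper below builds one as a Python dict and serializes it to JSON, which `kubectl apply -f` accepts as readily as YAML. The namespace `rag-pipeline` is a hypothetical example, and the `security.istio.io/v1beta1` API version is an assumption to match against the installed Istio release (recent releases also serve `security.istio.io/v1`).

```python
import json


def strict_mtls_policy(namespace: str) -> dict:
    """PeerAuthentication that makes every sidecar in `namespace` reject plaintext."""
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        # A policy named "default" applies to all workloads in its namespace.
        "metadata": {"name": "default", "namespace": namespace},
        # STRICT: sidecars accept only mutual-TLS connections.
        "spec": {"mtls": {"mode": "STRICT"}},
    }


if __name__ == "__main__":
    print(json.dumps(strict_mtls_policy("rag-pipeline"), indent=2))
```

The embedding service and vector database need no code changes: their sidecar proxies perform the TLS handshake and certificate rotation.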

Fig 2: Network traffic flow demonstrating ingress for inference and egress for dependencies.

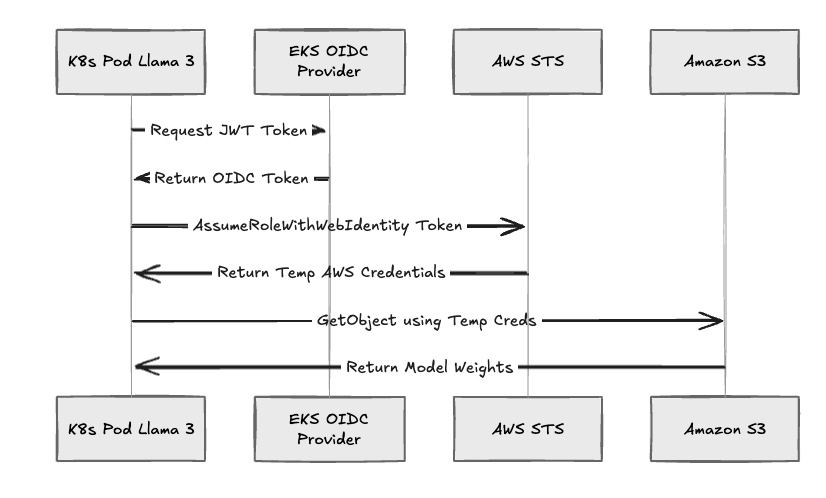

Identity Federation and Security

A primary security objective in enterprise AWS environments is the removal of static credentials. TrueFoundry addresses this by implementing IAM Roles for Service Accounts (IRSA).

The Authentication Flow

When a user deploys a workload, such as a fine-tuning job or an inference service, the platform executes the following workflow:

- Service Account Creation: TrueFoundry creates a Kubernetes Service Account in the workload namespace.

- Role Association: The Service Account is annotated with the ARN of a pre-provisioned AWS IAM Role.

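- Token Projection: EKS mounts a signed service account token (a JSON Web Token, or JWT) into the pod. Because the cluster's OIDC issuer is registered as an IAM identity provider, AWS can verify tokens it signs.

- Credential Issuance: The AWS SDK in the pod presents this token to AWS STS (Security Token Service) through AssumeRoleWithWebIdentity and receives temporary AWS credentials, which it refreshes before they expire.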
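The two objects this flow depends on can be sketched as plain data: the annotated Service Account, and the trust policy on the IAM role that lets STS accept tokens from the cluster's OIDC issuer. The `eks.amazonaws.com/role-arn` annotation and the `sts:AssumeRoleWithWebIdentity` trust-policy shape are the standard IRSA conventions; the account ID, issuer ID, namespace, and names in the example are placeholders.

```python
def service_account(name: str, namespace: str, role_arn: str) -> dict:
    """Kubernetes ServiceAccount annotated for IRSA."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": name,
            "namespace": namespace,
            # The EKS pod identity webhook reads this annotation and injects
            # AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE into pods using this SA.
            "annotations": {"eks.amazonaws.com/role-arn": role_arn},
        },
    }


def irsa_trust_policy(account_id: str, oidc_issuer: str, namespace: str, sa_name: str) -> dict:
    """IAM trust policy that lets only one service account assume the role.

    `oidc_issuer` is the cluster issuer URL without "https://",
    e.g. "oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE".
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_issuer}"},
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # Tokens for any other namespace/service account are rejected.
                        f"{oidc_issuer}:sub": f"system:serviceaccount:{namespace}:{sa_name}",
                        f"{oidc_issuer}:aud": "sts.amazonaws.com",
                    }
                },
            }
        ],
    }
```

The workload's pod spec then sets `serviceAccountName` to this Service Account; nothing else in the manifest refers to AWS credentials.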

This mechanism ensures application code uses standard AWS SDKs (e.g., boto3) without hardcoded secrets. If a pod is compromised, the potential impact is limited to the specific permissions granted to that IAM role, and credentials automatically expire.
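Inside the pod, application code needs no credential handling: the default credential chain in the AWS SDKs, including boto3, recognizes the `AWS_ROLE_ARN` and `AWS_WEB_IDENTITY_TOKEN_FILE` variables that EKS injects, so a plain `boto3.client("s3")` works as-is. The stdlib-only helper below is a hypothetical diagnostic, not part of any SDK; it checks that IRSA is wired up for a pod and flags static keys, which boto3's chain checks before web identity and which would therefore bypass IRSA.

```python
import os
from typing import Mapping, Optional


def irsa_config(env: Mapping[str, str] = os.environ) -> Optional[dict]:
    """Return the IRSA settings the SDK would pick up, or None if IRSA is absent."""
    role_arn = env.get("AWS_ROLE_ARN")
    token_file = env.get("AWS_WEB_IDENTITY_TOKEN_FILE")
    if not role_arn or not token_file:
        return None
    return {
        "role_arn": role_arn,
        "token_file": token_file,
        # Environment keys come earlier in boto3's default chain than web
        # identity, so their presence means IRSA credentials would not be used.
        "static_keys_override": "AWS_ACCESS_KEY_ID" in env,
    }
```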

Fig 3: The IRSA authentication flow used by TrueFoundry workloads.

Compute Engine: EKS, Karpenter, and Spot Optimization

Running Large Language Models (LLMs) on On-Demand GPU instances is expensive. TrueFoundry orchestrates Karpenter, the open-source Kubernetes node provisioning system, to optimize compute utilization.

Spot Instance Orchestration

TrueFoundry provides specialized logic for managing EC2 Spot Instances:

- Capacity Provisioning: When a deployment requests resources (e.g., 24 GB of GPU memory), the platform directs Karpenter to provision the most cost-effective instance type that meets the constraints. Depending on current availability in the AWS region, this might be a g5.2xlarge, g5.4xlarge, or g6.2xlarge, each with a single 24 GB GPU.

- Interruption Handling: The system monitors for the EC2 Spot Instance interruption notice, which AWS issues two minutes before reclaiming the instance.

- Proactive Replacement: When the AWS Node Termination Handler detects an interruption notice, the platform cordons the affected node, drains its pods, and triggers Karpenter to provision a replacement immediately.
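The capacity-provisioning step can be pictured as filtering instance types by GPU memory and taking the cheapest available offer. This is a deliberately simplified sketch: Karpenter's real Spot selection delegates to EC2 Fleet's price-capacity-optimized strategy, which weighs interruption risk as well as price, and the `Offer` type and any prices fed to it are hypothetical inputs rather than AWS data. The GPU memory figures are real: g5.2xlarge and g5.4xlarge each carry one 24 GB A10G, while p3.2xlarge has one 16 GB V100.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    """Hypothetical snapshot of one purchasable instance type."""
    instance_type: str
    gpu_memory_gb: int
    hourly_price: float
    available: bool = True


def pick_instance(offers: list[Offer], min_gpu_memory_gb: int) -> Offer:
    """Cheapest available offer whose GPU memory satisfies the request."""
    eligible = [o for o in offers if o.available and o.gpu_memory_gb >= min_gpu_memory_gb]
    if not eligible:
        raise LookupError(f"no capacity with >= {min_gpu_memory_gb} GB GPU memory")
    return min(eligible, key=lambda o: o.hourly_price)
```

Note that a cheaper p3.2xlarge offer is never chosen for a 24 GB request, because the memory constraint is applied before price.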

This orchestration enables reliable use of Spot Instances for production inference, with savings of up to 70% compared to On-Demand pricing for interruption-tolerant workloads.
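On the node, the interruption notice described above surfaces through the instance metadata service at `/latest/meta-data/spot/instance-action`, which returns 404 until a notice exists and then a small JSON document such as `{"action": "terminate", "time": "2017-09-18T08:22:00Z"}`. The sketch below parses that document and returns the patch that cordons the Node (`spec.unschedulable: true`) plus the seconds left to drain. `plan_interruption` is a hypothetical name, and fetching the document (IMDSv2 requires a session token) and evicting pods are left out.

```python
import json
from datetime import datetime, timezone
from typing import Optional

INTERRUPTION_ACTIONS = {"terminate", "stop", "hibernate"}


def seconds_until_interruption(body: str, now: datetime) -> Optional[float]:
    """Parse a spot/instance-action document; None means no actionable notice."""
    notice = json.loads(body)
    if notice.get("action") not in INTERRUPTION_ACTIONS:
        return None
    # The deadline is a UTC timestamp such as "2017-09-18T08:22:00Z".
    deadline = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return (deadline - now).total_seconds()


def plan_interruption(body: str, now: datetime) -> Optional[tuple[dict, float]]:
    """Return (cordon patch for the Node object, seconds left to drain), or None."""
    remaining = seconds_until_interruption(body, now)
    if remaining is None:
        return None
    # Cordon first so the scheduler stops placing pods here; existing pods are
    # then evicted (and a replacement node requested) within the remaining window.
    cordon_patch = {"spec": {"unschedulable": True}}
    return cordon_patch, max(remaining, 0.0)
```

As an alternative to the Node Termination Handler, Karpenter can also consume interruption events natively from an SQS queue fed by EventBridge rules.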

The AI Gateway: Unifying Bedrock and SageMaker

For organizations using proprietary foundation models, TrueFoundry serves as a unified API for Amazon Bedrock and Amazon SageMaker.

Bedrock Integration Patterns

Rather than embedding direct AWS SDK calls within application code, developers route requests through the TrueFoundry Gateway. The Gateway performs:

- Authentication: Verifies the internal API key against the Control Plane registry.

- Telemetry: Logs input and output token counts for granular cost attribution and audit trails.

- Proxying: Signs requests using the Gateway's own IAM role and forwards them to the Bedrock InvokeModel endpoint.

This centralization allows administrators to manage access policies at the Gateway level without constantly modifying IAM policies or rotating AWS credentials.
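The Gateway's duties and the per-key access policy can be sketched as a single request handler. Everything here is hypothetical: `GatewayKey`, `UsageLog`, the in-memory registry, and the injected `invoke` callable, which stands in for a Bedrock InvokeModel call signed with the Gateway's own IAM role (for example via boto3's `bedrock-runtime` client). It illustrates the pattern, not TrueFoundry's implementation.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class GatewayKey:
    """Hypothetical registry entry for an internal API key."""
    team: str
    allowed_models: set[str]


@dataclass
class UsageLog:
    records: list[dict] = field(default_factory=list)


# invoke(model_id, prompt) -> (completion, input_tokens, output_tokens)
Invoke = Callable[[str, str], tuple[str, int, int]]


def handle_request(api_key: str, model_id: str, prompt: str,
                   registry: dict[str, GatewayKey], usage: UsageLog, invoke: Invoke) -> str:
    # Authentication: callers present an internal key, never AWS credentials.
    entry = registry.get(api_key)
    if entry is None:
        raise PermissionError("unknown API key")
    # Access policy is enforced here, so granting a model needs no IAM change.
    if model_id not in entry.allowed_models:
        raise PermissionError(f"{entry.team} may not call {model_id}")
    # Proxying: the upstream call runs under the Gateway's IAM role.
    completion, tokens_in, tokens_out = invoke(model_id, prompt)
    # Telemetry: attribute token usage to the calling team for cost reporting.
    usage.records.append({"team": entry.team, "model": model_id,
                          "input_tokens": tokens_in, "output_tokens": tokens_out})
    return completion
```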

SageMaker Integration Patterns

TrueFoundry supports deploying models to SageMaker endpoints. Alternatively, the platform can deploy models directly onto EC2 capacity in your EKS cluster, which lets organizations avoid the premium typically charged for fully managed inference endpoints while keeping a consistent deployment experience for developers.

Infrastructure as Code (IaC) Compatibility

TrueFoundry is designed to integrate with existing Infrastructure as Code workflows. The platform provides verified Terraform modules to provision the required underlying infrastructure:

- VPC & Networking: Configures private subnets, NAT gateways, and Security Groups according to AWS best practices.

- EKS Cluster: Provisions the Kubernetes control plane, managed node groups, and essential add-ons such as the VPC CNI and EBS CSI driver.

- Databases: Provisions Amazon RDS instances for metadata storage if the Control Plane is self-hosted.

As a result, the environment consists of standard AWS resources defined in the customer's own Terraform state, so it remains fully auditable.

Comparison: AWS Native vs. AWS + TrueFoundry

The following table outlines the operational differences between building a platform using raw AWS primitives versus using the TrueFoundry overlay.

Conclusion: Architectural Alignment

The integration of TrueFoundry with AWS aligns with the architectural pattern of utilizing the hyperscaler for infrastructure reliability while implementing a specialized control plane for application-specific management.

For organizations invested in AWS, this model provides a mechanism to modernize AI operations while maintaining existing data residency and security perimeters. The customer retains control over the VPC and security boundaries, utilizing TrueFoundry to streamline the complexity of the AI application lifecycle.

