Architecting LLMs on OpenShift: Solving SCCs and Hybrid Identity

Enterprise architects know the reality of deploying on Red Hat OpenShift: you do not simply kubectl apply standard manifests. Whether you run on-premises, on IBM Cloud Satellite, or on Azure Red Hat OpenShift (ARO), the platform's security primitives, specifically Security Context Constraints (SCCs), reject many upstream Kubernetes manifests as written.

TrueFoundry functions as an orchestration overlay. We decouple the developer experience from the infrastructure complexity, allowing you to deploy LLMs while adhering to the strict boundaries of the Red Hat ecosystem. This post details how we integrate with OpenShift's networking, IBM Cloud IAM, and watsonx.ai.

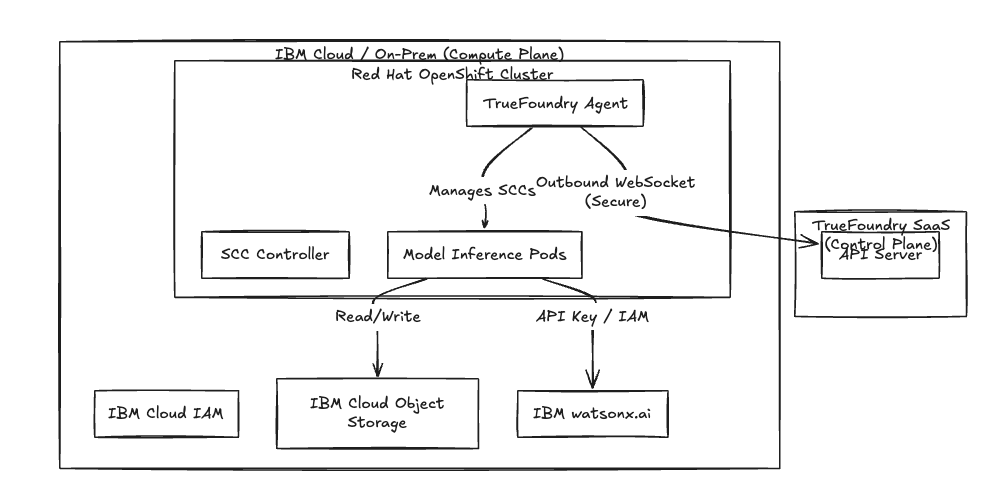

The Deployment Model: Split-Plane Architecture

We use a split-plane architecture to satisfy data residency requirements. You strictly control the Compute Plane; we manage the metadata in the Control Plane.

- Control Plane (SaaS or VPC): Handles identity management, model catalogs, and job scheduling.

- Compute Plane (Your Cluster): The TrueFoundry Agent runs directly on your Red Hat OpenShift Container Platform.

The Agent initiates an outbound-only WebSocket connection to the Control Plane, so you do not open inbound firewall ports. This suits the air-gapped or otherwise high-security environments common in the financial and healthcare sectors.

Fig 1: TrueFoundry operates within the local compute plane, respecting the secure perimeter.

Security: Automating SCCs and RBAC

The friction point in OpenShift is the enforcement of Security Context Constraints (SCCs). Unlike upstream Kubernetes, OpenShift prevents pods from running as root or accessing host paths by default.

Handling Restricted-v2

We architect the TrueFoundry agent to operate within the restricted-v2 SCC.

- UID Injection: We do not hardcode User IDs. The agent detects the namespace's annotated UID range and dynamically injects the correct runAsUser ID into the inference pod spec at runtime.

- Volume Abstraction: We avoid hostPath volumes entirely by using Persistent Volume Claims (PVCs) backed by IBM Cloud Block Storage, or by the vSphere CSI driver for on-premises setups.
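The UID injection step can be sketched in a few lines. This is a minimal illustration, not the agent's actual code: it assumes the range is read from the namespace annotation openshift.io/sa.scc.uid-range in its usual `<start>/<size>` form, and the function names `parse_uid_range` and `inject_run_as_user` are hypothetical.

```python
import copy


def parse_uid_range(annotation: str) -> tuple:
    """Parse a '<start>/<size>' UID range such as '1000650000/10000'.

    Returns (first_uid, block_size). Other range notations are not handled here.
    """
    start_text, sep, size_text = annotation.strip().partition("/")
    if not sep:
        raise ValueError(f"expected '<start>/<size>', got {annotation!r}")
    start, size = int(start_text), int(size_text)
    if start < 0 or size <= 0:
        raise ValueError(f"invalid UID range: {annotation!r}")
    return start, size


def inject_run_as_user(pod_spec: dict, uid_range_annotation: str) -> dict:
    """Return a copy of pod_spec whose containers run as the first UID in the range."""
    first_uid, _ = parse_uid_range(uid_range_annotation)
    patched = copy.deepcopy(pod_spec)  # leave the caller's spec untouched
    for container in patched.get("containers", []):
        ctx = container.setdefault("securityContext", {})
        ctx["runAsUser"] = first_uid
        ctx["runAsNonRoot"] = True
        ctx["allowPrivilegeEscalation"] = False
    return patched


if __name__ == "__main__":
    # Hypothetical pod spec and annotation value, for illustration only.
    spec = {"containers": [{"name": "inference", "image": "example/llm:latest"}]}
    print(inject_run_as_user(spec, "1000650000/10000"))
```

Picking the first UID in the range is a simplification; any UID inside the namespace's range would pass the restricted-v2 check.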

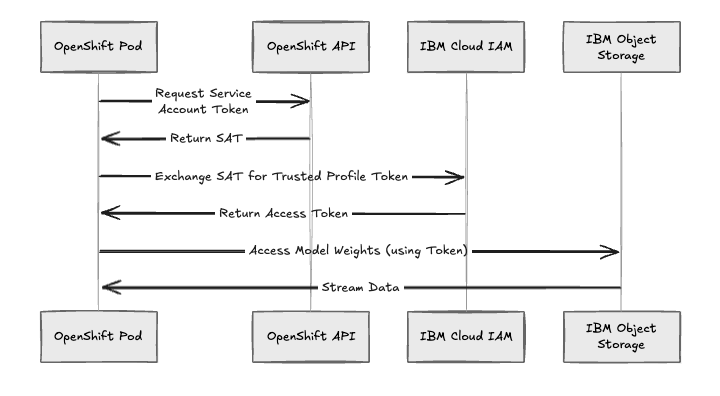

Identity Federation: IBM Cloud IAM

Hardcoded credentials in secrets are a security risk. For deployments on IBM Cloud, we implement identity federation using IBM Cloud Trusted Profiles.

This lets your OpenShift workloads assume a dynamic identity. The pod exchanges its projected service account token for a short-lived IBM Cloud IAM token, which grants temporary access to IBM Cloud Object Storage (COS) or IBM Key Protect.
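As a rough sketch of that exchange, the snippet below builds (but does not send) the token request. It assumes the public IAM token endpoint and the compute-resource grant type documented for Trusted Profiles; the helper name `build_token_exchange_request` and the sample values are hypothetical, and where the service account token is mounted depends on your pod spec.

```python
import urllib.parse
import urllib.request

# Assumed values: the public IAM endpoint and the compute-resource token grant type.
IAM_TOKEN_URL = "https://iam.cloud.ibm.com/identity/token"
CR_TOKEN_GRANT = "urn:ibm:params:oauth:grant-type:cr-token"


def build_token_exchange_request(sa_token: str, profile_id: str) -> urllib.request.Request:
    """Build the POST that trades a service account token for an IAM access token."""
    form = urllib.parse.urlencode(
        {
            "grant_type": CR_TOKEN_GRANT,
            "cr_token": sa_token,      # the pod's projected service account token
            "profile_id": profile_id,  # the Trusted Profile to assume
        }
    ).encode("ascii")
    return urllib.request.Request(
        IAM_TOKEN_URL,
        data=form,
        method="POST",
        headers={
            "Content-Type": "application/x-www-form-urlencoded",
            "Accept": "application/json",
        },
    )


if __name__ == "__main__":
    # Placeholder token and profile ID; a real pod would read the token from its mounted volume.
    req = build_token_exchange_request("<service-account-token>", "Profile-example")
    print(req.get_method(), req.full_url)
```

Sending the request with `urllib.request.urlopen(req)` would return a JSON body containing the access token; no static API key is stored in the cluster at any point.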

Fig 2: Authentication flow utilizing Trusted Profiles to eliminate static long-lived credentials.

Networking: Integrating with OpenShift Routes

Standard Kubernetes Ingress resources often require translation in Red Hat environments. TrueFoundry integrates directly with the OpenShift Ingress Controller (HAProxy).

- Ingress: When you deploy a model, we provision an OpenShift Route automatically, handling TLS termination at the edge.

- Air-Gapped Egress: For disconnected environments, we support image pulls from internal Red Hat Quay registries. You can pre-cache model weights in an internal S3-compatible store to eliminate runtime internet dependencies.
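To make the Ingress point concrete, here is a sketch of the kind of Route manifest that edge termination implies. The builder function `build_edge_route` and the sample names are hypothetical; the fields themselves follow the route.openshift.io/v1 schema.

```python
import json
from typing import Optional


def build_edge_route(
    name: str,
    namespace: str,
    service: str,
    target_port: str,
    host: Optional[str] = None,
) -> dict:
    """Build a Route that terminates TLS at the router and forwards to a Service."""
    route = {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "to": {"kind": "Service", "name": service, "weight": 100},
            "port": {"targetPort": target_port},
            "tls": {
                "termination": "edge",
                # Redirect plain HTTP requests to HTTPS.
                "insecureEdgeTerminationPolicy": "Redirect",
            },
        },
    }
    if host:
        # Without an explicit host, the router assigns one from the cluster's apps domain.
        route["spec"]["host"] = host
    return route


if __name__ == "__main__":
    # Hypothetical model service, for illustration only.
    print(json.dumps(build_edge_route("llama", "ml-serving", "llama-svc", "http"), indent=2))
```

With edge termination, traffic between the router and the pod is unencrypted inside the cluster; reencrypt or passthrough termination would be the alternatives if that is not acceptable.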

Compute: GPU Scheduling & watsonx.ai

We interface with the NVIDIA GPU Operator to handle hardware acceleration.

MIG Partitioning

For A100 or H100 clusters, we support NVIDIA Multi-Instance GPU (MIG). The TrueFoundry scheduler identifies the available MIG profiles and schedules each pod onto a matching partition, which raises GPU utilization without manual affinity configuration.
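One way to picture the profile matching is the sketch below. It is an illustration rather than the TrueFoundry scheduler: it assumes the GPU Operator exposes MIG slices as extended resources named like nvidia.com/mig-1g.5gb (the "mixed" MIG strategy), and `pick_mig_resource` is a hypothetical helper.

```python
import re
from typing import Dict, Optional

# Matches resource names such as "nvidia.com/mig-3g.20gb" (compute slices, memory in GB).
MIG_RESOURCE = re.compile(r"^nvidia\.com/mig-(\d+)g\.(\d+)gb$")


def pick_mig_resource(allocatable: Dict[str, int], min_memory_gb: int) -> Optional[str]:
    """Return the smallest available MIG resource with at least min_memory_gb of memory."""
    candidates = []
    for name, count in allocatable.items():
        match = MIG_RESOURCE.match(name)
        if match is None or count <= 0:
            continue  # not a MIG resource, or none left on this node
        compute_slices, memory_gb = int(match.group(1)), int(match.group(2))
        if memory_gb >= min_memory_gb:
            candidates.append((memory_gb, compute_slices, name))
    # Tuples sort by memory first, then compute slices, so min() is the tightest fit.
    return min(candidates)[2] if candidates else None


if __name__ == "__main__":
    # Hypothetical allocatable resources reported by one node.
    node = {"cpu": 32, "nvidia.com/mig-1g.5gb": 3, "nvidia.com/mig-3g.20gb": 1}
    resource = pick_mig_resource(node, min_memory_gb=8)
    if resource:
        print({"resources": {"limits": {resource: 1}}})
```

Requesting the chosen resource name in the container's limits is enough for the Kubernetes scheduler to place the pod on a node with a free slice of that profile.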

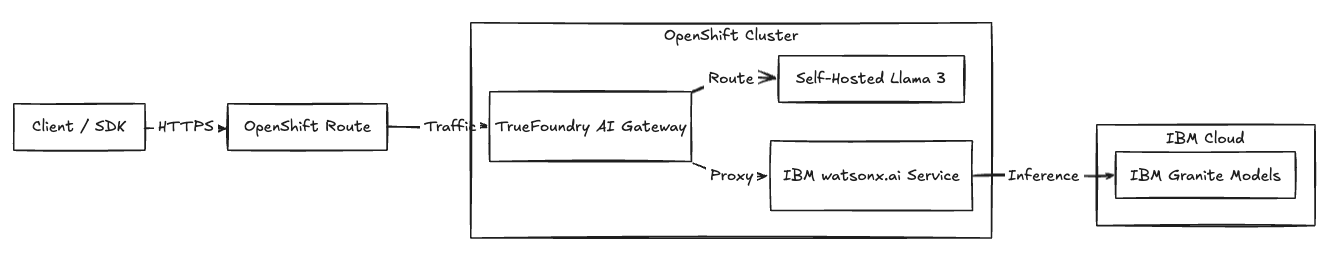

The Unified Gateway

For teams using IBM watsonx.ai, we provide a unified AI Gateway.

- Protocol Translation: Developers use a standard OpenAI-compatible schema. The Gateway handles the transformation to the watsonx.ai API.

- Unified Telemetry: We log requests to both self-hosted models (Llama 3, Mistral) and managed models served through watsonx.ai (such as Granite) in a single pane for cost and audit observability.
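The protocol translation can be pictured as a simple field mapping. The sketch below is an assumption-heavy illustration, not the Gateway's implementation: the target field names (model_id, project_id, messages) are modeled on the watsonx.ai chat API and should be checked against its current reference, and `to_watsonx_chat` is a hypothetical helper.

```python
# Sampling parameters assumed to carry over under the same names.
PASSTHROUGH_PARAMS = ("max_tokens", "temperature", "top_p")


def to_watsonx_chat(openai_request: dict, project_id: str) -> dict:
    """Map an OpenAI-style chat completion request to a watsonx.ai-style chat body."""
    body = {
        "model_id": openai_request["model"],  # OpenAI "model" becomes "model_id"
        "project_id": project_id,             # watsonx.ai scopes calls to a project
        "messages": list(openai_request["messages"]),
    }
    for key in PASSTHROUGH_PARAMS:
        if key in openai_request:
            body[key] = openai_request[key]
    return body


if __name__ == "__main__":
    # Hypothetical request; the model name and project ID are placeholders.
    request = {
        "model": "ibm/granite-example",
        "messages": [{"role": "user", "content": "Summarize this incident report."}],
        "temperature": 0.2,
    }
    print(to_watsonx_chat(request, project_id="<project-id>"))
```

Because callers only ever see the OpenAI-style schema, swapping a self-hosted model for a watsonx.ai one becomes a routing change rather than a client change.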

Fig 3: The Gateway unifies traffic between self-hosted pods and managed IBM services.

Operational Comparison

The table below outlines the specific operational shifts when overlaying TrueFoundry on native OpenShift.

Architectural Continuity

Integrating TrueFoundry with IBM Cloud and Red Hat OpenShift enables you to maintain the strict compliance posture of the Red Hat ecosystem while accelerating model deployment. You retain data residency on OpenShift—whether on-premise or in the IBM Cloud—and provide your engineering teams with a unified interface that abstracts the underlying infrastructure constraints.

