AI Gateway vs API Gateway: Know The Difference

Enterprise AI adoption has reached a tipping point where traditional infrastructure approaches no longer suffice. While API gateways have served enterprises well for managing REST APIs and microservices, the emergence of AI workloads, particularly large language models, introduces unique challenges that expose critical limitations. Token-based pricing, variable latency, complex prompt routing, and AI-specific security requirements demand specialized infrastructure. Organizations attempting to route AI traffic through conventional API gateways often encounter performance bottlenecks, cost overruns, and compliance gaps that threaten their AI initiatives. Understanding when to leverage AI gateways versus traditional API gateways has become essential for successful enterprise AI deployment.

What is an AI Gateway?

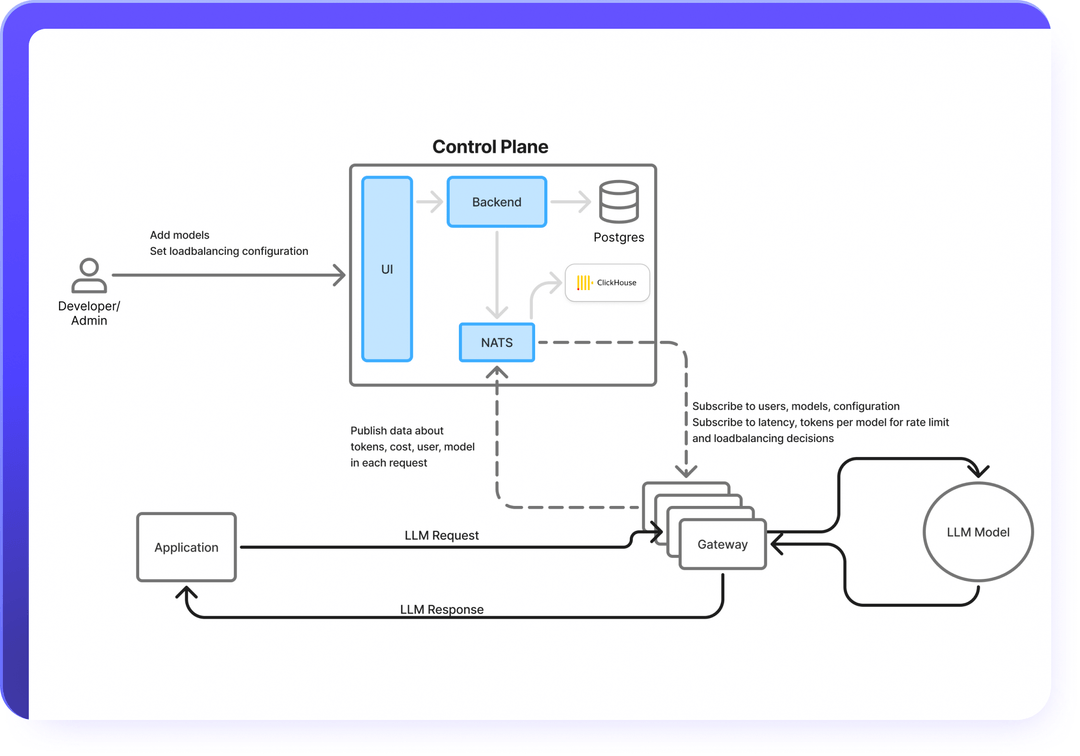

An AI gateway is a specialized infrastructure component designed specifically for managing artificial intelligence and machine learning workloads, particularly large language models and generative AI applications. Unlike traditional API gateways, AI gateways understand the unique characteristics of AI traffic, including token-based processing, variable response times, and model-specific routing requirements.

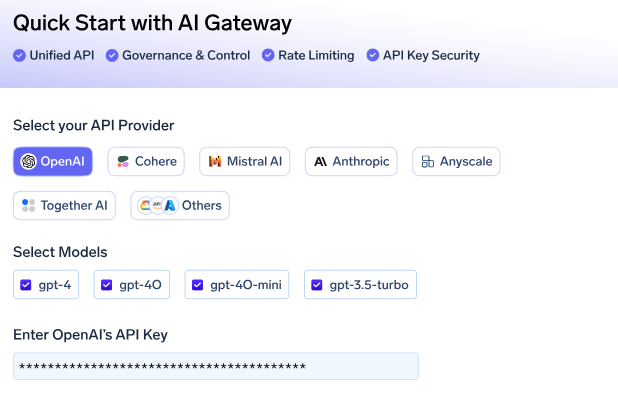

Core AI Gateway Functions address AI-specific challenges through intelligent model routing, token-level cost tracking, and prompt management. AI gateways provide unified access to multiple LLM providers through standardized interfaces, enabling seamless switching between OpenAI, Anthropic, Cohere, and self-hosted models. Advanced features include semantic caching for similar prompts, automatic failover between model providers, and real-time cost optimization based on token usage patterns.
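The automatic failover described above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: the `ProviderError` exception, the adapter functions, and the `PROVIDERS` list are hypothetical stand-ins for real provider clients hidden behind one standardized call signature.

```python
from typing import Callable, Sequence


class ProviderError(Exception):
    """Raised by a (hypothetical) provider adapter when a call fails."""


def complete_with_failover(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each provider in order and return (provider_name, response)
    from the first one that succeeds."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Remember the failure and fall through to the next provider.
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Stand-in adapters; real ones would wrap each provider's SDK or HTTP API.
def flaky_primary(prompt: str) -> str:
    raise ProviderError("rate limited")


def backup(prompt: str) -> str:
    return f"echo: {prompt}"


PROVIDERS = [("primary", flaky_primary), ("backup", backup)]
```

Because every adapter exposes the same signature, callers do not need to know which provider ultimately served the request.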

What is an API Gateway?

An API gateway serves as the centralized entry point for managing, securing, and routing traffic to backend services in distributed architectures. Acting as a reverse proxy, it sits between client applications and microservices, providing essential enterprise capabilities including authentication, authorization, rate limiting, load balancing, and request/response transformation.

Core API Gateway Functions include traffic management through intelligent routing based on URL paths, HTTP methods, and headers. The gateway handles cross-cutting concerns like SSL termination, request throttling, and caching to optimize performance. Authentication mechanisms verify client credentials, while authorization policies ensure proper access control. Request and response transformation capabilities enable protocol translation and data format conversion.
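For comparison, routing in a traditional API gateway depends only on request metadata. The sketch below assumes a hypothetical route table, service names, and an `X-Beta` header; none of them come from a specific product.

```python
from typing import Optional

# Hypothetical route table: (HTTP method, path prefix, backend service).
ROUTES = [
    ("GET", "/orders/", "orders-service"),
    ("POST", "/orders/", "orders-service"),
    ("GET", "/users/", "users-service"),
]


def route(method: str, path: str, headers: dict[str, str]) -> Optional[str]:
    """Return the backend for a request, or None if no route matches."""
    for route_method, prefix, service in ROUTES:
        if method == route_method and path.startswith(prefix):
            # Header-based routing: send opted-in clients to a beta backend.
            if headers.get("X-Beta") == "true":
                return service + "-beta"
            return service
    return None
```

Nothing in this decision looks at the request body, which is why token counts, prompt content, and model capabilities are invisible to this style of routing.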

API Gateway vs AI Gateway

Increasing Popularity of AI Gateways

The AI gateway market is experiencing explosive growth driven by the rapid enterprise adoption of generative AI technologies and the limitations of traditional infrastructure in handling AI-specific workloads.

Market Momentum and Investment reflect unprecedented enterprise demand. According to recent industry analysis, AI gateway adoption has grown 400% year-over-year, with enterprise AI spending reaching $50 billion globally. Major technology vendors, including Microsoft, Google, and AWS, have launched dedicated AI gateway services, while specialized providers like TrueFoundry, LangChain, and others have raised significant funding to address enterprise AI infrastructure needs.

Enterprise Pain Points Drive Adoption as organizations discover traditional API management tools inadequate for AI workloads. Early AI adopters frequently report cost overruns exceeding 300% of initial projections when routing AI traffic through conventional gateways. Performance issues arise from inefficient caching mechanisms that fail to recognize semantically similar prompts, while security gaps emerge from inadequate protection against prompt injection attacks and a lack of AI-specific compliance monitoring.

Regulatory and Compliance Pressures accelerate AI gateway adoption across regulated industries. The EU AI Act, emerging AI governance frameworks, and sector-specific regulations require detailed audit trails, bias monitoring, and content filtering capabilities that traditional API gateways cannot provide. Healthcare organizations implementing AI applications need HIPAA-compliant prompt handling, while financial services require specialized fraud detection and algorithmic transparency features.

Technological Maturity and Innovation drive solution sophistication. Modern AI gateways offer advanced capabilities, including semantic caching that reduces costs by up to 70%, intelligent routing that optimizes performance across multiple model providers, and real-time cost optimization that prevents budget overruns. These capabilities address enterprise requirements for predictable costs, reliable performance, and comprehensive governance that traditional infrastructure cannot deliver.
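To make the idea of semantic caching concrete, here is a toy sketch. It assumes a bag-of-words vector and cosine similarity as a stand-in for a real embedding model, so it only catches prompts that share most of their words; a production gateway would use learned embeddings and a vector index. The `SemanticCache` class and the 0.75 threshold are illustrative choices.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (an assumption, not a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.75):
        self.threshold = threshold
        self.entries: list[tuple[Counter, str]] = []

    def put(self, prompt: str, response: str) -> None:
        self.entries.append((embed(prompt), response))

    def get(self, prompt: str):
        """Return the cached response of the most similar prompt,
        or None when nothing is similar enough."""
        vec = embed(prompt)
        best = max(self.entries, key=lambda e: cosine(vec, e[0]), default=None)
        if best is not None and cosine(vec, best[0]) >= self.threshold:
            return best[1]
        return None
```

An exact-match cache would miss "What is your refund policy?" after storing "What is the refund policy?", while this lookup treats them as the same question and skips the model call.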

Industry Validation comes from Fortune 500 implementations demonstrating measurable ROI. Organizations report 40-60% cost reductions, improved AI application performance, and enhanced security posture after implementing specialized AI gateway solutions.

When to Use an AI Gateway?

Organizations should consider implementing AI gateways when their AI initiatives move beyond experimental phases into production environments where enterprise requirements become critical. The transition point typically occurs when organizations deploy multiple AI applications, integrate various model providers, or face increasing cost and compliance pressures that traditional infrastructure cannot address effectively.

Multi-model environments represent the most compelling use case for AI gateway adoption. When organizations utilize multiple LLM providers, combining OpenAI for general tasks, Anthropic for safety-critical applications, and self-hosted models for proprietary data, an AI gateway becomes essential for unified management. Traditional API gateways struggle with the complexity of routing decisions based on model capabilities, token limits, and cost optimization across diverse providers. AI gateways enable seamless switching between models based on real-time performance metrics, cost considerations, and specific use case requirements.
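A routing decision based on model capabilities, token limits, and cost might look like the following sketch. The catalog entries, capability tags, context sizes, and prices are all made-up values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    capabilities: frozenset
    max_tokens: int            # largest prompt the model accepts
    cost_per_1k_tokens: float  # illustrative price, not a real quote


# Hypothetical catalog mixing hosted and self-hosted models.
CATALOG = [
    ModelOption("general-large", frozenset({"chat", "code"}), 128_000, 0.010),
    ModelOption("general-small", frozenset({"chat"}), 16_000, 0.001),
    ModelOption("self-hosted", frozenset({"chat", "private-data"}), 8_000, 0.002),
]


def pick_model(required: set, prompt_tokens: int, catalog=CATALOG) -> ModelOption:
    """Pick the cheapest model that has every required capability
    and can fit the prompt."""
    eligible = [
        m for m in catalog
        if required <= m.capabilities and prompt_tokens <= m.max_tokens
    ]
    if not eligible:
        raise LookupError("no model satisfies the request")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)
```

A real gateway would also weigh live latency and error rates, but the shape of the decision is the same: filter by hard constraints first, then optimize for cost.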

Cost control concerns drive many organizations toward AI gateway solutions as AI usage scales across the enterprise. Organizations processing millions of tokens monthly often discover their AI costs spiraling beyond budget projections. AI gateways provide granular cost tracking, intelligent caching mechanisms that recognize semantically similar prompts, and automated routing to cost-effective models when appropriate. These capabilities can reduce AI infrastructure costs by 40-70% while maintaining application performance and user experience.
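Granular cost tracking comes down to multiplying token counts by per-model prices and attributing the result to whoever made the call. The sketch below assumes a hypothetical price table (USD per million input and output tokens) and a `CostTracker` class invented for this example.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1M tokens: (input, output).
PRICES = {
    "model-a": (0.50, 1.50),
    "model-b": (3.00, 15.00),
}


class CostTracker:
    def __init__(self, prices):
        self.prices = prices
        self.spend = defaultdict(float)  # team -> cumulative USD

    def record(self, team, model, input_tokens, output_tokens):
        """Compute the cost of one call and add it to the team's total."""
        in_price, out_price = self.prices[model]
        cost = (input_tokens * in_price + output_tokens * out_price) / 1_000_000
        self.spend[team] += cost
        return cost
```

With per-team totals available, budget alerts or automatic routing to a cheaper model become simple threshold checks on `spend`.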

Regulatory compliance requirements make AI gateways essential for organizations in regulated industries. Healthcare organizations handling patient data, financial institutions processing sensitive customer information, and government agencies managing classified materials need specialized audit trails, content filtering, and bias monitoring capabilities. Traditional API gateways lack the AI-specific governance features required for regulatory compliance, while AI gateways provide purpose-built tools for prompt sanitization, output monitoring, and comprehensive audit logging that satisfy regulatory requirements.

Do You Really Need an AI Gateway? Let's Evaluate

Not every organization requires an AI gateway immediately, and honest self-assessment prevents unnecessary infrastructure complexity and investment. The decision ultimately depends on your AI maturity, scale, and specific enterprise requirements rather than following technology trends or vendor recommendations.

Start with Simple Scenarios that may not warrant AI gateway investment. Organizations running single AI applications with one model provider, processing fewer than 100,000 tokens monthly, or operating in pilot phases can often manage effectively with direct API connections or lightweight API management tools. Small teams experimenting with ChatGPT integration, basic document summarization, or simple chatbot implementations typically don't need specialized gateway infrastructure. Similarly, organizations with minimal compliance requirements and straightforward cost structures may find traditional solutions adequate for their current needs.

Evaluate Scale and Complexity Indicators that signal AI gateway necessity. Key triggers include monthly token consumption exceeding one million, usage across multiple model providers, implementation of production-grade AI applications serving external customers, or requirements for detailed cost attribution across teams and projects. Technical complexity increases significantly when organizations need intelligent routing between models, semantic caching for cost optimization, or automated failover mechanisms for reliability. These scenarios typically emerge as AI initiatives mature from experimentation to business-critical operations.
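The triggers listed above can be turned into a quick self-assessment. This is only a sketch: the `AIUsageProfile` fields are hypothetical, and the one-million-token threshold is taken directly from the paragraph above rather than from any benchmark.

```python
from dataclasses import dataclass


@dataclass
class AIUsageProfile:
    monthly_tokens: int
    provider_count: int
    serves_external_customers: bool
    needs_cost_attribution: bool


def gateway_signals(profile: AIUsageProfile) -> list[str]:
    """Return the scale and complexity triggers that this profile hits."""
    signals = []
    if profile.monthly_tokens > 1_000_000:
        signals.append("monthly tokens exceed one million")
    if profile.provider_count > 1:
        signals.append("multiple model providers in use")
    if profile.serves_external_customers:
        signals.append("production AI serving external customers")
    if profile.needs_cost_attribution:
        signals.append("cost attribution needed across teams")
    return signals
```

An empty list suggests direct API connections are still adequate; several hits suggest the evaluation is worth taking seriously.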

Consider Compliance and Governance Requirements that often mandate specialized infrastructure. Organizations in healthcare, finance, government, or other regulated industries typically require AI-specific audit trails, content filtering, and bias monitoring capabilities that general-purpose API gateways cannot provide. Even seemingly simple AI applications become complex when they must satisfy HIPAA, SOC 2, or other regulatory frameworks. Additionally, organizations with strict data sovereignty requirements or those handling sensitive intellectual property often need the advanced security and governance features that specialized AI gateways provide.

Assess Long-term Strategy and Growth Projections to avoid costly infrastructure migrations later. Organizations planning a significant expansion of AI, anticipating regulatory compliance requirements, or building AI-powered products for external customers should consider adopting an AI gateway earlier in their journey to avoid technical debt and migration complexities.

TrueFoundry AI Gateway Solution

TrueFoundry AI Gateway delivers enterprise-grade AI traffic management through a purpose-built platform that addresses the unique challenges of production AI workloads. Processing over one million LLM calls daily for organizations including NVIDIA, CVS Health, and Siemens, the platform combines high-performance architecture with comprehensive AI-specific features.

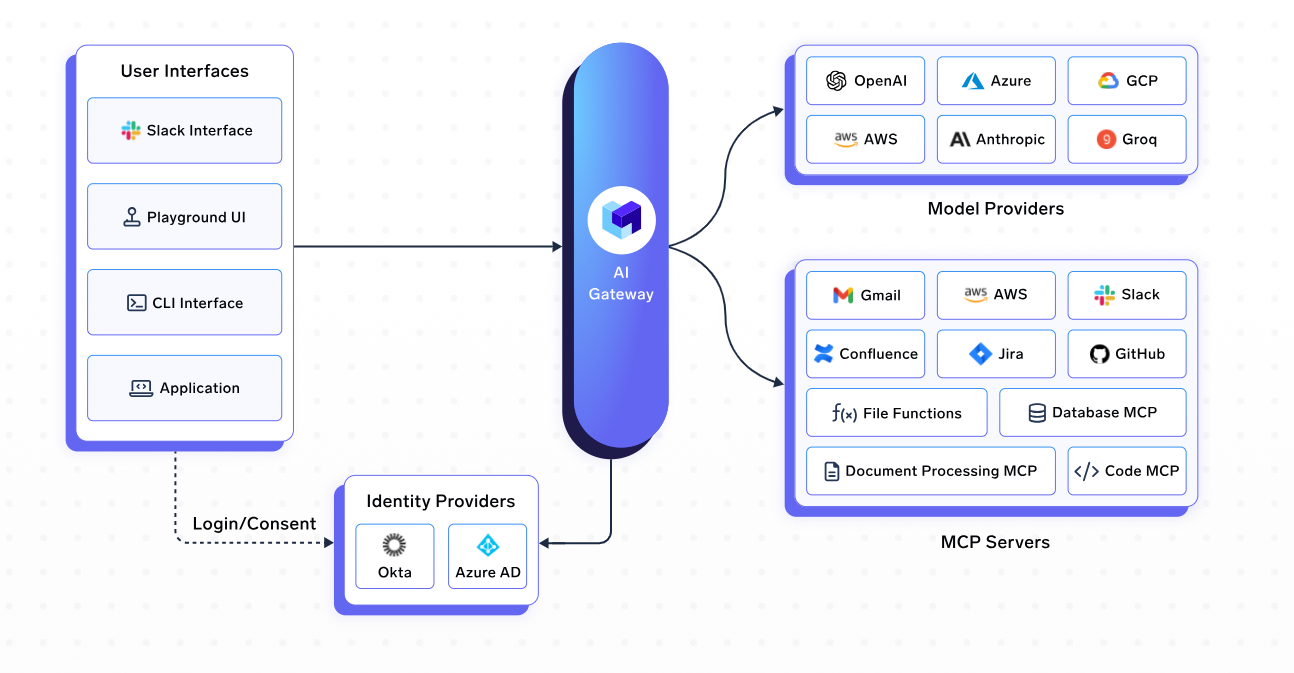

Unified Model Access provides seamless integration with 1000+ LLMs through a single OpenAI-compatible API interface. The gateway supports third-party providers, including OpenAI, Anthropic, Cohere, and cloud platforms, alongside self-hosted and fine-tuned models through one-click "Add to Gateway" functionality. This unified approach eliminates vendor lock-in while enabling organizations to leverage the best models for specific use cases without code changes.

Enterprise-Grade Performance delivers minimal latency overhead of less than 5ms through an architecture optimized for production workloads. Built on the Hono framework for ultra-fast request handling, the gateway scales from 250 RPS on 1 CPU/1GB RAM to thousands of RPS through horizontal scaling. Zero external calls on the request path and in-memory enforcement of all policies ensure sub-millisecond response times for authentication, authorization, and routing decisions.
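As a rough capacity-planning aid, the per-replica figure quoted above (250 RPS on 1 CPU/1GB RAM) can be turned into a replica count. The sketch assumes throughput scales linearly with replicas and that each replica should run below a chosen utilization; both the linearity and the 70% headroom value are assumptions, not published guidance.

```python
import math


def replicas_needed(
    target_rps: float,
    rps_per_replica: float = 250.0,  # figure quoted in the text above
    headroom: float = 0.7,           # assumed target utilization per replica
) -> int:
    """Estimate how many gateway replicas are needed for a target load,
    assuming linear horizontal scaling."""
    if target_rps <= 0:
        return 0
    return math.ceil(target_rps / (rps_per_replica * headroom))
```

For example, 2,000 RPS at 70% utilization works out to 2000 / 175 ≈ 11.4, so 12 replicas under these assumptions.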

Advanced Traffic Management capabilities include intelligent load balancing with weight-based and latency-based routing strategies. The gateway continuously monitors requests per minute, tokens per minute, and failure rates to maintain optimal model health. Automatic failover to backup models ensures continuous service availability, while semantic caching reduces costs by up to 70% by recognizing similar prompts rather than requiring exact matches.
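Weight-based routing combined with health monitoring can be sketched as follows. The `Target` fields, the 50% failure-rate cutoff, and the example targets are hypothetical; this is not TrueFoundry's implementation, only an illustration of the general technique.

```python
import random
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    weight: float        # share of traffic this target should receive
    failure_rate: float  # observed fraction of recent requests that failed


def choose_target(targets, max_failure_rate: float = 0.5, rng=random) -> Target:
    """Pick a target at random in proportion to its weight,
    skipping targets whose failure rate is above the cutoff."""
    healthy = [t for t in targets if t.failure_rate <= max_failure_rate]
    if not healthy:
        raise RuntimeError("no healthy targets available")
    return rng.choices(healthy, weights=[t.weight for t in healthy], k=1)[0]


TARGETS = [
    Target("primary", weight=0.8, failure_rate=0.01),
    Target("secondary", weight=0.2, failure_rate=0.02),
    Target("degraded", weight=1.0, failure_rate=0.90),
]
```

Dropping unhealthy targets before the weighted draw means failover happens implicitly: traffic is redistributed across the remaining targets in proportion to their weights.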

Comprehensive Security and Governance features provide enterprise-grade access control through RBAC integration with identity providers, including Okta and Azure AD. Fine-grained permissions management, centralized API key management, and comprehensive audit trails support regulatory compliance requirements. Built-in guardrails offer content filtering, bias detection, and prompt injection prevention specifically designed for AI applications.

Multimodal and Agentic AI Support enables next-generation AI workflows through native MCP (Model Context Protocol) Gateway integration for enterprise tool orchestration. The platform supports text, image, and audio inputs while providing dedicated infrastructure for AI agent workflows with enterprise-grade security and observability.

Conclusion

The choice between API gateways and AI gateways is a strategic infrastructure decision that affects the success of enterprise AI initiatives. Traditional API gateways excel at managing conventional web services, but AI workloads demand specialized capabilities that purpose-built AI gateways are designed to provide. Token-based pricing models, variable latency patterns, multimodal processing, and AI-specific security requirements create fundamental mismatches with traditional gateway architectures. Organizations that recognize these differences early and adopt an appropriate AI gateway can avoid the performance bottlenecks, cost overruns, and compliance gaps that affect AI initiatives built on unsuitable infrastructure.

FAQs

1. What is the main difference between an API Gateway and an AI Gateway?

An API Gateway manages traditional web traffic using fixed endpoints and request patterns, while an AI Gateway handles token-based, model-specific routing for LLMs. AI Gateways offer features like semantic caching, cost tracking, and prompt management, all of which are essential for running large-scale generative AI applications in production.

2. Do I need an AI Gateway if I’m only using OpenAI’s API?

Not necessarily. If your use is limited, you can interact directly with OpenAI. However, as your token usage grows or you integrate multiple models, an AI Gateway helps with cost optimization, provider switching, and governance, giving you better control, observability, and scalability.

3. How does an AI Gateway reduce costs?

AI Gateways reduce costs by tracking token usage, routing requests to cost-efficient models, and using semantic caching, which returns a cached response when a new prompt is semantically similar to an earlier one instead of calling the model again. This can lower token spend by up to 70%, especially in high-volume applications like chatbots, content generation, or customer support automation.

4. Can an AI Gateway help with compliance and data governance?

Yes. AI Gateways include AI-specific guardrails like prompt filtering, output moderation, audit logs, and role-based access control. These features help organizations meet compliance requirements such as HIPAA, GDPR, and SOC 2 when deploying LLMs in regulated industries like healthcare or finance.

5. What makes TrueFoundry’s AI Gateway unique?

TrueFoundry offers unified access to 1000+ LLMs with low latency overhead, advanced routing, semantic caching, and enterprise-grade observability. It supports multimodal input and integrates with identity providers and internal tools, which suits organizations scaling production AI workloads with cost, performance, and security in mind.

