Top 5 Envoy Proxy Alternatives

The modern software ecosystem increasingly relies on microservices and distributed architectures, where efficient communication, security, and observability are critical.

In this environment, service proxies act as the backbone of reliable infrastructure. Envoy Proxy has emerged as a popular choice, offering advanced features like load balancing, service discovery, traffic routing, and telemetry. Its robust architecture makes it a favorite for enterprises adopting cloud-native designs, Kubernetes, or service mesh frameworks.

However, as organizations scale, the limitations of a single proxy solution become evident. Teams may require more flexibility, deeper observability, better integration with multi-cloud environments, or simpler operational management.

Exploring alternatives to Envoy can reveal platforms that offer enhanced performance, enterprise-ready governance, or specialized support for modern microservices patterns.

What is Envoy Proxy?

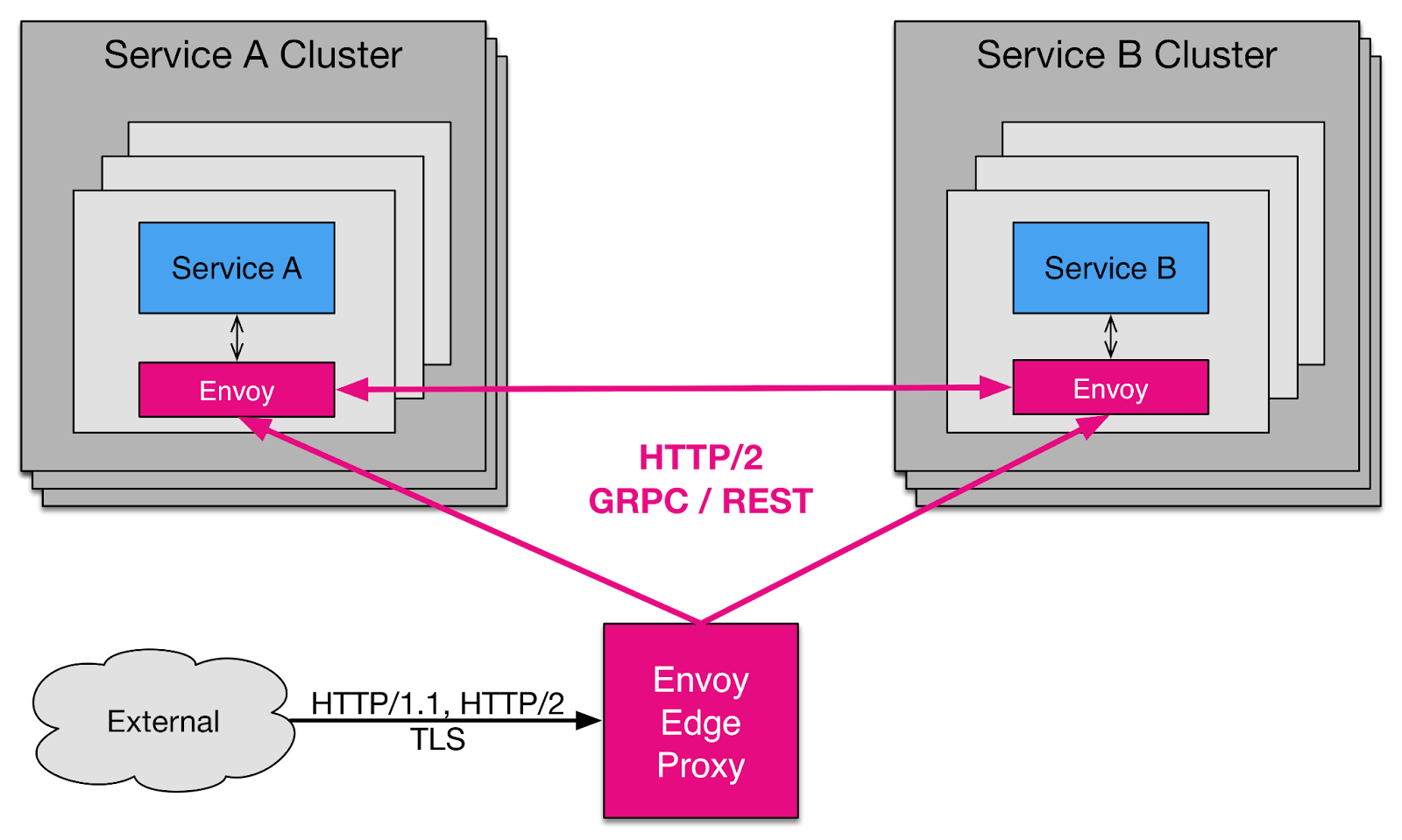

Envoy Proxy is a high-performance, open-source edge and service proxy designed for cloud-native applications and microservice architectures. Originally developed at Lyft, Envoy has become a cornerstone of modern infrastructure thanks to its flexibility, scalability, and rich feature set.

It operates as a communication bus and universal data plane for services, handling all inbound and outbound traffic, while providing observability, resilience, and security across distributed systems.

At its core, Envoy Proxy simplifies service-to-service communication. It decouples service clients from the complexities of networking, enabling developers to focus on application logic rather than building custom routing, load balancing, or monitoring systems. Envoy supports advanced features such as dynamic service discovery, health checking, HTTP/2 and gRPC support, circuit breaking, and rate limiting. These capabilities make it ideal for organizations adopting service mesh architectures, such as Istio or Consul, where Envoy often serves as the default sidecar proxy.

Key Features of Envoy Proxy

- Load Balancing and Traffic Routing: Envoy intelligently routes requests using sophisticated strategies such as least request, round-robin, and weighted balancing to ensure high availability and optimal performance.

- Observability and Metrics: It provides detailed telemetry, including request traces, latency histograms, and error rates, enabling real-time monitoring and debugging of microservices.

- Service Discovery: Envoy integrates with popular service registries, automatically updating routes when services are added, removed, or fail.

- Resilience Features: Circuit breakers, retries, timeouts, and rate limiting help protect services from failures and ensure smooth traffic flow under load.

- Protocol Support: Native support for HTTP/1.1, HTTP/2, gRPC, and TCP allows Envoy to handle modern workloads efficiently.

Envoy’s architecture makes it a versatile tool for building secure, reliable, and observable distributed systems. Its plug-in ecosystem, dynamic configuration, and performance optimizations have made it a standard component for enterprises deploying microservices at scale. By centralizing traffic management while providing fine-grained control, Envoy helps teams reduce operational complexity and improve system resilience.

How Does Envoy Proxy Work?

Envoy Proxy functions as a high-performance communication layer for modern microservices, sitting between services to handle all inbound and outbound traffic. It can operate as a sidecar proxy alongside each service, at the edge of a network, or as a standalone service gateway. By intercepting traffic, Envoy provides features such as load balancing, service discovery, retries, rate limiting, and observability without requiring changes to the application code.

Client Request Interception

When a client sends a request, Envoy receives it first. The proxy evaluates the request against configured rules for routing, retries, and rate limiting.

Dynamic Routing

Envoy uses service discovery to determine the available instances of the target service. Based on routing rules, it selects an endpoint using strategies like least request, round-robin, or weighted routing.
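The selection strategies named above can be sketched in a few lines of Python. This is an illustrative model only, not Envoy's implementation: the `Endpoint` class, its fields, and the function names are hypothetical. The least-request function uses a "sample two, keep the less loaded" approach, which is similar in spirit to how Envoy documents its least-request balancer for equally weighted hosts.

```python
import itertools
import random
from dataclasses import dataclass


@dataclass
class Endpoint:
    """Hypothetical upstream instance, as service discovery might report it."""
    address: str
    weight: int = 1
    active_requests: int = 0


def round_robin(endpoints):
    """Return an iterator that cycles through the endpoints in a fixed order."""
    return itertools.cycle(endpoints)


def weighted_choice(endpoints, rng=random):
    """Pick one endpoint with probability proportional to its weight."""
    weights = [e.weight for e in endpoints]
    return rng.choices(endpoints, weights=weights, k=1)[0]


def least_request(endpoints, rng=random, choices=2):
    """Sample a few endpoints and keep the one with the fewest in-flight requests."""
    sample = rng.sample(endpoints, k=min(choices, len(endpoints)))
    return min(sample, key=lambda e: e.active_requests)


endpoints = [
    Endpoint("10.0.0.1:8080", weight=1, active_requests=4),
    Endpoint("10.0.0.2:8080", weight=3, active_requests=0),
]
rotation = round_robin(endpoints)
first_three = [next(rotation).address for _ in range(3)]
```

Sampling a small subset instead of scanning every endpoint keeps selection cheap as the pool grows, at the cost of occasionally missing the globally least-loaded instance.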

Traffic Resilience

Before forwarding, Envoy applies circuit breakers, timeouts, and retry policies so that failures in upstream services do not cascade back through the system. If a request fails, Envoy can automatically retry it according to predefined rules.
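To make the interaction between retries and circuit breaking concrete, here is a minimal sketch of the general pattern. It is an assumption-laden illustration, not Envoy's mechanism: Envoy configures circuit breaking as limits on connections and requests per upstream cluster, whereas this example shows the classic open/closed breaker. The class names, thresholds, and `call_with_retries` helper are all hypothetical.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and calls are rejected immediately."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after  # seconds to wait before a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("upstream marked unhealthy")
            # Cool-down elapsed: allow one trial call; a single failure re-opens.
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success resets the failure count
        return result


def call_with_retries(breaker, func, attempts=3):
    """Retry failed calls up to `attempts` times, stopping once the breaker opens."""
    last_error = None
    for _ in range(attempts):
        try:
            return breaker.call(func)
        except CircuitOpenError:
            raise  # do not keep hammering an upstream that is marked unhealthy
        except Exception as exc:
            last_error = exc
    raise last_error
```

Routing retries through the breaker means a struggling upstream stops receiving retry traffic as soon as the failure threshold is reached, which is the property that prevents cascades.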

Observability and Metrics Collection

As traffic flows through Envoy, it records telemetry such as request counts, latency, error rates, and response codes. This data feeds into dashboards, tracing systems, and alerting mechanisms.
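As a rough picture of what such telemetry looks like in aggregate, the sketch below keeps response-code counters and a bucketed latency histogram in memory. The `RequestStats` class and its bucket bounds are hypothetical and chosen only for illustration; they do not reflect Envoy's stats format.

```python
import bisect
from collections import Counter


class RequestStats:
    """Hypothetical in-memory stats sink; bucket bounds are in milliseconds."""

    def __init__(self, bucket_bounds_ms=(5, 25, 100, 500)):
        self.bounds = list(bucket_bounds_ms)
        # One bucket per upper bound, plus an overflow bucket at the end.
        self.latency_buckets = [0] * (len(self.bounds) + 1)
        self.response_codes = Counter()
        self.total = 0

    def record(self, status_code, latency_ms):
        self.total += 1
        self.response_codes[status_code] += 1
        # bisect_left finds the first bucket whose upper bound is >= the latency.
        self.latency_buckets[bisect.bisect_left(self.bounds, latency_ms)] += 1

    def error_rate(self):
        """Share of requests that ended in a 5xx response."""
        if self.total == 0:
            return 0.0
        errors = sum(n for code, n in self.response_codes.items() if code >= 500)
        return errors / self.total


stats = RequestStats()
for code, latency in [(200, 3), (200, 25), (503, 120), (200, 900)]:
    stats.record(code, latency)
```

Fixed buckets trade precision for constant memory, which is why proxies and metrics systems commonly use histograms rather than storing every latency sample.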

Protocol Handling and Transformation

Envoy supports HTTP/1.1, HTTP/2, gRPC, and TCP. It can transform headers, inject metadata, or modify requests and responses dynamically.

Key Features

- Sidecar and Edge Deployment: Flexible deployment as a sidecar proxy for each service or at the network edge.

- Traffic Routing and Load Balancing: Supports advanced routing strategies for optimal resource utilization.

- Retries, Timeouts, and Circuit Breaking: Ensures service reliability under load or failure conditions.

- Observability and Tracing: Integrated support for distributed tracing and metrics collection.

- Protocol-Agnostic Support: Works with HTTP, gRPC, and TCP for diverse microservice workloads.

By operating as a transparent intermediary, Envoy enables developers to focus on business logic while providing enterprise-grade traffic control, resilience, and observability. Its dynamic configuration and extensibility make it suitable for highly scalable, distributed applications.

Why Explore Envoy Proxy Alternatives?

Envoy Proxy is a robust and widely adopted solution, but it is not a one-size-fits-all tool. Organizations often face challenges related to complexity, operational overhead, and scalability when deploying Envoy at enterprise scale.

Configuring and managing Envoy’s dynamic routing, policies, and telemetry can require deep expertise, which may slow down deployment and increase maintenance costs.

Additionally, Envoy’s core design focuses heavily on microservices communication and service mesh integration, which may not fully address certain enterprise needs. For example, teams seeking integrated AI traffic management, enhanced agent orchestration, or enterprise-grade GPU and workload observability may find Envoy insufficient out of the box. Similarly, managing multi-cloud or hybrid deployments at scale can be cumbersome with Envoy alone, especially when advanced security, governance, or compliance features are required.

Other considerations include performance and resource efficiency. While Envoy is high-performance, its rich feature set can introduce overhead for lightweight workloads or small-scale services.

Organizations also increasingly prioritize tools that unify observability, governance, and orchestration under a single platform rather than relying on separate configurations and external plugins.

Exploring alternatives allows teams to find solutions tailored to their operational priorities. Platforms such as TrueFoundry, Kong AI Gateway, NGINX Plus, Linkerd, and Consul Connect each offer different strengths, including centralized AI traffic management, enhanced tracing, simplified multi-model orchestration, lighter-weight service meshes, and identity-based security. Choosing the right alternative can reduce complexity, improve scalability and governance, and shorten time-to-production for modern distributed applications.

Top 5 Envoy Proxy Alternatives

Choosing the right gateway for your applications can make a huge difference in performance, security, and scalability. While Envoy Proxy remains a powerful and flexible option, many organizations look for alternatives that offer simpler management, enterprise-grade features, or integrated AI capabilities.

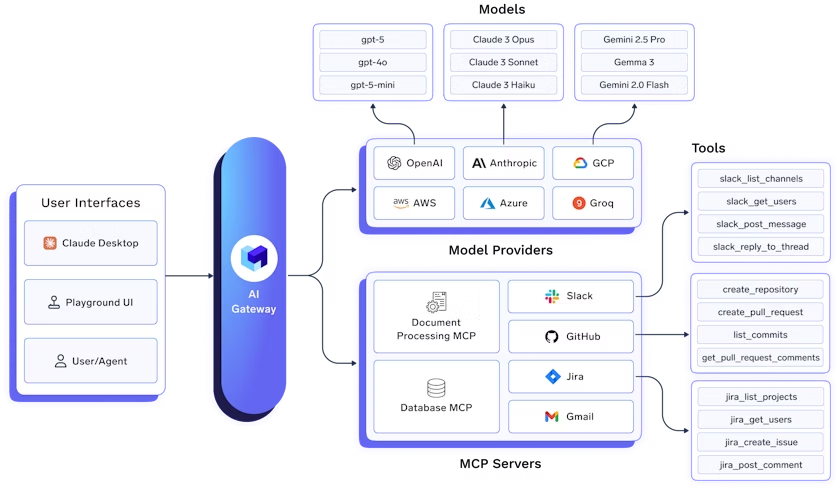

1. TrueFoundry

TrueFoundry offers an enterprise-grade AI Gateway designed to securely manage, route, and observe API and AI workloads at scale. The gateway centralizes LLM and service traffic, providing unified access across multiple AI models, including OpenAI, Claude, Gemini, Groq, Mistral, and more.

By integrating routing, observability, and governance, TrueFoundry simplifies traffic management while ensuring high performance and low latency for production workloads.

The platform enables organizations to orchestrate multi-model workloads seamlessly, centralize API key management, and implement strict team-based access control. Full observability allows monitoring of latency, token usage, error rates, and request volumes, with centralized logging to inspect requests and responses, ensuring compliance and rapid debugging.

Key Features

- Unified LLM API Access: Connect all major AI models via a single gateway and orchestrate multi-model workloads.

- Observability & Metrics: Track latency, errors, token usage, and request volume; inspect logs by model, team, or region.

- Quota & Access Control: Enforce rate limits, RBAC, and token-based or cost-based quotas for governance.

- Low-Latency Inference: TrueFoundry reports sub-3ms internal gateway latency, supporting real-time chat, RAG, and AI assistants.

- Routing & Fallbacks: Smart routing based on latency or load; auto-fallback to secondary models; geo-aware routing for compliance.

- Self-Hosted Model Support: Deploy LLaMA, Mistral, Falcon, and others in VPC, hybrid, or air-gapped environments with full GPU scheduling and autoscaling.

With its AI Gateway, TrueFoundry ensures secure, reliable, and observable traffic management, making it a robust Envoy Proxy alternative for enterprises looking to streamline their API and AI workflows.
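The "auto-fallback to secondary models" behavior listed above can be illustrated with a generic sketch. This is not TrueFoundry's API: the `route_with_fallback` function, the provider names, and the callables standing in for model clients are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple


def route_with_fallback(
    prompt: str,
    providers: Dict[str, Callable[[str], str]],
    priority: List[str],
) -> Tuple[str, str]:
    """Try each provider in priority order; return (provider_name, response)."""
    errors = {}
    for name in priority:
        try:
            return name, providers[name](prompt)
        except Exception as exc:  # a real gateway would match specific error types
            errors[name] = exc
    raise RuntimeError(f"all providers failed: {errors}")


def primary_model(prompt: str) -> str:
    # Stand-in for a provider that is currently timing out.
    raise TimeoutError("primary timed out")


def secondary_model(prompt: str) -> str:
    # Stand-in for a healthy backup provider.
    return "echo: " + prompt


used, reply = route_with_fallback(
    "hello",
    {"primary": primary_model, "secondary": secondary_model},
    ["primary", "secondary"],
)
```

In a latency- or load-aware setup, the `priority` list would be reordered from live measurements before each call rather than fixed in advance.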

2. Kong AI / Kong Gateway

Kong AI Gateway is a high-performance API and AI gateway designed to simplify multi-LLM integration and API traffic management. It centralizes routing, security, observability, and policy enforcement for both AI workloads and traditional APIs.

Kong provides smart routing, semantic caching, and RAG pipeline integration, allowing enterprises to optimize costs, maintain high availability, and ensure low-latency responses. Its cloud and self-hosted options offer flexibility across hybrid and multi-cloud environments, making it a robust alternative to Envoy Proxy for enterprise traffic orchestration.

Key Features

- Unified API Interface: Manage multiple AI providers and models through a single gateway.

- Semantic Caching & Routing: Reduce redundant calls and optimize response times.

- RAG Pipeline Support: Automatically augment prompts with context from vector databases.

- Observability: Monitor token usage, latency, error rates, and request volume.

- Policy Enforcement: Apply security, access, and rate-limit controls consistently.
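Semantic caching, as listed above, means reusing a stored response when a new prompt is close enough in meaning to an earlier one. The sketch below shows the idea under strong simplifying assumptions: `embed` is a toy bag-of-words stand-in for a real embedding model, and the `SemanticCache` class and its threshold are hypothetical rather than Kong's implementation.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.entries = []  # list of (embedding, response) pairs

    def get(self, prompt):
        """Return the cached response for the most similar prompt, or None."""
        query = embed(prompt)
        best = max(self.entries, key=lambda e: cosine(query, e[0]), default=None)
        if best is not None and cosine(query, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, prompt, response):
        self.entries.append((embed(prompt), response))


cache = SemanticCache()
cache.put("what is envoy proxy", "Envoy is an edge and service proxy.")
hit = cache.get("what is envoy proxy ?")
miss = cache.get("how do I bake bread")
```

The threshold controls the trade-off: set it too low and unrelated prompts share answers, too high and the cache rarely hits.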

3. NGINX Plus

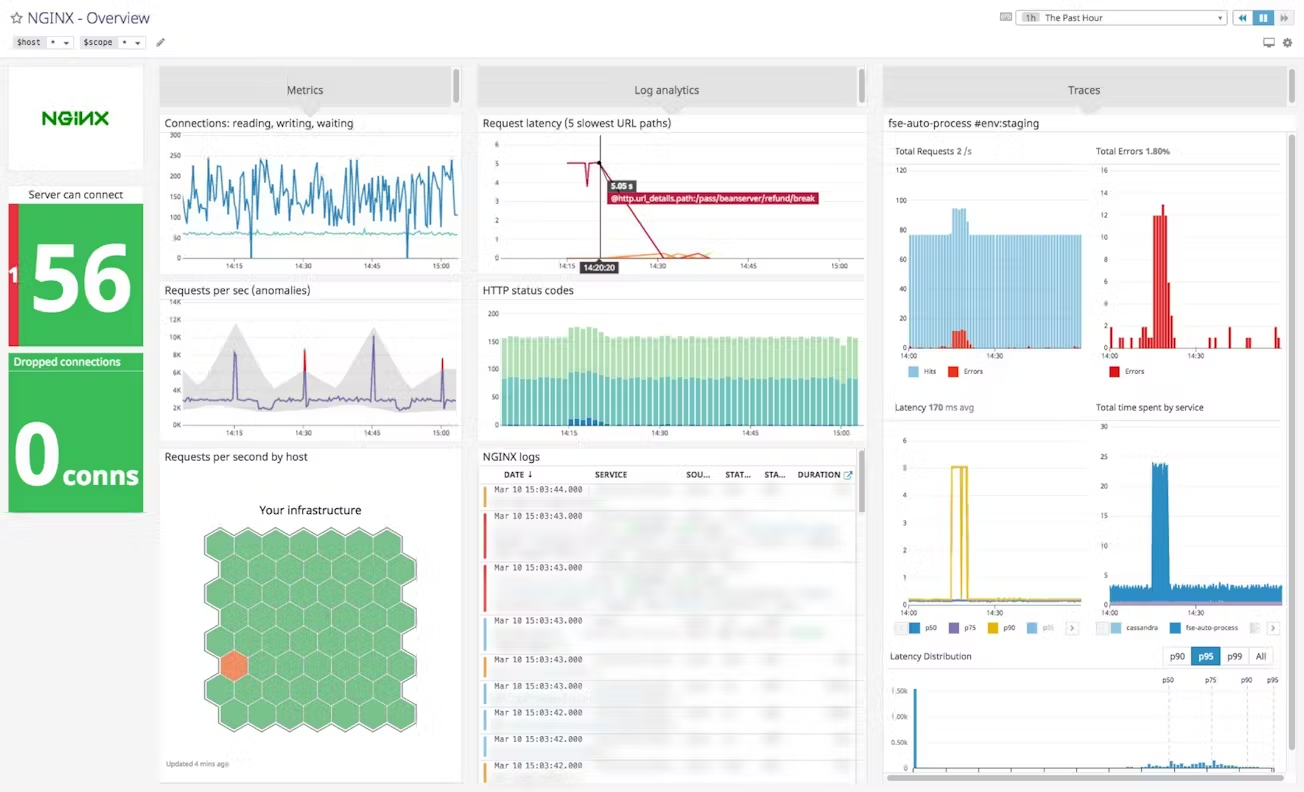

NGINX Plus is an advanced, commercial-grade version of NGINX that combines reverse proxy, load balancing, and API gateway capabilities. It provides enterprises with high-performance traffic management, seamless scaling, and robust observability. NGINX Plus is suitable for both traditional microservices and modern AI applications, offering centralized routing, caching, and security enforcement.

Its flexible architecture supports hybrid, on-prem, and cloud deployments, making it a reliable choice for managing complex API traffic in production.

Key Features

- High-Performance Load Balancing: Distribute traffic efficiently across multiple servers and services.

- Advanced Caching: Reduce latency and backend load with response caching.

- Centralized Routing & Proxying: Handle API and AI traffic seamlessly.

- Observability & Metrics: Monitor server health, request metrics, and performance.

- Security Features: SSL/TLS, access control, and rate-limiting support.

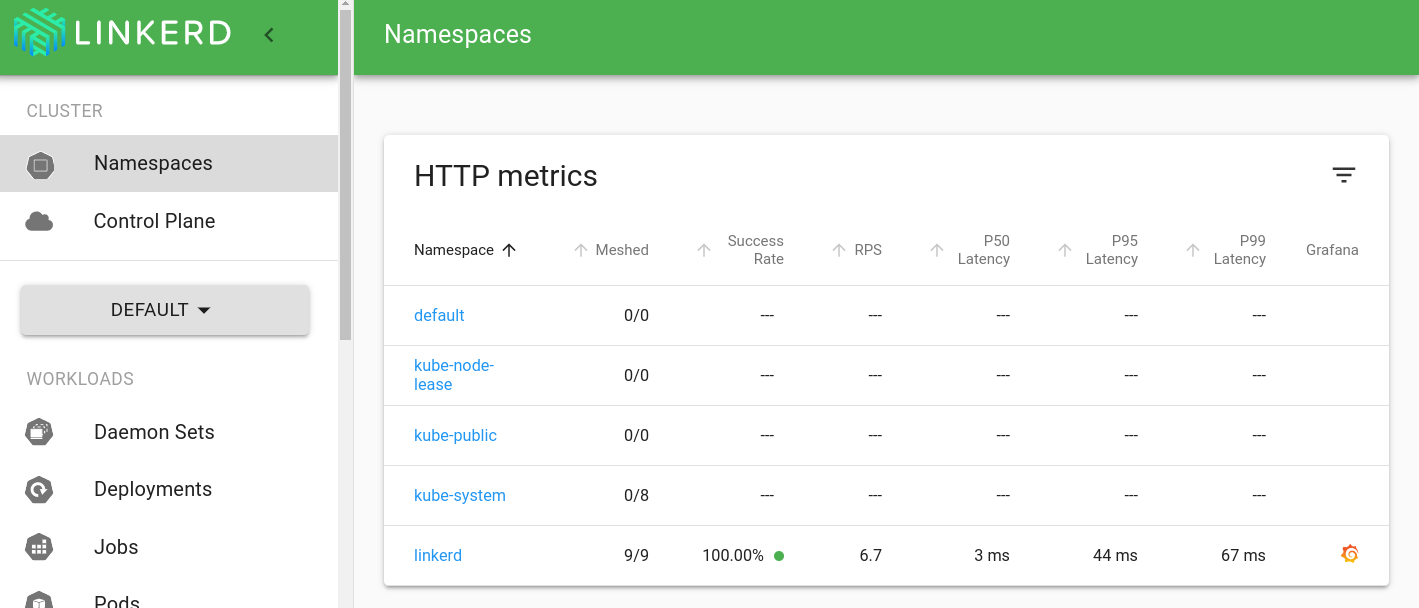

4. Linkerd

Linkerd is a lightweight, cloud-native service mesh designed to simplify service-to-service communication in Kubernetes and microservices environments. Rather than building on Envoy, it ships its own purpose-built proxy and emphasizes simplicity, minimal configuration, and high performance, making it a good fit for teams that want the benefits of a service mesh without the operational complexity.

Key Features

- Sidecar Proxy Deployment: Automatically injects proxies alongside services to manage traffic transparently.

- Traffic Resilience: Supports retries, timeouts, and traffic shifting to prevent cascading failures.

- Observability & Metrics: Collects telemetry, including request counts, latency, and errors for monitoring and debugging.

- mTLS Security: Encrypts all service-to-service communication by default.

- Lightweight & High Performance: Minimal overhead, suitable for small and large-scale deployments alike.

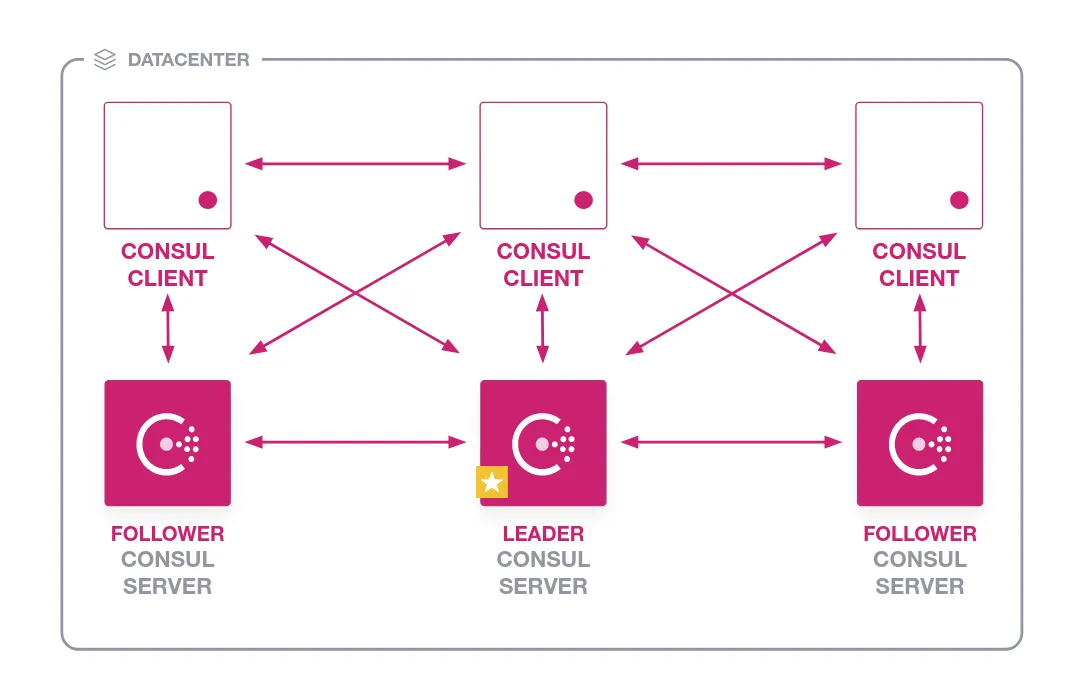

5. Consul Connect

Consul Connect, part of HashiCorp’s Consul platform, is a service mesh focused on secure and reliable service-to-service communication. It uses mutual TLS (mTLS) encryption and identity-based authorization to protect microservices traffic, providing enterprise-grade security and governance.

Key Features

- Mutual TLS Encryption: Secures all traffic between services automatically.

- Identity-Based Access Control: Uses “intentions”, rules keyed on service identity, to allow or deny connections between services.

- Sidecar Proxy Support: Transparent sidecar proxy deployment (Envoy by default) for service-to-service traffic management.

- Service Discovery Integration: Works with Consul for dynamic service registration and health monitoring.

- Observability & Metrics: Tracks service health, request metrics, and performance for operational insights.
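Identity-based authorization of the kind described above boils down to looking up an allow or deny rule for a source and destination service pair. The sketch below is a simplified model, not Consul's API: the `is_allowed` function, the dictionary layout, and the exact precedence order are assumptions made for illustration.

```python
def is_allowed(intentions, source, destination, default_allow=False):
    """Return True if `source` may call `destination`.

    `intentions` maps (source, destination) pairs to "allow" or "deny";
    "*" matches any service. More specific rules are checked first.
    """
    candidates = (
        (source, destination),  # exact match wins
        ("*", destination),     # any source to this destination
        (source, "*"),          # this source to any destination
        ("*", "*"),             # catch-all rule
    )
    for key in candidates:
        action = intentions.get(key)
        if action is not None:
            return action == "allow"
    return default_allow  # no rule matched: fall back to the mesh default


intentions = {
    ("web", "db"): "allow",
    ("*", "db"): "deny",
}
web_to_db = is_allowed(intentions, "web", "db")
api_to_db = is_allowed(intentions, "api", "db")
```

Defaulting to deny when no rule matches keeps new services isolated until someone explicitly grants them access.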

Conclusion

Envoy Proxy has proven itself as a versatile and high-performance edge and service proxy, but modern enterprises often require specialized capabilities such as integrated AI routing, advanced observability, and simplified policy enforcement. This has driven the adoption of alternative platforms that combine traffic management with enterprise-grade security, scalability, and governance.

TrueFoundry stands out by providing a centralized AI and API gateway with full observability, tracing, and policy controls, making it ideal for complex workflows and multi-model environments. Kong AI Gateway complements this with semantic routing, RAG pipeline integration, and multi-LLM orchestration for low-latency, cost-efficient deployments.

Established proxies and service meshes such as NGINX Plus, Linkerd, and Consul Connect continue to offer reliable load balancing, caching, mTLS, and service-to-service traffic management for a wide range of applications.

Choosing the right alternative depends on your organization’s specific needs, whether that’s AI-focused traffic, cloud-native integration, or enterprise-grade compliance and scalability.
