Top 5 MCP Gateways of 2025

Here's a scenario that played out across hundreds of enterprise engineering teams in late 2024: You've built an AI agent that can write code, analyze data, and generate reports. It works beautifully in demos and presentations. But as soon as you try to connect it to your actual tools (Slack, Jira, internal databases), you're drowning in authentication flows, security reviews, and integration hell.

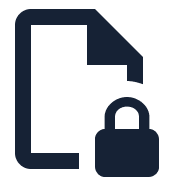

This infrastructure gap is exactly what Anthropic tackled when they released the Model Context Protocol (MCP) in November 2024. The protocol promised something elegant: a standardized way for AI agents to discover and interact with tools without custom integrations for every API, database, or internal system.

Earlier tools and connectors were API-based and designed for direct, code-level integration with systems in fixed formats. AI systems handled them poorly, which caused all kinds of reliability issues. MCP was not just a new tool-calling schema; it was a complete communication protocol designed for AI systems.

But the jump from protocol specification to production-ready infrastructure turned out to be much bigger than anyone expected. Teams quickly realized that while MCP solved the integration problem, it created new challenges around security, observability, and operational management that the base protocol simply didn't address.

As MCP adoption surged, another infrastructure gap opened: management overhead. In just two years, that burden had moved from LLMs to connectors to MCP servers.

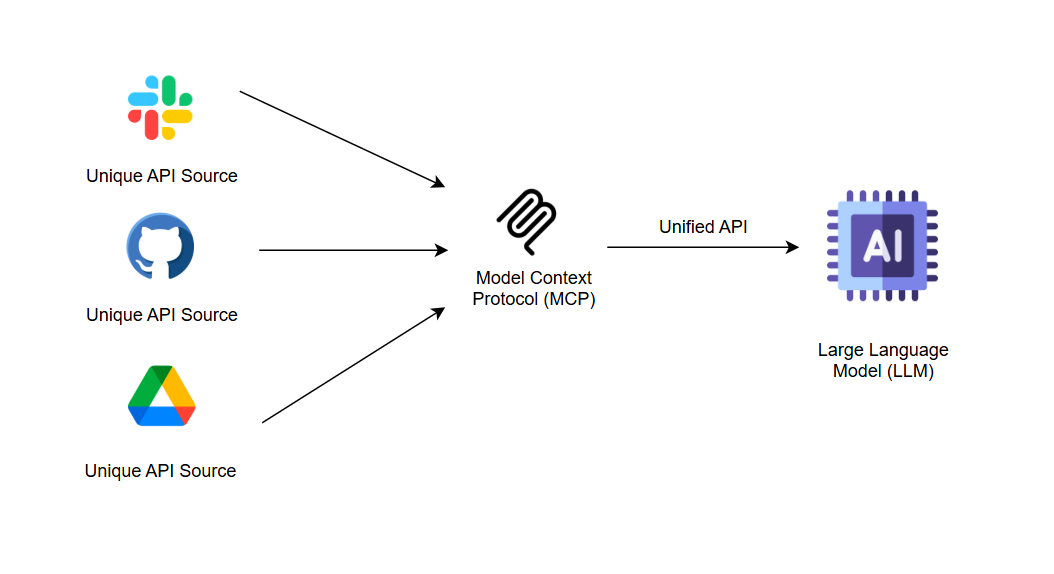

Enter MCP Gateways, the new infrastructure layer that bridges this gap. These aren't just proxy servers; they're the control plane that makes AI agent tooling enterprise-ready. Today, we're examining five solutions that represent fundamentally different approaches to the same critical challenge: how do you safely and centrally manage AI agent interactions with real-world tools at scale?

Why Do You Need an MCP Gateway?

Before we dive into specific solutions, let's establish what we're really solving. Running MCP servers directly might work for small or individual use cases, but it exposes three critical problems that enterprises can't ignore:

Security Issues: MCP servers execute with whatever permissions you grant them. As systems expand, managing permissions, security groups, authentication, user-specific roles, and container-based isolation by hand quickly becomes unmanageable.

The Visibility Black Hole: Direct MCP connections provide zero insight into what agents are actually doing with your tools. Logs, reports, and usage analysis stay hidden unless they are collected and presented in a structured way on a well-managed dashboard.

Operational Chaos: Managing individual MCP servers becomes unwieldy fast, because each one has to be configured, deployed, and updated on its own. Multiply that by dozens of tools and multiple environments, and you've got an operational nightmare.

MCP Gateways solve these problems by providing security isolation, comprehensive observability, and centralized management. But as we'll see, each solution takes a distinctly different design approach.

1. TrueFoundry

Core Philosophy: If you're already managing AI infrastructure, why fragment it across different systems?

TrueFoundry's approach builds on a simple but powerful insight: most organizations already have AI infrastructure for managing LLMs. Instead of building parallel infrastructure for MCP tools, they've unified everything into a single control plane that handles both with identical security, observability, and performance characteristics. This simplifies management of AI systems and provides a centralized control and monitoring platform.

Lightning Latency

The performance numbers tell the story: sub-3ms latency under load, achieved by handling authentication and rate limiting in-memory rather than through database queries. When agents are making hundreds of tool calls per conversation, this performance difference compounds significantly.
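To make the in-memory idea concrete, here is a minimal sketch of a per-key token-bucket rate limiter that keeps all of its state in process memory, so no database query is needed per request. This is an illustration only, not TrueFoundry's implementation; the class names, the `api_key` parameter, and the limits used are assumptions.

```python
import time
from typing import Dict, Optional


class TokenBucket:
    """Token bucket held entirely in memory (hypothetical sketch)."""

    def __init__(self, capacity: float, refill_per_sec: float) -> None:
        self.capacity = capacity              # maximum burst size
        self.refill_per_sec = refill_per_sec  # sustained requests per second
        self.tokens = capacity                # start with a full bucket
        self.updated_at: Optional[float] = None

    def allow(self, now: Optional[float] = None) -> bool:
        """Return True and consume one token if the request may proceed."""
        now = time.monotonic() if now is None else now
        if self.updated_at is not None:
            # Refill in proportion to the time elapsed since the last call.
            elapsed = max(0.0, now - self.updated_at)
            self.tokens = min(self.capacity,
                              self.tokens + elapsed * self.refill_per_sec)
        self.updated_at = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class InMemoryLimiter:
    """One bucket per API key, stored in a plain dict."""

    def __init__(self, capacity: float, refill_per_sec: float) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, api_key: str, now: Optional[float] = None) -> bool:
        bucket = self._buckets.get(api_key)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_sec)
            self._buckets[api_key] = bucket
        return bucket.allow(now)
```

A gateway would call `limiter.allow(api_key)` before forwarding each tool call. The trade-off of this design is that the counters are local to one process, so a multi-replica deployment would need some way to share or partition them.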

Centralised & Integrated Infrastructure

MCP Server Groups provide logical isolation that other gateways often overlook. Different teams can experiment with different MCP servers without creating security holes or configuration conflicts. This matters more in practice than most teams realize.

TrueFoundry also supports containerized MCP deployment, unified integration with its AI Gateway, and seamless authentication and access control, along with custom configs, rate limiting, load balancing, fallback mechanisms, guardrails, and cloud model deployments.

The interactive playground generates production-ready code snippets across multiple programming languages. This isn't just developer convenience; it lowers the barrier between experimentation and actual deployment.

But perhaps the biggest advantage is unified billing and observability. Organizations already tracking LLM costs get a consolidated view of tool usage costs and performance metrics. This prevents the budget surprises that have caught many early MCP adopters off guard.

Target Audience

Organizations already running significant AI workloads that want to extend their existing infrastructure rather than fragment it. The unified approach particularly appeals to teams that prefer comprehensive AI infrastructure management from a single vendor. It also suits organizations looking for a feature-rich solution that is easy to use and manage. For most engineering teams it is a strong enterprise-level offering, complemented by integrations (n8n, Slack, Claude Code, etc.) and cloud offerings (deployment, fine-tuning).

2. Docker

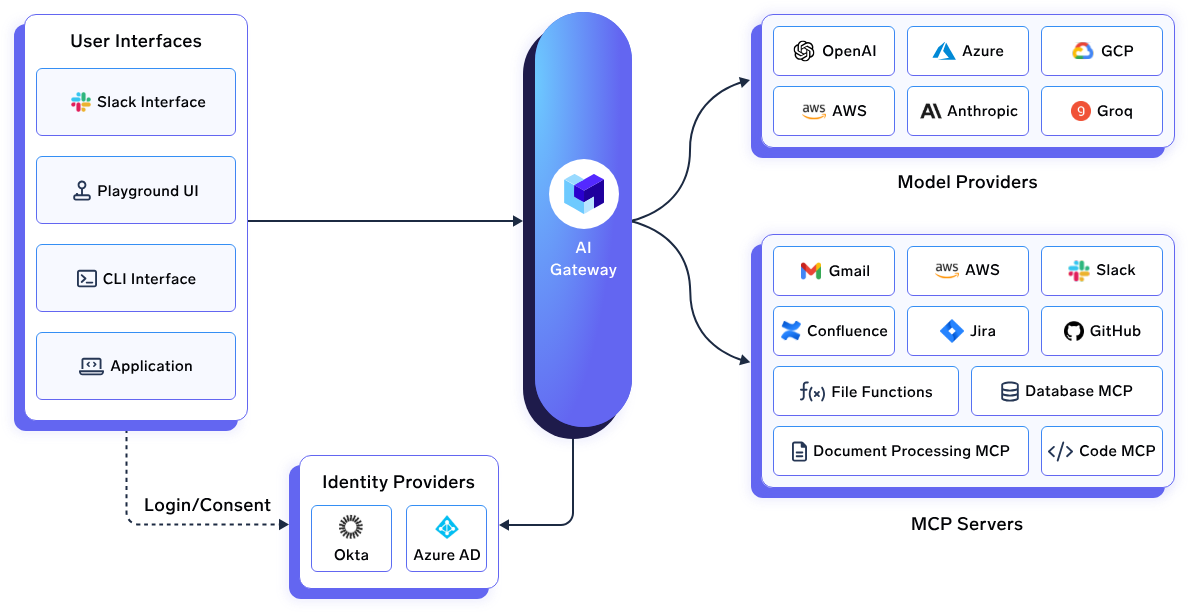

Core Philosophy: Treat MCP servers like any other workload that needs isolation, security, and environment management, and handle all of it through containers. Docker entered the MCP space by leveraging its core strength, containerization, which benefits engineering teams with container-heavy infrastructure.

The Container Advantage

The isolation model addresses something that keeps enterprise security teams up at night: tool poisoning attacks. Each MCP server runs in a container limited to 1 CPU core, with memory capped at 2 GB and no host filesystem access by default. This isn't just security theater; it's about predictable resource usage and protection against runaway processes.
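The limits described above map onto standard `docker run` flags. The sketch below builds such a command line in Python; it is an illustration of the resource caps, not a description of how Docker's MCP tooling launches servers internally. The function name and the image name `example/mcp-server:latest` are hypothetical.

```python
from typing import List


def docker_run_args(image: str, cpus: float = 1.0, memory: str = "2g") -> List[str]:
    """Build a `docker run` command with CPU and memory caps.

    No -v/--volume/--mount flag is passed, so no host directory is
    bind-mounted into the container.
    """
    return [
        "docker", "run",
        "--rm",                # remove the container when it exits
        "-i",                  # keep stdin open (stdio-based MCP servers read from it)
        "--cpus", str(cpus),   # cap CPU usage at one core by default
        "--memory", memory,    # cap memory at 2 GB by default
        image,
    ]


# Hypothetical image name, used only for illustration.
args = docker_run_args("example/mcp-server:latest")
print(" ".join(args))
```

The resulting list can be passed to `subprocess.run(args)` on a machine with Docker installed.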

Cryptographically signed container images provide supply chain security. When you're running tools that can access production systems, knowing exactly what code you're executing becomes critical.

The Docker Desktop integration has significantly lowered the barrier to secure, isolated experimentation: developers can try out MCP servers safely without complex setup procedures.

Best Fit

Organizations with container-first infrastructure, container-specific use cases (e.g., executing code), and strong security requirements. The Docker approach works particularly well for teams that are comfortable with container security models and want to apply familiar patterns to MCP deployment.

3. IBM MCP Gateway

Core Philosophy: Enable sophisticated multi-gateway deployments with maximum architectural flexibility.

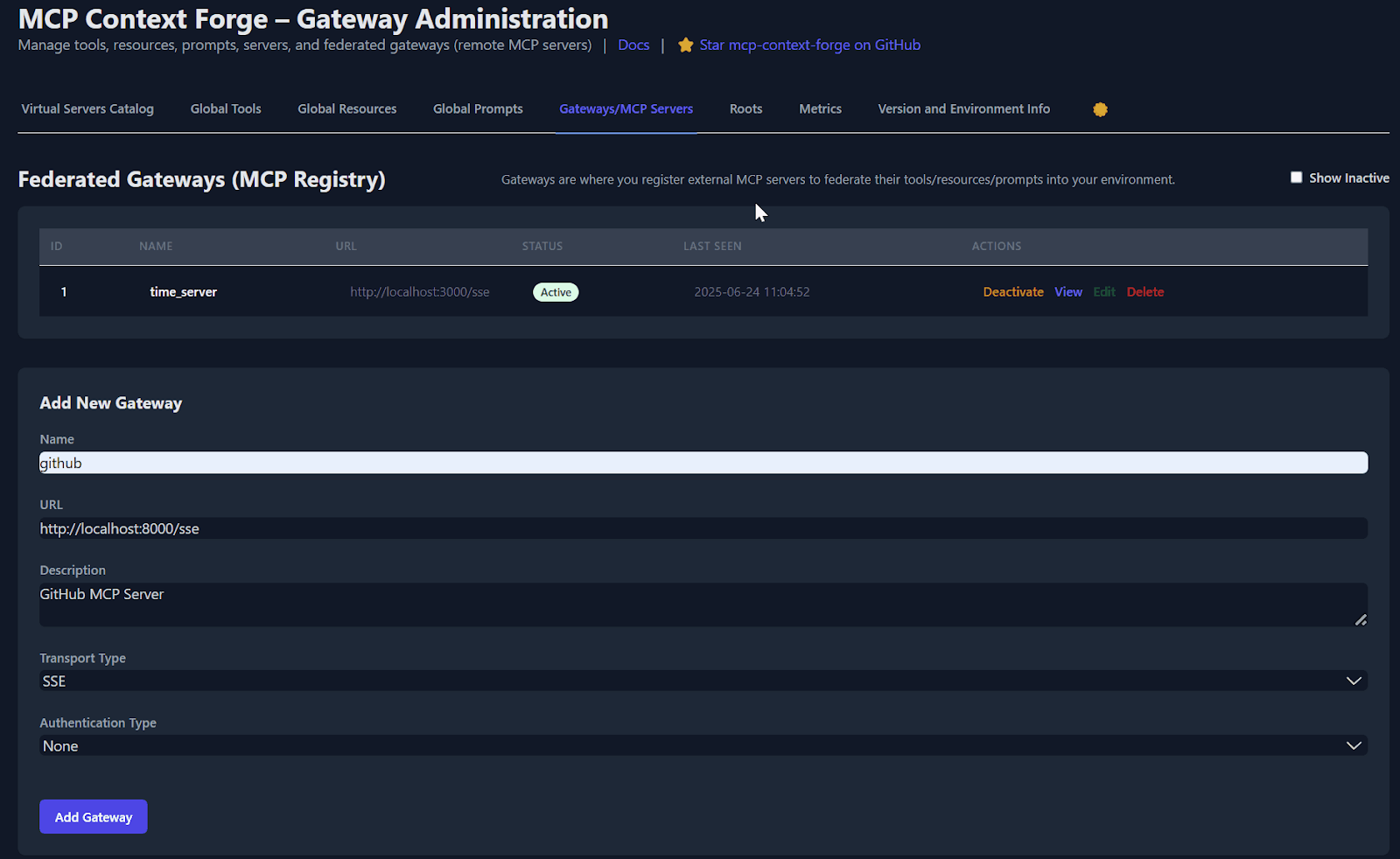

IBM's Context Forge represents the most architecturally ambitious approach in the market, though their explicit disclaimer about a lack of official IBM support creates some adoption friction for enterprise customers.

Federation Capabilities

The federation features distinguish Context Forge from simpler gateway approaches. Auto-discovery via mDNS, health monitoring, and capability merging enable deployments where multiple gateways work together seamlessly. For very large organizations with complex infrastructure spanning multiple environments, this federation model solves real operational problems.

Flexible authentication supports JWT Bearer tokens, Basic Auth, and custom header schemes with AES encryption for tool credentials. The multi-database support (PostgreSQL, MySQL, SQLite) allows integration with existing enterprise systems without architectural changes.
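As a rough illustration of the three authentication styles listed above, the following sketch builds the corresponding HTTP request headers using only the Python standard library. The function names and the `X-Api-Key` header are assumptions for illustration, not Context Forge's API, and the AES encryption of stored credentials is out of scope here because it requires a third-party cryptography library.

```python
import base64
from typing import Dict


def bearer_header(token: str) -> Dict[str, str]:
    """Authorization header carrying a JWT (or any bearer token)."""
    return {"Authorization": f"Bearer {token}"}


def basic_header(username: str, password: str) -> Dict[str, str]:
    """HTTP Basic Auth: base64 of 'username:password'."""
    raw = f"{username}:{password}".encode("utf-8")
    return {"Authorization": "Basic " + base64.b64encode(raw).decode("ascii")}


def custom_header(name: str, value: str) -> Dict[str, str]:
    """Custom header scheme, e.g. an API key in a vendor-specific header."""
    return {name: value}


# Hypothetical credentials, for illustration only.
print(bearer_header("example.jwt.token"))
print(basic_header("user", "pass"))
print(custom_header("X-Api-Key", "example-key"))
```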

The virtual server composition feature lets you combine multiple MCP servers into a single logical endpoint, simplifying agent interactions while maintaining backend flexibility.
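Conceptually, composition means merging several tool catalogs into one namespace and remembering which backend owns each tool. The sketch below shows one way this could work, assuming tools are namespaced as `backend.tool` to avoid name collisions; the function name, the naming scheme, and the example catalogs are hypothetical and do not reflect Context Forge's actual behavior.

```python
from typing import Dict, List, Tuple


def compose_virtual_server(backends: Dict[str, List[str]]) -> Dict[str, Tuple[str, str]]:
    """Merge per-backend tool lists into a single routing table.

    Keys are namespaced tool names exposed to the agent; values are
    (backend, original tool name) pairs used to forward the call.
    """
    routes: Dict[str, Tuple[str, str]] = {}
    for backend, tools in backends.items():
        for tool in tools:
            routes[f"{backend}.{tool}"] = (backend, tool)
    return routes


# Hypothetical catalogs from two MCP servers that share a tool name.
routes = compose_virtual_server({
    "jira": ["create_issue"],
    "slack": ["post_message", "create_issue"],
})
for exposed_name in sorted(routes):
    print(exposed_name, "->", routes[exposed_name])
```

The agent sees one endpoint with three tools, while the gateway keeps enough information to route each call to the right backend.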

Target Market

Large organizations with sophisticated DevOps teams comfortable with infrastructure management. The federation model particularly appeals to enterprises that anticipate running multiple MCP gateway deployments across different environments or regions.

Important Caveat

The alpha/beta status and explicit lack of commercial support make this suitable primarily for organizations with the internal expertise to handle production issues independently; it is not recommended for most enterprise use cases. Before selecting it, weigh the development effort involved, the legacy nature of IBM products, and the complexity of its management processes.

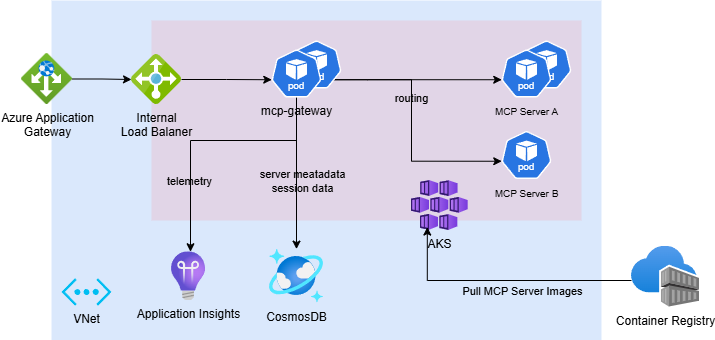

4. Microsoft MCP Gateway

Core Philosophy: Leverage existing Azure infrastructure rather than building parallel systems.

Microsoft's approach reflects its broader ecosystem strategy. Instead of creating a standalone gateway, they've built multiple MCP integration points across Azure services that work together.

Azure Integration Depth

Native Azure AD integration eliminates authentication complexity for Azure customers. OAuth 2.0 flows, policy enforcement through Azure API Management, and integration with existing identity providers work without additional configuration. The Azure MCP Server provides direct integration with other cloud applications and services. This isn't just convenience; it also reduces the code required to connect AI agents with other Azure resources. Kubernetes-native architecture handles session-aware routing and multi-tenant deployments using familiar Azure container orchestration patterns.
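Session-aware routing generally means that every request belonging to one agent session lands on the same backend instance. One common way to get that property is rendezvous (highest-random-weight) hashing, sketched below. This technique is named here as an illustrative choice; the source does not say which algorithm Microsoft's gateway uses, and the function and pod names are hypothetical.

```python
import hashlib
from typing import Sequence


def pick_backend(session_id: str, backends: Sequence[str]) -> str:
    """Choose a backend for a session using rendezvous hashing.

    Each (session, backend) pair gets a deterministic score; the backend
    with the highest score wins. Removing some other backend never
    changes the winner for this session.
    """
    if not backends:
        raise ValueError("no backends available")

    def score(backend: str) -> int:
        digest = hashlib.sha256(f"{session_id}|{backend}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    return max(backends, key=score)


# Hypothetical pod names, for illustration only.
pods = ["pod-a", "pod-b", "pod-c"]
print(pick_backend("session-123", pods))
```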

Integration Matrix

Ideal Scenario

Azure-centric organizations that want MCP capabilities to integrate seamlessly with existing cloud infrastructure, and that are willing to trade quick development times, centralized AI integration, and ease of monitoring for operational robustness and fine-grained flexibility. This profile fits large-scale enterprise use cases. The native integration approach works particularly well for teams already heavily invested in the Azure ecosystem and legacy solutions.

Considerations

Multi-cloud or hybrid deployments face integration challenges that Microsoft's Azure-first design doesn't elegantly address. Organizations should carefully consider the vendor lock-in implications, development pain points, and the complexity of management and monitoring. From an enterprise perspective, it is worth exploring other options that are more flexible and easier to deploy and manage.

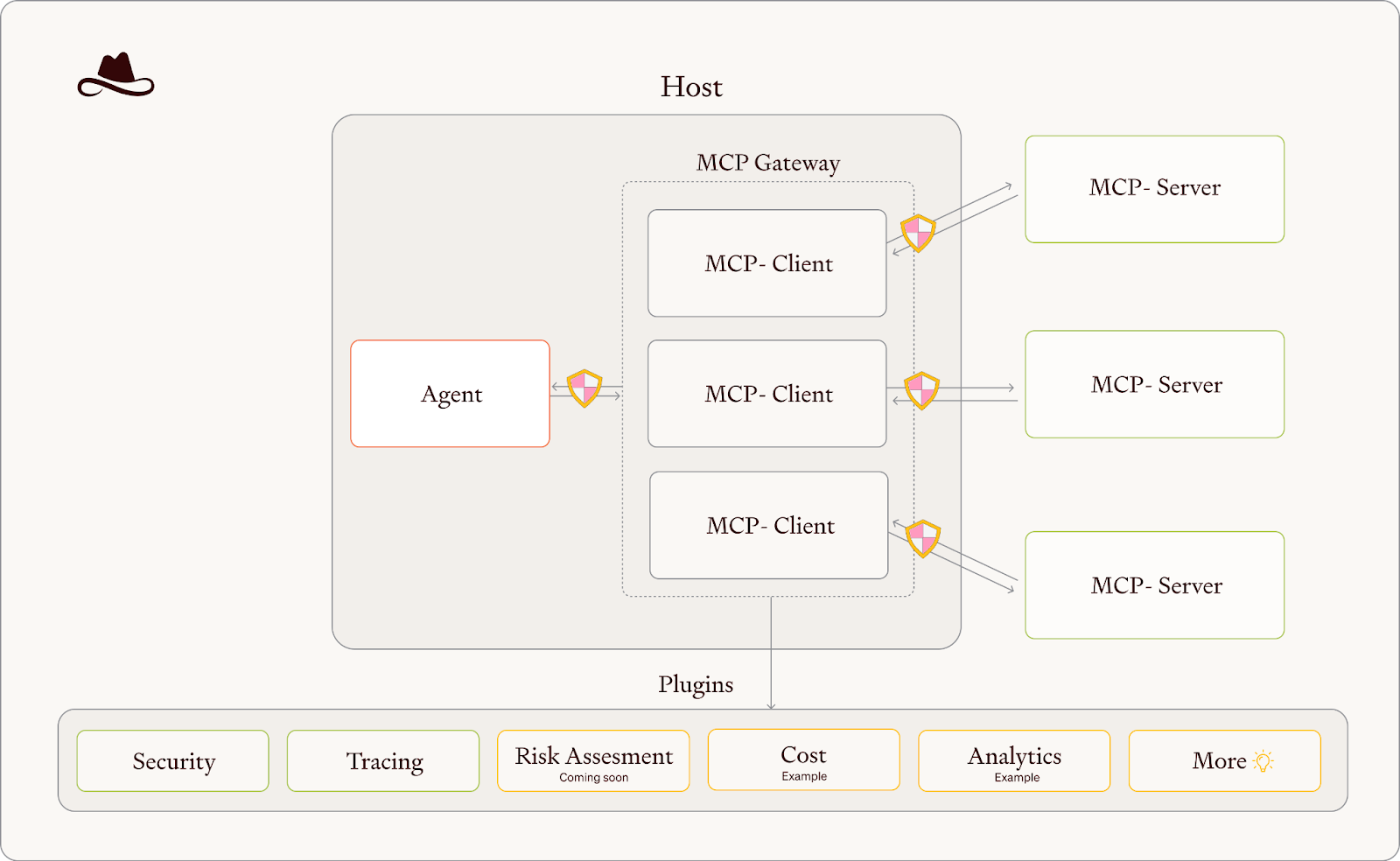

5. Lasso Security

Lasso Security (recognized as a 2024 Gartner Cool Vendor for AI Security) focuses on what they call the "invisible agent" problem, providing visibility and control where traditional security tools fall short.

Features

The plugin-based architecture enables real-time security scanning, token masking, and AI safety guardrails. This modular design allows organizations to add security capabilities incrementally rather than adopting an all-or-nothing approach.

Tool reputation analysis addresses supply chain security concerns that many organizations cite as their primary barrier to MCP adoption. The system tracks and scores MCP servers based on behavior patterns, code analysis, and community feedback.

Real-time threat detection monitors for jailbreaks, unauthorized access patterns, and data exfiltration attempts. Unlike general-purpose security tools, these capabilities are specifically designed for AI agent behavior patterns.
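To show what a plugin-based masking step might look like, here is a minimal sketch in which each plugin is a function from text to text and the gateway runs them in order. It is not Lasso's implementation; the plugin names and the two regular expressions (a bearer token pattern and an `sk-` prefixed key pattern) are assumptions chosen for illustration.

```python
import re
from typing import Callable, List

Plugin = Callable[[str], str]

# Hypothetical patterns; a real deployment would maintain a broader set.
_BEARER = re.compile(r"\bbearer\s+[A-Za-z0-9._\-]+", re.IGNORECASE)
_API_KEY = re.compile(r"\bsk-[A-Za-z0-9]{8,}\b")


def mask_bearer(text: str) -> str:
    """Replace bearer token values with a placeholder."""
    return _BEARER.sub("Bearer [MASKED]", text)


def mask_api_keys(text: str) -> str:
    """Replace 'sk-' style API keys with a placeholder."""
    return _API_KEY.sub("[MASKED_KEY]", text)


def run_plugins(text: str, plugins: List[Plugin]) -> str:
    """Apply each plugin in order; new plugins can be added incrementally."""
    for plugin in plugins:
        text = plugin(text)
    return text


message = "Authorization: Bearer abc.def-123 key=sk-ABCDEFGH1234"
print(run_plugins(message, [mask_bearer, mask_api_keys]))
```

Because each plugin is independent, a team could start with masking alone and add scanning or guardrail plugins to the same list later.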

Target Segment

Organizations in regulated industries or handling sensitive data, where comprehensive security monitoring is non-negotiable. The security-first approach particularly appeals to teams that need detailed audit trails and specialized threat detection capabilities.

Performance and Cost Reality

Real-world deployment data reveals significant differences between marketing claims and production performance. Based on testing across multiple implementations, here's what organizations should actually expect:

Cost Impact Analysis

The operational cost implications are more nuanced than simple pricing comparisons:

- Caching overhead: Higher storage costs due to agent context management

- Success rate improvement: Fewer retries and error handling costs

- Security compliance: Potential savings from reduced security incident response

- Operational efficiency: Reduced manual tool integration and maintenance

How to Evaluate the Best MCP Gateway?

The choice isn't just about features; it's about matching architectural philosophy with organizational reality and long-term strategy.

Choose TrueFoundry if: You're already managing significant AI workloads and want a unified infrastructure. The consolidated approach reduces operational complexity and provides comprehensive observability across both LLM and tool usage without architectural fragmentation, along with quick development times and ultra-low latency.

Conclusion

The MCP Gateway market is moving rapidly, but the fundamental patterns are becoming clear. The solutions that will dominate are those that balance three critical imperatives:

Security depth: As agent capabilities expand, the potential impact of security failures increases exponentially. Gateways that provide comprehensive threat detection and policy enforcement will capture the market segments where security is non-negotiable.

Operational simplicity: The complexity of managing hundreds of MCP tools across multiple environments will drive adoption toward solutions that provide unified management and observability without sacrificing functionality.

Architectural adaptability: As agentic AI requirements evolve, organizations need infrastructure that can adapt and scale without requiring complete reimplementation. The vendors building flexible, extensible platforms today are positioning themselves for long-term success.

But here's the deeper insight: MCP Gateways represent just the first wave of infrastructure requirements for agentic AI. Agent-to-agent communication protocols, multi-modal tool interfaces, and autonomous workflow orchestration will all require similar infrastructure layers. The organizations building comprehensive, secure, and scalable MCP capabilities today are laying the foundation for the broader transformation toward autonomous AI systems.

The market is moving fast, but the fundamentals remain constant: enterprises need solutions that work reliably at scale, integrate with existing security frameworks, and provide operational visibility into AI agent behavior. The MCP Gateway vendors that solve these enterprise realities, rather than just protocol compliance, will capture the largest share of the emerging agentic AI infrastructure market.

For organizations making infrastructure decisions today, the key is choosing a solution that can evolve with your agentic AI requirements while solving your immediate security, observability, and operational challenges. The future belongs to teams that get this infrastructure layer right, and that future is arriving faster than most people realize.
