Inside the Model Context Protocol (MCP): Architecture, Motivation & Internal Usage

Introduction

LLM-powered applications have created a pressing need for models to access up-to-date, domain-specific data from diverse sources. MCP addresses this with a unified protocol that standardises how services expose data and capabilities to any LLM-powered application.

MCP Overview

At its core, MCP follows a JSON-RPC-based client-server architecture where a host application can connect to multiple servers:

- MCP Hosts are LLM-powered applications like Claude Desktop, IDEs, or AI tools that want to access data through MCP.

- MCP Clients implement the client-side MCP protocol in different programming languages. They are the bridge through which the MCP Hosts connect with the MCP Servers.

- MCP Servers expose specific data and capabilities through the MCP protocol. A server can provide three main types of capabilities:

  - Resources are data that the MCP servers make available for the applications to read. They can include file contents, API responses, etc. Resources are application-controlled; the applications decide how to include them in the user flow.

  - Tools are executable capabilities exposed by the MCP servers. For example, a Kubernetes MCP server can expose tools for getting all pods and deleting a pod. Tools are model-controlled; LLMs decide to call them based on the given context.

  - Prompts are templated user interactions or workflows exposed by the MCP server. They are user-controlled; LLM applications decide how to expose them so the user can select the appropriate prompt.
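
To make the three capability types concrete, the sketch below builds the kind of JSON-RPC 2.0 requests an MCP Client sends for each of them. The method names (`resources/read`, `tools/call`, `prompts/get`) are taken from the MCP specification; the resource URI, the tool name `list_pods`, and the prompt name `summarise_incident` are hypothetical and chosen only for illustration.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC requests carry a unique id per request


def jsonrpc_request(method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request envelope with a fresh id."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


# Resource (application-controlled): the host decides to read it.
read_resource = jsonrpc_request("resources/read", {"uri": "file:///tmp/example.txt"})

# Tool (model-controlled): the LLM decides to call it. Tool name is hypothetical.
call_tool = jsonrpc_request(
    "tools/call", {"name": "list_pods", "arguments": {"namespace": "default"}}
)

# Prompt (user-controlled): the user picks it. Prompt name is hypothetical.
get_prompt = jsonrpc_request("prompts/get", {"name": "summarise_incident", "arguments": {}})

print(json.dumps(call_tool, indent=2))
```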

Transport

Transports define how an MCP Client and Server communicate with each other.

STDIO

In this setup, the MCP Client within the MCP Host is responsible for spinning up the MCP Server on the same machine. Communication between the MCP Client and MCP Server happens via STDIN and STDOUT. Here, one MCP Server only communicates with a single MCP client.
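
The spawn-and-pipe pattern described above can be sketched as follows. This is a minimal illustration, not a real MCP client: the child process is a toy stand-in that answers one newline-delimited JSON-RPC message per line, and the helper name `call_over_stdio` is hypothetical.

```python
import json
import subprocess
import sys

# Toy stand-in for an MCP Server (an assumption for this sketch): it reads one
# JSON-RPC request per line on STDIN and writes one response per line on STDOUT.
TOY_SERVER = r'''
import json, sys
for line in sys.stdin:
    req = json.loads(line)
    resp = {"jsonrpc": "2.0", "id": req["id"], "result": {"echo": req["method"]}}
    sys.stdout.write(json.dumps(resp) + "\n")
    sys.stdout.flush()
'''


def call_over_stdio(method: str, params: dict) -> dict:
    """Spawn the server as a child process and exchange one message with it."""
    proc = subprocess.Popen(
        [sys.executable, "-c", TOY_SERVER],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        request = {"jsonrpc": "2.0", "id": 1, "method": method, "params": params}
        proc.stdin.write(json.dumps(request) + "\n")  # one message per line
        proc.stdin.flush()
        return json.loads(proc.stdout.readline())
    finally:
        proc.stdin.close()   # EOF on STDIN lets the toy server exit
        proc.stdout.close()
        proc.wait(timeout=5)


response = call_over_stdio("tools/list", {})
print(response)
```

Because the client owns the child process, each server instance serves exactly one client, which is the one-to-one relationship this transport implies.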

Example: you can run GitHub's official MCP Server (github/github-mcp-server) locally using Docker and a Personal Access Token (PAT). A typical configuration looks like this:

    {
      "mcpServers": {
        "github": {
          "command": "docker",
          "args": [
            "run",
            "-i",
            "--rm",
            "-e",
            "GITHUB_PERSONAL_ACCESS_TOKEN",
            "ghcr.io/github/github-mcp-server"
          ],
          "env": {
            "GITHUB_PERSONAL_ACCESS_TOKEN": "<YOUR_TOKEN>"
          }
        }
      }
    }

Authorisation

Typically, authorisation can happen in two ways:

- Users can pass their token to the MCP Server process through environment variables. This is the most widely used method.

- The MCP Server can implement the OAuth2 device flow. However, the official MCP specification does not define an authorisation mechanism for this transport.
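
As a minimal sketch of the environment-variable approach, a server process might read its token at startup and refuse to run without it. The variable name matches the GitHub configuration shown earlier; the helper name `load_token` is hypothetical.

```python
import os


def load_token(env_var: str = "GITHUB_PERSONAL_ACCESS_TOKEN") -> str:
    """Read the provider token from the environment, failing fast if it is absent."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"{env_var} is not set; the MCP Server cannot authenticate")
    return token
```

Failing at startup, rather than on the first tool call, surfaces a misconfigured token before the LLM attempts to use the server.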

Limitations

- The user’s device, running the LLM-powered application, must have Docker or a Node/Python runtime installed. Though this is not an issue for a developer, it dramatically reduces the number of people who can use the MCP Server.

- Given that the MCP Server is present and running on the user’s device, upgrades become cumbersome, and rapid iteration is affected.

- Web apps running in the browser cannot use this.

- Without OAuth2 device flow, users must configure their tokens for every MCP Server. Tokens are often not stored securely on the device, and rotation is cumbersome.

Given the limitations, STDIO transport is unsuitable for creating custom MCP Servers on top of a company’s service and data source or SaaS subscriptions like GitHub or Slack. However, it will be helpful for developer tools use cases in IDEs.

Streamable HTTP

Streamable HTTP allows an MCP Server to handle multiple MCP Clients connecting and communicating over HTTP. This removes many of the limitations of the STDIO transport, mainly:

- We no longer need to host the MCP Server on the user’s device; it can be hosted anywhere.

- New capabilities added to the MCP server become instantly available to all users.

- Web apps running in the browser can use this.
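
To illustrate why a remotely hosted server is reachable from any client, the sketch below builds (but does not send) the HTTP POST that carries a JSON-RPC message in this transport. The `Accept` header listing both `application/json` and `text/event-stream` follows the Streamable HTTP transport in the MCP specification; the endpoint URL and the helper name `build_mcp_post` are hypothetical.

```python
import json
import urllib.request


def build_mcp_post(endpoint: str, message: dict) -> urllib.request.Request:
    """Wrap a JSON-RPC message in an HTTP POST aimed at a remote MCP endpoint."""
    return urllib.request.Request(
        endpoint,
        data=json.dumps(message).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            # The server may answer with plain JSON or with an SSE stream.
            "Accept": "application/json, text/event-stream",
        },
    )


# Hypothetical endpoint; nothing is sent over the network in this sketch.
request = build_mcp_post(
    "https://mcp.example.com/mcp",
    {"jsonrpc": "2.0", "id": 1, "method": "tools/list", "params": {}},
)
print(request.get_method(), request.full_url)
```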

Authorisation

Authorisation in this transport is actively being developed. The latest specification at the time of writing, released on 2025-03-26, states:

- MCP Auth implementations must follow OAuth 2.1.

- MCP Auth implementations should support Dynamic Client Registration.
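
One practical consequence of the OAuth 2.1 requirement is that clients use PKCE (RFC 7636) with the authorisation code flow. The sketch below shows the S256 challenge derivation defined in that RFC; the function names are hypothetical, and this is only an illustration of the mechanism, not the flow any particular MCP Server implements.

```python
import base64
import hashlib
import secrets


def make_code_verifier() -> str:
    """Generate a random verifier: 32 bytes -> 43 URL-safe characters."""
    return base64.urlsafe_b64encode(secrets.token_bytes(32)).rstrip(b"=").decode("ascii")


def code_challenge_s256(verifier: str) -> str:
    """Derive the S256 challenge: BASE64URL(SHA256(verifier)) without padding."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


verifier = make_code_verifier()
challenge = code_challenge_s256(verifier)
```

The client sends the challenge with the authorisation request and the verifier with the token request, so an intercepted authorisation code alone cannot be exchanged for a token.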

A typical Auth flow would look something like this:

In the above flow, the MCP Server issuing tokens acts as both a Resource Server and an Authorisation Server. This is impractical for MCP Servers for Slack, GitHub, etc., where the provider already implements its own OAuth2 Authorisation Server. A company’s internally hosted services behind IdP-provided Authorisation Servers suffer from the same issue (for example, an ArgoCD installation behind an Authorisation Server provided by Okta).

The specification allows delegating this to a third-party authorisation server, but the MCP Server must still issue its own token and manage its mapping to the third-party token. This negates most of the benefits of a third-party authorisation server, as the MCP Server still needs to implement secure token management and the OAuth2 routes.

Limitations

- MCP Servers with auth require infrastructure like a database for securely storing and managing the lifecycle of their tokens. Every MCP Server would, in effect, become its own Authorisation Server, which is redundant and impractical to maintain within a company. The community is actively working on amending the authorisation spec to improve this.

- Providers like Atlassian, Sentry, Slack, GitHub, etc., do not support Dynamic Client Registration (DCR) or even OAuth2 public clients. Building MCP Servers on top of these providers becomes more difficult for the community and more work for the provider. Atlassian recently published its own MCP Server, but its DCR implementation only allows localhost and a select few redirect URIs, such as Claude's.

TrueFoundry’s Approach

At TrueFoundry, we want our colleagues to be able to connect their Atlassian, Sentry, Slack, GitHub, etc. accounts to LLMs as extra context. We also want to ensure that, via LLMs, every employee can access or act on only the resources they are authorised for. MCP + OAuth2 seems the ideal way to achieve this.

- We want to focus on writing good-quality MCP servers to expose the data and capabilities more efficiently to LLMs. We want to do this securely, adhering to RBAC boundaries defined within the provider.

- We do not want to maintain additional Authorisation Servers for different MCP Servers. We want to reuse the providers' OAuth2 capabilities.

- Providers usually support only OAuth2 confidential clients. DCR and public clients are not an option.

- The MCP Authorisation spec is still actively changing and being worked on. We cannot wait for it to stabilise before working on this.

Given the above, we came up with the following architecture.

There are a few components here.

MCP Servers

We wrote HTTP MCP Servers for providers like Slack, Sentry, Atlassian, GitHub, etc., following the Streamable HTTP transport.

These MCP Servers proxy between the LLM and the Provider’s HTTP APIs. Note that this is not a 1:1 translation. These providers' HTTP APIs return a lot of unnecessary content that can easily derail the LLM and fill up the context window. For example, a list-pods tool on a Kubernetes MCP Server returns a lot of duplicate content, as the pod spec repeats across multiple pods. Large fields, like managedFields, are not required for most users’ requests. Other SaaS providers return nested parent entities and non-functional fields such as avatar URLs. This is where we want to spend most of our development time. Eventually, we need a system in MCP that can help LLMs discover the data model of a system and then dynamically query for specific fields of resources.
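
A minimal sketch of this kind of trimming, using the Kubernetes pod example: each raw pod object is reduced to a handful of fields before it reaches the LLM. The choice of fields to keep and the function name `slim_pod` are assumptions made for illustration, not the fields our servers actually return.

```python
def slim_pod(pod: dict) -> dict:
    """Keep only a few high-signal fields; drops managedFields and the full spec."""
    metadata = pod.get("metadata", {})
    containers = pod.get("spec", {}).get("containers", [])
    return {
        "name": metadata.get("name"),
        "namespace": metadata.get("namespace"),
        "phase": pod.get("status", {}).get("phase"),
        "images": [c.get("image") for c in containers],
    }


# Abbreviated example of a raw API object (values are made up).
raw_pod = {
    "metadata": {
        "name": "web-0",
        "namespace": "default",
        "managedFields": [{"manager": "kubelet", "fieldsV1": {"f:status": {}}}],
    },
    "spec": {"containers": [{"name": "web", "image": "nginx:1.27"}]},
    "status": {"phase": "Running"},
}

print(slim_pod(raw_pod))
```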

These MCP servers expect an HTTP Authorisation header and directly pass it on to the Provider’s HTTP API.
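
The pass-through can be sketched as a small helper that copies the caller's `Authorization` header onto the upstream request and rejects calls that lack one. The function name `upstream_headers` and the extra `Accept` header are assumptions for this sketch.

```python
def upstream_headers(incoming_headers: dict) -> dict:
    """Forward the caller's Authorization header to the Provider unchanged."""
    # HTTP header names are case-insensitive, so match on the lowercased key.
    auth = next(
        (value for key, value in incoming_headers.items() if key.lower() == "authorization"),
        None,
    )
    if not auth:
        raise PermissionError("missing Authorization header")
    return {"Authorization": auth, "Accept": "application/json"}
```

Because the server never mints or stores tokens itself, the Provider remains the only place where access decisions are made.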

Registering MCP Servers on TrueFoundry

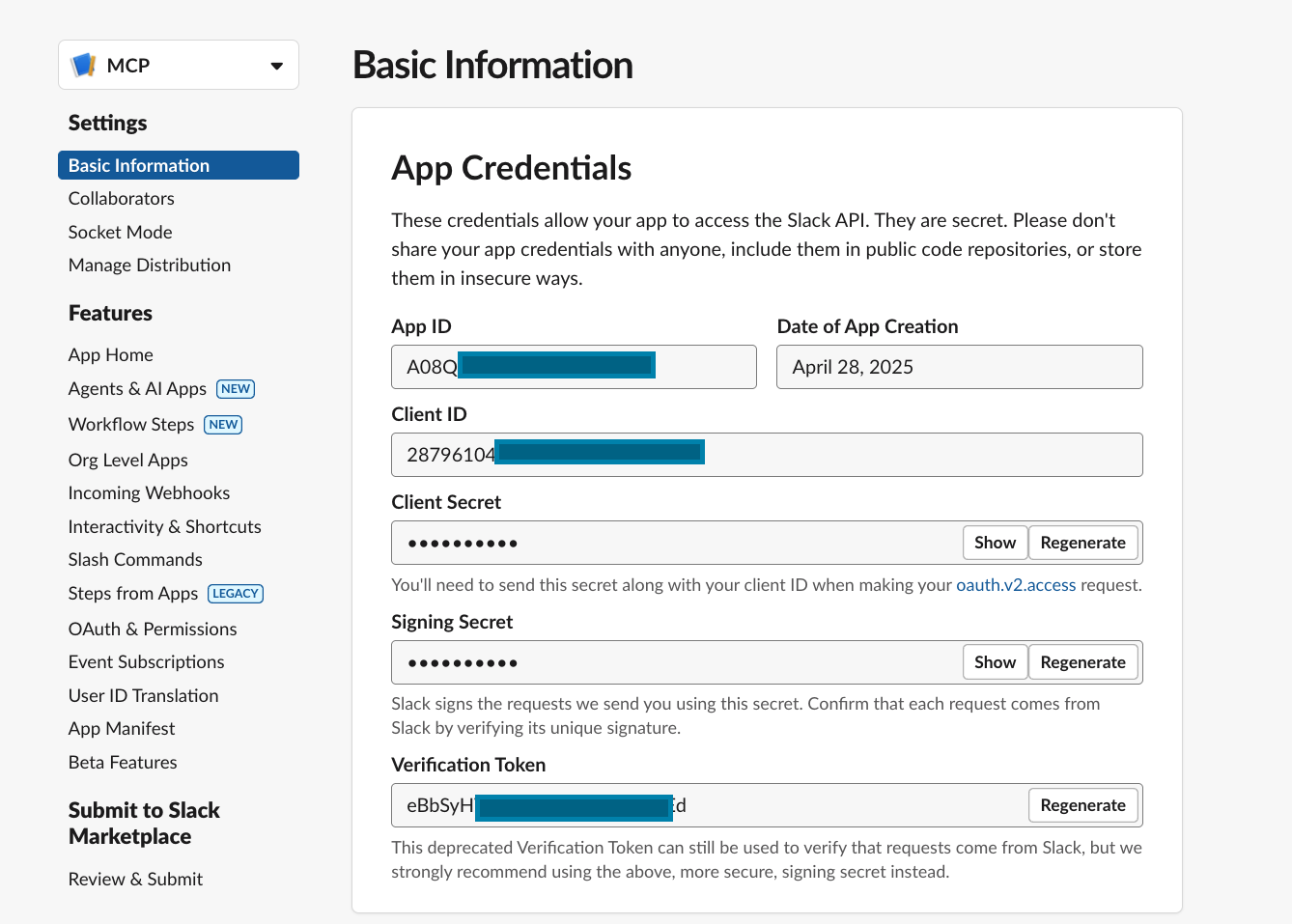

After deploying the MCP Servers, we create OAuth2 Apps or confidential clients for each Provider. This is how it looks for Slack.

Above, you can also notice that the authorizer redirect URI is configured. It usually looks something like https://base-url/mcp-integrations/oauth2/callback.

Then we register the MCP Server as an integration, along with its URL.

We can use the scopes to make the whole integration read-only, or to grant permissions only on specific resource types. Even if the MCP server has tools to “write” resources and the user has “write” access, the LLM cannot exceed the privileges granted by the scopes.

Selecting the MCP Server on the TrueFoundry Gateway and Authorising

Once integrated, we can select the MCP Server on the Gateway Playground.

If the user is not authorised, we use the OAuth2 client information in the integration and walk the user through the OAuth2 Authorisation code flow. At the end of the process, TrueFoundry securely saves the Access and Refresh Tokens (if supported and enabled).
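
The first step of the authorisation code flow, redirecting the user to the Provider, can be sketched as below. The query parameters are the standard ones from RFC 6749; the authorise endpoint, client ID, and scope names are hypothetical placeholders, and the callback path mirrors the redirect URI shown earlier.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlparse


def build_authorize_url(
    authorize_endpoint: str, client_id: str, redirect_uri: str, scopes: list[str]
) -> tuple[str, str]:
    """Return the URL to send the user to, plus the state value to verify on callback."""
    state = secrets.token_urlsafe(16)  # random value that guards against CSRF
    query = urlencode(
        {
            "response_type": "code",
            "client_id": client_id,
            "redirect_uri": redirect_uri,
            "scope": " ".join(scopes),  # space-delimited per RFC 6749
            "state": state,
        }
    )
    return f"{authorize_endpoint}?{query}", state


# All values below are placeholders for illustration.
url, state = build_authorize_url(
    "https://provider.example/oauth2/authorize",
    "my-client-id",
    "https://base-url/mcp-integrations/oauth2/callback",
    ["issues:read", "projects:read"],
)
```

Requesting only read scopes here is what keeps the resulting token read-only, regardless of which tools the MCP Server exposes.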

Users can remove their credentials from TrueFoundry or revoke them directly from the Provider.

Start using it!

Once authorised, you can use the context from the MCP servers directly on our Playground.

Note that we do not pass any OAuth2 credentials to the frontend. The frontend calls an API:

    curl -X POST "https://base-url/api/llm/agent/responses" \
      -H "Authorization: Bearer YOUR_API_TOKEN" \
      -H "Content-Type: application/json" \
      -d '{
        "model": "openai-main/gpt-4o-mini",
        "messages": [
          {
            "role": "user",
            "content": "what are the issues in my app"
          }
        ],
        "tools": [
          {
            "type": "mcp-server",
            "integration_fqn": "truefoundry:custom:devtest-mcp-servers:mcp-server:sentry",
            "tools": ["list_projects", "list_issues"]
          },
          {
            "type": "mcp-server",
            "integration_fqn": "truefoundry:custom:devtest-mcp-servers:mcp-server:slack"
          }
        ]
      }'

The LLM Gateway implements the “agentic” loop: it sends the initial messages and the MCP Servers’ tool descriptions to the LLM. The user can also filter the tools exposed to the LLM. Based on the LLM’s response, the Gateway calls the MCP Servers, passing on the caller’s credentials, and automatically refreshes any short-lived credentials. Updates are streamed to the frontend using Server-Sent Events (SSE).
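
The loop can be sketched in a few lines. This is a simplified illustration, not the Gateway's implementation: the message shapes, the `tool_calls` field, and the `call_llm` / `call_tool` callables are assumptions, and the stubs below stand in for a real LLM and a real MCP Server.

```python
from typing import Callable


def run_agent_loop(
    messages: list[dict],
    tools: list[str],
    call_llm: Callable[[list[dict], list[str]], dict],
    call_tool: Callable[[str, dict], str],
    max_steps: int = 5,
) -> list[dict]:
    """Alternate between the LLM and tool calls until the LLM stops asking for tools."""
    history = list(messages)
    for _ in range(max_steps):
        reply = call_llm(history, tools)
        history.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            return history  # final answer reached
        for call in tool_calls:
            result = call_tool(call["name"], call["arguments"])
            history.append({"role": "tool", "name": call["name"], "content": result})
    return history  # step budget exhausted


def fake_llm(history: list[dict], tools: list[str]) -> dict:
    """Stub LLM: asks for one tool call, then answers once a tool result exists."""
    if not any(m["role"] == "tool" for m in history):
        return {
            "role": "assistant",
            "content": "",
            "tool_calls": [{"name": "list_issues", "arguments": {}}],
        }
    return {"role": "assistant", "content": "You have 1 open issue."}


def fake_tool(name: str, arguments: dict) -> str:
    """Stub MCP tool call returning a canned result."""
    return '[{"title": "TypeError in checkout"}]'


history = run_agent_loop(
    [{"role": "user", "content": "what are the issues in my app"}],
    ["list_issues"],
    fake_llm,
    fake_tool,
)
```

The `max_steps` cap is a design choice in this sketch: it bounds the loop if the model keeps requesting tools without converging on an answer.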

Conclusion

This MCP + OAuth2 flow enabled our colleagues to securely incorporate data from providers like Slack, GitHub, Sentry, etc., into their LLM-centric workflow.

A few use cases:

- LLMs can access Sentry via MCP to fetch error details and correlate with related code changes from GitHub for faster debugging.

- LLMs can create, update, and query Jira tickets through MCP, streamlining developer workflows.

- LLMs can access Slack conversations via MCP to analyse and summarise lengthy discussion threads, helping teams quickly understand decisions and action items without reading entire chat logs.

