The Messy Middle: Surviving the Transition from Rule-Based IVR to Agentic Systems

1. The Transitional Problem – Why Old Systems Fail and LLMs Aren’t “There Yet”

For decades, enterprises have relied on rule-based Interactive Voice Response (IVR) systems to manage customer calls. These systems were designed to deliver efficiency: push callers through menus, recognize a handful of keywords, and route them to the right team or script. At a small scale, this worked. But in an enterprise handling hundreds of millions of calls annually, the limitations of this design become painfully clear. Customers don’t follow scripts. They speak in natural, free-flowing, unpredictable language - sometimes emotional, sometimes technical, sometimes impatient. Rule-based systems simply weren’t built to handle such diverse requests.

The result? High call abandonment rates, frustrated customers, and significant brand damage. Enterprises trying to force-fit human conversations into rigid decision trees are left with longer resolution times, higher agent handover rates, and ballooning operational costs. Worse still, customer experience suffers in ways that directly hit retention and revenue. Industry analytics reported that in 2021 the overall CSAT rating for IVR self-service was 75%, while the overall CSAT rating for IVR navigation was only 53%. [link]

Unlike rule-based systems, LLM-based Agentic systems can parse intent, adapt to context, and respond in natural, human-like language. In theory, they can handle the fluidity of real conversations at scale. They represent the long-awaited step change: not just automating call routing, but engaging customers in a way that feels personalized and empathetic.

The problem is that off-the-shelf LLMs are not trained for the industry-specific operational processes of large enterprises, nor are they hardened to meet enterprise-grade standards for data privacy, reliability, and deployment. Despite their promise, today’s LLMs still exhibit failure modes that make CIOs and compliance officers nervous. They may “hallucinate” responses, misinterpret regulatory-sensitive queries, or vary in tone from one interaction to the next. Reliability, consistency, and compliance - all non-negotiables for a Fortune 500 customer service team - cannot yet be guaranteed.

This leaves enterprises stuck in a transitional problem. The old systems can technically handle the call volume, but they leave a large number of customers unsatisfied because they support too few use cases and cannot keep up with the rising expectations of modern users. Meanwhile, the new systems, though more aligned with today’s user needs, aren’t yet reliable enough to fully replace the old ones.

For technology leaders, this moment is defined by a delicate tension: rely too heavily on legacy systems and risk growing customer dissatisfaction; adopt LLMs too quickly and risk costly errors that erode trust.

2. Why Businesses Can’t Wait – The Cost of Inaction and the Urgency of AI Adoption

For many executives, the safest choice might appear to be waiting until LLMs “mature.” But in practice, that’s not an option. The competitive pressures are too high, and the cost of inaction is steep.

First, customer expectations have shifted irreversibly. Consumers interact daily with AI-powered chatbots, smart assistants, and recommendation engines. When they call into a billion-dollar enterprise, they expect the same level of fluency, personalization, and responsiveness from their IVR experiences. Delivering anything less feels antiquated. In industries like banking, insurance, and telecom, that gap is not just inconvenient - it’s enough to push customers to competitors who are already investing in AI.

Second, the economics of call centers are brutal. Handling 500 million calls a year with human agents alone is infeasible. Even incremental efficiency gains translate into tens of millions in savings annually. Failing to adopt AI now doesn’t just mean lagging behind; it means locking in unnecessary cost structures that erode margins.

Third, competitors are moving. Across industries, we’re already seeing market leaders experiment with AI-driven customer experience. Some may stumble, but the signal to customers and investors is clear: innovation is happening, and brands that lead with AI will differentiate. Waiting on the sidelines risks not only customer churn but also reputational damage as a “slow adopter.”

Finally, there’s the organizational learning curve. Deploying AI responsibly in a Fortune 500 environment takes time: aligning legal teams, training staff, integrating with legacy systems, setting up observability frameworks. These are not capabilities that can be turned on overnight. Even if LLMs become fully reliable tomorrow, enterprises that have not already built the muscle for AI adoption will be years behind.

In short, businesses can’t afford to wait. The risks of inaction - rising costs, lost customers, diminished competitiveness - far outweigh the risks of carefully managed adoption. The challenge is not whether to adopt, but how to adopt responsibly during the messy middle.

3. Real World Use Case: Balancing LLMs with Rule-Based Systems

Recognizing that neither rule-based IVRs nor off-the-shelf LLM-powered solutions could meet the needs of a Fortune 500 enterprise on their own, TrueFoundry worked with one of the largest pharmacy chains in the U.S. to design a hybrid Voice AI agent. The goal was ambitious: replicate the effectiveness of a skilled human pharmacist on millions of routine calls spanning prescription status, refills, store information, inventory checks, and other everyday customer needs.

At the heart of this design is a hybrid optimization approach: combining the efficiency of deterministic rule-based systems with the flexibility of AI-driven conversation. Common and repetitive queries, such as checking store hours or refills ready for pickup, are routed through rule-based fast-path processing. This handles 90–95% of routine requests, avoiding unnecessary LLM calls, reducing latency, and lowering compute costs.
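A minimal sketch of what a rule-based fast path could look like, assuming simple keyword patterns. The patterns, handler names, and canned answers here are hypothetical illustrations, not the rules used in the deployment described above.

```python
import re
from typing import Callable, Optional

# Hypothetical canned handlers; a real system would query store and pharmacy backends.
def store_hours(_: re.Match) -> str:
    return "Your store is open from 9 AM to 9 PM today."

def refill_ready(_: re.Match) -> str:
    return "Your refill is ready for pickup."

# Ordered (pattern, handler) pairs. The patterns are illustrative only.
FAST_PATH_RULES: list[tuple[re.Pattern, Callable[[re.Match], str]]] = [
    (re.compile(r"\b(store|opening|closing)\s+hours\b", re.I), store_hours),
    (re.compile(r"\b(refill|prescription)\b.*\bready\b", re.I), refill_ready),
]

def try_fast_path(utterance: str) -> Optional[str]:
    """Return a deterministic answer if a rule matches, else None (no LLM call is made)."""
    for pattern, handler in FAST_PATH_RULES:
        match = pattern.search(utterance)
        if match:
            return handler(match)
    return None
```

A caller would try `try_fast_path` first and fall through to the slower conversational path only when it returns `None`, which is where the latency and compute savings come from.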

When a customer presents a more complex or ambiguous request, an intelligent routing system takes over. The intent manager uses intent recognition classifiers that take background context into account: context from the prior conversation is automatically added to the customer request. Based on the classified intent, it determines whether the request can be resolved by rules or should be escalated to an LLM-powered conversational flow. This ensures the right balance: predictable responses where precision matters, and natural interactions where flexibility is critical.
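The routing decision can be sketched roughly as follows. The intent names, the confidence threshold, and the keyword-based `toy_classifier` are all assumptions made for illustration; a production intent manager would use a trained classifier and tuned thresholds.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

@dataclass(frozen=True)
class RoutingDecision:
    path: str          # "rules" or "llm"
    intent: str
    confidence: float

# Intents the deterministic flows can fully resolve (illustrative names).
RULE_INTENTS = {"store_hours", "refill_status"}
CONFIDENCE_THRESHOLD = 0.85  # hypothetical cut-off, to be tuned on real traffic

def route(
    utterance: str,
    history: Sequence[str],
    classify: Callable[[str], tuple[str, float]],
    threshold: float = CONFIDENCE_THRESHOLD,
) -> RoutingDecision:
    # Prior turns are prepended so the classifier sees the background context.
    text = " ".join([*history, utterance])
    intent, confidence = classify(text)
    if intent in RULE_INTENTS and confidence >= threshold:
        return RoutingDecision("rules", intent, confidence)
    # Unsupported or low-confidence intents go to the conversational flow.
    return RoutingDecision("llm", intent, confidence)

def toy_classifier(text: str) -> tuple[str, float]:
    """Keyword stand-in for a trained intent model."""
    lowered = text.lower()
    if "hours" in lowered:
        return "store_hours", 0.97
    if "refill" in lowered and "status" in lowered:
        return "refill_status", 0.92
    if "refill" in lowered:
        return "refill_change", 0.60
    return "unknown", 0.30
```

With an empty history, "Can you speed it up?" classifies as `unknown`. With the prior turn "I called about my refill" added, the same utterance resolves to `refill_change`, which is the effect the added context is meant to have.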

For nuanced interactions, like refill expedite and cancel flows, we built conversational agents using LangGraph. These agents integrate with backend systems through tool calling, enabling them to securely process refill requests in real time. Customers can ask naturally (“Can you speed up my refill?”), and the system interprets the intent while executing the necessary backend actions.
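To illustrate the tool-calling idea without depending on any framework, here is a plain-Python sketch. It is not LangGraph code and does not reflect LangGraph's API; the tool names, the call format, and the return values are assumptions chosen for the example.

```python
from typing import Any, Callable

TOOLS: dict[str, Callable[..., dict[str, Any]]] = {}

def tool(fn: Callable[..., dict[str, Any]]) -> Callable[..., dict[str, Any]]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn

@tool
def expedite_refill(prescription_id: str) -> dict[str, Any]:
    # Hypothetical backend call; a real tool would hit the pharmacy system.
    return {"prescription_id": prescription_id, "status": "expedited"}

@tool
def cancel_refill(prescription_id: str) -> dict[str, Any]:
    # Hypothetical backend call, as above.
    return {"prescription_id": prescription_id, "status": "cancelled"}

def execute_tool_call(call: dict[str, Any]) -> dict[str, Any]:
    """Run a model-proposed call of the form {"name": ..., "arguments": {...}}."""
    name = call.get("name")
    if name not in TOOLS:
        # Unknown tools are rejected rather than guessed at.
        return {"error": f"unknown tool: {name}"}
    try:
        return TOOLS[name](**call.get("arguments", {}))
    except TypeError as exc:
        # Raised when the model supplies missing or unexpected arguments.
        return {"error": f"bad arguments for {name}: {exc}"}
```

The key design point is the allow-list: the model can only propose calls, and only registered functions with valid arguments are ever executed against the backend.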

To maintain a human-like feel even during backend operations, we implemented filler text responses (“Please wait a moment while I fetch your details”). These subtle touches reinforce customer trust by making the system feel responsive rather than robotic.
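One way to implement this, sketched with `asyncio`: speak the filler only if the backend has not answered within a short delay. The `say` callback, the delay value, and the `demo` helper are assumptions for illustration.

```python
import asyncio
from typing import Awaitable, Callable

FILLER = "Please wait a moment while I fetch your details."

async def respond_with_filler(
    backend_call: Awaitable[str],
    say: Callable[[str], Awaitable[None]],
    filler_after_s: float = 0.3,  # hypothetical delay before the filler is spoken
) -> str:
    task = asyncio.ensure_future(backend_call)
    # asyncio.wait returns on timeout without cancelling the pending task.
    done, _ = await asyncio.wait({task}, timeout=filler_after_s)
    if not done:
        await say(FILLER)
    result = await task
    await say(result)
    return result

async def demo(delay_s: float) -> list[str]:
    """Simulate a backend lookup that takes delay_s seconds and collect what is spoken."""
    spoken: list[str] = []

    async def say(text: str) -> None:
        spoken.append(text)

    async def lookup() -> str:
        await asyncio.sleep(delay_s)
        return "Your refill is ready."

    await respond_with_filler(lookup(), say, filler_after_s=0.05)
    return spoken
```

Fast lookups skip the filler entirely, so the caller only hears it when there is a real pause to cover.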

Because the solution serves pharmacies, accuracy in interpreting prescriptions was non-negotiable. We integrated industry-specific speech-to-text models fine-tuned for pharmaceutical vocabulary, ensuring correct recognition of complex drug names. This significantly reduces transcription errors that could otherwise frustrate customers or risk patient safety.

All LLM interactions are funneled through a dedicated gateway service backed by a prompt management service. This enables centralized management of prompts and guarantees consistent, pre-configured responses that comply with both regulatory requirements and brand voice. Updates can be rolled out quickly without touching dozens of services, keeping the system agile while ensuring compliance.
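As a rough sketch of centralized prompt management, the class below keeps versioned templates in memory. It is a stand-in for a real prompt management service; the class name, method names, and prompt text are hypothetical.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Mapping, Optional

@dataclass
class PromptRegistry:
    """In-memory stand-in for a prompt management service."""
    versions: dict[str, list[Template]] = field(default_factory=dict)

    def publish(self, prompt_id: str, text: str) -> int:
        """Store a new version and return its 1-based version number."""
        self.versions.setdefault(prompt_id, []).append(Template(text))
        return len(self.versions[prompt_id])

    def render(
        self,
        prompt_id: str,
        variables: Mapping[str, str],
        version: Optional[int] = None,
    ) -> str:
        """Render the requested version, or the latest when none is given."""
        history = self.versions[prompt_id]
        template = history[-1] if version is None else history[version - 1]
        # substitute() raises KeyError on a missing variable instead of
        # silently emitting a partially filled prompt.
        return template.substitute(variables)
```

Because every service renders prompts through one registry, publishing a new version changes behavior everywhere at once, and pinning `version` gives a simple rollback path.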

To safeguard customer experience, the Voice AI agent includes real-time sentiment analysis. If frustration or dissatisfaction is detected, such as repeated negative responses or rising emotional intensity, the system can trigger an escalation to a human agent. This ensures sensitive situations are handled with empathy, preventing negative customer experiences from spiraling.
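A simple escalation trigger could be built on a rolling average of per-turn sentiment scores, as sketched below. The score range of -1 to 1, the window size, and the threshold are assumptions; the scores themselves would come from an upstream sentiment model that is not shown.

```python
from collections import deque

class EscalationMonitor:
    """Tracks per-turn sentiment scores in [-1, 1] and flags when to hand over."""

    def __init__(self, window: int = 3, threshold: float = -0.4) -> None:
        # Only the most recent `window` scores are kept.
        self.scores: deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, score: float) -> bool:
        """Record one turn; return True if the call should go to a human agent."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        average = sum(self.scores) / len(self.scores)
        # Requiring a full window avoids escalating on a single negative turn.
        return window_full and average <= self.threshold
```

Feeding the scores 0.2, -0.5, -0.6, -0.7 in order triggers escalation only on the fourth turn, once the last three turns are all clearly negative.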

Performance monitoring is handled by Analytics AI, an agentic service built to analyze events from across the system. It improves the business operation by:

- Evaluating sentiment trends across millions of conversations to detect frustrated or unsatisfied customers.

- Auto-generating SQL queries across business domains (pharmacy ops, inventory, call center metrics).

- Routing queries intelligently to the correct dataset to perform category-specific analysis.

- Measuring service-level performance, highlighting pain points or emerging bottlenecks.

This closes the loop between technology and business, providing both transparency and actionable insights.

The system is already used in ~2,000 of 10,000 stores, serving thousands of customers daily. The hybrid model ensures scale without compromising trust: LLMs enhance customer interactions where needed, while rules guarantee reliability and speed in the majority of cases. Early deployments show significant improvements in efficiency, call resolution, and customer satisfaction.

In short, the hybrid design is not a compromise - it’s a strategic bridge. It enables enterprises to adopt AI responsibly today, while paving the way for a future where LLMs may take on an even greater share of the load.

4. The Tradeoffs: Transparency About Complexity and Maintenance

While the hybrid Voice AI approach solves the transitional problem, it also introduces its own set of tradeoffs. For enterprises managing hundreds of millions of customer calls, these tradeoffs are not minor details - they shape total cost of ownership, organizational readiness, and long-term sustainability.

1. Increased Architectural Complexity

In a pure rule-based system, the logic is deterministic and relatively simple to trace. In a pure LLM system, the architecture could, in theory, be simplified to a conversational engine plus backend integrations. A hybrid system, however, requires both. This means maintaining parallel infrastructures:

- Deterministic flows for the vast majority of common queries.

- AI-driven flows for nuanced, high-value interactions.

- An intelligent routing layer that decides which path to activate.

The benefit is flexibility and resilience, but the tradeoff is a more complex architecture that requires cross-functional teams to design, monitor, and continuously tune.

2. Higher Maintenance Burden

Traditional IVRs require occasional script updates. By contrast, a hybrid system needs ongoing maintenance across multiple fronts:

- Classifier retraining: Keeping routing models accurate as new call patterns emerge.

- Domain-specific models: Maintaining speech-to-text engines fine-tuned for pharmaceutical vocabulary or other industry needs.

- Monitoring pipelines: Ensuring that the monitoring tools capture the right events and surface actionable insights.

This introduces new maintenance rhythms closer to software product lifecycle management than traditional telephony support.

3. Cost Efficiency vs. Performance Balance

While rule-based fast paths efficiently handle 90–95% of simple, single-intent calls, the hybrid system still incurs costs from:

- Running LLMs for complex flows.

- Operating dual infrastructures.

- Investing in specialized monitoring and analytics systems.

Enterprises must weigh whether the customer experience uplift justifies the added cost. In many cases, it does, but the ROI calculation depends on industry margins, regulatory risks, and customer expectations.
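The cost side of this tradeoff can be made concrete with a small calculation. The per-call costs used in the usage note are placeholders, not measured figures, and `fast_path_share` is treated here as a share of all calls, which is a simplification.

```python
def blended_cost_per_call(fast_path_share: float, rule_cost: float, llm_cost: float) -> float:
    """Average cost per call when a share of calls takes the rule-based path."""
    if not 0.0 <= fast_path_share <= 1.0:
        raise ValueError("fast_path_share must be between 0 and 1")
    return fast_path_share * rule_cost + (1.0 - fast_path_share) * llm_cost

def llm_share_of_cost(fast_path_share: float, rule_cost: float, llm_cost: float) -> float:
    """Fraction of total spend attributable to LLM-handled calls."""
    total = blended_cost_per_call(fast_path_share, rule_cost, llm_cost)
    if total == 0.0:
        return 0.0
    return (1.0 - fast_path_share) * llm_cost / total
```

With placeholder costs of 0.001 per rule-handled call and 0.05 per LLM-handled call, a 90% fast-path share gives a blended cost of 0.0059 per call, and roughly 85% of that spend comes from the 10% of calls that reach the LLM. Small shifts in the fast-path share therefore move total cost substantially.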

4. Governance and Compliance Complexity

Enterprises, particularly in healthcare, banking, and insurance, must enforce strict compliance. A hybrid system introduces more moving parts:

- Rule-based responses offer compliance by design.

- LLM-based flows require guardrails, auditing, and real-time monitoring for hallucinations or off-brand language.

- Intelligent routing must prove explainability: why did a given call go to an LLM vs. a rule?

This increases governance complexity but also provides an opportunity: the hybrid system can be instrumented for greater transparency than either approach in isolation.
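One way to make routing explainable is to emit a structured audit record for every decision. The sketch below assumes a simple threshold-based router; the field names and reason strings are hypothetical.

```python
import json
from datetime import datetime, timezone
from typing import Optional

def build_audit_record(
    call_id: str,
    intent: str,
    confidence: float,
    threshold: float,
    rule_supported: bool,
    classifier_version: str,
    now: Optional[datetime] = None,
) -> str:
    """Return a JSON line explaining why a call went to rules or to the LLM."""
    if not rule_supported:
        path, reason = "llm", "intent has no deterministic flow"
    elif confidence < threshold:
        path, reason = "llm", "classifier confidence below threshold"
    else:
        path, reason = "rules", "supported intent with sufficient confidence"
    record = {
        "timestamp": (now or datetime.now(timezone.utc)).isoformat(),
        "call_id": call_id,
        "intent": intent,
        "confidence": confidence,
        "threshold": threshold,
        "classifier_version": classifier_version,
        "path": path,
        "reason": reason,
    }
    # Sorted keys keep the log lines stable and easy to diff.
    return json.dumps(record, sort_keys=True)
```

Recording the classifier version and threshold alongside the decision lets an auditor reconstruct why a call took a given path even after the models have been retrained.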

5. Organizational Skill Shift

Finally, hybrid adoption requires new skill sets. Enterprises need not just IVR designers, but also:

- Data scientists for routing classifiers.

- Prompt engineers for LLM flows.

- AI operations teams to manage real-time monitoring, sentiment analysis, and escalation triggers.

This talent shift is a non-trivial consideration for enterprises with legacy call center IT teams.

The Bottom Line

The hybrid Voice AI approach is not “set and forget.” It is a living system that demands careful design, continuous monitoring, and organizational investment. The reward is a resilient bridge that allows enterprises to leverage AI without sacrificing reliability. But the tradeoff is real: greater complexity, higher maintenance, and ongoing governance needs.

For leaders making decisions today, acknowledging these tradeoffs openly is key to building trust across technical, operational, and compliance stakeholders.

5. Future Outlook – Balancing the Curves of Old and New

The evolution of customer interaction technologies doesn’t follow a straight line - it follows cycles. New innovations rise with great promise, experience inevitable setbacks, and eventually find their place in the enterprise toolkit. At the same time, traditional technologies don’t simply vanish; they correct, stabilize, and continue to deliver value in niches where their strengths are unmatched.

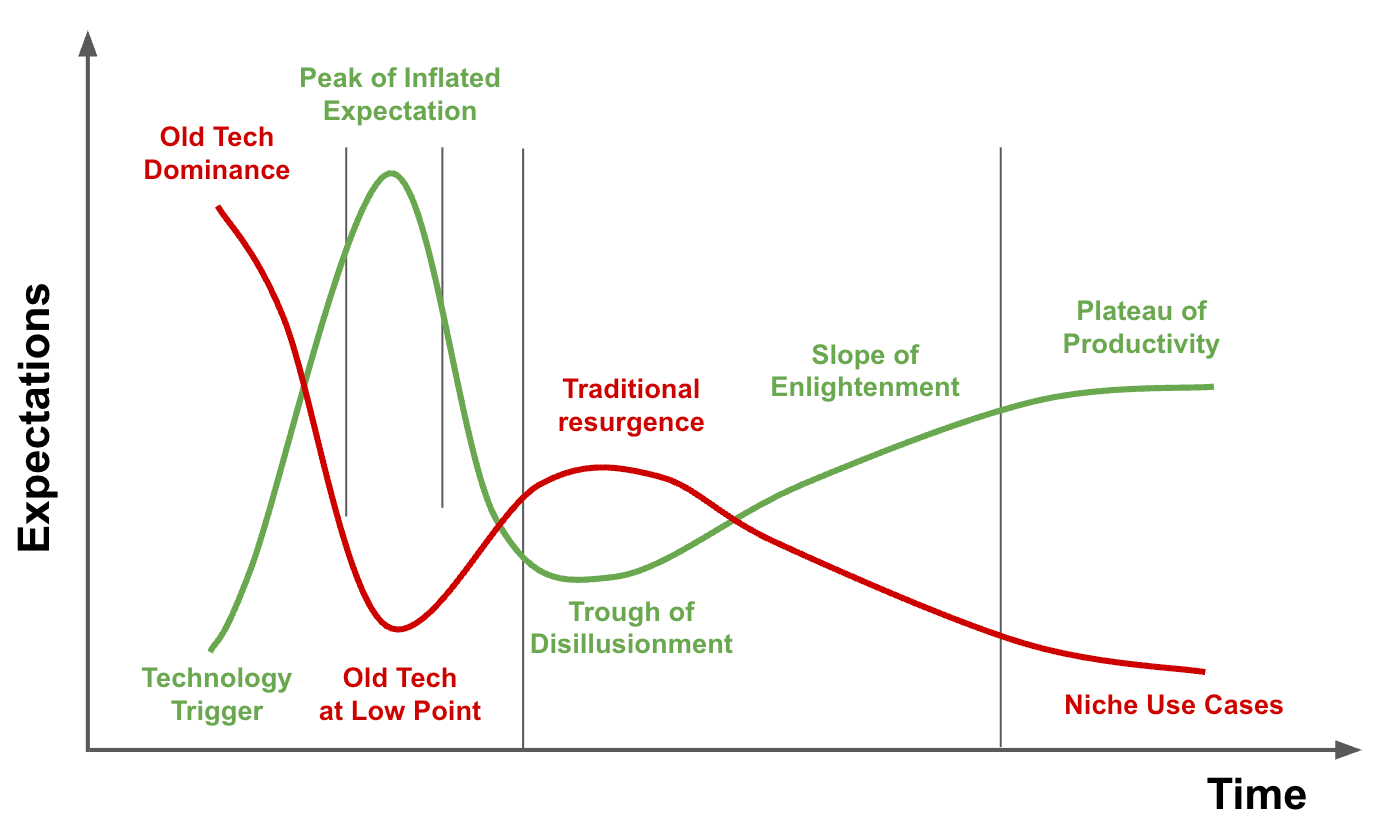

One way to think about this is to expand the Gartner Hype Cycle, which charts how new technologies move from “Innovation Trigger” through the “Peak of Inflated Expectations,” the “Trough of Disillusionment,” and eventually to the “Plateau of Productivity.” Alongside this curve, we can draw a mirror image: the traditional technology “survival” curve.

- At the Peak of Inflated Expectations:

When enthusiasm for the new technology surges, the old one is prematurely declared obsolete. In our space, this is happening with rule-based IVRs. The hype around conversational AI has created the perception that deterministic call flows are already irrelevant, even though they remain highly efficient for predictable, repetitive tasks.

- In the Trough of Disillusionment:

As the limitations of the new technology become clear (high costs, reliability gaps, compliance risks), traditional systems often see a resurgence. Enterprises rediscover the stability and predictability of rule-based systems, appreciating their cost-effectiveness and precision. During this phase, organizations lean more heavily on hybrids to stabilize operations while continuing to experiment with AI.

- At the Plateau of Productivity:

Eventually, the new technology matures, its use cases become well understood, and deployment frameworks make it safe and scalable. At this point, the old technology doesn’t disappear, but it shifts into very specific, undisputed use cases. For IVR, this will mean rules remain the best tool when compliance requires absolute control over wording, or where the cost of even a minor misinterpretation is unacceptable.

Figure 1: New technology hype cycle and resistance of old technology

Where We Are Today

By most indicators, AI-driven voice agents are still approaching the Peak of Inflated Expectations. Enterprises are excited about their transformative potential but are also beginning to encounter their fragility at scale. The truth is, we are still in the process of figuring out the right balance: where AI enhances the experience, and where rules provide guardrails.

This balance is not a compromise; it’s a strategic bridge across the curves. By embracing hybrid approaches now, enterprises position themselves to capture the benefits of AI innovation without discarding the reliability of proven systems. Over time, as AI moves toward its Plateau of Productivity, the role of traditional IVR will narrow, but it will never vanish - it will persist in those niche, mission-critical contexts where predictability remains king.
