Beyond ClickHouse: Why We Built a Zero-Maintenance, S3-Native Logging Architecture

Introduction: The Hidden Cost of Analytics

Every LLM Gateway on the market offers logs and analytics. On the surface, they look like a standard feature. But the architectural choices made behind the scenes have significant, hidden consequences for your reliability, security, and bottom line. How those logs are stored and queried is the detail that separates a truly enterprise-grade platform from a risky proposition.

When we first set out to provide our customers with fast, scalable analytics, we faced this exact challenge. The goal was clear: deliver powerful insights without creating an operational nightmare for our customers' platform teams.

We realized early on that to build a solution worthy of our enterprise customers, we had to innovate beyond the industry-standard approach. This post details our journey from the powerful but problematic ClickHouse to a zero-maintenance, S3-native architecture we call "DeltaFusion"—a system that gives our customers a powerful and durable competitive edge.

The Problem: Why Shipping ClickHouse is a Risky Business

Our initial choice, like many others in the industry, was ClickHouse. It’s a phenomenal piece of open-source technology, renowned for its incredible speed in analytical queries. However, its power comes at a steep operational cost.

The core problem is this: managing a stateful, mission-critical database like ClickHouse inside a customer's cloud environment is an operational minefield. To do it right, you need to handle:

- High Availability (HA): What happens if ClickHouse goes down? Do you have a seamless failover plan?

- Disaster Recovery (DR): Are you performing regular, verified backups? What is the recovery time objective (RTO) if something goes catastrophically wrong?

- Maintenance: Who is responsible for version upgrades, security patching, and performance tuning?

This isn't just theoretical. A single mistaken kubectl delete pv command could wipe out a customer's persistent volume, erasing all of their historical logs and metrics forever. For any enterprise, this level of risk is simply unacceptable. On top of that, we were effectively becoming a managed ClickHouse provider, which distracted from our core mission.

The Landscape of Compromises: How Competitors Fall Short

Faced with this challenge, most platforms in the LLM Gateway space have settled for one of three flawed compromises.

- The "Black Box" Approach (used by platforms like Portkey): They solve the management problem by running a ClickHouse database in their own cloud service.

The Compromise: Customers lose data sovereignty. To use the platform, you are forced to send your logs and metrics, which can contain Personally Identifiable Information (PII) or proprietary business information, outside of your secure cloud environment. For any security-conscious enterprise, this is a non-starter.

- The "DIY" Approach: Some platforms provide a Helm chart or a template and make the customer fully responsible for running their own ClickHouse instance.

The Compromise: The entire, complex operational burden is shifted directly onto the customer's already busy platform team. They are left to figure out the high availability, backups, and maintenance on their own.

- The "Limited Scale" Approach (used by tools like LiteLLM): This involves either using a standard transactional database (like Postgres) which cannot scale for serious analytical workloads, or relying on a messy web of third-party exporters.

The Compromise: This leads to chronically poor performance, a fragmented user experience, and an inability to provide deep, integrated insights.

We rejected all three. There had to be a way to deliver performance, security, and zero operational burden. So we built it.

Our Solution: The "DeltaFusion" Architecture

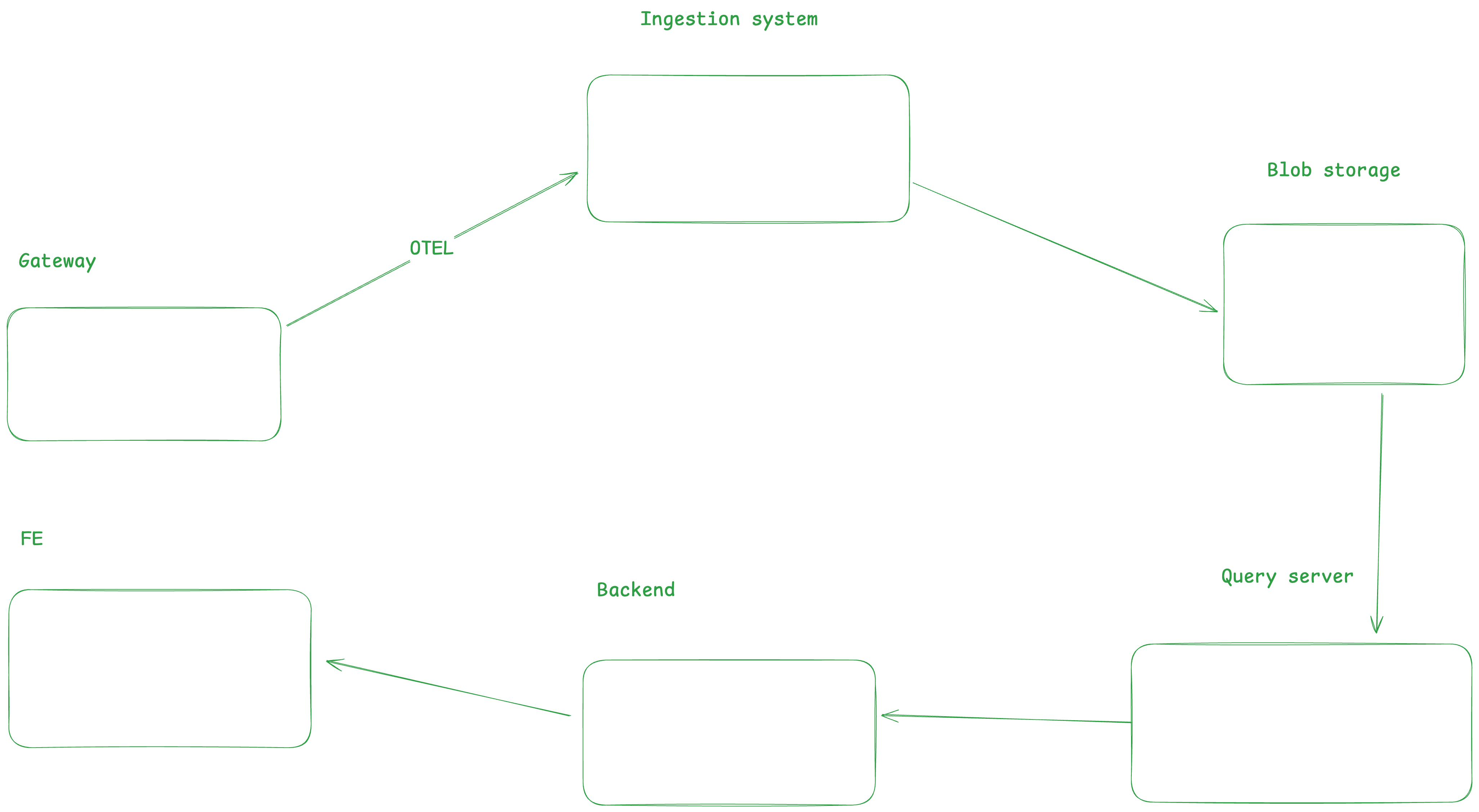

Our guiding principle was simple but powerful: decouple storage and compute. The data should live securely and durably in the customer's own object storage (like S3), while a stateless, scalable engine handles the queries. This led us to our "DeltaFusion" architecture.

The Storage Layer: Your S3 + Delta Lake

First, we eliminated the database server entirely and made the customer's S3 bucket the source of truth.

- Why S3? It instantly solves the HA, DR, and backup problems. AWS, GCP, and Azure have invested billions in making their object storage services incredibly durable and available. Your data is automatically protected within your own environment.

- Why Delta Lake? This is our engineering secret sauce. S3 is just a key-value store; it doesn't inherently understand database transactions. This is where Delta Lake comes in. It's an open-source storage framework that brings ACID transactions (the atomicity and consistency of a traditional database) to your S3 data lake by keeping an ordered transaction log alongside the data files. It's the "magic" that allows our ingestors to write data concurrently without corruption.

- Why the Parquet format? All data is stored in Apache Parquet, a highly efficient, columnar storage format that is the industry standard for analytics. This ensures that you truly own your data and can access it with any tool you want, be it Spark, DuckDB, or Polars. This eliminates vendor lock-in at the storage layer.
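
The concurrency guarantee comes from Delta Lake's transaction log: each write lands as a numbered commit file, and a writer may only create the next number if nobody else has claimed it. The sketch below illustrates that optimistic put-if-absent idea on a local directory using only the Python standard library. It is a simplified illustration, not the Delta Lake library or the ingestor described here: `commit`, `latest_version`, and `demo` are hypothetical names, the commit payload is reduced to a bare file path, and exclusive file creation stands in for the conditional write an object store would need to provide.

```python
import json
import os
import tempfile
import threading

LOG_DIR_NAME = "_delta_log"  # directory name Delta Lake uses for its commit files


def latest_version(log_dir: str) -> int:
    """Return the highest committed version, or -1 if the log is empty."""
    versions = [
        int(name.split(".")[0])
        for name in os.listdir(log_dir)
        if name.endswith(".json")
    ]
    return max(versions, default=-1)


def commit(table_dir: str, added_files: list, max_retries: int = 100) -> int:
    """Record newly written data files as one commit and return its version."""
    log_dir = os.path.join(table_dir, LOG_DIR_NAME)
    os.makedirs(log_dir, exist_ok=True)
    # Simplified payload: one JSON "add" action per data file (assumption).
    payload = "\n".join(json.dumps({"add": {"path": p}}) for p in added_files)
    for _ in range(max_retries):
        version = latest_version(log_dir) + 1
        path = os.path.join(log_dir, f"{version:020d}.json")
        try:
            # O_EXCL makes creation fail if another writer already claimed
            # this version, which emulates a put-if-absent write.
            fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
        except FileExistsError:
            continue  # lost the race: re-read the log and try the next version
        with os.fdopen(fd, "w") as fh:
            fh.write(payload)
        return version
    raise RuntimeError("too many concurrent commit conflicts")


def demo() -> list:
    """Run four concurrent writers against one table; return their versions."""
    with tempfile.TemporaryDirectory() as table_dir:
        results = []
        lock = threading.Lock()

        def writer(i: int) -> None:
            version = commit(table_dir, [f"part-{i}.parquet"])
            with lock:
                results.append(version)

        threads = [threading.Thread(target=writer, args=(i,)) for i in range(4)]
        for t in threads:
            t.start()
        for t in threads:
            t.join()
        return sorted(results)


if __name__ == "__main__":
    print(demo())
```

Each writer ends up with its own version number and no commit is overwritten, which is the property that lets several ingestors append to the same table safely. A reader reconstructs the table by replaying the commit files in order.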

The Query Engine: Apache DataFusion

With a rock-solid data foundation, we needed a powerful engine to query it. We chose Apache DataFusion, a fast, open-source query engine from the Apache Software Foundation. It's a modern, stateless engine that reads Parquet files directly from S3. To overcome S3's inherent network latency, we built a multi-level caching layer (in-memory and on-disk) that keeps hot data ready for querying, delivering a snappy and responsive UI experience.
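
As a rough illustration of the multi-level caching idea, here is a minimal read-through cache sketch in standard-library Python: a small in-memory LRU sits in front of a local disk directory, which sits in front of the remote fetch. This is an assumption-laden sketch rather than the caching layer described above; `TwoTierCache`, `fake_s3_get`, and the eviction policy are all hypothetical, and the fetch function is a stand-in for a real S3 GET.

```python
import hashlib
import os
import tempfile
from collections import OrderedDict
from typing import Callable, Tuple


class TwoTierCache:
    """Read-through cache: memory LRU first, then local disk, then remote."""

    def __init__(self, fetch: Callable[[str], bytes], disk_dir: str,
                 max_memory_items: int = 2) -> None:
        self._fetch = fetch            # stand-in for an object-store GET
        self._disk_dir = disk_dir
        self._max = max_memory_items
        self._memory = OrderedDict()   # key -> bytes, oldest entry first
        os.makedirs(disk_dir, exist_ok=True)

    def _disk_path(self, key: str) -> str:
        # Hash the key so object paths map to flat, filesystem-safe names.
        digest = hashlib.sha256(key.encode()).hexdigest()
        return os.path.join(self._disk_dir, digest)

    def _remember(self, key: str, data: bytes) -> None:
        self._memory[key] = data
        self._memory.move_to_end(key)
        while len(self._memory) > self._max:
            self._memory.popitem(last=False)  # evict least recently used

    def get(self, key: str) -> Tuple[bytes, str]:
        """Return (data, tier), where tier names the level that served it."""
        if key in self._memory:
            self._memory.move_to_end(key)
            return self._memory[key], "memory"
        path = self._disk_path(key)
        if os.path.exists(path):
            with open(path, "rb") as fh:
                data = fh.read()
            self._remember(key, data)
            return data, "disk"
        data = self._fetch(key)
        with open(path, "wb") as fh:
            fh.write(data)
        self._remember(key, data)
        return data, "remote"


def demo() -> Tuple[list, int]:
    """Show which tier serves each read and how many remote fetches happen."""
    remote_calls = []

    def fake_s3_get(key: str) -> bytes:  # hypothetical remote fetch
        remote_calls.append(key)
        return f"bytes of {key}".encode()

    with tempfile.TemporaryDirectory() as disk_dir:
        cache = TwoTierCache(fake_s3_get, disk_dir, max_memory_items=1)
        tiers = [
            cache.get("a.parquet")[1],  # first read goes to the remote store
            cache.get("a.parquet")[1],  # repeat read is served from memory
            cache.get("b.parquet")[1],  # new key; pushes a.parquet out of memory
            cache.get("a.parquet")[1],  # still on disk, so no remote call
        ]
        return tiers, len(remote_calls)


if __name__ == "__main__":
    print(demo())
```

Because immutable Parquet files never change once written, a cache like this does not need invalidation logic for file contents; only the list of live files changes between queries.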

What This Means For You: The TrueFoundry Advantage

This wasn't just an academic engineering exercise. Our DeltaFusion architecture translates into clear, compelling value that directly impacts your business.

- ✅ Zero Operational Overhead: You never have to think about managing, patching, scaling, or backing up a database for your logs and metrics. It just works, saving your platform team countless hours.

- ✅ Complete Data Sovereignty: Your logs and metrics never leave your cloud account. You retain full ownership and control, satisfying the strictest enterprise security and compliance requirements.

- ✅ Lower Total Cost of Ownership (TCO): S3 storage is dramatically cheaper than the provisioned SSDs required for a high-performance database. Our stateless, optimized compute footprint further reduces your cloud bill.

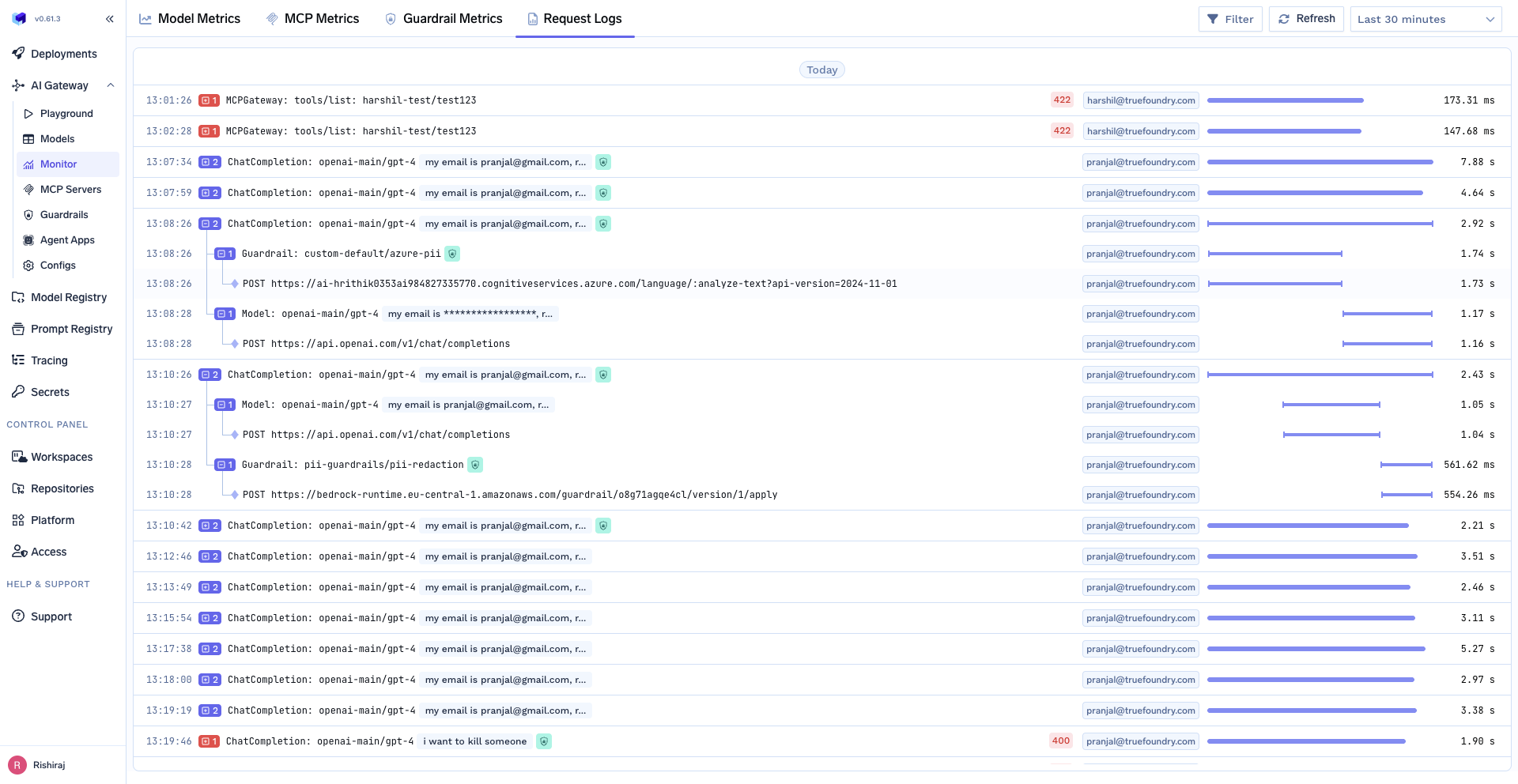

- ✅ Unmatched Observability & Open Standards: We built our ingestion pipeline on the OpenTelemetry (OTel) standard. This allows us to provide incredibly granular trace-level insights—like seeing the exact latency of a Guardrail check versus the LLM call itself. It also gives you the freedom to forward this standardized data to any other observability platform like Datadog with ease.
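
To show what trace-level granularity means in practice, here is a minimal sketch of nested spans that time a guardrail check separately from the LLM call. It uses a hand-rolled, standard-library stand-in rather than the OpenTelemetry SDK, so `Tracer`, `Span`, and the span names are hypothetical; a real pipeline would create spans through the OTel API and export them to a collector.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    name: str
    parent: Optional[str]  # name of the enclosing span, if any
    start_ns: int
    end_ns: int = 0

    @property
    def duration_ns(self) -> int:
        return self.end_ns - self.start_ns


class Tracer:
    """Toy tracer that records finished spans and their parent names."""

    def __init__(self) -> None:
        self.finished = []
        self._stack = []  # currently open spans, innermost last

    @contextmanager
    def span(self, name: str):
        parent = self._stack[-1].name if self._stack else None
        current = Span(name, parent, time.perf_counter_ns())
        self._stack.append(current)
        try:
            yield current
        finally:
            current.end_ns = time.perf_counter_ns()
            self._stack.pop()
            self.finished.append(current)


def demo() -> dict:
    """Trace one request and return {span name: (parent, duration in ns)}."""
    tracer = Tracer()
    with tracer.span("gateway_request"):
        with tracer.span("guardrail_check"):
            time.sleep(0.005)  # placeholder for a guardrail evaluation
        with tracer.span("llm_call"):
            time.sleep(0.02)   # placeholder for the upstream model call
    return {s.name: (s.parent, s.duration_ns) for s in tracer.finished}


if __name__ == "__main__":
    for name, (parent, duration_ns) in demo().items():
        print(f"{name} (parent={parent}): {duration_ns / 1e6:.1f} ms")
```

Since each child span carries its own start and end time plus a link to its parent, the guardrail latency and the model latency can be read off independently instead of being folded into one request-level number.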

Conclusion: Raising the Bar for Enterprise Observability

The journey to provide world-class analytics is filled with tempting shortcuts and easy compromises. We faced a critical operational challenge, rejected the industry's standard solutions, and engineered a superior architecture from first principles.

The result is a platform that delivers on all fronts: blazing-fast analytics, ironclad security and data sovereignty, and a zero-maintenance experience that lets you focus on building, not on managing infrastructure. We believe this is the new standard for enterprise-grade observability in the AI space.

Ready to see what a truly zero-maintenance, secure, and powerful observability platform looks like? Schedule a demo with us today.
