n8n integration with TrueFoundry's AI Gateway

n8n is popular because it is easy to use. You drag nodes onto a canvas and build automations quickly. Many teams now add AI steps to these flows, like drafting replies or summarizing text.

As these workflows move into daily operations, new problems show up. API keys end up in many places. Each workflow may call a different model vendor. Finance gets several invoices. Security loses a single audit trail. Engineering finds it hard to swap models across dozens of flows.

TrueFoundry AI Gateway addresses these problems. It becomes the single place that handles policy, cost limits, routing, and logging for every LLM call. Builders keep using n8n the same way, while platform, security, and finance teams get the control they need.

Why This Integration Matters Now: A Real-World Example

Imagine a common use case: automating customer support. In an n8n workflow, this might look simple. An incoming email is passed to an LLM to draft a reply. The generated text is then sent to a second sentiment-analysis model to flag urgent cases for human review.

But when each model call goes directly to its vendor, the teams behind the workflow run into trouble:

- Finance sees multiple invoices from different AI vendors with no clear way to attribute costs or enforce budgets.

- Security loses a centralized audit trail, making compliance reviews for SOC 2 or HIPAA a nightmare.

- Engineering faces a rigid system. Swapping the sentiment model for a more cost-effective or better-performing alternative requires manually updating every n8n workflow that uses it.

Routing all n8n traffic through the TrueFoundry AI Gateway changes the picture. The Gateway acts as a single control plane: the people building the support workflow keep the drag-and-drop speed they love, while the organization gains governance and cost control over every LLM call.

Connecting n8n to the Gateway: A 3-Step Guide

Wiring n8n into the Gateway is a one-time setup that takes minutes.

Prerequisites:

- A personal access token from your TrueFoundry account.

- Your organization's Gateway base URL (e.g., https://<your-org>.truefoundry.cloud/api/llm).

- An active n8n instance (either self-hosted or on n8n Cloud).

Steps:

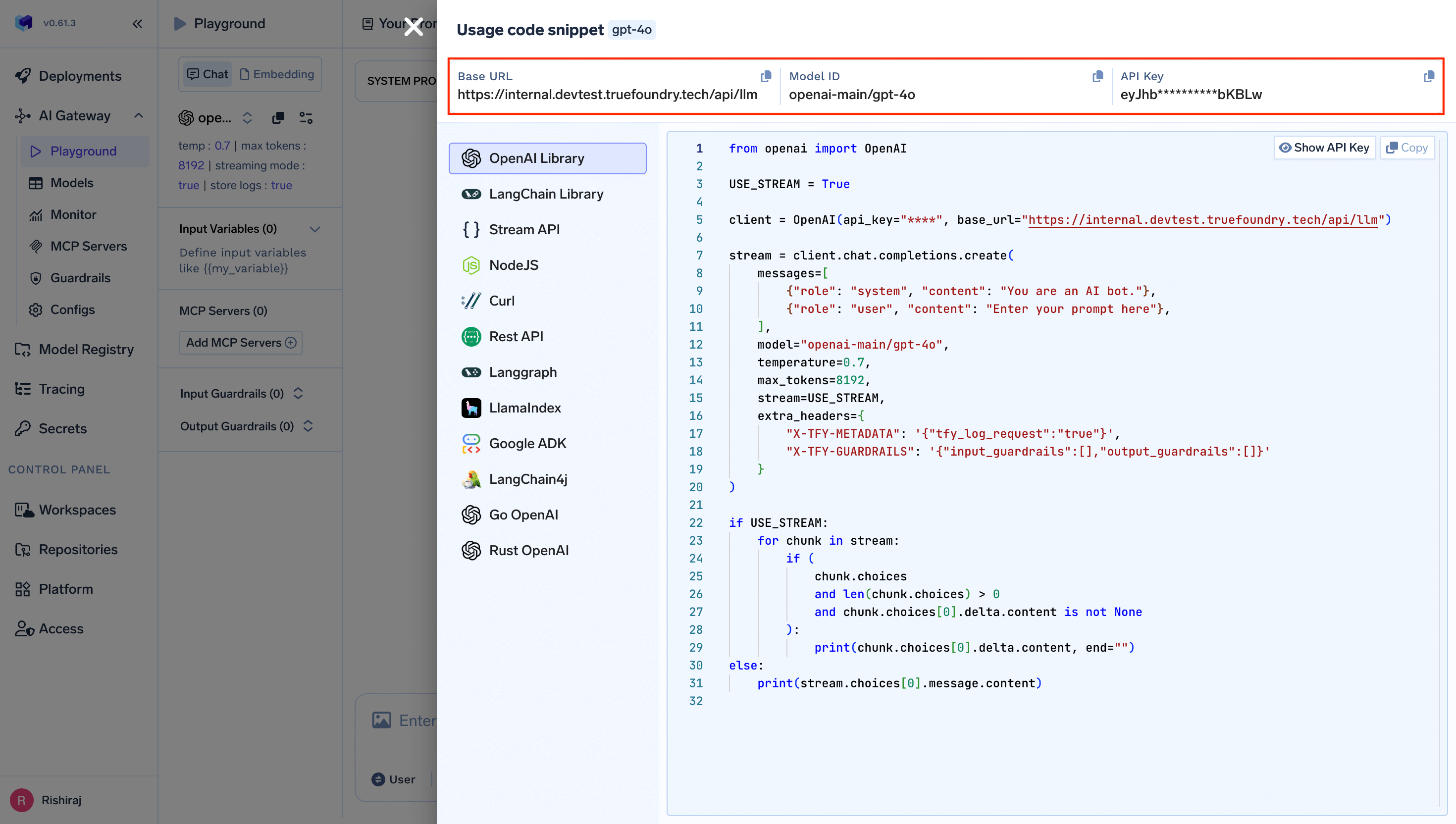

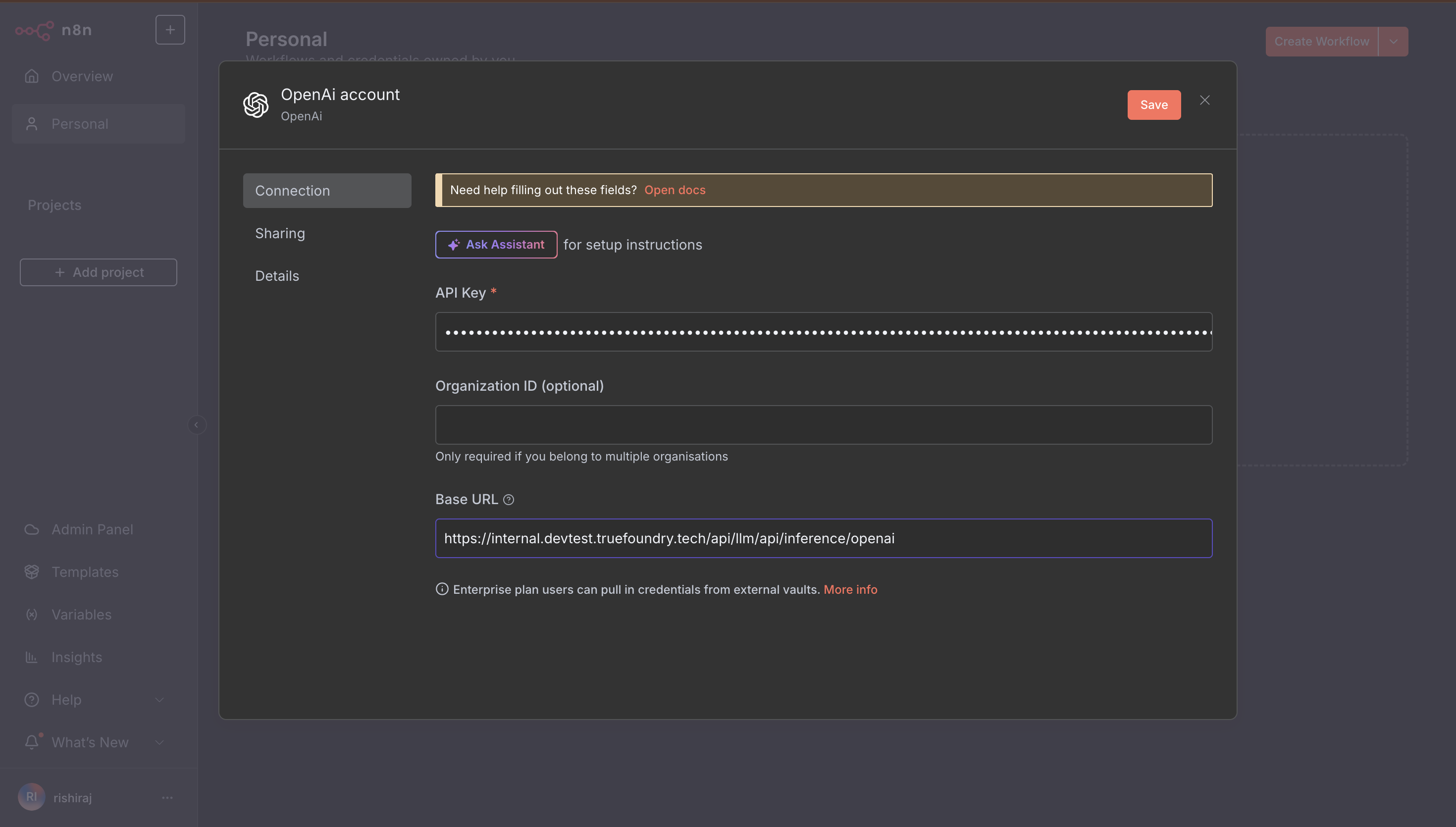

- Create a Credential in n8n: Navigate to the Credentials section in your n8n instance and click Create Credentials. Search for and select your desired model provider (e.g., OpenAI, Anthropic).

- Configure the Endpoint: In the credential configuration screen, paste your TrueFoundry Gateway URL into the Base URL field and your TrueFoundry access token into the API Key field.

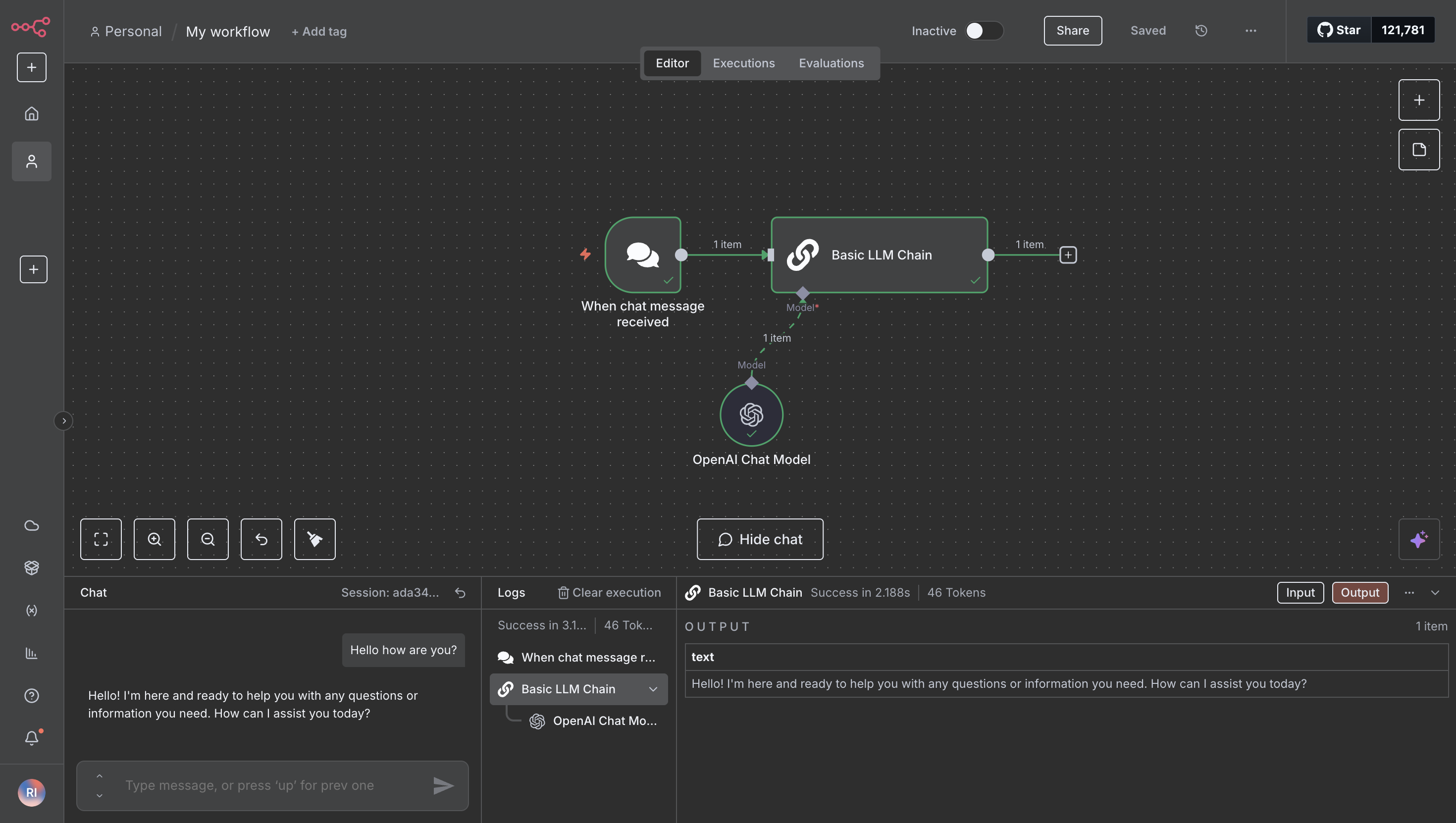

(Note: n8n may show a warning that it can’t list models from the base URL. This is expected: you enter the model ID manually in the next step, so the warning does not block the setup.)

- Specify Your Model in the Workflow: Drop a chat node (e.g., OpenAI Chat) into your workflow canvas. In the node settings, set Model selection to By ID and paste the model ID from your TrueFoundry dashboard (e.g., openai-main/gpt-4o or groq/gemma-7b-it).

That’s it. Run your workflow. Every LLM request from that point forward will be routed through the TrueFoundry Gateway, giving you immediate observability and policy enforcement.
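Behind the scenes, the credential points n8n's OpenAI-compatible node at the Gateway instead of the vendor's API. The Python sketch below builds, but does not send, an equivalent request using only the standard library, which can help when checking the URL, token, and model ID outside n8n. It assumes the Gateway accepts OpenAI-style `POST <base URL>/chat/completions` requests with a bearer token; the function name `build_gateway_request` and the placeholder values are hypothetical, so confirm the exact path and model IDs in your TrueFoundry dashboard.

```python
import json
import urllib.request


def build_gateway_request(base_url: str, token: str, model_id: str,
                          prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat request aimed at the Gateway."""
    # Assumption: the Gateway exposes an OpenAI-compatible /chat/completions path.
    url = base_url.rstrip("/") + "/chat/completions"
    body = {
        "model": model_id,  # the model ID copied from the TrueFoundry dashboard
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # TrueFoundry access token
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_gateway_request(
        "https://<your-org>.truefoundry.cloud/api/llm",  # placeholder base URL
        "<your-access-token>",                           # placeholder token
        "openai-main/gpt-4o",
        "Draft a polite reply to this support email.",
    )
    print(req.get_method(), req.full_url)
```

Once real values are filled in, passing the returned object to `urllib.request.urlopen` would send it.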

What Your Teams Gain Instantly

Once n8n runs through the TrueFoundry AI Gateway, your organization gains new capabilities without changing the logic of existing workflows. Because control is centralized, the benefits for observability, security, and financial governance apply from the first request. Here’s a breakdown of what that looks like.

n8n cost tracking

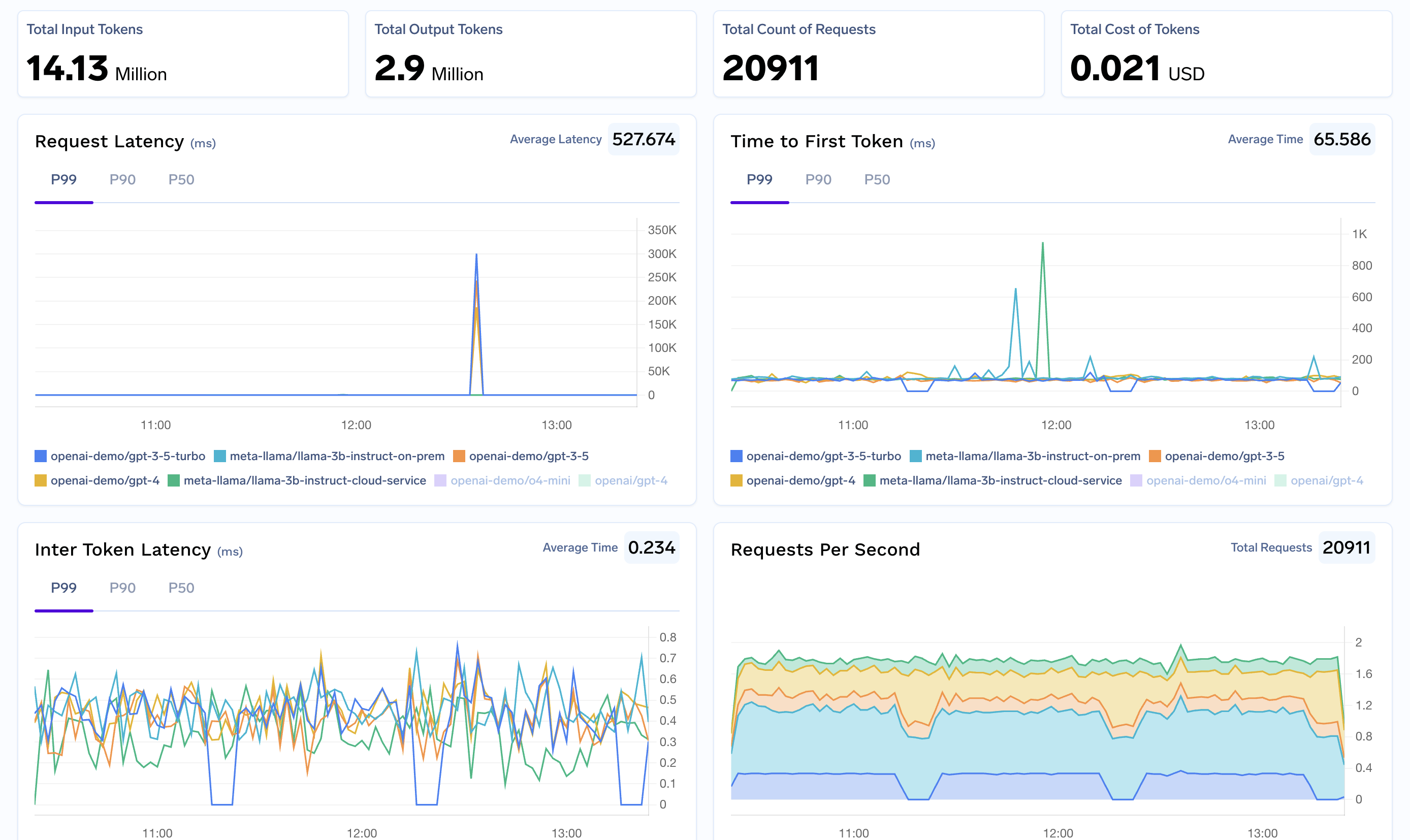

For finance and operations teams, gaining real-time visibility into AI expenditure is critical. The AI Gateway provides a comprehensive solution for n8n cost tracking from day one.

- Centralized Cost Dashboard: A single dashboard offers complete n8n cost monitoring, breaking down usage with metrics such as token consumption, request counts, and p50/p95 latency. All data can be mapped directly to specific projects and teams, giving you a granular view of where your budget is going.

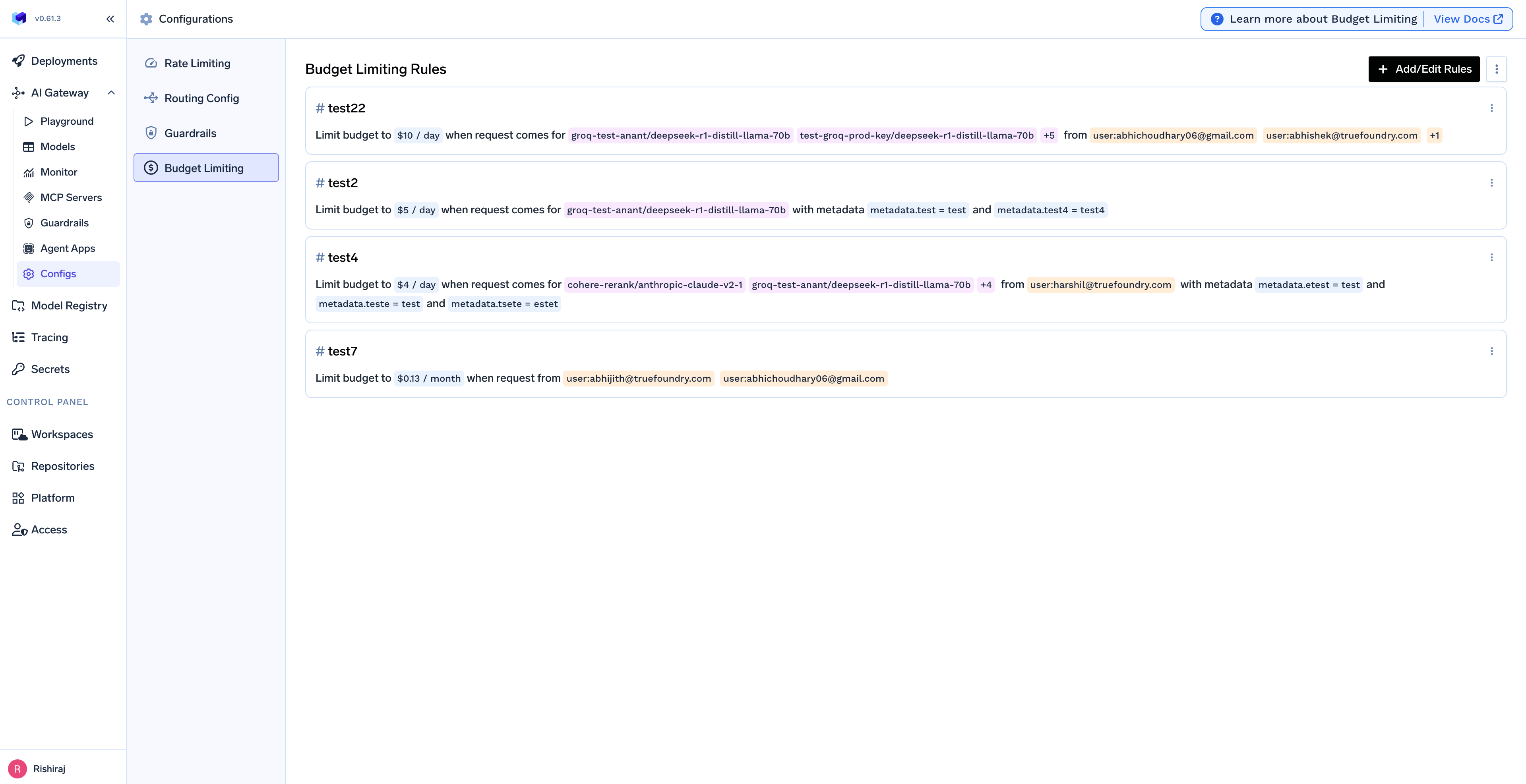

- Proactive Budget Management: Set budgets and configure alerts to prevent cost overruns before they happen. If a workflow starts consuming too many resources, you'll be notified instantly. This allows you to experiment safely, knowing that spikes during testing will be caught before they impact production budgets.

- Simplified Vendor Billing: The gateway can route calls to many model providers, including OpenAI, Anthropic, Mistral, Groq, and self-hosted engines, yet the finance team sees a single, unified billing surface, which greatly simplifies invoicing and vendor management.

- Isolate Expensive Workflows: With deep n8n tracking at the gateway level, it’s simple to spot specific nodes or workflows that drive the most cost. You can then apply caps or routing rules directly from the gateway, optimizing spend without ever needing to touch the n8n canvas.
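To make the dashboard metrics concrete, here is a minimal Python sketch of how per-request usage records might roll up into per-project request counts, token totals, spend, p50/p95 latency, and a budget alert flag. The `UsageRecord` fields, the budget figures, and the 80% alert threshold are hypothetical; this illustrates the idea and is not TrueFoundry's implementation or API.

```python
from dataclasses import dataclass
from statistics import quantiles
from typing import Dict, List, Optional


@dataclass
class UsageRecord:
    # Hypothetical shape of one logged LLM call.
    project: str
    tokens: int
    cost_usd: float
    latency_ms: float


def summarize(records: List[UsageRecord], budgets_usd: Dict[str, float],
              alert_ratio: float = 0.8) -> Dict[str, dict]:
    """Roll usage up per project and flag projects at or above alert_ratio of budget."""
    by_project: Dict[str, List[UsageRecord]] = {}
    for record in records:
        by_project.setdefault(record.project, []).append(record)

    summary = {}
    for project, rows in by_project.items():
        latencies = [r.latency_ms for r in rows]
        if len(latencies) > 1:
            # 99 cut points: index 49 is the 50th percentile, index 94 the 95th.
            cuts = quantiles(latencies, n=100, method="inclusive")
            p50, p95 = cuts[49], cuts[94]
        else:
            p50 = p95 = latencies[0]  # a single sample is its own percentile
        spend = sum(r.cost_usd for r in rows)
        budget: Optional[float] = budgets_usd.get(project)
        summary[project] = {
            "requests": len(rows),
            "tokens": sum(r.tokens for r in rows),
            "spend_usd": spend,
            "p50_ms": p50,
            "p95_ms": p95,
            "over_alert_threshold": budget is not None and spend >= alert_ratio * budget,
        }
    return summary
```

Alerting on a fraction of the budget, rather than the full amount, is what gives a team time to react before an overrun.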

n8n security and guardrails

Security can't be an afterthought. The AI Gateway acts as a central checkpoint, applying powerful n8n guardrails to every LLM request automatically, ensuring your data and operations remain secure.

- Access Control at the Edge: Every request is validated against robust security policies. With project-scoped tokens, Role-Based Access Control (RBAC), and custom policy checks applied at the edge, you have fine-grained control over who can access which models and data.

- Automated Data Protection: Reduce data risk without slowing down your builders. The gateway can be configured for automatic PII redaction and to enforce deny-lists, preventing sensitive information from ever reaching the models. These central policies are applied across all n8n workflows by default.

- Unified Audit Trails: For internal reviews and external compliance, clean evidence is key. The gateway’s audit trails record who ran what, when, and with which model. Combined with native n8n logs, these records provide a complete trail for any audit.
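As a rough illustration of what automatic PII redaction means, the Python sketch below masks email addresses and phone-number-like strings in a prompt before it would be forwarded to a model. The two regular expressions and the `redact` function are hypothetical and deliberately simple; real guardrails, including the gateway's own, use far broader detectors, so treat this as a conceptual example rather than TrueFoundry's implementation.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detectors cover far more cases.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace emails and phone-like numbers with placeholder tags."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com or +1 (555) 123-4567 about order 42."))
```

Applying a function like this at one central checkpoint, rather than in each workflow, is what lets the same policy cover every n8n flow by default.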

n8n rate limit

Platform engineering teams can ensure system stability and high performance without editing individual workflows. The gateway provides a central control plane for reliability.

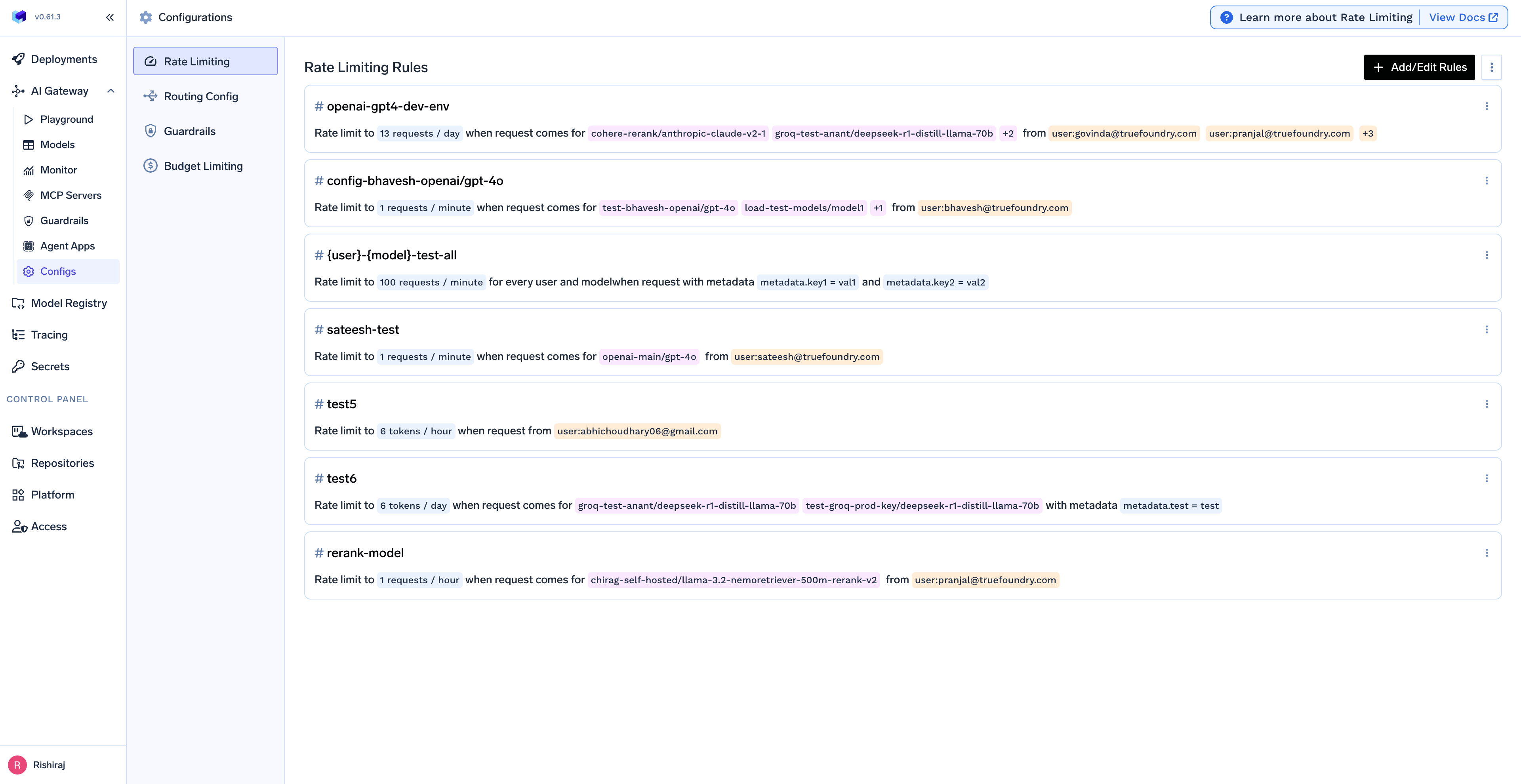

- Consistent Rate Limits and Timeouts: Implement consistent n8n rate limiting and SLOs for each project. This prevents any single workflow from overwhelming a model provider and ensures fair resource allocation across teams, all managed from one place.

- Automated Fallbacks and Retries: Keep your critical workflows running even when a provider has an incident. You can configure the gateway with automatic fallbacks to an alternative model and intelligent retries. This logic is handled centrally, meaning you don't need to update dozens of n8n nodes to build resilience.

- Safe, Seamless Upgrades: Use model aliases to de-risk upgrades. Your n8n nodes can point to a stable alias like claude-3-haiku, while the platform team switches the underlying model version behind the scenes in the gateway, rolling out changes safely and without downtime.
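The alias-plus-fallback idea can be sketched in a few lines of Python: an alias maps to an ordered list of concrete models, and each model is tried with a bounded number of retries before moving to the next. The alias table, the model names in it, `ProviderError`, and the `call_model` callable are hypothetical stand-ins; in practice this logic is configured in the gateway, not written inside n8n.

```python
from typing import Callable, Dict, List

# Hypothetical alias table: n8n nodes reference the alias; the platform team edits the list.
ALIASES: Dict[str, List[str]] = {
    "claude-3-haiku": ["anthropic-main/claude-3-haiku", "bedrock/claude-3-haiku"],
}


class ProviderError(Exception):
    """Raised by a (hypothetical) provider call that failed."""


def complete_with_fallback(alias: str, prompt: str,
                           call_model: Callable[[str, str], str],
                           retries_per_model: int = 2) -> str:
    """Resolve an alias, then try each target model in order with bounded retries."""
    errors: List[str] = []
    # An unknown alias is treated as a concrete model ID.
    for model in ALIASES.get(alias, [alias]):
        for attempt in range(1, retries_per_model + 1):
            try:
                return call_model(model, prompt)
            except ProviderError as exc:
                errors.append(f"{model} attempt {attempt}: {exc}")
                # A real implementation would back off (e.g. exponential delay) here.
    raise ProviderError("all targets failed: " + "; ".join(errors))
```

Because the n8n node only ever sends the alias, changing the list behind it upgrades or reroutes every workflow at once.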

n8n logging and end-to-end observability

For data, analytics, and operations teams, debugging complex AI workflows can be a challenge. The gateway integrates n8n tracking with deep model tracing to provide a single, unified view of system health.

- End-to-End Request Tracing: Every call passing through the gateway is stamped with correlation IDs. This makes it easy to follow a single request all the way from the initial n8n node to the LLM and back, eliminating guesswork in distributed systems.

- Faster Debugging: Combine the power of two logging systems. Use n8n logs for high-level workflow context and dive into the gateway's traces for granular breakdowns of model latency, token counts, and error details. You can even replay traces to reproduce issues quickly.

- Out-of-the-Box Health Signals: The gateway exposes a rich set of metrics ready for your existing dashboards and alerting tools (like Datadog or Prometheus). This, combined with n8n's monitoring endpoints, gives you a complete and immediate overview of your entire system's health.
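To show how a shared correlation ID ties the two logging systems together, here is a small Python sketch that groups n8n workflow events and gateway trace records by that ID, then lists slow model calls alongside the n8n node that made them. Both record layouts, the `correlation_id` field name, and the helper functions are hypothetical; in a real setup you would use whatever identifiers your gateway traces and n8n logs actually expose.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def join_traces(n8n_events: List[dict], gateway_traces: List[dict]) -> Dict[str, dict]:
    """Group n8n events and gateway traces that share a correlation ID."""
    joined: Dict[str, dict] = defaultdict(lambda: {"n8n": [], "gateway": []})
    for event in n8n_events:
        joined[event["correlation_id"]]["n8n"].append(event)
    for trace in gateway_traces:
        joined[trace["correlation_id"]]["gateway"].append(trace)
    return dict(joined)


def slow_requests(joined: Dict[str, dict], threshold_ms: float) -> List[Tuple[str, str, str, float]]:
    """List (correlation_id, node, model, latency_ms) for calls above a threshold, slowest first."""
    rows = []
    for cid, group in joined.items():
        node = group["n8n"][0]["node"] if group["n8n"] else "<unknown node>"
        for trace in group["gateway"]:
            if trace["latency_ms"] > threshold_ms:
                rows.append((cid, node, trace["model"], trace["latency_ms"]))
    return sorted(rows, key=lambda row: row[3], reverse=True)
```

The join gives each slow model call its workflow context, which is the combination of n8n logs and gateway traces described above.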

With these pieces in place, finance gets predictable costs, security gets clean audit trails, platform teams get reliable operations, and builders keep their speed. It is a direct path to scaling n8n safely, with real-time tracking, detailed logs, and centralized cost monitoring that work from the first run.

This is just the beginning. Over the next quarter, the TrueFoundry team plans to deepen the integration to further streamline the builder experience. Your feedback is crucial in guiding our roadmap, so feel free to reach out with feature requests.

Built for Speed: ~10ms Latency, Even Under Load


- Handles 350+ RPS on just 1 vCPU, with no tuning needed

- Production-ready with full enterprise support

Compared with LiteLLM:

- TrueFoundry AI Gateway: ~3–4 ms latency, 350+ RPS on 1 vCPU, easy horizontal scaling, and production-ready.

- LiteLLM: high latency, struggles beyond moderate RPS, and lacks built-in scaling; best suited to light or prototype workloads.