Langfuse vs Portkey – Key Differences & Features

A production LLM application fails in ways that would make traditional software engineers uncomfortable. Your chatbot starts hallucinating product information, your RAG system returns irrelevant documents, or your AI assistant begins making expensive API calls in an endless loop. Unlike web servers that crash visibly or databases that throw clear error messages, LLM failures often appear as a subtle degradation in response quality that can persist for hours before anyone notices.

This observability challenge affects every organization running AI in production. In traditional software you can trace every database query and API call, but an LLM application behaves like a black box: a single user prompt can trigger dozens of model calls, complex retrieval operations, and multi-step reasoning chains. When something goes wrong, teams find themselves debugging in the dark.

The LLM observability market has responded with dozens of solutions, and two platforms have become especially popular: Langfuse, the open-source favorite with 15.5K GitHub stars, and Portkey, the comprehensive platform processing 2.5+ trillion tokens. Each takes a fundamentally different approach to the same critical problem: how do you see what your AI is actually doing?

What is Langfuse?

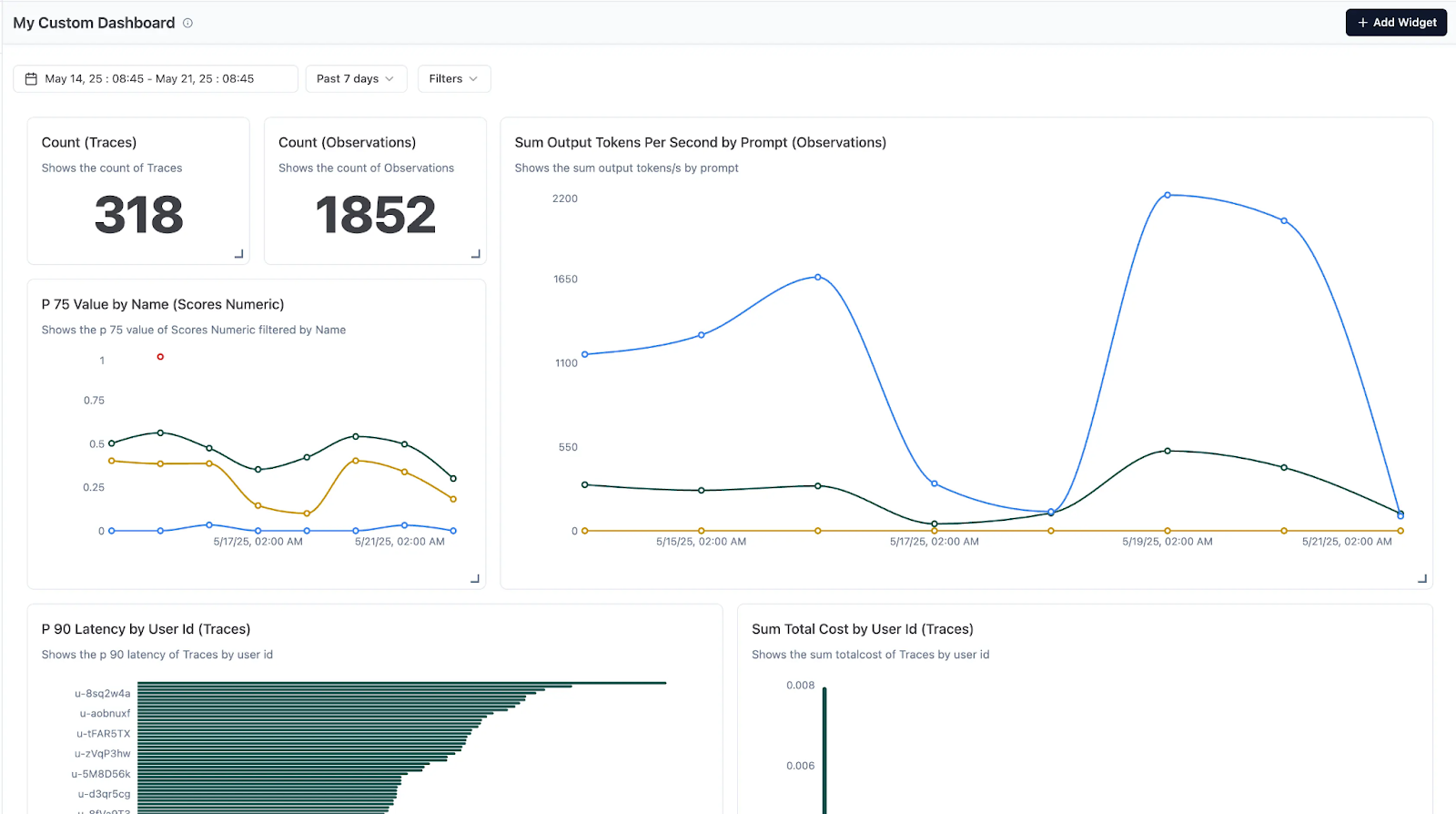

Langfuse is an open-source observability and analytics platform for LLM-powered applications. It lets you monitor prompts, responses, costs, and user feedback in one place. With Langfuse, teams can better understand, debug, and optimize their AI workflows.

Langfuse has become a go-to choice for teams that want comprehensive LLM observability without vendor lock-in. Built by a Berlin-based team that went through Y Combinator, the platform has attracted 40,000+ active users and raised $4.5 million in funding, an impressive showing for an open-source project.

Advanced Traceability Architecture

Langfuse's hierarchical tracing system creates a comprehensive map of every interaction within your LLM application stack. Each trace represents a complete user session or workflow, with nested spans capturing individual operations like model calls, retrievals, function executions, and data transformations. This tree-like structure mirrors the actual execution flow of complex AI applications.
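As a rough illustration of the trace/span hierarchy described above, here is a minimal, library-free Python sketch. The `Span` class, its `child()` and `walk()` methods, and the span names are hypothetical; this is not Langfuse's SDK or data model, only the tree shape such a trace takes.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class Span:
    """One operation in a trace tree (hypothetical model, not Langfuse's SDK)."""
    name: str
    children: list[Span] = field(default_factory=list)

    def child(self, name: str) -> Span:
        # Create a nested span (e.g. a retrieval inside a request) and attach it.
        span = Span(name)
        self.children.append(span)
        return span

    def walk(self, depth: int = 0) -> Iterator[tuple[int, Span]]:
        # Depth-first, pre-order traversal: parents before their children,
        # children in the order they were created.
        yield depth, self
        for c in self.children:
            yield from c.walk(depth + 1)


# The root plays the role of the trace: one complete user request.
trace = Span("user-request")
retrieval = trace.child("retrieve-documents")
retrieval.child("embed-query")
retrieval.child("vector-search")
trace.child("llm-generate")

for depth, span in trace.walk():
    print("  " * depth + span.name)
```

Printing the tree shows the nesting: the retrieval span contains the embedding and vector-search spans, and the generation step sits beside it under the same trace. A real tracer would also record timings, inputs, outputs, and costs on each node.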

Technical Architecture That Scales

Langfuse's newer architecture is built on ClickHouse, a columnar database designed for analytical workloads that can process over a billion rows and tens of gigabytes of data per server per second, which makes most queries much faster. This lets enterprise customers like Twilio and Khan Academy run production workloads at scale; Khan Academy, for example, uses Langfuse with more than 100 users across 7 products and 4 infrastructure teams.

Enterprise-Grade Security

For an open-source project, Langfuse takes security seriously. They've achieved SOC 2 Type II and ISO 27001 certifications, with HIPAA compliance available for healthcare applications. The platform supports both cloud and self-hosted deployments, giving security-conscious organizations full control over their data.

The Open-Source Advantage

What sets Langfuse apart is its commitment to openness. The entire codebase is available on GitHub, the data export APIs have no restrictions, and there's no artificial feature gating between free and paid tiers. This philosophy resonates strongly with engineering teams who've been burned by vendor lock-in.

The community has responded enthusiastically. The documentation covers integrations with the major LLM frameworks and SDKs, including LangChain, LlamaIndex, the OpenAI SDK, and the Anthropic SDK, along with dozens of others, and community-contributed connectors handle edge cases that proprietary platforms often ignore.

Limitations in the Real World

Despite its strengths, Langfuse faces challenges that emerge at scale. The learning curve is steep. Teams report spending weeks understanding the full feature set before seeing value. The interface can feel overwhelming for simple use cases where teams just want basic cost and performance monitoring.

Resource requirements grow quickly with usage. While the minimum specs seem reasonable, production deployments often need significantly more memory and compute than advertised. ClickHouse, while powerful, adds operational complexity that not all teams want to manage.

Most critically, Langfuse is purely an observability platform. Teams need separate solutions for LLM routing, fallback handling, rate limiting, and cost controls. This works well for organizations with strong infrastructure teams, but creates integration challenges for others.

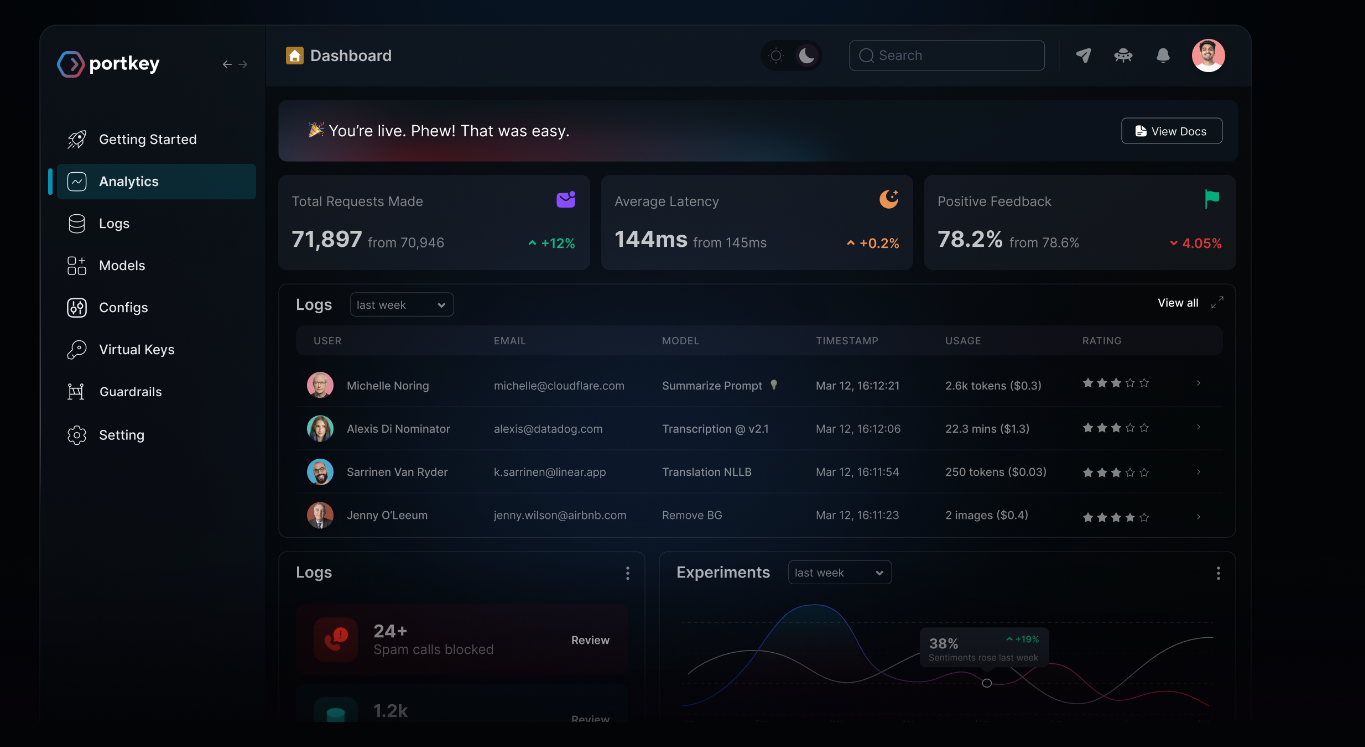

What is Portkey?

Portkey is a platform that helps manage and optimize LLM usage across different providers. It offers features like caching, fallback, rate limiting, and observability to make AI apps more reliable and cost-efficient. Teams use Portkey to streamline their AI infrastructure and scale with confidence.

Portkey has built a comprehensive AI infrastructure platform that extends well beyond observability into production LLM operations. Serving 650+ organizations and processing 2.5+ trillion tokens, Portkey positions itself as a solution for enterprise AI teams.

Comprehensive Feature Coverage

Unlike pure observability platforms, Portkey provides a complete AI gateway covering the full stack of LLM infrastructure capabilities. Its configuration-driven approach lets teams define complex routing logic, fallback strategies, and governance policies without code changes. This appeals to organizations that need sophisticated LLM operations but lack the engineering resources to build everything from scratch.
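To show what "configuration-driven" means in practice, here is a small sketch in which the fallback policy is plain data and a generic loop interprets it. The `CONFIG` shape, the provider and model names, and the `fake_call` transport are hypothetical; they do not reproduce Portkey's actual config schema or SDK.

```python
from typing import Callable

# Hypothetical routing policy expressed as data: try targets in order.
CONFIG = {
    "strategy": "fallback",
    "targets": [
        {"provider": "provider-a", "model": "model-large"},
        {"provider": "provider-b", "model": "model-medium"},
    ],
}


class ProviderError(Exception):
    """A failed upstream call (timeout, 5xx, rate limit, ...)."""


def route(config: dict, prompt: str,
          call_provider: Callable[[str, str, str], str]) -> str:
    if config["strategy"] != "fallback":
        raise ValueError(f"unsupported strategy: {config['strategy']}")
    errors = []
    for target in config["targets"]:
        try:
            return call_provider(target["provider"], target["model"], prompt)
        except ProviderError as exc:
            # Record the failure and fall through to the next target.
            errors.append(f"{target['provider']}: {exc}")
    raise ProviderError("all targets failed: " + "; ".join(errors))


def fake_call(provider: str, model: str, prompt: str) -> str:
    # Stub transport: provider-a is "down", provider-b answers.
    if provider == "provider-a":
        raise ProviderError("503 Service Unavailable")
    return f"[{provider}/{model}] {prompt}"


print(route(CONFIG, "hello", fake_call))  # [provider-b/model-medium] hello
```

Changing the fallback order or adding a provider means editing `CONFIG`, which a gateway could load from a file or a UI, rather than changing `route` itself.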

Enterprise-First Design

Portkey's enterprise focus shows in its comprehensive compliance certifications: SOC 2 Type II, ISO 27001, HIPAA, and GDPR. The platform supports deployment models ranging from SaaS to fully air-gapped on-premises installations.

Role-based access control integrates with enterprise identity providers, audit logging meets regulatory requirements, and the platform provides detailed cost attribution across teams, projects, and cost centers. These capabilities matter enormously for large organizations where LLM usage can quickly become ungovernable.

Performance Reality Check

Here's where Portkey's comprehensive approach reveals its costs. Published benchmarks show Portkey running significantly slower than specialized gateways, with 228% higher latency than Kong AI Gateway in standardized tests. Even the 20-40ms of added latency that Portkey itself reports can become a problem for latency-sensitive applications.

The performance penalty stems from Portkey's feature-rich architecture. Every request passes through multiple layers: routing logic, guardrails evaluation, prompt management, observability collection, and cost calculation. While each feature adds value, they collectively create substantial overhead.
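The cumulative effect of stacking layers in the request path can be sketched with a simple middleware chain. The layer names below mirror the ones listed in the paragraph above, but their bodies are trivial hypothetical stand-ins, and this is not how Portkey is actually implemented. The point is only that every layer runs serially before the model call, so each layer's cost is added to the request's latency.

```python
from __future__ import annotations

import time
from functools import reduce
from typing import Callable

Handler = Callable[[dict], dict]
Middleware = Callable[[Handler], Handler]


def make_layer(name: str, work: Callable[[dict], object]) -> Middleware:
    """Wrap a handler so `work` runs (and is timed) before the request moves on."""
    def middleware(next_handler: Handler) -> Handler:
        def handle(request: dict) -> dict:
            start = time.perf_counter()
            work(request)
            request["overhead_ms"][name] = (time.perf_counter() - start) * 1000
            return next_handler(request)
        return handle
    return middleware


def build_pipeline(layers: list[Middleware], model_call: Handler) -> Handler:
    # Wrap from the inside out so layers[0] is the outermost (first to run).
    return reduce(lambda inner, mw: mw(inner), reversed(layers), model_call)


# Hypothetical stand-ins for the gateway stages named in the text.
LAYERS = [
    make_layer("routing", lambda r: r.setdefault("target", "model-a")),
    make_layer("guardrails", lambda r: r.update(blocked="DROP TABLE" in r["prompt"])),
    make_layer("prompt_management", lambda r: r.update(prompt=r["prompt"].strip())),
    make_layer("observability", lambda r: r.setdefault("log", []).append(r["prompt"])),
    make_layer("cost_calculation", lambda r: r.update(est_tokens=len(r["prompt"].split()))),
]


def fake_model(request: dict) -> dict:
    return {**request, "response": f"echo from {request['target']}"}


handler = build_pipeline(LAYERS, fake_model)
result = handler({"prompt": "  hello  ", "overhead_ms": {}})
print(list(result["overhead_ms"]))  # layers in execution order
print(f"gateway overhead: {sum(result['overhead_ms'].values()):.4f} ms")
```

In a real gateway, layers may involve network calls, classifiers, or log writes rather than dictionary updates, so their combined cost is correspondingly larger.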

Resource consumption reflects this complexity. Production Portkey deployments require significantly more compute and memory than pure gateway solutions. Teams report difficulties optimizing performance for high-throughput applications.

Pricing Complexity

Portkey's pricing model reflects its comprehensive nature but creates predictability challenges. The platform offers multiple tiers:

- Starter: $49/month for basic features

- Production: Usage-based pricing with various add-ons

- Enterprise: Custom pricing with full feature access

The usage-based model can create budget surprises at scale, pushing many organizations to look into Portkey alternatives. Unlike simple per-request pricing, Portkey's costs vary with feature usage, data retention periods, and compliance requirements. Teams report difficulty predicting monthly costs, especially during development phases with unpredictable traffic patterns.

The platform's focus on external API orchestration also limits flexibility. Organizations wanting to deploy self-hosted models or implement custom routing logic may find themselves constrained by Portkey's architecture decisions.

Langfuse vs Portkey: Key Differences

When comparing Langfuse and Portkey, the main differences come down to focus and scope. Langfuse is built as an open-source observability and evaluation layer, giving developers granular insights into prompts, costs, and model performance.

Portkey, meanwhile, positions itself as a full AI gateway, combining observability with advanced features like multi-model routing, caching, guardrails, and governance. In essence, Langfuse is more about transparency and debugging, while Portkey is about reliability and scaling production workloads.

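Here are the key differences between the two platforms:

- Scope: Langfuse is an open-source observability and evaluation layer; Portkey is an AI gateway that bundles observability with routing, caching, guardrails, and governance.

- Request path: every Portkey request passes through its routing, guardrail, and logging layers; Langfuse does not handle routing, fallbacks, rate limiting, or cost controls, so those need separate tools.

- Deployment: Langfuse runs in the cloud or self-hosted (with ClickHouse); Portkey ranges from SaaS to fully air-gapped on-premises installations.

- Compliance: Langfuse holds SOC 2 Type II and ISO 27001, with HIPAA available; Portkey lists SOC 2 Type II, ISO 27001, HIPAA, and GDPR.

- Pricing: Langfuse avoids feature gating between free and paid tiers; Portkey starts at $49/month and moves to usage-based and custom enterprise pricing that teams report is hard to predict.

- Main trade-offs: Langfuse has a steep learning curve and adds ClickHouse operations; Portkey adds latency (20-40ms by its own figures).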

Both Langfuse and Portkey bring unique strengths to LLM development. Langfuse is best suited for teams that want open-source observability, detailed evaluation, and complete control over their workflows. Portkey, on the other hand, is the stronger choice for organizations focused on scaling, reliability, and managing multi-model infrastructure at the production level.

Langfuse vs Portkey: When to Use Portkey

Portkey is designed for teams moving beyond experimentation and into production with LLMs. It serves as a universal AI gateway, observability layer, and governance tool, making it ideal when reliability, scale, and multi-provider flexibility matter.

Multi-Model and Multi-Provider Access: If your application needs to work across several LLMs (OpenAI, Anthropic, Cohere, Mistral, or open-source models), Portkey’s unified API removes provider lock-in. You can swap or route between 250+ models without rewriting code, which is critical for resilience and performance optimization.

Reliability at Scale: For production workloads, Portkey offers automatic retries, failover, circuit breakers, and conditional routing. These features ensure uptime and consistent performance even under heavy traffic or when a provider has latency spikes.
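Of the reliability features listed, the circuit breaker is the least self-explanatory. Below is a minimal sketch of the classic closed/open/half-open pattern; it is a generic illustration with hypothetical names (`CircuitBreaker`, `failure_threshold`, `reset_after`), not Portkey's implementation. In a gateway, an open breaker would typically make the router skip that provider and fail over to the next target.

```python
from __future__ import annotations

import time
from typing import Callable, Optional


class CircuitBreaker:
    """Generic circuit breaker (hypothetical names, not Portkey's implementation).

    closed:    calls pass through; consecutive failures are counted
    open:      calls are rejected until `reset_after` seconds have passed
    half-open: the next call is a trial; success closes, failure re-opens
    """

    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_after:
            return "half-open"
        return "open"

    def call(self, fn: Callable[[], str]) -> str:
        state = self.state
        if state == "open":
            raise RuntimeError("circuit open: provider skipped")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        self.failures = 0
        self.opened_at = None  # a success closes the circuit
        return result


# Deterministic demo with a fake clock instead of real time.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_after=10.0, clock=lambda: now[0])


def flaky() -> str:
    raise ConnectionError("provider timeout")


for _ in range(2):
    try:
        breaker.call(flaky)
    except ConnectionError:
        pass
print(breaker.state)  # open
now[0] = 10.0
print(breaker.state)  # half-open
print(breaker.call(lambda: "ok"), breaker.state)  # ok closed
```

Rejecting calls while the breaker is open spares a struggling provider from retry storms and lets the request move to a healthy fallback immediately instead of waiting on a timeout.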

Cost and Latency Optimization: Built-in simple and semantic caching reduces redundant requests, cutting inference costs and speeding up responses. This is especially useful for apps with repeated queries or high-traffic user bases.
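The difference between simple and semantic caching can be shown with a small sketch. Simple caching only hits on an identical prompt string; semantic caching also hits when a new prompt is close enough in embedding space to a cached one. The `toy_embed` bag-of-words function is a stand-in assumption for a real embedding model, and the 0.8 similarity threshold is arbitrary; neither reflects how Portkey computes similarity.

```python
from __future__ import annotations

import math
import re
from collections import Counter
from typing import Callable, Optional


def toy_embed(text: str) -> Counter:
    # Stand-in for a real embedding model: a bag-of-words count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[tok] for tok, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class LLMCache:
    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.exact: dict[str, str] = {}                # simple cache
        self.semantic: list[tuple[Counter, str]] = []  # (embedding, response)

    def get(self, prompt: str) -> Optional[str]:
        if prompt in self.exact:  # simple cache: identical prompt string
            return self.exact[prompt]
        query = toy_embed(prompt)  # semantic cache: most similar stored prompt
        best_score, best_response = 0.0, None
        for emb, response in self.semantic:
            score = cosine(query, emb)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, prompt: str, response: str) -> None:
        self.exact[prompt] = response
        self.semantic.append((toy_embed(prompt), response))


def cached_completion(cache: LLMCache, prompt: str, llm: Callable[[str], str]) -> str:
    hit = cache.get(prompt)
    if hit is not None:
        return hit
    response = llm(prompt)  # cache miss: pay for a real model call
    cache.put(prompt, response)
    return response


calls: list[str] = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return f"answer to: {prompt}"


cache = LLMCache()
cached_completion(cache, "What is your refund policy?", fake_llm)  # miss
cached_completion(cache, "What is your refund policy?", fake_llm)  # exact hit
cached_completion(cache, "what is your refund policy", fake_llm)   # semantic hit
cached_completion(cache, "How do I reset my password?", fake_llm)  # miss
print(len(calls))  # 2
```

The threshold is the key trade-off: set it too low and users can receive an answer cached for a different question; set it too high and the semantic layer rarely hits.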

Security and Governance: Portkey manages API credentials through virtual keys, applies rate limits, and enforces budget controls. It also includes 50+ guardrails for filtering unsafe or non-compliant outputs, making it enterprise-ready.
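The governance controls in this paragraph can be sketched as a check that runs before any upstream call: the client presents a virtual key ID, the gateway enforces a token-bucket rate limit and a spending cap, and only then uses the real provider secret, which the client never sees. Everything here (`VirtualKey`, `Gateway.authorize`, the limits and costs) is a hypothetical illustration, not Portkey's API, and guardrails are omitted.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class VirtualKey:
    """Hypothetical virtual key: clients only ever see `key_id`."""
    key_id: str
    provider_secret: str   # real provider credential, held by the gateway only
    rate_per_s: float      # token-bucket refill rate (requests per second)
    burst: int             # bucket capacity
    budget_usd: float      # hard spending cap for this key
    spent_usd: float = 0.0
    tokens: float = field(init=False, default=0.0)
    last_refill: float = field(init=False, default=0.0)


class Gateway:
    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self.clock = clock
        self.keys: dict[str, VirtualKey] = {}

    def register(self, vk: VirtualKey) -> None:
        vk.tokens = float(vk.burst)  # start with a full bucket
        vk.last_refill = self.clock()
        self.keys[vk.key_id] = vk

    def authorize(self, key_id: str, est_cost_usd: float) -> str:
        vk = self.keys.get(key_id)
        if vk is None:
            return "unknown_key"
        # Refill the bucket in proportion to elapsed time, capped at `burst`.
        now = self.clock()
        vk.tokens = min(vk.burst, vk.tokens + (now - vk.last_refill) * vk.rate_per_s)
        vk.last_refill = now
        if vk.tokens < 1:
            return "rate_limited"
        if vk.spent_usd + est_cost_usd > vk.budget_usd:
            return "over_budget"
        vk.tokens -= 1
        vk.spent_usd += est_cost_usd
        # On "ok", the gateway would call the provider with vk.provider_secret.
        return "ok"


now = [0.0]
gw = Gateway(clock=lambda: now[0])
gw.register(VirtualKey("team-a", "sk-hidden", rate_per_s=1.0, burst=2, budget_usd=0.05))
print([gw.authorize("team-a", 0.01) for _ in range(3)])  # ['ok', 'ok', 'rate_limited']
now[0] = 5.0
print([gw.authorize("team-a", 0.02) for _ in range(2)])  # ['ok', 'over_budget']
print(gw.authorize("unknown", 0.01))  # unknown_key
```

Keeping the provider secret behind the gateway means a leaked virtual key can be revoked or capped without rotating the underlying provider credential.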

Agentic and Complex Workflows: When building AI agents or multi-agent systems, Portkey provides the orchestration layer that manages routing, observability, and guardrails across agents, keeping workflows stable as they scale.

Use Portkey when you need to scale LLM applications reliably, optimize costs, manage multiple providers, and enforce enterprise-grade governance, all from one control plane.

Langfuse vs Portkey: When to Use Langfuse

Langfuse is built for teams who want deep visibility into how their LLM applications behave. It’s an open-source observability and evaluation platform, making it ideal when debugging, monitoring, and improving model performance are top priorities.

Tracing and Observability: Use Langfuse when you need detailed traces of every interaction, including prompts, responses, tool calls, retries, and latency. It helps developers see the full journey of a request, making it easier to identify bottlenecks or errors in complex LLM workflows.

Prompt Management and Experimentation: If your application relies heavily on prompt engineering, Langfuse is invaluable. It supports versioning, playground testing, and side-by-side prompt experiments. This makes it easier to iterate quickly while keeping track of what’s working best.
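Prompt versioning boils down to immutable versions plus movable labels. The in-memory sketch below illustrates that idea; `PromptRegistry`, its methods, and the `production`/`latest` label names are hypothetical choices for illustration and are not Langfuse's prompt-management API.

```python
from __future__ import annotations

from string import Template


class PromptRegistry:
    """In-memory prompt store: immutable versions plus movable labels (hypothetical)."""

    def __init__(self) -> None:
        self.versions: dict[str, list[str]] = {}
        self.labels: dict[tuple[str, str], int] = {}

    def save(self, name: str, template: str) -> int:
        # Each save appends a new immutable version, numbered from 1.
        history = self.versions.setdefault(name, [])
        history.append(template)
        self.labels[(name, "latest")] = len(history)
        return len(history)

    def promote(self, name: str, version: int, label: str) -> None:
        # Point a label at a version; rolling back is just promoting an older one.
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"{name!r} has no version {version}")
        self.labels[(name, label)] = version

    def get(self, name: str, label: str = "production") -> tuple[int, Template]:
        version = self.labels[(name, label)]
        return version, Template(self.versions[name][version - 1])


registry = PromptRegistry()
v1 = registry.save("support-answer", "Answer the question: $question")
v2 = registry.save("support-answer", "You are a concise support agent. Answer: $question")
registry.promote("support-answer", v1, "production")

version, tmpl = registry.get("support-answer")  # production still serves v1
print(version, tmpl.substitute(question="How do refunds work?"))
# 1 Answer the question: How do refunds work?
```

Because production traffic resolves a label rather than a hard-coded string, promoting v2 (or rolling back to v1) is a data change, and an experiment can render both versions on the same inputs and compare the results side by side.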

Evaluation and Feedback Loops: Langfuse comes with built-in evaluation pipelines, from automated LLM-as-a-judge scoring to manual labeling and structured datasets. Use it when you want to measure accuracy, reliability, or user satisfaction systematically.
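The core loop of a dataset-based evaluation is simple: run each dataset item through the application, score the output with one or more scorers, and aggregate. The sketch below uses a hypothetical `judge_stub` in place of a real LLM-as-a-judge call, which would prompt a model with a rubric and parse its score; none of the names are Langfuse's API.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass
class Item:
    question: str
    expected: str


Scorer = Callable[[str, Item], float]


def exact_match(output: str, item: Item) -> float:
    return float(output.strip().lower() == item.expected.strip().lower())


def judge_stub(output: str, item: Item) -> float:
    # Placeholder for LLM-as-a-judge: a real judge would send the question,
    # output, and a rubric to a model and parse a score from its reply.
    return float(item.expected.lower() in output.lower())


def run_eval(dataset: list[Item], app: Callable[[str], str],
             scorers: dict[str, Scorer]) -> dict[str, float]:
    scores: dict[str, list[float]] = {name: [] for name in scorers}
    for item in dataset:
        output = app(item.question)
        for name, scorer in scorers.items():
            scores[name].append(scorer(output, item))
    # Aggregate each scorer to its mean over the dataset.
    return {name: mean(values) for name, values in scores.items()}


dataset = [
    Item("Capital of France?", "Paris"),
    Item("2 + 2?", "4"),
    Item("Largest planet?", "Jupiter"),
]


def app(question: str) -> str:
    # Stand-in for the LLM application under test.
    canned = {"Capital of France?": "Paris", "2 + 2?": "The answer is 4",
              "Largest planet?": "Saturn"}
    return canned[question]


results = run_eval(dataset, app, {"exact_match": exact_match, "judge": judge_stub})
print(results)
```

The stub judge accepts "The answer is 4", which exact match rejects; that flexibility is the usual motivation for judge-style scoring. The catch is that a real LLM judge is itself a model whose scores need spot-checking, for example against manual labels.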

Open-Source and Self-Hostable: If your team values transparency and control, Langfuse is fully open-source and can be self-hosted. This makes it especially appealing for startups and enterprises with strict data governance requirements.

Debugging and Continuous Improvement: Langfuse excels when your focus is on improving quality rather than just scaling. It acts as the “black box recorder” for your AI system, helping you understand failures, refine prompts, and ship better experiences.

Use Langfuse when you need granular insights, evaluation, and open-source flexibility to continuously improve your LLM applications.

TrueFoundry: Comprehensive Enterprise Capability

The LLM infrastructure landscape today is fragmented—teams often juggle separate tools for observability, gateways, and model deployment, leading to trade-offs in performance and functionality. TrueFoundry solves this by delivering a unified platform that combines speed, enterprise-grade capabilities, and cost optimization.

At its core, TrueFoundry achieves sub-3ms latency while offering full gateway and observability features. Designed for enterprises from the ground up, it manages authentication, authorization, and rate limiting in-memory, ensuring performance even when agents make hundreds of tool calls per conversation. Intelligent routing further enhances efficiency by dynamically selecting the best model for each request based on cost, performance, and compliance needs.
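The text does not describe how TrueFoundry's routing decides, so the following is only a generic sketch of routing on cost, performance, and compliance: filter out models that violate hard constraints (here, data region and a minimum quality tier), then pick the lowest weighted score of normalized cost and latency. The model names, prices, latencies, and tiers are made-up placeholders, not measurements.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    usd_per_1k_tokens: float  # placeholder price, not a real quote
    p50_latency_ms: float     # placeholder latency, not a measurement
    regions: frozenset[str]   # where this deployment may process data
    quality_tier: int         # hypothetical scale: 1 (basic) to 3 (strongest)


def pick_model(options: list[ModelOption], *, region: str, min_quality: int,
               cost_weight: float = 0.5, latency_weight: float = 0.5) -> ModelOption:
    # 1) Hard constraints: compliance (data region) and minimum capability.
    eligible = [m for m in options
                if region in m.regions and m.quality_tier >= min_quality]
    if not eligible:
        raise LookupError("no model satisfies the compliance/quality constraints")

    # 2) Soft objective: divide by the eligible maximum so both terms fall in
    #    [0, 1] (the `or 1.0` avoids dividing by zero), then weight them.
    max_cost = max(m.usd_per_1k_tokens for m in eligible) or 1.0
    max_latency = max(m.p50_latency_ms for m in eligible) or 1.0

    def score(m: ModelOption) -> float:
        return (cost_weight * m.usd_per_1k_tokens / max_cost
                + latency_weight * m.p50_latency_ms / max_latency)

    return min(eligible, key=score)


OPTIONS = [
    ModelOption("hosted-large", 0.0100, 900.0, frozenset({"us", "eu"}), 3),
    ModelOption("hosted-small", 0.0010, 300.0, frozenset({"us"}), 2),
    ModelOption("self-hosted-eu", 0.0020, 450.0, frozenset({"eu"}), 2),
]

print(pick_model(OPTIONS, region="eu", min_quality=2).name)  # self-hosted-eu
print(pick_model(OPTIONS, region="us", min_quality=2).name)  # hosted-small
print(pick_model(OPTIONS, region="eu", min_quality=3).name)  # hosted-large
```

Shifting the weights (for example, latency-only for interactive traffic) changes the choice without touching the constraint step, which is where compliance rules belong because they should never be traded off against price.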

The platform also supports flexible deployment models (cloud-native, on-premises, air-gapped, or hybrid), backed by SOC 2 Type II and HIPAA certifications. Its Kubernetes-native design ensures smooth integration with enterprise infrastructure.

On the cost side, TrueFoundry goes beyond provider switching with semantic caching, auto-scaling, and traffic-aware resource management—helping organizations cut costs while maintaining reliability.

A key differentiator is its strong support for self-hosted models. TrueFoundry supports leading serving frameworks, GPU optimization, and unified APIs for both cloud and on-prem models. This lets organizations deploy proprietary models securely, reduce vendor lock-in, and meet data sovereignty requirements, all within one streamlined platform.

Conclusion

Choosing between Langfuse and Portkey depends on your organization's technical capabilities and performance requirements. Langfuse excels for teams that prioritize open-source flexibility and can manage the operational complexity. Portkey suits organizations that need comprehensive LLMOps capabilities and are willing to trade some performance for feature richness.

TrueFoundry eliminates this fundamental choice by delivering sub-3ms latency alongside enterprise-grade observability, unified model deployment, and comprehensive governance. Rather than forcing teams into complex multi-tool infrastructures, TrueFoundry's unified architecture provides the performance, security, and operational depth required for mission-critical AI deployments. As AI capabilities rapidly commoditize, the infrastructure that enables reliable, observable, and cost-effective deployment becomes the real differentiator.
