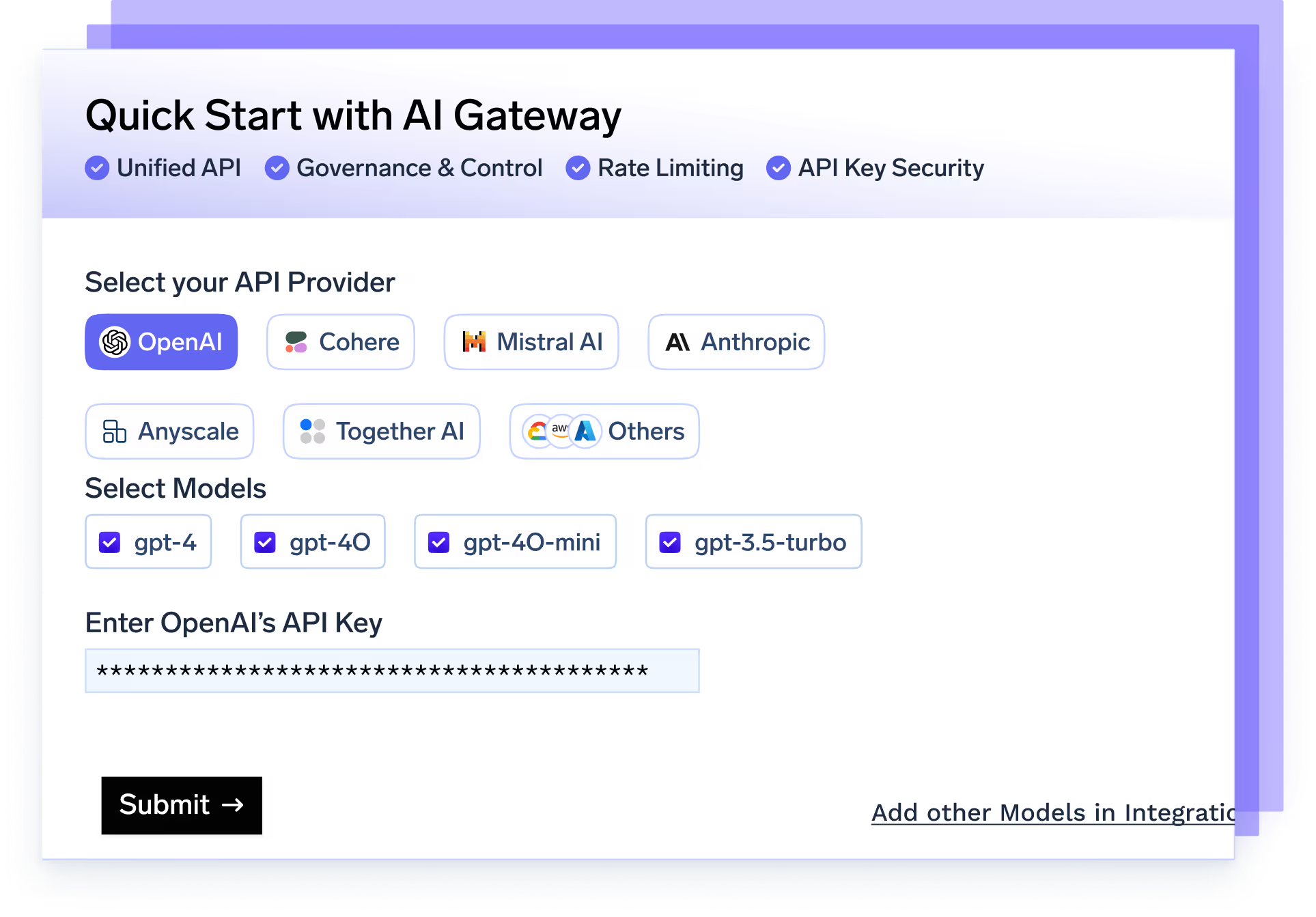

On-Prem AI Gateway: Unified LLM API Access

- Connect to OpenAI, Claude, Gemini, Groq, Mistral, and 250+ LLMs through one AI Gateway API

- Use the platform to support chat, completion, embedding, and reranking model types

- Orchestrate workloads across your on-prem GPUs and approved external endpoints with smart routing and fallbacks

- Apply policy-based governance: enforce rate limits, quotas, RBAC, and audit logs at the gateway level
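To make the routing-and-fallback idea above concrete, here is a minimal sketch. It assumes each backend can be wrapped as a plain callable; the names `call_with_fallback`, `on_prem_model`, and `external_model` are hypothetical and are not part of the TrueFoundry API.

```python
from typing import Callable, Sequence

# Hypothetical provider signature: takes a prompt, returns a completion string.
Provider = Callable[[str], str]


def call_with_fallback(providers: Sequence[tuple[str, Provider]], prompt: str) -> tuple[str, str]:
    """Try each (name, provider) in priority order.

    Returns (name, completion) from the first provider that succeeds.
    """
    errors: list[str] = []
    for name, provider in providers:
        try:
            return name, provider(prompt)
        except Exception as exc:  # a real gateway would catch narrower error types
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def on_prem_model(prompt: str) -> str:
    # Stand-in for a local model server that is currently unavailable.
    raise TimeoutError("GPU pool saturated")


def external_model(prompt: str) -> str:
    # Stand-in for an approved external endpoint.
    return f"echo: {prompt}"


if __name__ == "__main__":
    route = [("on-prem", on_prem_model), ("external", external_model)]
    print(call_with_fallback(route, "hello"))
```

Ordering the list by preference (on-prem first, external second) is one simple way to express "approved external endpoints only as a fallback"; a production gateway would also need timeouts, retries, and policy checks.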

On-Prem/Hybrid LLMOps: Model Serving & Inference

- Launch any open-source LLM via pre-tuned, production-ready pipelines in your on-prem or VPC/hybrid cluster

- Leverage industry-leading model servers like vLLM and SGLang for low-latency, high-throughput inference

- Enable GPU autoscaling, auto shutdown, and intelligent resource provisioning across your LLMOps infrastructure
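As a rough illustration of the autoscaling and auto-shutdown bullet above, the sketch below derives a replica count from current load. The function name, parameters, and default limits are assumptions made for this example, not the platform's actual policy.

```python
import math


def desired_replicas(in_flight_requests: int, target_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 8) -> int:
    """Return how many model replicas to run for the current load.

    With min_replicas=0 an idle service scales to zero (auto shutdown);
    max_replicas caps GPU usage. All values here are illustrative.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    wanted = math.ceil(in_flight_requests / target_per_replica)
    # Clamp the demand-based count into the allowed range.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    for load in (0, 9, 100):
        print(load, "->", desired_replicas(load, target_per_replica=4))
```

Real autoscalers usually add a cooldown window so that short traffic dips do not cause replicas to flap.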

Why choose TrueFoundry for hybrid cloud AI?

Deliver high-performance AI infrastructure that optimizes itself, reducing cost, complexity, and manual intervention.

Data Sovereignty & Safety

- 100% of tokens, files, and traces stay inside your DC/VPC — no vendor access.

- Per-tenant controls with strict residency compliance.

- 42% of enterprise architects now view independent storage as safer than primary clouds.

Agentic Workflow Toolkit

- Compose multi-step agents with tools, prompts, and policies.

- Built-in evaluation and observability for trust + repeatability.

- Rapid iteration enables scaling to complex workflows.

Unified GPU Fleet Orchestration

- On-prem models deliver up to 90% latency savings vs. cloud runs.

- Single dashboard to manage racks, clusters, and edge nodes.

- Automated scheduling, autoscaling, and real-time monitoring.

Predictable & Reduced Cost

- Enterprises report 80–90% cost reductions by shifting workloads on-prem.

- Own hardware and cut egress fees for financial control.

- Dynamic routing to lowest-cost models within SLA.
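The "lowest-cost model within SLA" rule above can be sketched as a filter followed by a minimum. The `ModelOption` fields, the catalogue entries, and all numbers below are made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # illustrative units
    p95_latency_ms: float      # illustrative measurement


def cheapest_within_sla(options: list[ModelOption], max_latency_ms: float) -> Optional[ModelOption]:
    """Return the lowest-cost option whose p95 latency meets the SLA, or None."""
    eligible = [o for o in options if o.p95_latency_ms <= max_latency_ms]
    return min(eligible, key=lambda o: o.cost_per_1k_tokens, default=None)


# Made-up catalogue for illustration only.
CATALOGUE = [
    ModelOption("small-local", 0.10, 900.0),
    ModelOption("large-local", 0.40, 450.0),
    ModelOption("external-api", 0.25, 300.0),
]

if __name__ == "__main__":
    choice = cheapest_within_sla(CATALOGUE, max_latency_ms=500.0)
    print(choice.name if choice else "no model meets the SLA")
```

Here the cheapest model overall is skipped because it misses the 500 ms latency target, so the router settles on the cheapest option that still meets it.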

Technical challenges teams face on-prem

Financial Services

- Customer data never leaves the bank → easier SOC 2 audits

- Sub-10 ms inference → tighter bid/ask spreads

- Ring-fenced pipelines → zero data-leak headlines

Real-time fraud scoring

Score every transaction in milliseconds and quarantine anomalies before they clear.

T-1 risk back-testing

Compress VaR runs to overnight so books close with fresher stress results.

Personalised wealth bots

Compliant, on-prem advisors that remember portfolio context, without leaking customer data.

Healthcare

- PHI stays on-site → HIPAA/GDPR peace of mind

- Instant model inference → faster diagnostics

- Full audit trail → smoother FDA submissions

Radiology image triage

Score scans in milliseconds next to PACS and auto-prioritise suspected criticals.

Drug-discovery fine-tuning

Fine-tune on de-identified trial data inside your firewall; IP and PHI never leave.

Hospital-bed demand forecasting

Local EHR/ADT feeds power daily bed-need forecasts and staffing alerts, no data export.

Automotive

- Telemetry and service history stay on-site → predictive maintenance with no cloud dependency

- In-line inference at the far edge → defects caught before they leave the line

- Deterministic replay on an on-prem AV/HPC cluster → safety-lifecycle traceability

Driver-assist testing lab

Deterministically replay edge cases on an on-prem AV/HPC cluster and sweep model versions with safety-lifecycle traceability.

Predictive maintenance

Fuse telemetry and service history locally to forecast wear and schedule fixes before failures.

In-plant robotics vision

Run inspection models at the far edge (cameras/robots) to catch defects in-line, no cloud dependency.

Semiconductors

- Yield slips from microscopic defects → inline AI inspection boosts first-pass yield

- Lab-only pilots & siloed EDA logs → one governed platform across design, test, and fab

- Tool downtime & scrap costs → predictive maintenance and SPC reduce excursions

Wafer & mask defect detection

CV+ML flags hot spots inline

Virtual metrology & SPC

Predict out-of-spec before it hits yield

EDA/log mining for D₀ ramp

Correlate design/test/fab signals to speed yield learning

Manufacturing

- Analyze production data without cloud latency

- Keep proprietary processes and IP secure on-site

- Deploy vision models for real-time quality control

Defect heat-map overlay

Pixel-level anomaly maps on live cameras to guide inspectors in real time.

Energy-use optimisation

Learn optimal setpoints and auto-adjust drives/ovens to trim kWh without hurting throughput.

Demand-driven scheduling

Pull live ERP/WMS signals to re-sequence jobs and reduce WIP bottlenecks.

Media & Telecom

- Terabytes of raw footage stay in-house → protect IP rights

- Real-time, on-prem render & edit → slash post-production time

- First-party viewer data processed locally → privacy-compliant personalization

Auto-editing

AI stitches multi-cam footage: it auto-syncs angles, assembles a first cut, and generates captions, without raw media leaving your vault.

Smart recommendations

Personalize without third-party cookies. Drive recommendations from first-party viewing behavior stored in your own infrastructure, with no external trackers.

Secure asset vault

Rights management and watermarking. Centralized access control plus forensic watermarking to trace leaks across screeners and cuts.

Defense

- Air-gapped training clusters → meet DoD Top-Secret / SCI mandates

- Sub-20 ms inference at the tactical edge → faster decision cycles

- Immutable audit logs → pass DevSecOps & zero-trust reviews

Tactical model training

Update vision models in-theater

Real-time targeting support

On-device detection/labeling to aid situational awareness in low-connectivity settings.

Secure audit trail

Hash-chained/append-only logs with verifiable history for investigative and compliance needs.
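A hash-chained, append-only log like the one described above can be sketched with the standard library alone. The entry layout (`event`, `prev_hash`, `hash`) and the helper names are assumptions for this example, not a documented TrueFoundry format.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    # Canonical JSON so the same event always hashes to the same value.
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], event: dict) -> None:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev_hash": prev_hash, "hash": _digest(prev_hash, event)})


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    audit_log: list[dict] = []
    append_entry(audit_log, {"actor": "alice", "action": "deploy"})
    append_entry(audit_log, {"actor": "bob", "action": "query"})
    print(verify_chain(audit_log))
```

Because each hash covers the previous one, changing any past event invalidates every later entry. A real deployment would also anchor the latest hash somewhere an attacker cannot rewrite.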

Frequently asked questions

How should we choose between cloud‑based and on‑prem AI governance systems?

How to choose between on‑prem vs cloud AI finance solutions?

large language models. It includes capabilities like model server orchestration, prompt management, token-level observability, agent frameworks, and secure API access. TrueFoundry’s LLMOps platform handles these GenAI-specific workflows natively, unlike generic MLOps tools.

Is cloud or on‑prem edge AI security in data centers better—and when?

model serving, fine-tuning, RAG, agent orchestration, observability, and governance, so your team can focus on building instead of stitching infrastructure. It also supports enterprise needs like compliance, quota management, and VPC deployments.

How do self‑hosted LLM evaluation platforms usually store & secure prompt logs?

- Model Serving & Inference with vLLM, SGLang, autoscaling, and right-sized infra

- Fine-tuning Workflows using LoRA/QLoRA with automated pipelines

- API Gateway for unified access, RBAC, quotas, and fallback

- Prompt Management with version control and A/B testing

- Tracing & Guardrails for full visibility and safety

- One-Click RAG Deployment with integrated VectorDBs

- Agent Support for LangChain, CrewAI, AutoGen, and more

- Enterprise Features like audit logs, VPC hosting, and SOC 2 compliance

I need a self‑hosted platform to log every LLM request with metadata—options?

cloud (AWS, GCP, Azure), in a private VPC, on-premise, or even in air-gapped environments, ensuring data control and compliance from day one.

How do AI vendors manage infrastructure diversity across air‑gapped deployments?

request-level logs. You can track every prompt, response, and error in real time, making it easy to debug and optimize your LLM applications.
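For a sense of what a request-level log entry with metadata might contain, here is a minimal sketch that emits one JSON line per request. The field names and the `build_log_record` helper are hypothetical, not the platform's actual schema.

```python
import json
import time
import uuid
from typing import Optional


def build_log_record(model: str, prompt: str, response: str, latency_ms: float,
                     error: Optional[str] = None, **metadata: str) -> str:
    """Serialize one LLM request as a JSON line (illustrative schema)."""
    record = {
        "request_id": str(uuid.uuid4()),  # unique id for tracing a single call
        "timestamp": time.time(),
        "model": model,
        "prompt": prompt,
        "response": response,
        "latency_ms": latency_ms,
        "error": error,
        "metadata": metadata,  # free-form tags such as team or environment
    }
    return json.dumps(record)


if __name__ == "__main__":
    print(build_log_record("demo-model", "ping", "pong", 12.5, team="search", env="staging"))
```

Writing one self-describing JSON object per line keeps the logs easy to ship to whatever store is already in use inside your network.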

GenAI infra: simpler, faster, cheaper

Trusted by 30+ enterprises and Fortune 500 companies
