Pangea Integration with TrueFoundry's AI Gateway

Modern LLM teams move fast, but they also need real, practical AI security. We have already integrated the TrueFoundry AI Gateway with many guardrail providers, and now we are adding Pangea for our enterprise clients, so that teams can detect prompt injection, redact sensitive data, and enforce content policies without rewiring their stack.

What is Pangea (and why pair it with an AI Gateway)?

Pangea provides a suite of programmable security services tailored for AI workloads. The most notable are AI Guard, which detects risky content and enforces policies, and Redact, which automatically removes sensitive data. It introduces the idea of recipes: reusable guard configurations that you define in the Pangea console and call from your app or platform. Bringing Pangea into your AI gateway means you can apply these safeguards to every request and response across providers, models, tools, and agents without touching application code paths.

Why security matters for an AI Gateway

- Centralized defenses. Enforce org-wide guardrails at the AI gateway.

- Data stays home. Traffic flows through your controlled environment; you decide what’s logged and where.

- Defense-in-depth. Detect injections, defang URLs, block exfiltration attempts, and redact PII before it reaches models or users.

- Operational simplicity. One place to wire credentials and one policy surface to manage: less drift, more consistent AI security.

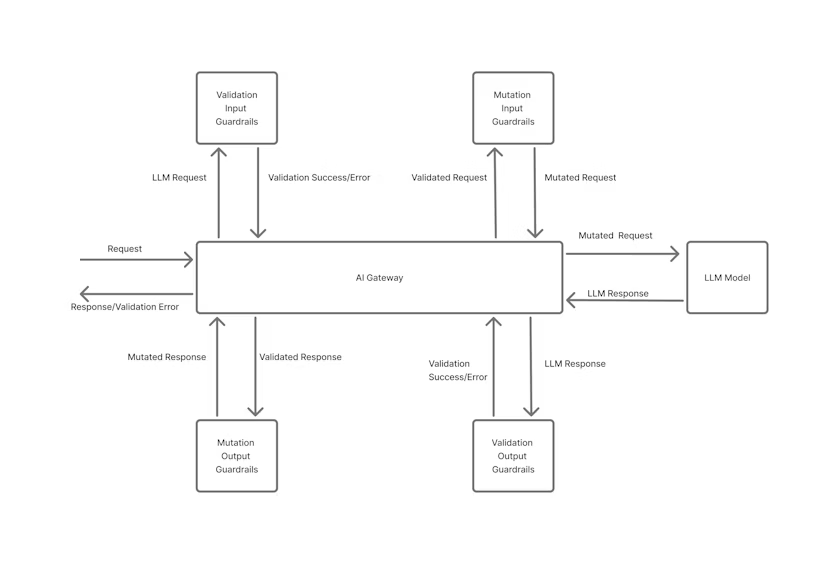

How the integration works

At a high level:

- Create an AI Guard recipe in Pangea (e.g., block prompt-injection, sanitize URLs, redact patterns).

- In TrueFoundry, add a Pangea guard to your route or organization policy. Point it to your Pangea domain, project domain, and recipe ID, and reference a stored API key.

- The AI gateway calls Pangea inline for prompts and/or completions, then enforces the decision (allow, block, redact, transform) before forwarding to the model or client.
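The flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not TrueFoundry's gateway code. `Decision`, `GuardBlocked`, `guarded_call`, and `toy_prompt_guard` are hypothetical names, and the toy guard is a keyword check standing in for the inline call to Pangea. A prompt guard runs before the model and a completion guard runs after it. A `block` decision stops the flow, while every other action forwards the possibly rewritten text.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Decision:
    """Hypothetical guard verdict; not Pangea's or TrueFoundry's actual schema."""
    action: str       # "allow", "block", "redact", or "transform"
    text: str         # text to forward, possibly rewritten by the guard
    reason: str = ""


class GuardBlocked(Exception):
    """Raised when a guard stops a prompt or completion."""


Guard = Callable[[str], Decision]


def enforce(decision: Decision, phase: str) -> str:
    # "block" stops the flow; every other action forwards the (possibly rewritten) text.
    if decision.action == "block":
        raise GuardBlocked(f"{phase} blocked: {decision.reason}")
    return decision.text


def guarded_call(prompt: str,
                 model: Callable[[str], str],
                 prompt_guard: Optional[Guard] = None,
                 completion_guard: Optional[Guard] = None) -> str:
    # Pre-model check.
    if prompt_guard is not None:
        prompt = enforce(prompt_guard(prompt), "prompt")
    completion = model(prompt)
    # Post-model check.
    if completion_guard is not None:
        completion = enforce(completion_guard(completion), "completion")
    return completion


def toy_prompt_guard(text: str) -> Decision:
    # Keyword stand-in for the inline call to Pangea AI Guard.
    if "ignore previous instructions" in text.lower():
        return Decision("block", text, "prompt injection")
    return Decision("allow", text)
```

For example, `guarded_call("hello", lambda p: "echo: " + p, prompt_guard=toy_prompt_guard)` returns `"echo: hello"`. A prompt containing "ignore previous instructions" raises `GuardBlocked` before the model is ever called.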

Supported guard types

You can attach Pangea checks to any of these phases:

- Prompt (pre-model)

- Completion (post-model)

- Prompt & Completion (both directions)

They’re configured as “guards” in the gateway, with Pangea as the provider.

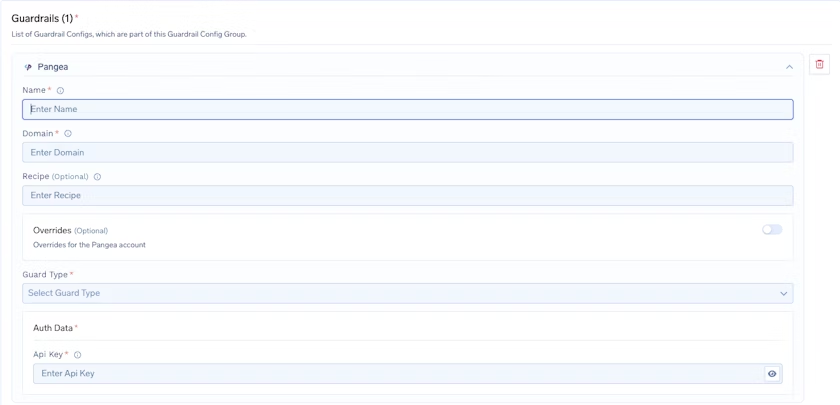

Adding Pangea Integration

To add a Pangea integration:

- Enter a name for your guardrails group, then add any collaborators who should have access to manage or use this group.

- Configure the Pangea Config. Give it a name and specify the domain for the cloud provider and region where your Pangea project is set up. For example, if your endpoint is https://<service_name>.aws.us-west-2.pangea.cloud/v1/text/guard, the domain to enter is aws.us-west-2.pangea.cloud.

- Optionally, provide a recipe key. It points to a predefined configuration in the Pangea User Console that defines which rules are applied to the text, such as defanging malicious URLs.

- To apply custom settings that override your default Pangea account configuration, enable Overrides.

- Choose the Guard Type from the dropdown, based on the type of protection you want to apply.
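The domain rule described above amounts to dropping the service-name label from the endpoint host. Below is a minimal sketch using only the Python standard library. The helper name `pangea_domain` is hypothetical, and `ai-guard` in the example is assumed to be the AI Guard service name.

```python
from urllib.parse import urlparse


def pangea_domain(endpoint: str) -> str:
    """Derive the Domain field value from a full Pangea service endpoint.

    Hypothetical helper: it drops the first DNS label (the service name),
    e.g. https://<service_name>.aws.us-west-2.pangea.cloud/v1/text/guard
    becomes aws.us-west-2.pangea.cloud.
    """
    host = urlparse(endpoint).hostname
    if not host or "." not in host:
        raise ValueError(f"expected a full Pangea endpoint URL, got {endpoint!r}")
    _service_name, domain = host.split(".", 1)
    return domain


# "ai-guard" is assumed to be the AI Guard service name.
print(pangea_domain("https://ai-guard.aws.us-west-2.pangea.cloud/v1/text/guard"))
# aws.us-west-2.pangea.cloud
```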

Finally, under Pangea Authentication Data, provide the API Key used to authenticate requests to Pangea services. This key is required for the integration to work, and you can obtain it from the Pangea Console by going to your project dashboard and opening the Tokens or API Keys section. Make sure this key is kept secure, since it provides access to your Pangea security services.
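Because the key grants access to your Pangea services, keep it out of source code and config files. The sketch below reads the key from an environment variable and builds, but does not send, a request to AI Guard. Several details are assumptions to check against Pangea's API reference rather than facts stated in this article:

- the `ai-guard` service prefix
- Bearer-token authentication
- the `text` and `recipe` body fields
- the environment variable name `PANGEA_AI_GUARD_TOKEN`

Inside TrueFoundry you reference a stored API key instead, so this is mainly useful for testing a recipe directly.

```python
import json
import os
import urllib.request
from typing import Optional


def build_guard_request(domain: str, text: str, recipe: Optional[str] = None,
                        token_env: str = "PANGEA_AI_GUARD_TOKEN") -> urllib.request.Request:
    # Read the API key from the environment rather than hard-coding it.
    token = os.environ.get(token_env)
    if not token:
        raise RuntimeError(f"environment variable {token_env} is not set")
    body = {"text": text}
    if recipe:
        body["recipe"] = recipe  # recipe key from the Pangea User Console (optional)
    return urllib.request.Request(
        url=f"https://ai-guard.{domain}/v1/text/guard",  # assumed service prefix
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )
```

To actually send the request, pass it to `urllib.request.urlopen` and parse the JSON response. Check the response fields in Pangea's documentation rather than assuming them.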

What enforcement looks like

- Block: request/response is stopped with a clear reason and code path for observability.

- Redact: sensitive spans are removed before forwarding to the LLM or client (using Redact).

- Transform: unsafe constructs can be defanged (e.g., URLs), then safely passed along via the AI gateway.
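The following sketch shows the effect of the Redact and Transform actions on text. In the integration, Pangea performs these operations according to your recipe. The `hxxp` and `[.]` defanging style is a common convention assumed here for illustration and may not match Pangea's exact output. In practice the span offsets would come from a detector rather than being hard-coded.

```python
import re
from typing import Iterable, Tuple

URL_RE = re.compile(r"\bhttps?://\S+", re.IGNORECASE)


def defang_urls(text: str) -> str:
    """Transform: make URLs non-clickable (http -> hxxp, "." -> "[.]")."""
    def _defang(match):
        url = re.sub(r"^http", "hxxp", match.group(0), flags=re.IGNORECASE)
        return url.replace(".", "[.]")
    return URL_RE.sub(_defang, text)


def redact_spans(text: str, spans: Iterable[Tuple[int, int]],
                 placeholder: str = "<REDACTED>") -> str:
    """Redact: replace non-overlapping (start, end) character spans."""
    # Work right to left so earlier offsets remain valid after each replacement.
    for start, end in sorted(spans, reverse=True):
        text = text[:start] + placeholder + text[end:]
    return text


print(defang_urls("see https://evil.example.com/x"))
# see hxxps://evil[.]example[.]com/x
print(redact_spans("email bob@example.com or call 555-0100", [(6, 21), (30, 38)]))
# email <REDACTED> or call <REDACTED>
```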

All decisions are visible in your gateway logs; Pangea also maintains an audit trail within your project for investigations and reviews.

Once Pangea is wired into the gateway, the biggest operational win is consistency. Teams don’t have to remember to “turn on” security in every microservice or agent workflow, because the same checks apply wherever traffic flows, whether it’s a simple chat completion, an agent tool call, or a retrieval-augmented pipeline. This reduces policy drift over time and makes it much easier to roll out new protections (or tighten existing ones) without coordinating code changes across multiple teams.

It also improves day-two operations when something goes wrong. When a user reports unsafe output or suspicious behavior, platform teams can trace exactly which guard fired, what action was taken, and which route and model were involved, all from the gateway’s logs and audit signals. That makes investigations faster and helps security and AI teams build a shared, repeatable workflow for reviewing incidents, tuning recipes, and validating changes before they reach production.
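If you export gateway decisions to your own tooling, a structured record per guard decision turns that kind of trace into a simple filter. The field names and the helpers `guard_log_record` and `blocked_on_route` are a hypothetical schema for illustration, not TrueFoundry's actual log format. Map them onto whatever the gateway emits in your deployment.

```python
import json
from datetime import datetime, timezone
from typing import Dict, Iterable, List


def guard_log_record(guard: str, phase: str, action: str,
                     route: str, model: str, reason: str = "") -> str:
    # One JSON line per guard decision (hypothetical schema).
    return json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "guard": guard,
        "phase": phase,      # "prompt" or "completion"
        "action": action,    # "allow", "block", "redact", or "transform"
        "route": route,
        "model": model,
        "reason": reason,
    })


def blocked_on_route(lines: Iterable[str], route: str) -> List[Dict]:
    # Incident triage: which guard blocked what on a given route.
    records = (json.loads(line) for line in lines)
    return [r for r in records if r["route"] == route and r["action"] == "block"]
```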

Over time, teams typically evolve from “basic blocking and redaction” to more nuanced policies that balance safety and user experience. For example, you may choose to block clear prompt-injection attempts, redact specific PII types, and transform risky content like URLs or code snippets, while still allowing the rest of the request to proceed. With Pangea recipes and gateway-level enforcement, those changes become configuration updates rather than rewrites, letting teams iterate on security controls at the same pace they iterate on prompts, models, and product features.
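You can picture a policy like that as a mapping from detector to action. This sketch is an illustration built on assumptions:

- The detector names and the `POLICY` and `resolve_action` names are hypothetical. Real detectors and actions are configured in your Pangea recipe.
- Reducing several detections to the single most severe action is a simplification. A real policy may apply both a redaction and a transformation to the same text.
- The ordering of redact above transform is arbitrary.

```python
from typing import Dict, Iterable

# Higher number = more severe; the redact/transform ordering is an arbitrary choice.
SEVERITY: Dict[str, int] = {"allow": 0, "transform": 1, "redact": 2, "block": 3}

# Hypothetical detector names mapped to actions.
POLICY: Dict[str, str] = {
    "prompt_injection": "block",
    "email_address": "redact",
    "malicious_url": "transform",
}


def resolve_action(detections: Iterable[str],
                   policy: Dict[str, str] = POLICY,
                   default: str = "allow") -> str:
    # Unknown detectors fall back to the default action.
    actions = [policy.get(d, default) for d in detections]
    return max(actions, key=SEVERITY.__getitem__, default=default)
```

With this shape, tightening the policy (for example, moving `malicious_url` from transform to block) is a one-line configuration change rather than a code change.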

Frequently asked questions

Does this add latency?

The call happens at the AI gateway; with caching and concise recipes, the overhead is typically small relative to model latency.
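This article does not specify how the gateway caches guard results. The following only illustrates why caching helps. When the same text is checked against the same recipe, for example a shared system prompt, the earlier verdict can be reused instead of making another round trip. `remote_guard` is a hypothetical stand-in for the network call to Pangea.

```python
from functools import lru_cache

calls = {"remote": 0}


def remote_guard(recipe: str, text: str) -> str:
    """Hypothetical stand-in for the inline call to Pangea; returns an action."""
    calls["remote"] += 1
    return "block" if "ignore previous instructions" in text.lower() else "allow"


@lru_cache(maxsize=10_000)
def cached_guard(recipe: str, text: str) -> str:
    # Keyed on (recipe, text): identical checks skip the network round trip.
    return remote_guard(recipe, text)
```

Two caveats apply to this design:

- Editing a recipe in the Pangea console without changing its key would leave stale verdicts in the cache. Call `cached_guard.cache_clear()` after policy changes.
- The cache holds raw prompt text in memory, which matters if that text is sensitive.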

Is model choice constrained?

No. Policies apply across providers and models since they’re enforced at the AI gateway boundary.

Can we combine with other guardrails?

Yes. You can stack Pangea with additional gateway guards for layered AI security.
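One way to picture stacking is an ordered chain: each guard sees the output of the previous one, and a block stops the chain. This is a hypothetical sketch, not a description of how the gateway orders or combines guards. `run_stack`, `email_redactor`, and `injection_blocker` are illustrative names, and the keyword check only stands in for Pangea's detectors.

```python
import re
from typing import Callable, List, Sequence, Tuple

# A guard takes text and returns (action, possibly rewritten text).
Guard = Callable[[str], Tuple[str, str]]


def run_stack(text: str,
              guards: Sequence[Tuple[str, Guard]]) -> Tuple[str, List[Tuple[str, str]]]:
    applied: List[Tuple[str, str]] = []
    for name, guard in guards:
        action, text = guard(text)
        applied.append((name, action))
        if action == "block":
            break  # later guards never see blocked content
    return text, applied


def email_redactor(text: str) -> Tuple[str, str]:
    # Cheap local regex check, e.g. placed before a remote guard.
    new = re.sub(r"[\w.+-]+@[\w-]+\.[\w.]+", "<EMAIL>", text)
    return ("redact" if new != text else "allow"), new


def injection_blocker(text: str) -> Tuple[str, str]:
    # Keyword stand-in for a Pangea prompt-injection detector.
    blocked = "ignore previous instructions" in text.lower()
    return ("block" if blocked else "allow"), text


stack = [("regex-pii", email_redactor), ("pangea", injection_blocker)]
print(run_stack("mail bob@example.com", stack))
# ('mail <EMAIL>', [('regex-pii', 'redact'), ('pangea', 'allow')])
```

The returned list of `(guard, action)` pairs is what you would log to see which layer acted on a request.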

Get started

- Follow the step-by-step TrueFoundry docs for Pangea configuration.

- Review Pangea’s AI Guard concepts (recipes, actions) to design the right policy.

If you’re scaling LLM workloads, this pairing gives you a clean, centralized control point: AI security that travels with every call, and an AI gateway that keeps your apps fast, consistent, and compliant.

