LLM Load Balancing

LLMs are compute-intensive resources: they are costly, stateful, and variable in performance. Load balancing matches every prompt to the best available model, replica, or provider, weighing latency, health, and cost. For anyone managing enterprise AI applications, LLM load balancing is not a luxury; it’s a necessity. In this in-depth guide, we’ll demystify the core concepts, walk through real-world strategies, and show how TrueFoundry’s AI Gateway eliminates the operational burden.

What is LLM Load Balancing?

At its core, LLM load balancing is the process of distributing incoming inference requests across a fleet of model instances—these may be different APIs, different cloud vendors, or fine-tuned checkpoints on your own GPUs.

However, LLM load balancing is more than a classic “round robin” router. Because LLM responses stream over several seconds, request volume can spike suddenly, and vendors enforce rate limits, an effective load balancer also:

- Tracks the token generation state for streamed outputs.

- Adapts to varying workloads: some prompts are trivial, others are reasoning-intensive.

- Handles model diversity: each endpoint or vendor may have different rate limits, reliability, and cost.

- Automates health checks and failover, so user experience and SLAs aren’t at the mercy of a single provider failure.

- Offers scaling levers so new endpoints can be added with zero downtime.

Key Objectives of LLM Load Balancing

- Performance: Minimize average and tail (p95/p99) latency.

- Availability: Provide continuous service, rerouting around failures.

- Cost Optimization: Use high-cost models only when necessary.

- Scalability: Dynamically add/remove compute without affecting user experience.

Example Scenarios

- A surge of chat prompts at 9am clogs your OpenAI endpoint; a load balancer spreads requests to alternate vendors.

- An expensive gpt-4o model is reserved for research; most traffic is routed to smaller, cost-effective models.

- A/B testing of a fine-tuned GPT-4 checkpoint is managed with a weighted rollout, in which a small fraction of traffic is canaried to the new model.

Why Load Balancing Matters in LLM Workflows

1. User Experience (Latency)

LLM-based products are only as good as their perceived responsiveness. End users expect near-instant time-to-first-token (TTFT) and fluid streaming. Without a load balancer, traffic piles up on a single model, causing spikes in wait times and degrading the user experience. Research on vLLM (a high-performance inference engine) reports that smart, latency-aware routing can cut p95 latency by over 30% under bursty workloads.

2. SLA Compliance and Reliability

Modern AI apps are bound by strict service-level agreements (SLAs), often requiring 99.9% uptime and tail latencies below 600 ms. Unmitigated model failures or rate-limiting events can cascade across your stack, jeopardizing these targets. Load balancing protects SLAs by:

- Detecting and ejecting unhealthy endpoints automatically.

- Providing fallback paths and automatic recovery.

- Balancing traffic proactively to avoid hitting vendor-side rate limits.

3. Cost Efficiency

LLM providers bill per token at rates that depend on the model, so premium models can run up large bills quickly if not managed carefully. By routing “easy” prompts (lookups, simple completions) to cheaper models and reserving heavyweight endpoints for complex queries, organizations can cut spending by up to 60% without sacrificing output quality.
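To see where a figure like 60% can come from, consider a simplified model (the numbers are illustrative assumptions, not vendor prices): a fraction p of requests is routed to a cheaper model whose per-token price is a fraction r of the premium model’s, and requests on both paths use roughly the same number of tokens, costing c per request on the premium model. Relative to sending everything to the premium model, the saving is:

```latex
\text{savings} = 1 - \frac{(1-p)\,c + p\,r\,c}{c} = 1 - \big[(1-p) + p\,r\big] = p\,(1 - r)
```

For example, routing two thirds of traffic (p = 2/3) to a model priced at one tenth of the premium rate (r = 0.1) gives a saving of (2/3)(0.9) = 0.6, or 60%.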

4. Scalability and Elasticity

Traffic to LLMs is unpredictable: product launches, viral news, and time-of-day effects cause sharp spikes. Static provisioning means either overpaying for idle resources or risking overload at peaks. Load balancers that work hand in hand with autoscalers maintain target service levels with minimal waste.

Key Engineering Challenges in LLM Load Balancing

Load Balancing Strategies for LLMs

1. Weighted Round-Robin

The simplest strategy: assign static weights to each model or endpoint. For example, you might send 80% of gpt-4o traffic to Azure and 20% to OpenAI. This works well for canarying new versions or for distributing load when traffic patterns are known and stable.

Pros: Simple, deterministic, easy to audit.

Cons: Blind to live latency or failures.
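Static weights still need a picking algorithm. The sketch below shows one common choice, the “smooth” weighted round-robin used by nginx, which honors the weights exactly while interleaving picks instead of sending long bursts to the heaviest target. The target names come from the example above; the class and its methods are hypothetical and not part of any TrueFoundry API.

```python
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    weight: int
    current: int = 0  # running score used by the smooth weighted round-robin


class SmoothWeightedRoundRobin:
    """Deterministic weighted round-robin (the "smooth" variant used by nginx).

    On every pick, each target's score grows by its weight; the highest score wins
    and is then reduced by the total weight, which spreads picks evenly over time.
    """

    def __init__(self, weights: dict[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be non-empty and positive")
        self.targets = [Target(name, w) for name, w in weights.items()]
        self.total = sum(weights.values())

    def pick(self) -> str:
        for t in self.targets:
            t.current += t.weight
        best = max(self.targets, key=lambda t: t.current)  # ties go to the first target
        best.current -= self.total
        return best.name


if __name__ == "__main__":
    lb = SmoothWeightedRoundRobin({"azure/gpt-4o": 80, "openai/gpt-4o": 20})
    picks = [lb.pick() for _ in range(10)]
    print(picks.count("azure/gpt-4o"), picks.count("openai/gpt-4o"))  # 8 2
```

With an 80/20 split the sequence repeats every five picks (Azure, Azure, OpenAI, Azure, Azure), so the ratio holds over any short window rather than only on average.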

2. Latency-Based Routing

More sophisticated load balancers keep real-time stats (moving windows of response times) and route most requests to the fastest-responding endpoints, shifting dynamically as things change.

Pros: Reduces tail latency, adapts to traffic bursts or vendor slowdowns.

Cons: Needs ongoing monitoring and dynamic rule adjustment.
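Latency-based routing needs a per-target latency estimate that tracks recent behavior. The description above mentions moving windows; the sketch below uses an exponentially weighted moving average (EWMA) instead, a common alternative that keeps only one number per target. The class name and the 0.3 smoothing factor are hypothetical choices for illustration.

```python
import math


class EwmaLatencyRouter:
    """Send each request to the target with the lowest EWMA latency.

    alpha sets how quickly old samples fade (higher = reacts faster to slowdowns).
    Targets with no samples yet are tried first so every target gets measured.
    """

    def __init__(self, targets: list[str], alpha: float = 0.3) -> None:
        if not targets:
            raise ValueError("need at least one target")
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.ewma_ms: dict[str, float] = {t: math.nan for t in targets}

    def record(self, target: str, latency_ms: float) -> None:
        """Fold one observed latency (e.g. time to first token) into the average."""
        prev = self.ewma_ms[target]
        if math.isnan(prev):
            self.ewma_ms[target] = latency_ms
        else:
            self.ewma_ms[target] = self.alpha * latency_ms + (1 - self.alpha) * prev

    def pick(self) -> str:
        unmeasured = [t for t, v in self.ewma_ms.items() if math.isnan(v)]
        if unmeasured:
            return unmeasured[0]
        return min(self.ewma_ms, key=self.ewma_ms.__getitem__)
```

For example, after recording 900 ms for openai/gpt-4o and 400 ms for azure/gpt-4o, pick() returns azure/gpt-4o; a single 2,500 ms response from Azure raises its average to 0.3 × 2500 + 0.7 × 400 = 1030 ms, and traffic moves to OpenAI. Because an average only updates when traffic flows, a real router would also need to re-probe targets it is not currently using.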

3. Cost-Aware Routing

Here, requests are pre-classified (either automatically or via hints) as “simple/completable by small model” or “needs heavyweight reasoning.” Traffic is steered accordingly—maximizing use of cost-efficient resources.

Pros: Big savings on token spend.

Cons: Requires reliable prompt classification logic.
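As a minimal illustration, the sketch below classifies prompts with crude length and keyword heuristics and lets an explicit caller hint override the guess. The cue words, the 60-word cutoff, and the model names are hypothetical placeholders; in practice you would likely replace classify_prompt with a small trained classifier and measure its error rate, since misrouted complex prompts cost quality.

```python
from typing import Optional

# Hypothetical cue words suggesting multi-step reasoning; tune for your own traffic.
REASONING_HINTS = ("step by step", "prove", "derive", "analyze", "compare", "tradeoff", "trade-off")


def classify_prompt(prompt: str, max_simple_words: int = 60) -> str:
    """Return "simple" or "complex" using crude keyword and length heuristics."""
    text = prompt.lower()
    if len(text.split()) > max_simple_words:
        return "complex"
    if any(hint in text for hint in REASONING_HINTS):
        return "complex"
    return "simple"


def route_by_cost(prompt: str, routes: dict[str, str], hint: Optional[str] = None) -> str:
    """Map a prompt to a model name; an explicit caller hint ("simple"/"complex") wins."""
    tier = hint if hint in routes else classify_prompt(prompt)
    return routes[tier]


if __name__ == "__main__":
    routes = {"simple": "small-model", "complex": "large-model"}  # hypothetical names
    print(route_by_cost("What is the capital of France?", routes))  # small-model
    print(route_by_cost("Compare these two vendor contracts", routes))  # large-model
```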

4. Health-Aware Routing

All models are continuously monitored for error rates (timeouts, 429, 5xx errors). If a target exceeds a defined error threshold, it’s removed from the pool for a set cooldown, then automatically restored.

Pros: Highly resilient; prevents cascading failures.

Cons: May need tuning to avoid “flapping” (frequent ejection/restoration).
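Health-aware routing boils down to a rolling failure count plus a cooldown timer. The hypothetical HealthTracker below is a minimal sketch of that idea. Its defaults reuse the numbers from the TrueFoundry failure_tolerance example later in this guide (3 failures per minute, 5-minute cooldown, status codes 429 and 5xx), but the exact semantics, such as ejecting on more than 3 failures in a rolling 60-second window, are assumptions rather than a description of TrueFoundry’s implementation. The clock is injectable so the logic can be tested without waiting.

```python
import time
from collections import deque
from typing import Callable


class HealthTracker:
    """Eject a target when failures in the last 60 s exceed a threshold, then
    restore it automatically once the cooldown has elapsed."""

    def __init__(
        self,
        allowed_failures_per_minute: int = 3,
        cooldown_seconds: float = 300.0,
        failure_status_codes: frozenset = frozenset({429, 500, 502, 503, 504}),
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.allowed = allowed_failures_per_minute
        self.cooldown = cooldown_seconds
        self.codes = failure_status_codes
        self.clock = clock
        self.failures: dict[str, deque] = {}  # target -> timestamps of recent failures
        self.ejected_until: dict[str, float] = {}

    def record(self, target: str, status_code: int) -> None:
        if status_code not in self.codes:
            return  # only the listed status codes count as failures
        now = self.clock()
        window = self.failures.setdefault(target, deque())
        window.append(now)
        while window and window[0] <= now - 60.0:
            window.popleft()  # drop failures older than one minute
        if len(window) > self.allowed:
            self.ejected_until[target] = now + self.cooldown
            window.clear()  # start fresh once the target returns

    def is_healthy(self, target: str) -> bool:
        until = self.ejected_until.get(target)
        if until is None:
            return True
        if self.clock() >= until:
            del self.ejected_until[target]  # cooldown over: restore to the pool
            return True
        return False

    def healthy(self, targets: list[str]) -> list[str]:
        return [t for t in targets if self.is_healthy(t)]
```

To reduce flapping, you could require a successful probe request (or several consecutive successes) before restoring a target, instead of restoring it the moment the cooldown ends.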

5. Cascade (Multi-Step) Routing

Runs a request on a cheap model first and promotes it to a strong model only if confidence is low or the output is unsatisfactory. This saves cost on “easy” queries, but escalated queries pay for both calls and incur the added latency of the first attempt.
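A cascade is simple to express once you have some confidence signal for the cheap model’s answer. What that signal is (token log-probabilities, a verifier model, a self-rating) is application-specific, so the sketch below simply assumes each model call returns a confidence score in [0, 1]. The Completion type, the 0.7 threshold, and the stub models are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Completion:
    text: str
    confidence: float  # assumed in [0, 1]; how it is produced is app-specific


def cascade(
    prompt: str,
    cheap: Callable[[str], Completion],
    strong: Callable[[str], Completion],
    min_confidence: float = 0.7,
) -> tuple[str, Completion]:
    """Try the cheap model first; escalate to the strong model only on low confidence.

    Returns which tier produced the final answer along with the answer itself.
    """
    first = cheap(prompt)
    if first.confidence >= min_confidence:
        return "cheap", first
    return "strong", strong(prompt)  # escalated: both calls are paid for


if __name__ == "__main__":
    # Stub models standing in for real API calls.
    def cheap_model(prompt: str) -> Completion:
        return Completion("4", 0.95 if "2+2" in prompt else 0.3)

    def strong_model(prompt: str) -> Completion:
        return Completion("A detailed plan...", 0.9)

    print(cascade("What is 2+2?", cheap_model, strong_model)[0])  # cheap
    print(cascade("Draft a market-entry strategy", cheap_model, strong_model)[0])  # strong
```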

6. Autoscaling-Integrated Balancing

Combined with compute orchestrators, the balancer tracks both request queuing and model/GPU utilization, autoscaling endpoints up or down as needed.
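One common way to turn queue and utilization signals into a replica count is the proportional rule used by the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current × observed / target), skipped when observed/target is within a tolerance (10% by default) of 1.0, with the largest recommendation across metrics winning. The sketch below applies that rule to queued requests per replica and GPU utilization; the function name and target values (4 queued requests per replica, 70% GPU utilization) are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    queued_requests: int,
    gpu_utilization: float,
    target_queue_per_replica: float = 4.0,
    target_gpu_utilization: float = 0.7,
    min_replicas: int = 1,
    max_replicas: int = 20,
    tolerance: float = 0.1,
) -> int:
    """Combine a queue-depth signal and a GPU-utilization signal into one replica count."""
    current = max(current_replicas, 1)

    def proportional(observed: float, target: float) -> int:
        ratio = observed / target
        if abs(ratio - 1.0) <= tolerance:
            return current  # close enough to target: do not scale (avoids flapping)
        return math.ceil(current * ratio)

    by_queue = proportional(queued_requests / current, target_queue_per_replica)
    by_gpu = proportional(gpu_utilization, target_gpu_utilization)
    # The larger recommendation wins, clamped to the configured bounds.
    return max(min_replicas, min(max_replicas, max(by_queue, by_gpu)))
```

For example, desired_replicas(4, queued_requests=48, gpu_utilization=0.7) returns 12: each replica has 12 queued requests against a target of 4 (a ratio of 3), while GPU utilization sits exactly on target.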

Simplifying Multi-LLM Load Balancing with TrueFoundry

TrueFoundry offers robust LLM load balancing as part of its AI Gateway. This feature lets teams deploy, manage, and optimize multiple LLMs and endpoints with production-grade reliability, performance, and cost control. Here’s a step-by-step guide covering the fundamentals of TrueFoundry’s load balancing, the strategies it supports, and explicit instructions, backed by YAML configuration examples, on how to implement and manage these features.

What Is TrueFoundry’s LLM Load Balancing Product?

TrueFoundry AI Gateway acts as a “smart router” for LLM inference traffic. It automatically distributes incoming requests across your configured set of LLM endpoints (for example, OpenAI, Azure OpenAI, Anthropic, self-hosted Llama, etc.) to achieve four main goals:

- High availability: Automatic failover and traffic rerouting if an endpoint is unhealthy or rate-limited.

- Low latency: Minimizes user wait time by choosing the optimal endpoint.

- Cost efficiency: Enforces rate and budget limits, directs simpler prompts to cheaper models.

- Operational simplicity: All rules and policies are defined declaratively “as code,” making production management auditable and fast.

Key Product Features include:

- Weighted and latency-based routing strategies.

- Environment-, user-, and team-aware custom routing.

- Usage, rate, and failure limits per model.

- Support for custom model parameters per endpoint.

- Observability and analytics for every routed request.

Strategies Supported by TrueFoundry

TrueFoundry’s load balancer primarily supports two strategies for distributing inference requests:

Weight-Based Routing

You set what percentage of traffic each model (or version) receives. This is ideal for canary rollouts, A/B testing, or splitting traffic between similar endpoints.

Latency-Based Routing

The system dynamically routes new requests to the models serving responses the fastest, ensuring consistent low-latency experiences even as endpoint performance fluctuates.

Additional Capabilities

- Environment/metadata-based routing: For example, send “production” traffic to one pool and “staging” traffic to another.

- Usage and failure limits: Automatically eject model endpoints that exceed error thresholds or rate limits, pausing them for a configurable cooldown period.

- Override params per target: Adjust model generation parameters like temperature, max_tokens, etc., on a per-endpoint basis.

Implementing LLM Load Balancing

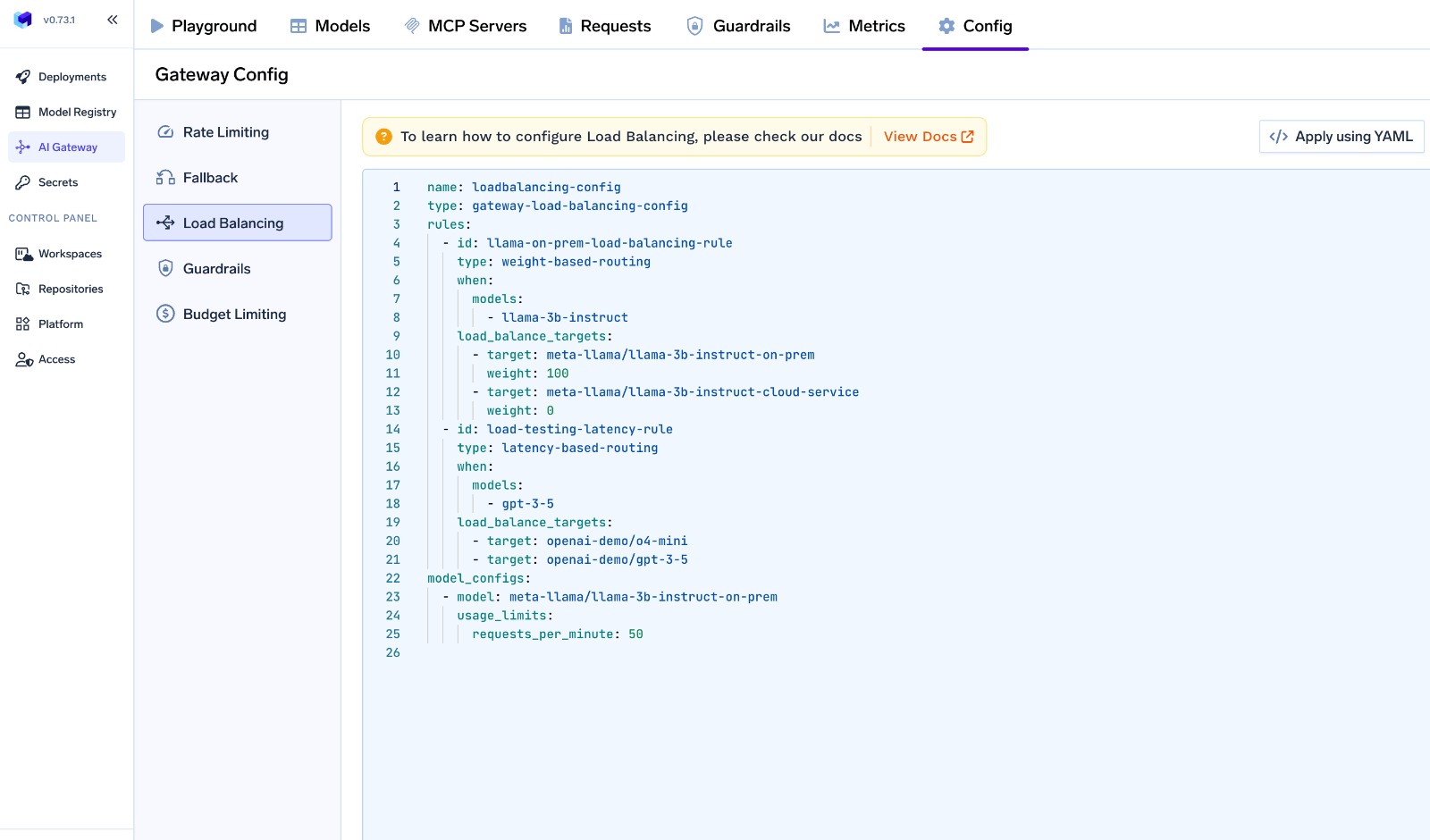

All configuration in TrueFoundry is managed via a gateway-load-balancing-config YAML file. This file specifies your models, rules, constraints, and targets in a transparent, version-controlled manner.

Key YAML Structure

- name: Identifier for the config (for logging and versioning)

- type: Set to gateway-load-balancing-config

- model_configs: Specifies usage limits and failure tolerance per model

- rules: Implements actual traffic distribution logic (by weights, latency, or custom metadata)

Step 1: Structure Your YAML

Here’s a template you can adapt:

```yaml
name: prod-load-balancer
type: gateway-load-balancing-config

model_configs:
  # Model-specific constraints (rate, failover, etc.)
  - model: azure/gpt-4o
    usage_limits:
      tokens_per_minute: 50_000
      requests_per_minute: 100
    failure_tolerance:
      allowed_failures_per_minute: 3
      cooldown_period_minutes: 5
      failure_status_codes: [429, 500, 502, 503, 504]
  - model: openai/gpt-4o

rules:
  # Weighted traffic split (canary rollout)
  - id: rollout
    type: weight-based-routing
    when:
      models: ["gpt-4o"]
      metadata: { environment: "production" }
    load_balance_targets:
      - target: azure/gpt-4o
        weight: 90
      - target: openai/gpt-4o
        weight: 10

  # Latency-based routing for another model
  - id: latency-strat
    type: latency-based-routing
    when:
      models: ["claude-3"]
      metadata: { environment: "production" }
    load_balance_targets:
      - target: anthropic/claude-3-opus
      - target: anthropic/claude-3-sonnet
```

Step 2: Add Fine-Grained Controls

Usage and Failure Limits:

You can set strict cost guards and resilience policies directly:

```yaml
model_configs:
  - model: azure/gpt4
    usage_limits:
      tokens_per_minute: 50000
      requests_per_minute: 100
    failure_tolerance:
      allowed_failures_per_minute: 3
      cooldown_period_minutes: 5
      failure_status_codes: [429, 500, 502, 503, 504]
```

If a model exceeds allowed_failures_per_minute (a failure being a response with one of the listed failure_status_codes), it is marked unhealthy and receives no requests until cooldown_period_minutes have passed.
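The usage_limits fields can be read as a per-model budget over a one-minute window. Below is a minimal, hypothetical sketch of such a limiter using a sliding 60-second log; it is not TrueFoundry’s implementation, and since output length is unknown when a request starts, it assumes the caller passes an up-front token estimate (for example, prompt tokens plus max_tokens).

```python
import time
from collections import deque
from typing import Callable


class MinuteUsageLimiter:
    """Sliding 60-second window over requests and tokens for a single model.

    A request is admitted only if it keeps both totals within their limits.
    """

    def __init__(self, tokens_per_minute: int, requests_per_minute: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.tpm = tokens_per_minute
        self.rpm = requests_per_minute
        self.clock = clock
        self.events: deque = deque()  # (timestamp, tokens) per admitted request
        self.tokens_in_window = 0

    def _evict(self, now: float) -> None:
        while self.events and self.events[0][0] <= now - 60.0:
            _, tokens = self.events.popleft()
            self.tokens_in_window -= tokens

    def try_acquire(self, tokens: int) -> bool:
        now = self.clock()
        self._evict(now)
        if len(self.events) + 1 > self.rpm or self.tokens_in_window + tokens > self.tpm:
            return False  # over budget: route to another target or queue the request
        self.events.append((now, tokens))
        self.tokens_in_window += tokens
        return True
```

With the limits above (50,000 tokens, 100 requests per minute), two requests estimated at 30,000 and 20,000 tokens are admitted, and any further request is refused until the first ones age out of the window.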

Metadata and Subject Routing:

For tenant-aware or environment-specific rules, use metadata and subject filters:

```yaml
rules:
  - id: prod-team-special
    type: weight-based-routing
    when:
      models: ["gpt-4o"]
      metadata: { environment: "production" }
      subjects: ["team:ml", "user:alice"]
    load_balance_targets:
      - target: azure/gpt-4o
        weight: 60
      - target: openai/gpt-4o
        weight: 40
```

This rule applies the 60/40 split only to production gpt-4o requests from the ML team or from user “alice”.

Override Model Parameters per Target:

You can customize model behavior per endpoint within your rules:

```yaml
- target: azure/gpt4
  weight: 80
  override_params:
    temperature: 0.5
    max_tokens: 800
```
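Conceptually, per-target overrides are a dictionary merge applied after a target has been chosen. The sketch below assumes that override_params take precedence over whatever the caller sent and that all other parameters pass through unchanged; that precedence is an assumption to verify against TrueFoundry’s documentation, and apply_overrides is a hypothetical helper.

```python
from typing import Any, Mapping, Optional


def apply_overrides(
    request_params: Mapping[str, Any],
    override_params: Optional[Mapping[str, Any]] = None,
) -> dict[str, Any]:
    """Return the generation parameters actually sent to the chosen target.

    Assumed precedence: per-target overrides replace caller values; every other
    key passes through unchanged. Neither input is mutated.
    """
    merged = dict(request_params)
    merged.update(override_params or {})
    return merged


if __name__ == "__main__":
    caller = {"temperature": 0.9, "max_tokens": 2000, "top_p": 1.0}
    print(apply_overrides(caller, {"temperature": 0.5, "max_tokens": 800}))
    # {'temperature': 0.5, 'max_tokens': 800, 'top_p': 1.0}
```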

Step 3: Deploy and Operate

Apply config: Use the CLI to deploy:

```shell
tfy apply -f my-load-balancer-config.yaml
```

Keeping this file in version control and applying it through the CLI means every change is versioned, reviewable, and auditable.

Monitor: All route decisions, failures, rate-limits, and load-distribution logs are available via TrueFoundry’s dashboard, with OpenTelemetry support for advanced analytics.

Example 1: Basic Weighted Rollout

```yaml
name: prod-gpt4-rollout
type: gateway-load-balancing-config

model_configs:
  - model: azure/gpt4
    usage_limits:
      tokens_per_minute: 50_000
      requests_per_minute: 100
    failure_tolerance:
      allowed_failures_per_minute: 3
      cooldown_period_minutes: 5
      failure_status_codes: [429, 500, 502, 503, 504]

rules:
  - id: gpt4-canary
    type: weight-based-routing
    when:
      models: ["gpt-4"]
      metadata: { environment: "production" }
    load_balance_targets:
      - target: azure/gpt4-v1
        weight: 90
      - target: azure/gpt4-v2
        weight: 10
```

What Happens Here:

Only production requests for gpt-4 match this rule: 90% of them go to azure/gpt4-v1 and 10% to the new candidate, azure/gpt4-v2. Rate and failure limits are enforced for the model listed under model_configs, and an unhealthy model is automatically ejected for 5 minutes if it records more than 3 failures per minute.

Example 2: Latency-Based Routing

```yaml
name: low-latency-routing
type: gateway-load-balancing-config

model_configs:
  - model: openai/gpt-4
    usage_limits:
      tokens_per_minute: 60_000

rules:
  - id: latency-routing
    type: latency-based-routing
    when:
      metadata: { environment: "production" }
      models: ["gpt-4"]
    load_balance_targets:
      - target: openai/gpt-4
      - target: azure/gpt-4
```

What Happens Here:

For each request, the Gateway checks recent response times for both targets and prefers the one performing better within a fairness band (such as "choose any target within 1.2× of the fastest average latency").
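A fairness band like the 1.2× example can be sketched in a few lines. In this hypothetical helper, any target whose average latency is within band times the fastest is eligible, and one is chosen uniformly at random; the uniform choice and the use of a plain average are assumptions, not documented TrueFoundry behavior. Spreading traffic across near-equal targets keeps the single fastest endpoint from absorbing every request and then slowing down under the extra load.

```python
import random
from typing import Optional


def pick_within_band(
    avg_latency_ms: dict[str, float],
    band: float = 1.2,
    rng: Optional[random.Random] = None,
) -> str:
    """Choose uniformly among targets whose average latency is within band x the fastest."""
    if not avg_latency_ms:
        raise ValueError("no targets")
    fastest = min(avg_latency_ms.values())
    # sorted() keeps the candidate order deterministic for a seeded rng
    eligible = sorted(t for t, ms in avg_latency_ms.items() if ms <= fastest * band)
    return (rng or random).choice(eligible)
```

With averages of 800 ms and 900 ms, both targets are eligible (900 ≤ 960); if Azure’s average rises to 1,000 ms, only OpenAI remains.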

Example 3: Using Metadata and Subject-Based Routing

For advanced multi-tenant or environment-specific use-cases, leverage the metadata and subjects fields.

```yaml
rules:
  - id: prod-weighted
    type: weight-based-routing
    when:
      models: ["gpt-4"]
      metadata: { environment: "production" }
      subjects: ["team:product", "user:jane.doe"]
    load_balance_targets:
      - target: azure/gpt4
        weight: 60
      - target: openai/gpt4
        weight: 40
```

What Happens Here:

Only requests originating from the "product" team or user "jane.doe", and tagged as production, will be routed by this rule.
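The matching logic described above can be modeled as a conjunction of conditions: the requested model must be listed, every metadata key must match, and at least one of the caller’s subjects must appear in subjects. The sketch below encodes that reading. The data classes, the treatment of an empty subjects list as “anyone”, and first-match-wins rule ordering are all assumptions for illustration, not TrueFoundry’s documented semantics.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Rule:
    id: str
    models: list[str]
    metadata: dict[str, str] = field(default_factory=dict)
    subjects: list[str] = field(default_factory=list)  # assumed: empty means any caller


@dataclass
class Request:
    model: str
    subjects: set[str]  # caller identities, e.g. {"user:jane.doe", "team:product"}
    metadata: dict[str, str] = field(default_factory=dict)


def rule_matches(rule: Rule, req: Request) -> bool:
    """Every condition must hold; within `subjects`, any single match is enough."""
    if req.model not in rule.models:
        return False
    if any(req.metadata.get(key) != value for key, value in rule.metadata.items()):
        return False
    return not rule.subjects or bool(req.subjects.intersection(rule.subjects))


def first_matching_rule(rules: list[Rule], req: Request) -> Optional[Rule]:
    """Assumed ordering: rules are checked top to bottom and the first match wins."""
    return next((rule for rule in rules if rule_matches(rule, req)), None)


if __name__ == "__main__":
    rule = Rule("prod-weighted", ["gpt-4"], {"environment": "production"},
                ["team:product", "user:jane.doe"])
    print(rule_matches(rule, Request("gpt-4", {"user:jane.doe"}, {"environment": "production"})))  # True
    print(rule_matches(rule, Request("gpt-4", {"user:jane.doe"}, {"environment": "staging"})))  # False
```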

Example 4: End-to-End Example Combining Multiple Strategies

```yaml
name: full-enterprise-llm-config
type: gateway-load-balancing-config

model_configs:
  - model: azure/gpt-4
    usage_limits:
      tokens_per_minute: 70_000
      requests_per_minute: 150
    failure_tolerance:
      allowed_failures_per_minute: 4
      cooldown_period_minutes: 4
      failure_status_codes: [429, 500, 502, 503, 504]
  - model: anthropic/claude-3
    usage_limits:
      tokens_per_minute: 35_000

rules:
  - id: prod-latency-claude
    type: latency-based-routing
    when:
      models: ["claude-3"]
      metadata: { environment: "production" }
    load_balance_targets:
      - target: anthropic/claude-3-opus
      - target: anthropic/claude-3-sonnet

  - id: cost-path-gpt4
    type: weight-based-routing
    when:
      models: ["gpt-4"]
      metadata: { environment: "staging" }
    load_balance_targets:
      - target: azure/gpt-4
        weight: 60
      - target: openai/gpt-4
        weight: 40
```

What Happens Here:

Sets usage limits and smart routing for Azure GPT-4 and Anthropic Claude-3 models. Caps tokens and requests per minute, auto-pauses Azure GPT-4 on repeated failures, and routes "claude-3" production traffic to the fastest Anthropic endpoint. Meanwhile, "gpt-4" staging traffic splits 60% to Azure and 40% to OpenAI.

Operational Guidance & Best Practices

- Start with basic rules (simple weight-based splits), then add latency- and cost-based logic as traffic matures.

- Always define usage and failure limits per endpoint to avoid runaway costs or cascading failures.

- Leverage metadata and subject filters to create granular routing for different teams, environments, or use-cases.

- Test changes in staging, and rely on pull requests for config review in production.

- Use observability data to continuously tune weights and thresholds in response to usage and model performance trends.

Beyond YAML: Observability & Monitoring

Every routing event, whether triggered by latency, weight, or failure logic, is logged and can be exported via OpenTelemetry for post-mortem debugging or cost allocation. Dashboards and logs trace:

- Model/target chosen

- Failure and recovery events

- Cost metrics (tokens, requests, error codes)

- Latency distribution per model

By using TrueFoundry’s AI Gateway, technical teams can build robust, fail-safe, and cost-effective multi-LLM deployments—all managed, versioned, and governed as code.

LLM Load Balancing in Production: Case Scenarios

1. Enterprise Copilot App

A Fortune 500 company builds a chat assistant. Most employee queries are simple (“find these files,” “summarize this article”). Only rarely are deep research or strategic questions asked. By using prompt complexity tagging and routing basic tasks to low-cost endpoints, the company cuts LLM spend by $70k/month. When OpenAI has a service interruption, Azure is auto-promoted, and users see no downtime.

2. AI Content Writing Platform

A SaaS product offers marketing copy generation to 10,000+ concurrent users every morning. TrueFoundry’s Gateway applies latency-based routing, continuously shifting traffic to whichever vendor (OpenAI or Azure) is faster at the moment and keeping tail latency low for real-time streaming.

3. ML Research Lab

A research lab is rolling out a fine-tuned version of Llama-3 for QA. Engineers use weighted round-robin to canary 5% of traffic to the new checkpoint for A/B testing, with all routing decisions and user feedback logged. After weeks of shadowing and metrics gathering, the load balancer automatically shifts the majority of traffic to the new checkpoint, with full rollback support if regressions are detected.

Conclusion

LLM load balancing is critical engineering infrastructure for every serious AI application. Whatever your cloud mix or LLM vendors, naive request routing leads to unpredictable latency, outages, and runaway bills. Production-grade load balancing combines classic algorithms (weighted, latency-based, cost-aware), session and caching best practices, robust failure detection, and automated scaling, with all logic expressed in a clear, auditable YAML configuration.

TrueFoundry’s AI Gateway provides these features out-of-the-box, letting teams ship robust products without worrying about vendor quirks, rate limits, or latency spikes. Modern observability and enterprise governance give you peace of mind as you scale from first prototype to high-traffic, multi-regional workloads.
