LlamaIndex vs LangGraph: Comparing LLM Frameworks

As enterprises and developers build more advanced LLM-powered applications, two frameworks frequently come up in conversations: LlamaIndex and LangGraph. Both aim to simplify the complexity of working with large language models, but they tackle very different challenges.

LlamaIndex is primarily focused on data integration and retrieval-augmented generation (RAG), making it easier to connect LLMs with private or enterprise data sources. It provides indexing, querying, and retrieval pipelines that allow models to access the right context at the right time.

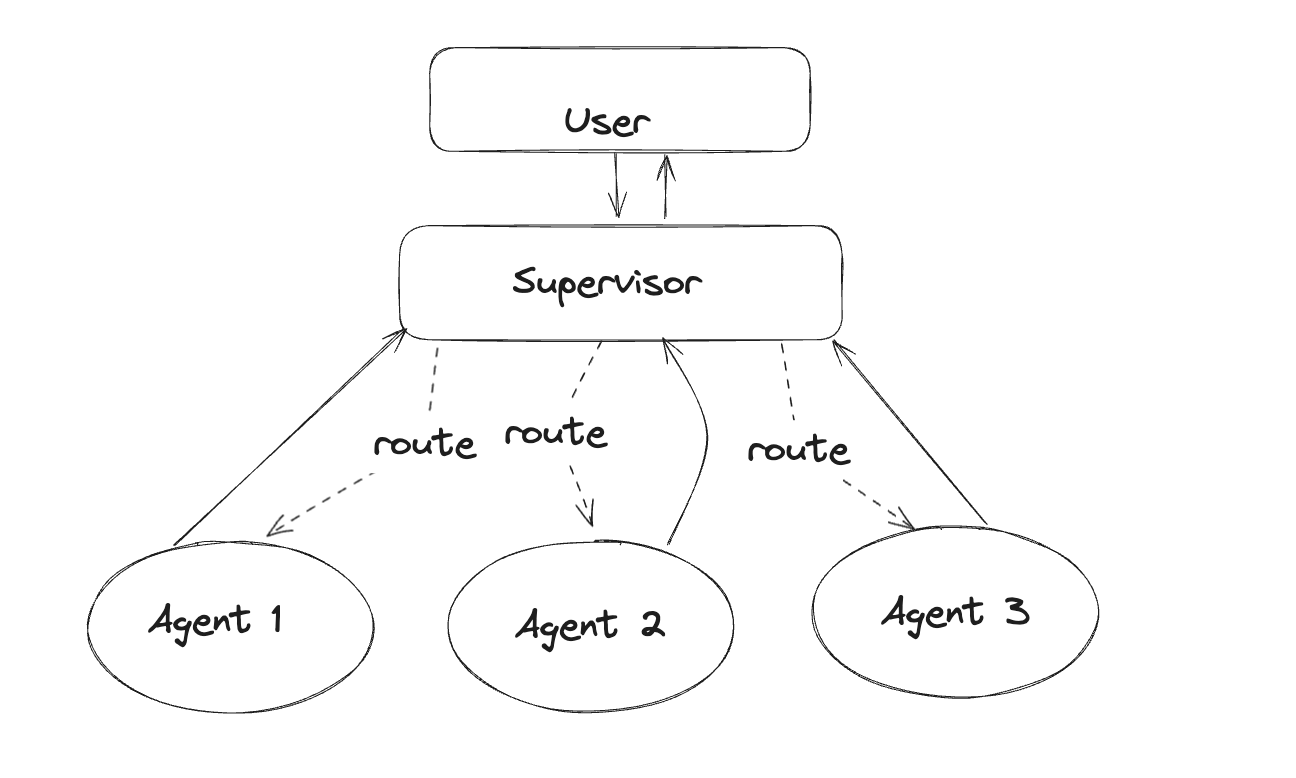

LangGraph, on the other hand, is designed for stateful workflow orchestration, where developers can build complex agent-based applications using a graph structure. It emphasizes loops, retries, branching, and multi-agent collaboration—features needed to move beyond simple prompt chaining into production-ready AI systems.

In this comparison, we’ll explore how LlamaIndex and LangGraph complement each other, where they differ, and which framework is better suited for your specific LLM development needs.

What Is LlamaIndex?

LlamaIndex is an open-source framework designed to help developers connect large language models (LLMs) to their own data in a structured, efficient way. Instead of relying only on what a model knows from its training, LlamaIndex makes it easy to give the model access to up-to-date, domain-specific information so it can answer questions more accurately and contextually.

It provides tools for ingesting, indexing, and querying data from multiple sources, including PDFs, databases, APIs, websites, and other document repositories. Once ingested, the data is split into chunks, transformed into embeddings, and stored in an index that is searched at query time so the most relevant chunks can be passed to the LLM as context.
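To make the ingest, index, and query cycle concrete, here is a deliberately tiny sketch in plain Python. It does not use LlamaIndex, whose `VectorStoreIndex` handles this step in practice. Word counts stand in for real embeddings, and the function names `embed`, `build_index`, and `query`, along with the sample documents, are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts, standing in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def build_index(documents: dict[str, str]) -> list[tuple[str, Counter]]:
    """Ingest: embed each document once and keep (doc_id, vector) pairs."""
    return [(doc_id, embed(text)) for doc_id, text in documents.items()]

def query(index: list[tuple[str, Counter]], question: str, top_k: int = 2) -> list[str]:
    """Query: embed the question and return the ids of the top_k most similar documents."""
    q = embed(question)
    ranked = sorted(index, key=lambda entry: cosine(q, entry[1]), reverse=True)
    return [doc_id for doc_id, _ in ranked[:top_k]]

docs = {
    "refunds.pdf": "Refunds are issued within 14 days of a returned order.",
    "shipping.csv": "Standard shipping takes 3 to 5 business days.",
    "warranty.html": "The warranty covers manufacturing defects for two years.",
}
index = build_index(docs)
print(query(index, "How many days until refunds are issued?", top_k=1))  # ['refunds.pdf']
```

A real pipeline would swap `embed` for an embedding model and the list for a vector store, but the shape stays the same: embed once at ingestion, embed the question at query time, and rank by similarity.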

The framework is modular, meaning you can choose exactly how your data is processed and retrieved. For example, you can use vector databases like Pinecone or Weaviate for storage, customize chunking strategies for better retrieval, and define query pipelines to match your application’s needs.
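Chunking is one of the knobs described above. As a rough, library-independent illustration, the sketch below splits text into fixed-size word windows that overlap, so the last few words of each chunk also begin the next one and text near a boundary appears in both neighbors. The `chunk_words` function, its `chunk_size` and `overlap` parameters, and their arbitrary defaults are assumptions for illustration, not LlamaIndex's splitter API.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into windows of chunk_size words; consecutive windows share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks

print(chunk_words("one two three four five six seven eight", chunk_size=4, overlap=1))
# ['one two three four', 'four five six seven', 'seven eight']
```

Larger overlap generally makes it more likely that related sentences land in the same chunk, at the cost of storing and embedding more redundant text.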

LlamaIndex is particularly popular in Retrieval-Augmented Generation (RAG) workflows. In these setups, the model retrieves relevant context from your indexed data before generating an answer. This reduces hallucinations, improves factual accuracy, and makes the AI more useful for real-world tasks such as customer support, research, compliance checks, and internal knowledge management.
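The retrieve-then-generate order can be sketched as prompt construction: find the most relevant chunks first, then place them in the prompt so the model answers from them. In this plain-Python sketch, word overlap stands in for vector search, the LLM call itself is left out, and `retrieve`, `build_prompt`, and `PROMPT_TEMPLATE` are hypothetical names.

```python
import re

def tokens(text: str) -> set[str]:
    """Lowercased word set used for a crude relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Rank chunks by how many question words they share (a stand-in for vector search)."""
    q = tokens(question)
    ranked = sorted(chunks, key=lambda c: len(q & tokens(c)), reverse=True)
    return [c for c in ranked[:top_k] if q & tokens(c)]  # drop chunks with no overlap at all

PROMPT_TEMPLATE = (
    "Answer using only the context below. If the answer is not there, say you don't know.\n"
    "Context:\n{context}\n\nQuestion: {question}\nAnswer:"
)

def build_prompt(question: str, chunks: list[str], top_k: int = 2) -> str:
    """Retrieve first, then inject the retrieved chunks into the prompt sent to the LLM."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, top_k))
    return PROMPT_TEMPLATE.format(context=context, question=question)

knowledge = [
    "The Pro plan includes 24/7 phone support.",
    "The Basic plan includes email support only.",
    "Invoices are sent on the first day of each month.",
]
print(build_prompt("Does the Basic plan include phone support?", knowledge))
```

The instruction to answer only from the supplied context is what lets retrieval curb hallucinations: the model is steered toward the retrieved text instead of its training data.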

With built-in integrations, flexible APIs, and support for both simple and complex retrieval pipelines, LlamaIndex has become a go-to choice for developers looking to bridge the gap between powerful LLMs and private, structured datasets.

What Is LangGraph?

LangGraph is a framework that allows developers to build AI applications where the flow of execution is explicitly defined and state is maintained across multiple steps. Unlike a simple prompt-response setup, LangGraph makes it possible to create structured workflows that adapt dynamically based on the outcome of each step.

It uses a graph-based architecture where nodes represent actions or decisions, and edges define how the application moves between them. This design makes it easier to manage complex, non-linear processes that may involve loops, branching paths, or revisiting earlier steps. Each step in a LangGraph workflow can run an LLM call, trigger an external tool, or carry out a custom function.
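The node-and-edge model can be illustrated with a small, stand-alone runner. This is not LangGraph's API (LangGraph itself provides `StateGraph` with `add_node`, `add_edge`, and `add_conditional_edges`). It is a hypothetical sketch in which each node returns state updates, a fixed edge names the next node, and a conditional edge is a function that picks the next node from the current state. That is enough to express a draft-and-review loop.

```python
from typing import Callable, Union

END = "__end__"
State = dict
Node = Callable[[State], State]
Edge = Union[str, Callable[[State], str]]  # a fixed next node, or a router that picks one

def run_graph(nodes: dict[str, Node], edges: dict[str, Edge], start: str,
              state: State, max_steps: int = 20) -> tuple[State, list[str]]:
    """Execute nodes from `start` until END, merging each node's updates into the state."""
    trace: list[str] = []
    current = start
    while current != END:
        if len(trace) >= max_steps:
            raise RuntimeError("max_steps exceeded; the graph may be looping forever")
        trace.append(current)
        state = {**state, **nodes[current](state)}
        edge = edges[current]
        current = edge(state) if callable(edge) else edge
    return state, trace

def draft(state: State) -> State:
    # Stand-in for an LLM call: each revision raises the draft's quality score.
    return {"revision": state["revision"] + 1, "quality": state["quality"] + 0.3}

def review(state: State) -> State:
    return {"approved": state["quality"] >= 0.8}

def after_review(state: State) -> str:
    # Conditional edge: loop back to "draft" until the review approves.
    return END if state["approved"] else "draft"

final, trace = run_graph(
    nodes={"draft": draft, "review": review},
    edges={"draft": "review", "review": after_review},
    start="draft",
    state={"revision": 0, "quality": 0.0},
)
print(final["revision"], trace)
# 3 ['draft', 'review', 'draft', 'review', 'draft', 'review']
```

The `max_steps` guard is worth keeping in any looping workflow, since a router that never approves would otherwise run forever. The returned `trace` records every node visited, the kind of step-by-step visibility that helps when debugging.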

One of LangGraph’s key strengths is state persistence. It can remember past interactions, variables, and decisions, even across long-running sessions. This makes it suitable for use cases like multi-turn assistants, investigative research agents, or guided troubleshooting systems that require continuity.
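State persistence comes down to saving a snapshot of the workflow state after each step and reloading it, keyed by a conversation or thread identifier, when the next input arrives. LangGraph offers this through checkpointers keyed by a thread ID. The sketch below only imitates the idea with a hypothetical `InMemoryCheckpointer` class and a stubbed assistant reply in place of a real LLM call; a production setup would write snapshots to a database rather than a Python dictionary.

```python
import json

class InMemoryCheckpointer:
    """Hypothetical helper: stores a JSON snapshot of each thread's state after every turn."""

    def __init__(self) -> None:
        self._snapshots: dict[str, list[str]] = {}

    def save(self, thread_id: str, state: dict) -> None:
        self._snapshots.setdefault(thread_id, []).append(json.dumps(state))

    def latest(self, thread_id: str) -> dict:
        history = self._snapshots.get(thread_id)
        return json.loads(history[-1]) if history else {"messages": []}

    def history(self, thread_id: str) -> list[dict]:
        return [json.loads(s) for s in self._snapshots.get(thread_id, [])]

def chat_turn(checkpointer: InMemoryCheckpointer, thread_id: str, user_message: str) -> dict:
    """One turn of a multi-turn assistant: load state, update it, checkpoint it."""
    state = checkpointer.latest(thread_id)
    state["messages"].append({"role": "user", "content": user_message})
    # Stand-in for an LLM reply that can see the whole conversation so far.
    user_turns = sum(1 for m in state["messages"] if m["role"] == "user")
    state["messages"].append({"role": "assistant", "content": f"Turn {user_turns} noted."})
    checkpointer.save(thread_id, state)
    return state

cp = InMemoryCheckpointer()
chat_turn(cp, "ticket-42", "My router keeps rebooting.")
state = chat_turn(cp, "ticket-42", "I already updated the firmware.")
print(len(state["messages"]))                 # 4
print(cp.latest("other-thread")["messages"])  # []
```

Keeping every snapshot, not just the latest, is what makes it possible to inspect or replay how a long-running session reached its current state.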

LangGraph also supports event-driven execution, meaning it can react to external triggers or user input at any stage of the workflow. This opens up possibilities for applications where the AI needs to respond in real time or pause for human review before continuing.
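Pausing for human review means the workflow must stop at a known point, hand a pending request to a person, and later continue with their decision. A Python generator is a convenient way to sketch that pause-and-resume behavior. LangGraph has its own interrupt mechanism; the refund scenario, the `auto_limit` threshold, and the `start` and `resume` helpers here are hypothetical.

```python
from typing import Generator

def refund_workflow(amount: float, auto_limit: float = 100.0) -> Generator[dict, bool, str]:
    """Runs until human approval is needed, yields a review request, then resumes with the decision."""
    if amount <= auto_limit:
        return f"refund of {amount:.2f} issued automatically"
    approved = yield {"needs_review": True, "action": "refund", "amount": amount}
    return f"refund of {amount:.2f} {'issued' if approved else 'rejected'} after human review"

def start(workflow):
    """Advance to the first pause point; returns (pending_request, None) or (None, final_result)."""
    try:
        return next(workflow), None
    except StopIteration as done:
        return None, done.value

def resume(workflow, decision: bool) -> str:
    """Deliver the reviewer's decision and run the workflow to completion."""
    try:
        workflow.send(decision)
    except StopIteration as done:
        return done.value
    raise RuntimeError("workflow paused again unexpectedly")

print(start(refund_workflow(40.0)))  # (None, 'refund of 40.00 issued automatically')

large = refund_workflow(500.0)
request, _ = start(large)
print(request)  # {'needs_review': True, 'action': 'refund', 'amount': 500.0}
print(resume(large, decision=True))  # refund of 500.00 issued after human review
```

Between `start` and `resume` the workflow simply waits, so the reviewer can take as long as needed. A durable system would also checkpoint the paused state so the pause survives a process restart.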

For developers, LangGraph provides better control over how AI systems behave and a clearer way to debug them. By making every decision and state change visible, it allows for more predictable, maintainable, and transparent AI applications.

LlamaIndex vs LangGraph

LlamaIndex is focused on solving one key challenge: connecting large language models to the right information at the right time. It provides the tools to gather data from multiple sources, index it efficiently, and query it in a way that improves accuracy. It is purpose-built for retrieval-augmented generation workflows, making it ideal when your primary need is giving an LLM access to structured, private, or domain-specific knowledge. With flexible data connectors, multiple storage backends, and customizable query pipelines, it streamlines the process of building search-augmented AI applications.

LangGraph, in contrast, is all about designing the logic and control flow of AI applications. It excels in situations where the process is not strictly linear and may involve branching, looping, or re-running earlier steps. Its graph-based execution model allows you to map out exactly how the AI should move between actions, tools, and decision points. This makes it a strong choice for building adaptive, long-running workflows where state persistence, decision visibility, and human-in-the-loop checkpoints are important.

When to Use LlamaIndex

LlamaIndex is the right choice when your application needs to provide a large language model with accurate, up-to-date, and context-rich information from external sources. By itself, an LLM only knows what it was trained on, which may be outdated or incomplete. LlamaIndex bridges this gap by allowing you to connect the model to your own data.

If your project involves retrieval-augmented generation (RAG), LlamaIndex should be one of your first considerations. It makes it straightforward to pull data from documents, databases, APIs, or other repositories, process it into embeddings, and index it for efficient search. This ensures the LLM can retrieve relevant context before answering a query, improving factual accuracy and reducing hallucinations.

You should consider LlamaIndex when your workflow requires:

- Private or proprietary data access without retraining the model.

- Search across multiple formats such as PDFs, CSVs, websites, and cloud storage.

- Custom retrieval pipelines that can be tuned for your specific use case.

- Integration with vector databases like Pinecone, Weaviate, or Milvus.

It is especially valuable in use cases like:

- Internal knowledge bases where employees need precise, document-backed answers.

- Customer support systems that must respond based on product manuals or FAQs.

- Research assistants that combine public and proprietary information to produce reports.

- Compliance and auditing tools where accuracy and traceability are critical.

While LlamaIndex is excellent at providing relevant information to an LLM, it is not designed to control the entire decision-making process of a multi-step workflow. If your AI needs complex orchestration or persistent state management, pairing LlamaIndex with a tool like LangGraph can give you both high-quality context and robust workflow control.

When to Use LangGraph

LangGraph is best suited for AI applications where the path to a solution is not strictly linear and where decisions depend on changing conditions or multiple stages of reasoning. If your workflow involves branching, looping, or revisiting earlier steps based on new inputs, LangGraph gives you the structure to design and control that process.

One of its main advantages is state persistence. In many AI applications, the ability to remember context across steps is critical. LangGraph stores and carries forward state throughout a workflow, making it ideal for long-running tasks, multi-turn conversations, or processes that need to pause for human approval before continuing.

You should consider LangGraph when your workflow requires:

- Complex decision-making paths with multiple possible outcomes.

- Human-in-the-loop checkpoints to validate results or approve actions.

- Integration with external tools at different points in the process.

- Full visibility into execution flow for debugging and performance monitoring.
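Integrating external tools usually means that one step reads a requested tool call from the state, runs the matching function, and writes the result back so later steps can use it. The sketch below shows that dispatch pattern with a hypothetical registry of two stub tools. In a real application the tool choice and arguments would typically come from the LLM, and the tools would call external APIs.

```python
from typing import Callable

def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # stub; a real tool would call a weather API

def convert_currency(amount: float, rate: float) -> str:
    return f"{amount * rate:.2f}"

TOOLS: dict[str, Callable[..., str]] = {
    "get_weather": get_weather,
    "convert_currency": convert_currency,
}

def tool_node(state: dict) -> dict:
    """Run the tool call requested in the state and return updates for later steps."""
    call = state["tool_call"]  # e.g. {"name": ..., "args": {...}}, typically chosen by an LLM
    tool = TOOLS.get(call["name"])
    if tool is None:
        # Report instead of crashing so a later step (or a human) can decide what to do.
        return {"tool_result": None, "error": f"unknown tool: {call['name']}"}
    return {"tool_result": tool(**call["args"]), "error": None}

print(tool_node({"tool_call": {"name": "convert_currency", "args": {"amount": 10, "rate": 1.5}}}))
# {'tool_result': '15.00', 'error': None}
```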

LangGraph shines when you need predictable, transparent AI behavior that can adapt mid-process. While it is not built for managing large-scale data ingestion or retrieval like LlamaIndex, combining the two can be powerful. LlamaIndex can supply accurate, relevant data, while LangGraph ensures the workflow using that data runs efficiently and reliably.

LlamaIndex vs LangGraph – Which is Best?

Choosing between LlamaIndex and LangGraph depends on whether your priority is giving your AI access to the right information or controlling how it processes that information.

LlamaIndex is the better fit if your main challenge is data retrieval. It is designed to connect LLMs to private, structured, or domain-specific data and return relevant context at query time. This makes it ideal for RAG-based applications where the model’s accuracy relies on pulling the right information from multiple sources before generating a response.

LangGraph, on the other hand, is the stronger choice when your focus is on the structure, adaptability, and transparency of an AI workflow. It lets you map out every step, create branching or looping paths, and maintain state across long-running processes. This is especially useful in applications where decision-making changes based on context, human review is required, or tasks span multiple stages.

LlamaIndex ensures your AI knows what it needs to know, while LangGraph ensures it follows the right process to use that knowledge effectively. If your use case requires both accurate retrieval and controlled execution, the two can be combined, with LlamaIndex providing the data layer and LangGraph managing the workflow layer.
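The split between a data layer and a workflow layer can be sketched in a few lines: a retrieval step supplies context, and a conditional edge decides what happens next based on what was found. Everything here is a hypothetical stand-in (a keyword dictionary instead of a LlamaIndex index, and a bare loop instead of a LangGraph graph), intended only to show where each framework would sit.

```python
import re

KNOWLEDGE = {  # hypothetical stand-in for a LlamaIndex-backed index
    "reset": "Hold the reset button for 10 seconds to restore factory settings.",
    "firmware": "Firmware updates are available from the admin dashboard.",
}

def retrieve_node(state: dict) -> dict:
    # Data layer: look up context for the question (a real app would query an index here).
    words = set(re.findall(r"[a-z]+", state["question"].lower()))
    return {"context": [text for key, text in KNOWLEDGE.items() if key in words]}

def answer_node(state: dict) -> dict:
    return {"answer": "Based on the docs: " + " ".join(state["context"])}

def escalate_node(state: dict) -> dict:
    return {"answer": "No matching documentation; routed to a human agent."}

def route_after_retrieval(state: dict) -> str:
    # Workflow layer: branch on what retrieval produced.
    return "answer" if state["context"] else "escalate"

NODES = {"retrieve": retrieve_node, "answer": answer_node, "escalate": escalate_node}
EDGES = {"retrieve": route_after_retrieval, "answer": None, "escalate": None}  # None ends the run

def run(question: str) -> dict:
    state, current = {"question": question}, "retrieve"
    while current is not None:
        state.update(NODES[current](state))
        edge = EDGES[current]
        current = edge(state) if callable(edge) else edge
    return state

print(run("How do I reset the router?")["answer"])
print(run("Can I change the billing date?")["answer"])
```

Swapping `retrieve_node` for a real query engine changes only the data layer; the routing logic stays untouched, which is the practical benefit of keeping the two concerns separate.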

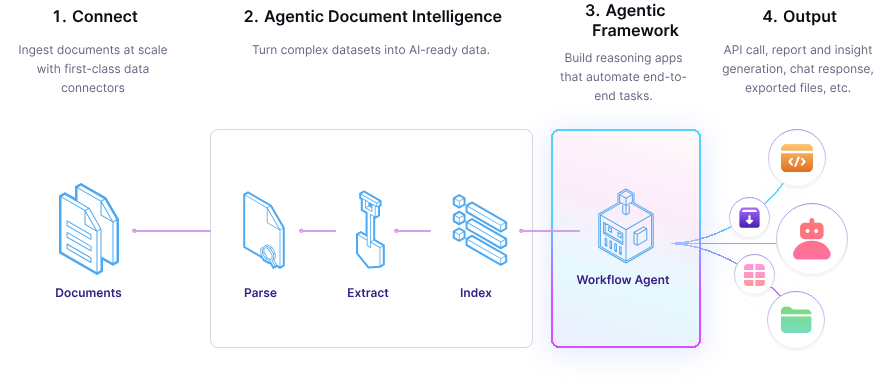

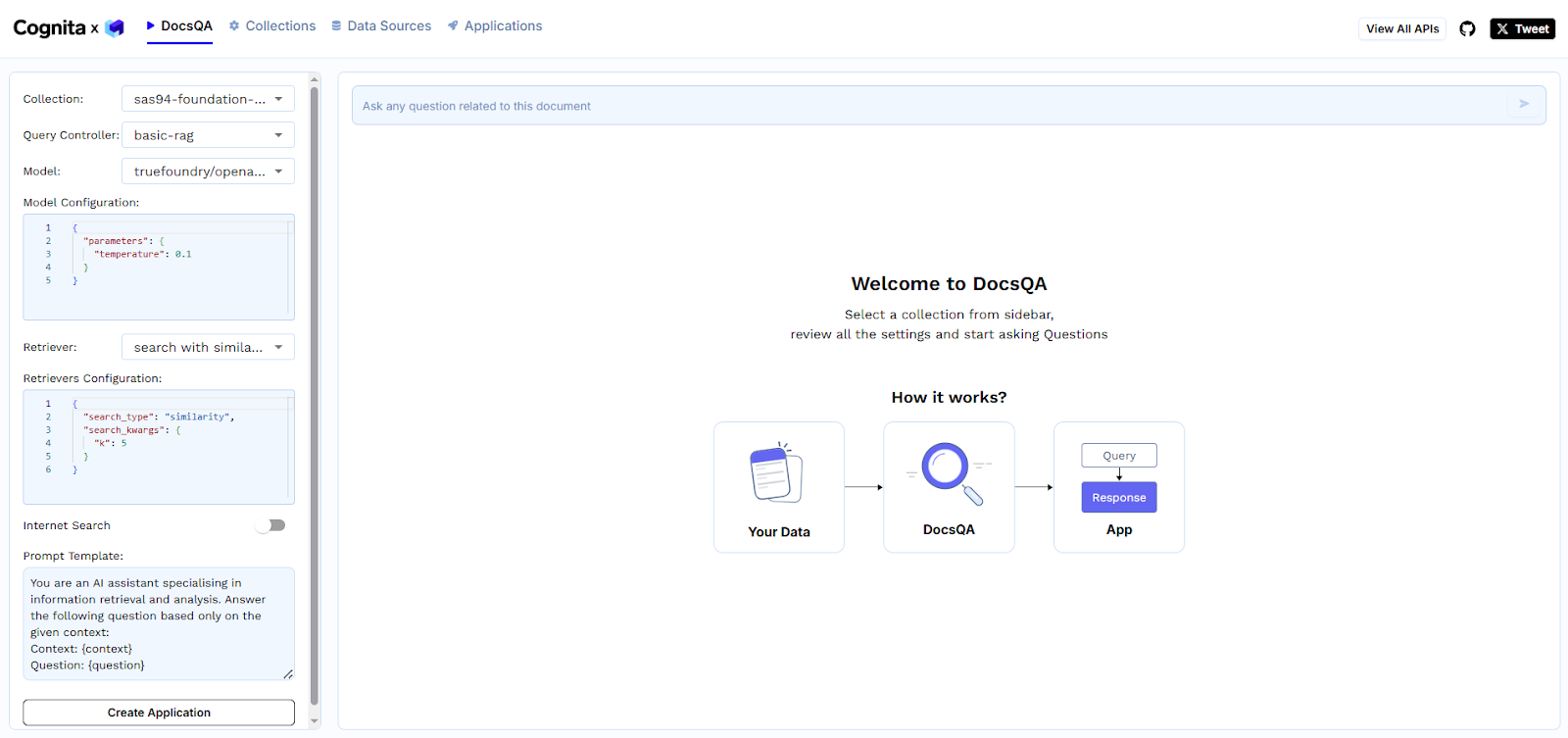

TrueFoundry Cognita – Enterprise RAG

TrueFoundry Cognita is an enterprise-ready framework for building and scaling retrieval-augmented generation (RAG) applications. It offers a modular, API-driven architecture with secure deployment in VPC, on-prem, or air-gapped environments, meets SOC 2, HIPAA, and GDPR compliance requirements, and supports autoscaling for large, concurrent workloads. Built-in observability and tracing help ensure accuracy, reliability, and auditability for mission-critical use cases.

Key features:

- Fully Modular – Swap parsers, loaders, embedders, and vector databases without code rewrites.

- Scalable & Reliable – Manage heavy traffic with autoscaling and concurrent query handling.

- Built-in Observability – Trace retrieval steps, monitor token usage, and debug with full transparency.

- Enterprise Security – Deploy in VPC, on-prem, or air-gapped setups with SOC 2, HIPAA, and GDPR compliance.

Building a RAG Application with Cognita

Creating an enterprise RAG app with Cognita is simple yet production-focused. Start by selecting your data sources, such as S3, local files, databases, or APIs, and parsers for formats like PDF or Markdown. Choose your embedding model and connect to a vector database such as Qdrant or Weaviate. Configure retriever parameters such as chunking, similarity search, and reranking, and design a prompt template that injects the retrieved context into the LLM's input. Apply runtime configurations for scaling, caching, and tracing, then deploy via API. Cognita orchestrates the entire flow, from retrieval to reranking to prompt execution, while maintaining enterprise security and performance.
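The retriever configuration step mentions similarity search followed by reranking. This article does not show Cognita's configuration format, so the sketch below is a generic, plain-Python illustration of that two-stage pattern rather than Cognita code: a cheap first-stage score selects candidates, and a stricter second-stage score reorders them before they are injected into the prompt. `RetrieverConfig`, `candidate_k`, and `rerank_top_n` are hypothetical names, and both scoring functions are stand-ins for an embedding search and a reranker model.

```python
import re
from dataclasses import dataclass

@dataclass
class RetrieverConfig:
    """Hypothetical retriever settings (illustrative names, not Cognita's schema)."""
    candidate_k: int = 3   # chunks returned by the first-stage similarity search
    rerank_top_n: int = 1  # chunks kept after reranking and passed to the prompt

def words(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def similarity(question: str, chunk: str) -> int:
    # First stage: cheap unigram overlap, standing in for vector similarity search.
    return len(set(words(question)) & set(words(chunk)))

def rerank_score(question: str, chunk: str) -> int:
    # Second stage: shared word pairs reward matching phrases, standing in for a reranker model.
    q, c = words(question), words(chunk)
    return len(set(zip(q, q[1:])) & set(zip(c, c[1:])))

def retrieve_and_rerank(question: str, chunks: list[str], cfg: RetrieverConfig) -> list[str]:
    candidates = sorted(chunks, key=lambda ch: similarity(question, ch), reverse=True)[: cfg.candidate_k]
    reranked = sorted(candidates, key=lambda ch: rerank_score(question, ch), reverse=True)
    return reranked[: cfg.rerank_top_n]

chunks = [
    "To change a password, you must know how the old password was set.",
    "Click the forgotten password link.",
    "Invoices are emailed monthly.",
]
question = "How to reset a forgotten password"
print(retrieve_and_rerank(question, chunks, RetrieverConfig(candidate_k=2)))
# ['Click the forgotten password link.']
```

Here the first stage alone would rank the keyword-heavy first chunk highest, while the phrase-aware second stage promotes the chunk that actually answers the question. That is the usual motivation for paying the extra cost of reranking only on a small candidate set.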

Why TrueFoundry Cognita is Better than LlamaIndex and LangGraph

Cognita goes beyond LlamaIndex’s retrieval capabilities and LangGraph’s workflow orchestration by combining both into an enterprise-ready RAG platform. It delivers secure, compliant deployments, scales seamlessly under heavy workloads, and offers end-to-end observability to debug and optimize pipelines. Unlike LlamaIndex, it includes infrastructure for running RAG at enterprise scale, and unlike LangGraph, it manages both the retrieval layer and execution environment in one integrated framework.

Conclusion

LlamaIndex and LangGraph serve different but complementary roles in AI development. LlamaIndex excels at connecting large language models to external, structured, and private data for accurate retrieval. LangGraph focuses on designing adaptive, stateful workflows that guide how AI processes information. Choosing between them depends on whether data access or workflow control is your priority. For enterprises aiming to combine both, TrueFoundry Cognita provides the ideal foundation. With secure, compliant deployment options, autoscaling, and full observability, Cognita enables building reliable, enterprise-grade RAG applications that integrate retrieval and orchestration seamlessly, ensuring AI systems perform accurately and efficiently in production environments.
