5 Best AI Gateways in 2025

Many organizations adopting large language models (LLMs) quickly discover the gap between a successful demo and a production-ready system.

- The bills can be unpredictable and astronomical — in one case, a developer left a loop running overnight and racked up $3,000 in API charges.

- The security team raises concerns about sensitive financial or healthcare data flowing through third-party APIs without proper governance.

- Systems may fail unexpectedly when providers like OpenAI hit rate limits, with no fallback strategy in place.

- Worst of all, teams often have no clear visibility into what’s happening under the hood once models are in production.

These challenges highlight how wide the gap is between “AI that works in a demo” and “AI that works at enterprise scale.”

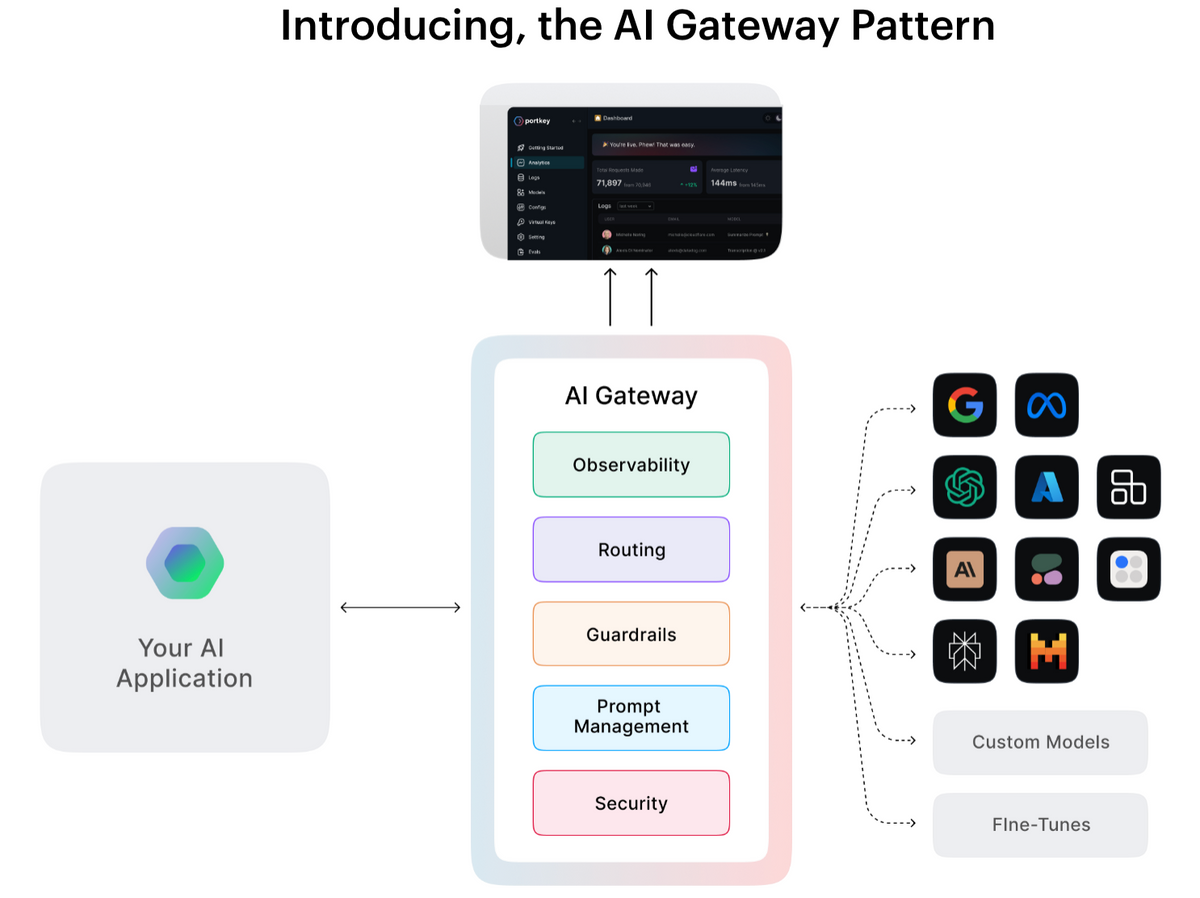

What is an AI Gateway?

Think of an AI gateway as the air traffic control system for your LLM operations. Just as air traffic control manages hundreds of flights safely and efficiently, an AI gateway sits between your applications and multiple LLM providers, orchestrating requests, enforcing policies, and ensuring everything runs smoothly.

But unlike traditional API gateways, AI gateways understand the unique challenges of LLM workloads. They know how to handle token-based pricing, manage context windows, route requests based on model capabilities, and provide the observability you need to debug complex AI workflows.

The numbers tell the story of why this matters. The AI gateway market exploded from $400M in 2023 to $3.9B in 2024, and Gartner predicts that 70% of organizations building multi-LLM applications will use AI gateway capabilities by 2028. Companies like NVIDIA report 80% higher GPU utilization after implementing proper AI infrastructure, while smaller teams now increasingly serve millions of users with just a few people managing the entire AI stack.

Why Every AI Team Needs an AI Gateway

The problems described above aren't edge cases. They're the inevitable reality of running LLMs at scale. Here's why:

Cost Control Gone Wild: LLM costs can spiral out of control faster than any other cloud service. Unlike traditional APIs, where you pay per request, LLMs charge per token, and token usage is inherently unpredictable. A single complex query might use 10x more tokens than expected. Without proper guardrails, a small bug can bankrupt your AI budget in hours.
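To make the per-token arithmetic concrete, here is a minimal Python sketch of a budget guard of the kind a gateway can enforce. The model name `example-model`, its prices, and the `BudgetGuard` class are hypothetical; real prices differ by provider and model. The sketch checks the budget before each call and records the actual token usage after the provider responds.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per million tokens; real prices vary by provider and model.
PRICES_PER_MILLION = {"example-model": {"input": 2.50, "output": 10.00}}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of one call: input and output tokens are billed at different rates."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


class BudgetExceeded(Exception):
    """Raised when a consumer has used up its budget."""


@dataclass
class BudgetGuard:
    limit_usd: float
    spent_usd: float = 0.0

    def check(self) -> None:
        """Call before forwarding a request; blocks once the budget is exhausted."""
        if self.spent_usd >= self.limit_usd:
            raise BudgetExceeded(f"spent {self.spent_usd:.3f} of {self.limit_usd:.2f} USD")

    def record(self, model: str, input_tokens: int, output_tokens: int) -> float:
        """Call after the provider responds, using the token counts it reports."""
        cost = request_cost(model, input_tokens, output_tokens)
        self.spent_usd += cost
        return cost


# A runaway loop: each call costs (2,000 * 2.50 + 1,000 * 10.00) / 1e6 = 0.015 USD.
guard = BudgetGuard(limit_usd=1.00)
calls = 0
try:
    while True:
        guard.check()
        guard.record("example-model", input_tokens=2_000, output_tokens=1_000)
        calls += 1
except BudgetExceeded:
    pass
```

The loop stops after 67 calls (about 1.005 USD) instead of running overnight. Because usage is only known once a response arrives, this guard can overshoot by at most one request; a stricter design would reserve an estimated maximum cost before each call.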

The Vendor Lock-in Trap: Starting with one provider feels simple, but it creates dangerous dependencies. What happens when OpenAI is down for maintenance? When a model gets deprecated? When pricing changes overnight? When another vendor, such as Google or Anthropic, releases a stronger model? Teams that hard-code provider-specific APIs end up scrambling to rewrite code in each of these scenarios, and they fall behind their competitors.
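A gateway avoids hard-coding by putting every provider behind the same interface and trying them in priority order. The sketch below assumes each provider is wrapped in a hypothetical adapter function that raises `ProviderError` on outages or rate limits; the adapters here are stand-ins, not real SDK calls.

```python
from __future__ import annotations

from typing import Callable, Sequence


class ProviderError(Exception):
    """Raised by an adapter on an outage, rate limit, or deprecated model."""


def complete_with_fallback(
    prompt: str, providers: Sequence[tuple[str, Callable[[str], str]]]
) -> tuple[str, str]:
    """Try providers in priority order; return (provider name, completion)."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")  # remember why, then try the next one
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Hypothetical adapters: the primary is rate limited, the secondary answers.
def primary(prompt: str) -> str:
    raise ProviderError("429 rate limited")


def secondary(prompt: str) -> str:
    return f"echo: {prompt}"


used, reply = complete_with_fallback("hello", [("primary", primary), ("secondary", secondary)])
```

Application code only ever calls `complete_with_fallback`, so adding or reordering providers becomes a configuration change rather than a rewrite.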

Security and Compliance Nightmares: Enterprise data flowing through third-party APIs creates compliance headaches. How do you ensure sensitive customer data isn't logged by third-party LLM routers like OpenRouter, for example? How do you implement role-based access control when different teams need different model permissions? How do you audit AI decision-making for regulatory compliance?
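Role-based model permissions can start as a deny-by-default lookup that records every decision for later audit. In this sketch the team names, model identifiers, and the `authorize` function are hypothetical; a real gateway would load the policy from its identity provider or configuration.

```python
from __future__ import annotations

# Hypothetical policy: which models each team may call.
POLICY: dict[str, frozenset[str]] = {
    "data-science": frozenset({"provider-a/large-model", "provider-b/large-model"}),
    "support-bot": frozenset({"provider-a/small-model"}),
}

# Every decision is kept so auditors can see who asked for what, and the outcome.
AUDIT_LOG: list[tuple[str, str, bool]] = []


def authorize(team: str, model: str) -> bool:
    """Deny by default: unknown teams and unlisted models are rejected."""
    allowed = model in POLICY.get(team, frozenset())
    AUDIT_LOG.append((team, model, allowed))
    return allowed
```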

Operational Blindness: LLM applications fail in unique ways. Models can produce incorrect outputs that look correct, use unexpected amounts of compute, or hit rate limits at unpredictable times. Without proper observability, debugging feels like working in the dark.
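The minimum useful telemetry is one structured record per request: model, outcome, latency, and token counts. This sketch wraps a hypothetical `call` function and assumes responses carry an OpenAI-style `usage` object with `prompt_tokens` and `completion_tokens`. It writes JSON lines to an in-memory list, where a real system would ship them to a logging or tracing backend.

```python
from __future__ import annotations

import json
import time
from typing import Callable

LOG: list[str] = []  # stand-in for a log sink


def traced(model: str, call: Callable[[str], dict], prompt: str) -> dict:
    """Run one LLM call and always emit a JSON record, even when it fails."""
    start = time.perf_counter()
    status, usage = "ok", {}
    try:
        response = call(prompt)
        usage = response.get("usage", {})
        return response
    except Exception as exc:
        status = f"error: {type(exc).__name__}"
        raise
    finally:
        LOG.append(json.dumps({
            "model": model,
            "status": status,
            "latency_ms": round((time.perf_counter() - start) * 1000, 2),
            "prompt_tokens": usage.get("prompt_tokens"),
            "completion_tokens": usage.get("completion_tokens"),
        }))


def fake_call(prompt: str) -> dict:
    return {"text": "...", "usage": {"prompt_tokens": 12, "completion_tokens": 30}}


def failing_call(prompt: str) -> dict:
    raise TimeoutError("upstream timed out")


traced("hypothetical-model", fake_call, "hi")
try:
    traced("hypothetical-model", failing_call, "hi")
except TimeoutError:
    pass
```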

The solution isn't to build all this infrastructure yourself. That's like building your own database instead of using PostgreSQL. The smart move is choosing the right AI gateway for your needs.

5 Best AI Gateway Solutions

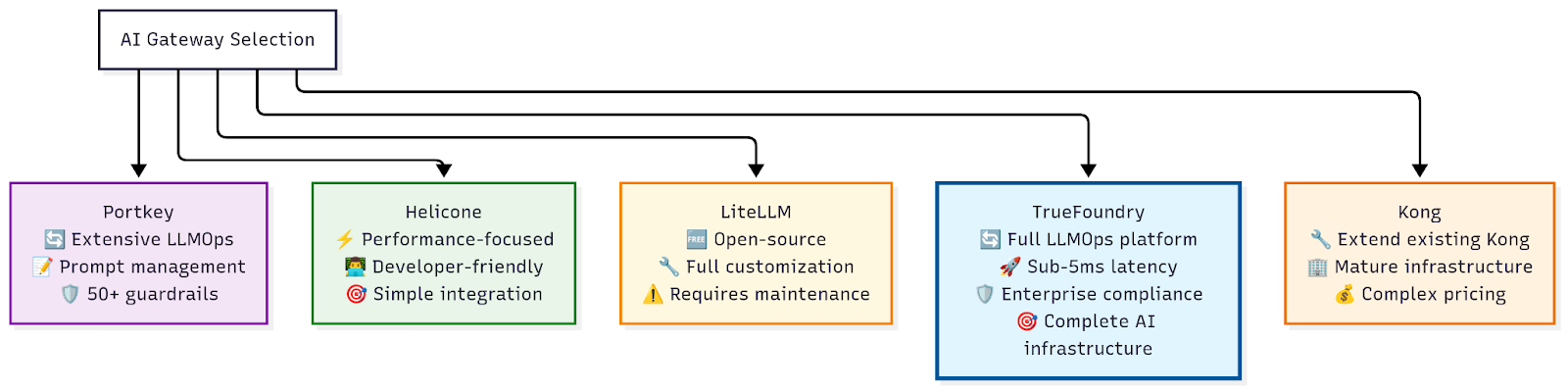

After analyzing dozens of solutions and talking to teams running AI in production, five platforms stand out for their technical excellence and enterprise readiness. Each takes a different approach to solving the core challenges, and the right choice depends on your specific requirements.

1. TrueFoundry AI Gateway

TrueFoundry isn't just another AI proxy. It's a purpose-built platform designed by engineers who've felt the pain of scaling AI at companies like Meta, Apple, and WorldQuant. The results speak for themselves: sub-5ms latency overhead, 350+ requests per second per CPU core, and production deployments serving millions of daily requests.

What Makes TrueFoundry Different

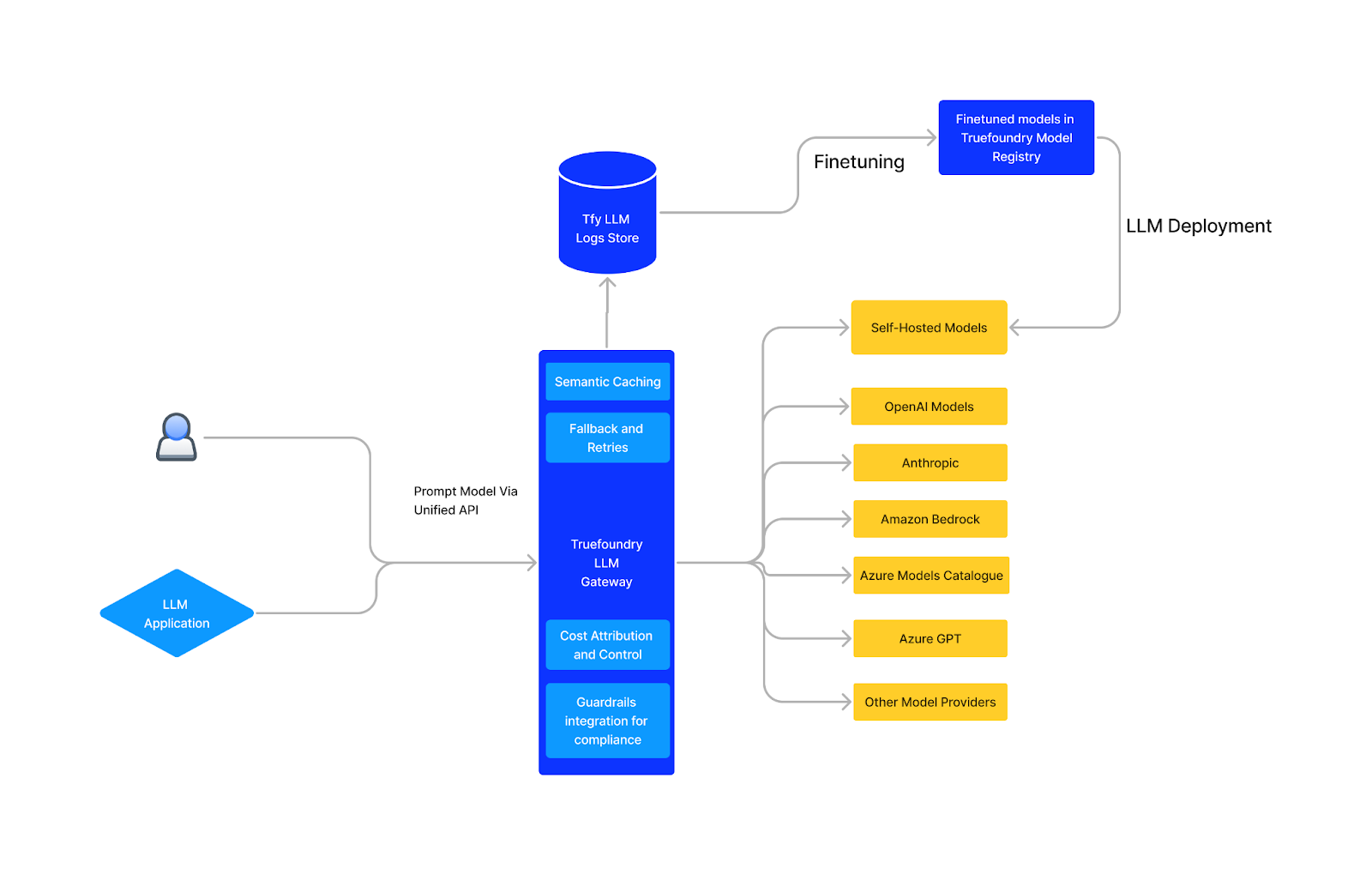

The platform's architecture separates the control plane from the data plane, enabling both operational flexibility and performance optimization. Unlike solutions that add latency with every feature, TrueFoundry processes authentication, authorization, and rate limiting in memory, keeping the time spent on these checks under a millisecond even with complex governance rules.
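Keeping checks in memory means each decision is a few arithmetic operations on local state rather than a network round trip. The source does not describe TrueFoundry's actual algorithm; the sketch below uses a classic token bucket as one common way to rate-limit in memory, with an injectable clock so it can be tested deterministically. `TokenBucket` and its parameters are illustrative names.

```python
from __future__ import annotations

import time
from typing import Callable


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last check, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Deterministic demo with a fake clock: 2 requests/second, burst of 2.
now = [0.0]
bucket = TokenBucket(rate_per_sec=2.0, capacity=2.0, clock=lambda: now[0])
results = [bucket.allow() for _ in range(3)]  # a burst of three at t = 0
now[0] = 0.5  # half a second at 2 tokens/s refills one token
results.append(bucket.allow())
```

A gateway would typically keep one bucket per API key or team in a dictionary, so the check never leaves the process.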

The unified API gives you access to hundreds of LLMs through various vendors (OpenAI, Anthropic, Gemini, Azure, AWS, Databricks, Mistral, Groq, Together, etc.), with support for all OpenAI-compatible providers, along with self-hosted models.
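Behind a unified API, the gateway has to map a single model identifier onto a provider endpoint. This sketch assumes a `provider/model` naming convention and a hypothetical registry of placeholder URLs; the naming scheme and the `resolve` function are illustrative, not TrueFoundry's actual API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    provider: str
    model: str
    base_url: str


# Hypothetical registry; the .example URLs are placeholders, not real endpoints.
PROVIDERS = {
    "openai": "https://openai.example/v1",
    "anthropic": "https://anthropic.example/v1",
    "self-hosted": "http://vllm.internal.example:8000/v1",
}


def resolve(model_id: str) -> Route:
    """Split 'provider/model' on the first slash and look up the provider's endpoint."""
    provider, sep, model = model_id.partition("/")
    if not sep or not model:
        raise ValueError(f"expected 'provider/model', got {model_id!r}")
    if provider not in PROVIDERS:
        raise ValueError(f"unknown provider {provider!r}")
    return Route(provider=provider, model=model, base_url=PROVIDERS[provider])
```

Because OpenAI-compatible providers share one request format, a route like this often only needs to swap the base URL and credentials.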

Enterprise Features That Actually Work

TrueFoundry achieved SOC 2 Type 2 and HIPAA compliance in 2024, with authentication systems supporting Personal Access Tokens for development and Virtual Account Tokens for production, plus OAuth 2.0 integration for enterprise identity providers.

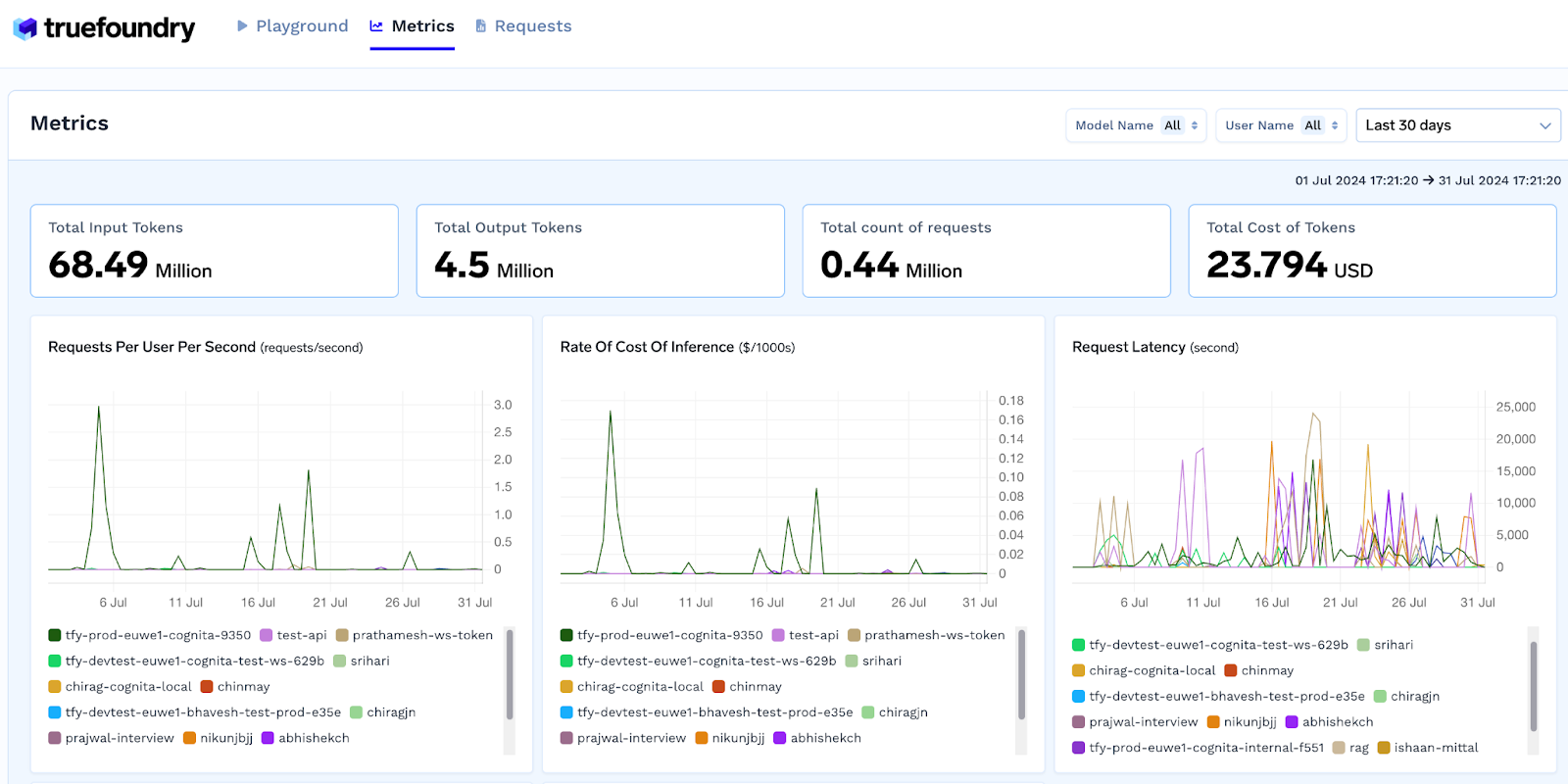

What sets TrueFoundry apart is its comprehensive cost management that goes beyond basic tracking. Token-level usage attribution lets you understand costs by user, team, geography, or any custom dimension. Real-time budget enforcement prevents surprises, while detailed analytics help optimize spending patterns. Teams typically see 30-70% cost reduction compared to direct provider usage.
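Attribution works when every request is logged with its cost and the dimensions you care about; any breakdown is then a group-by. The records and field names below are hypothetical examples of what such a usage log might contain.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical per-request usage records, as a gateway might log them.
USAGE = [
    {"team": "search", "user": "ana", "region": "eu", "cost_usd": 0.40},
    {"team": "search", "user": "raj", "region": "us", "cost_usd": 0.25},
    {"team": "support", "user": "li", "region": "eu", "cost_usd": 0.10},
]


def spend_by(records: list[dict], dimension: str) -> dict[str, float]:
    """Total cost grouped by any field present on the records."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[record[dimension]] += record["cost_usd"]
    # Round to hide floating-point noise in the sums.
    return {key: round(value, 6) for key, value in totals.items()}
```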

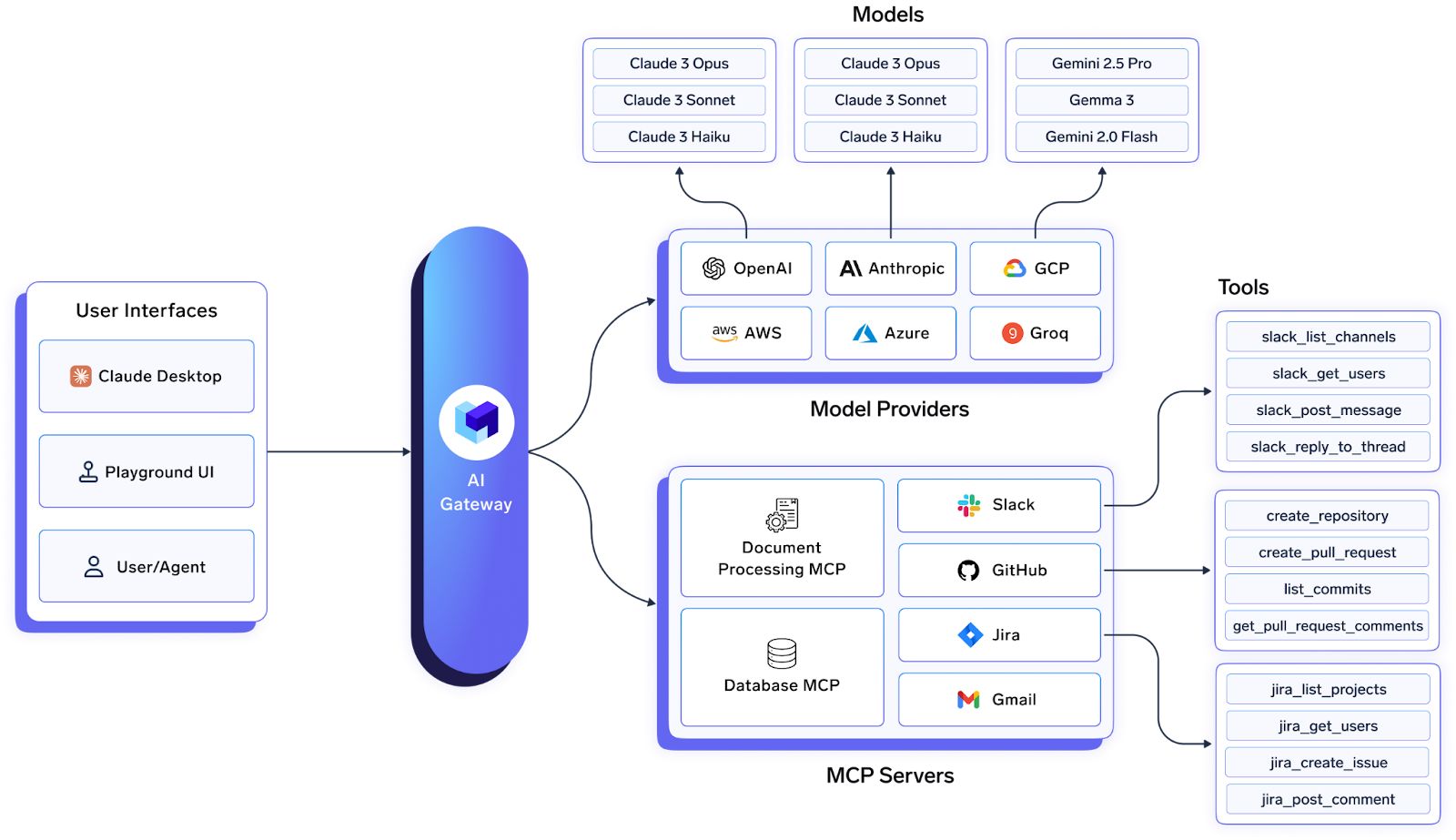

The Model Context Protocol (MCP) Gateway represents forward-thinking architecture for enterprise tool integration. Instead of building custom connectors for every enterprise tool, you get centralized MCP server management with OAuth 2.0 secured access to tools like Slack, GitHub, and Confluence, plus comprehensive observability across agent workflows.
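MCP messages are JSON-RPC 2.0, and a tool invocation is a `tools/call` request carrying the tool `name` and its `arguments`. The sketch shows how a central gateway might route such calls; the `server.tool` naming scheme, the registry, and the internal URLs are hypothetical, and the OAuth 2.0 step is omitted.

```python
from __future__ import annotations

import itertools
import json

_request_ids = itertools.count(1)

# Hypothetical registry mapping namespaced tool names to the MCP server that hosts them.
TOOL_REGISTRY = {
    "slack.post_message": "https://mcp-slack.internal.example/mcp",
    "github.search_issues": "https://mcp-github.internal.example/mcp",
}


def build_tool_call(tool: str, arguments: dict) -> tuple[str, str]:
    """Return (server URL, JSON-RPC body) for an MCP tools/call request."""
    if tool not in TOOL_REGISTRY:
        raise KeyError(f"tool {tool!r} is not registered with the gateway")
    _, tool_name = tool.split(".", 1)  # the server only knows its own tool names
    body = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return TOOL_REGISTRY[tool], json.dumps(body)


url, payload = build_tool_call("github.search_issues", {"query": "is:open label:bug"})
```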

TrueFoundry's containerization and deployment capabilities support flexible model servers (vLLM, SGLang, TRT-LLM), automatic model caching, and GPU optimization with sticky routing for KV cache optimization. The platform even supports air-gapped deployments for maximum security requirements.
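Sticky routing sends requests from the same conversation to the same model replica, so the prompt prefix already in that replica's KV cache can be reused. The source does not say which scheme TrueFoundry uses; this sketch uses rendezvous (highest-random-weight) hashing on a hypothetical session ID, with made-up replica names.

```python
from __future__ import annotations

import hashlib


def pick_replica(session_id: str, replicas: list[str]) -> str:
    """Rendezvous hashing: each (session, replica) pair gets a pseudo-random score,
    and the session goes to the highest-scoring replica."""
    def score(replica: str) -> int:
        digest = hashlib.sha256(f"{session_id}:{replica}".encode()).digest()
        return int.from_bytes(digest[:8], "big")

    return max(replicas, key=score)


REPLICAS = ["vllm-0", "vllm-1", "vllm-2", "vllm-3"]
chosen = pick_replica("session-42", REPLICAS)
```

A useful property of this scheme is that removing a replica only moves the sessions that were pinned to it; every other session keeps its warm cache.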

Who Should Choose TrueFoundry

Organizations that need enterprise-grade reliability and governance without sacrificing performance. The platform particularly appeals to teams that value comprehensive observability and monitoring, predictable costs, extensive security management, and integration with existing enterprise infrastructure. If you're managing multiple LLM providers and need granular control over access, costs, and compliance, TrueFoundry delivers the most complete solution. TrueFoundry also covers the rest of the AI stack for your team, including ML and LLM deployments, integration across providers, and access to custom and pre-existing MCP server integrations (Slack, GitHub, Sentry, etc.).

Potential Considerations: The comprehensive feature set may be more than an individual developer or a small starter team needs, and the enterprise focus means pricing reflects the full-stack nature of the platform.

2. Kong AI Gateway

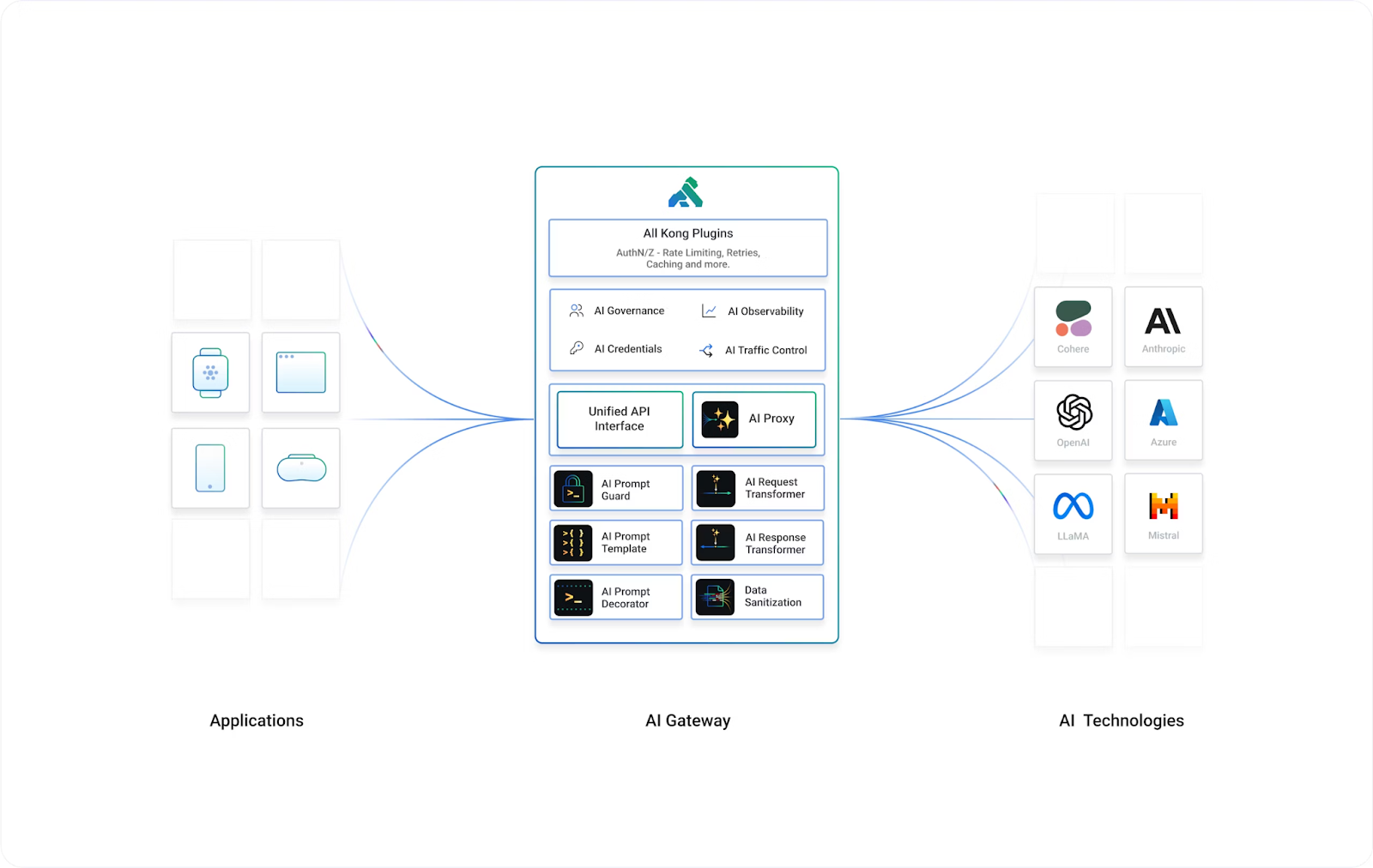

Kong brings mature API management capabilities to AI workloads, extending its battle-tested platform with AI-specific features. If your organization already runs Kong for traditional APIs, the AI gateway provides familiar operational patterns with new capabilities designed for LLM traffic.

Leveraging Mature Infrastructure

Kong's strength lies in its comprehensive plugin ecosystem and enterprise-grade operational features. The AI-specific plugins include semantic routing and advanced load balancing with six routing strategies. Token-based rate limiting supports various strategies, though the implementation can be complex compared to more modern alternatives.
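Token-based rate limiting counts LLM tokens rather than requests, so one huge prompt cannot slip under a per-request limit. This sketch uses a simple fixed window per consumer with an injectable clock; the class and its parameters are hypothetical and do not reflect how Kong's plugins are configured.

```python
from __future__ import annotations

import time
from typing import Callable


class TokenRateLimiter:
    """Fixed-window limit on LLM tokens (not requests) per consumer."""

    def __init__(self, tokens_per_window: int, window_sec: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = tokens_per_window
        self.window_sec = window_sec
        self.clock = clock
        self.state: dict[str, tuple[int, int]] = {}  # consumer -> (window index, tokens used)

    def try_consume(self, consumer: str, tokens: int) -> bool:
        window = int(self.clock() // self.window_sec)
        current, used = self.state.get(consumer, (window, 0))
        if current != window:  # a new window has started, so the count resets
            used = 0
        allowed = used + tokens <= self.limit
        self.state[consumer] = (window, used + tokens if allowed else used)
        return allowed


now = [0.0]
limiter = TokenRateLimiter(tokens_per_window=1_000, window_sec=60.0, clock=lambda: now[0])
decisions = [
    limiter.try_consume("team-a", 800),  # fits
    limiter.try_consume("team-a", 300),  # would exceed 1,000 in this window
    limiter.try_consume("team-b", 300),  # separate budget
]
now[0] = 61.0  # next window
decisions.append(limiter.try_consume("team-a", 300))
```

Fixed windows allow a burst right at a window boundary; sliding windows or token buckets smooth that out at the cost of a little more state.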

Enterprise Integration Depth

Kong's enterprise heritage shows in security and governance capabilities. OAuth 2.0, JWT, mTLS, and role-based access control integrate with existing enterprise identity providers, though teams report the documentation can be challenging to navigate for AI-specific features.

Best Fit for Kong AI Gateway

Organizations with existing Kong infrastructure that want to extend familiar operational patterns to AI workloads. The hybrid approach works well for teams that need comprehensive API management alongside AI-specific capabilities.

Potential Considerations: Kong's pricing complexity is well-documented, with costs that can exceed $30 per million requests, more than many alternatives charge. The multi-dimensional pricing model (gateway services, API requests, paid plugins, premium plugins) makes costs unpredictable, which can be prohibitive for high-volume AI workloads. Additionally, enterprise pricing requires a sales consultation, making cost planning difficult.


3. Portkey

Portkey positions itself as an LLMOps platform rather than just a gateway, offering end-to-end AI application lifecycle management alongside traditional proxy functionality. The LLMOps functionality of the platform is, however, limited, missing key features like deployment.

Beyond Basic Gateway Features

The platform provides access to hundreds of LLMs through a unified API and extends into prompt management, guardrails, and governance tools. More than 50 pre-built guardrails address security and compliance concerns, including automated content filtering and PII detection.
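A PII guardrail inspects and rewrites the prompt before it leaves your network. The sketch below uses two deliberately simple regular expressions, for email addresses and card-like digit runs; these patterns are illustrative assumptions, not Portkey's detectors, and production PII detection needs much more than regexes.

```python
from __future__ import annotations

import re

# Illustrative patterns only; real PII detection needs far more than two regexes.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits, optional separators
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace each match with a [LABEL] placeholder and report what was found."""
    found: list[str] = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        found.extend([label] * count)
    return text, found


clean, findings = redact("Contact jane.doe@example.com, card 4111 1111 1111 1111.")
```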

Advanced prompt management includes collaborative templates and versioning. Real-time monitoring provides comprehensive visibility, though reviewers on AWS Marketplace report that the sheer number of features can overwhelm new users.
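Versioned prompt templates let an application pin a known-good prompt while a new version is tested. This is a minimal in-memory sketch built on Python's `string.Template`; the `PromptRegistry` class is hypothetical and is not Portkey's API.

```python
from __future__ import annotations

from string import Template


class PromptRegistry:
    """Append-only store: publishing never overwrites an earlier version."""

    def __init__(self) -> None:
        self._versions: dict[str, list[str]] = {}

    def publish(self, name: str, template: str) -> int:
        versions = self._versions.setdefault(name, [])
        versions.append(template)
        return len(versions)  # 1-based version number

    def render(self, name: str, version: int | None = None, **variables: str) -> str:
        """Render a pinned version, or the latest one if no version is given."""
        versions = self._versions[name]
        if version is not None and not 1 <= version <= len(versions):
            raise ValueError(f"{name!r} has no version {version}")
        template = versions[-1] if version is None else versions[version - 1]
        return Template(template).substitute(variables)


registry = PromptRegistry()
v1 = registry.publish("summarize", "Summarize this text: $text")
v2 = registry.publish("summarize", "Summarize this text in $n bullet points: $text")
pinned = registry.render("summarize", version=v1, text="Q3 report", n="3")
latest = registry.render("summarize", text="Q3 report", n="3")
```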

Enterprise Reliability and Security

SOC2, ISO27001, HIPAA, and GDPR compliance certifications, combined with deployment options spanning SaaS, hybrid, and fully air-gapped environments, address enterprise security requirements. The 99.99% uptime SLA provides reliability guarantees.

When to Choose Portkey

Organizations requiring integrated LLMOps capabilities beyond basic gateway functionality. The comprehensive feature set justifies the investment for teams building complex AI applications that require sophisticated prompt management and extensive guardrails.

Potential Considerations: Enterprise pricing is complex, and key features such as budget limits are restricted to Enterprise customers. Some users report limited export functionality, with data access requiring manual intervention from the support team. The platform's LLMOps functionality is also limited: deployment, for example, is not natively supported.

4. Helicone

Helicone differentiates itself through performance engineering and developer-centric design. Built in Rust for speed, the platform processes requests with a P50 processing time of about 8ms, though this still represents substantially higher latency than more optimized solutions.
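P50 is the median: half of requests see less overhead than the quoted figure and half see more, so it says nothing about the slow tail. This sketch computes nearest-rank percentiles over ten made-up overhead samples (not measurements of any product) to show how P50 and P99 can diverge.

```python
from __future__ import annotations

import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of values at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


# Made-up gateway overhead samples in milliseconds.
overhead_ms = [6.9, 7.4, 7.8, 8.0, 8.1, 8.3, 9.0, 12.5, 30.2, 7.7]
p50 = percentile(overhead_ms, 50)
p99 = percentile(overhead_ms, 99)
```

Here P50 is 8.0 ms while P99 is 30.2 ms. With only ten samples, P99 is simply the worst one; real benchmarks need far more samples before tail percentiles mean much.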

However, teams looking for more enterprise-focused features may consider a Helicone alternative to meet requirements beyond performance, such as governance, cost management, and compliance.

Performance-First Architecture

The unified API supports 100+ models across major providers with intelligent caching and load balancing capabilities. Built-in rate limiting and automatic failovers provide reliability for production deployments, though the scope is narrower than comprehensive enterprise solutions.
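The simplest form of gateway caching is an exact-match cache keyed on a hash of the canonicalized request, with a time-to-live. This sketch is an assumption about how such a cache can work, not Helicone's implementation, and it is only safe for requests whose answers you are happy to reuse, such as deterministic (temperature 0) calls. The model name and the `fake_llm` function are hypothetical.

```python
from __future__ import annotations

import hashlib
import json
import time
from typing import Callable


class ResponseCache:
    def __init__(self, ttl_sec: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_sec
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}  # key -> (stored at, response)

    @staticmethod
    def key(request: dict) -> str:
        # sort_keys makes logically identical requests hash the same way.
        canonical = json.dumps(request, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode()).hexdigest()

    def get_or_call(self, request: dict, call: Callable[[dict], str]) -> tuple[str, bool]:
        """Return (response, cache_hit)."""
        k = self.key(request)
        now = self.clock()
        entry = self._store.get(k)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1], True
        response = call(request)
        self._store[k] = (now, response)
        return response, False


now = [0.0]
upstream_calls = []


def fake_llm(req: dict) -> str:
    upstream_calls.append(req)
    return "Paris"


cache = ResponseCache(ttl_sec=300, clock=lambda: now[0])
request = {"model": "hypothetical-model", "temperature": 0,
           "messages": [{"role": "user", "content": "Capital of France?"}]}
first = cache.get_or_call(request, fake_llm)
second = cache.get_or_call(dict(reversed(list(request.items()))), fake_llm)  # keys reordered
now[0] = 301.0  # past the TTL
third = cache.get_or_call(request, fake_llm)
```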

Developer Experience Focus

Developer experience emphasizes simplicity with single-line code integration and OpenAI SDK compatibility. The observability dashboard provides integrated monitoring without requiring additional tooling setup, though it lacks the depth of enterprise-focused alternatives.

Ideal for Performance-Focused Teams

Organizations where developer simplicity is valued over comprehensive enterprise features. The performance-first approach appeals to teams building consumer-facing applications where simplicity matters more than governance.

Potential Considerations: While faster than some alternatives, the 8ms overhead is still significantly higher than that of optimized solutions. The feature set is narrower than that of enterprise platforms, lacking advanced governance, compliance features, and comprehensive cost management. Most of its features are also available in other solutions, which limits its differentiation.

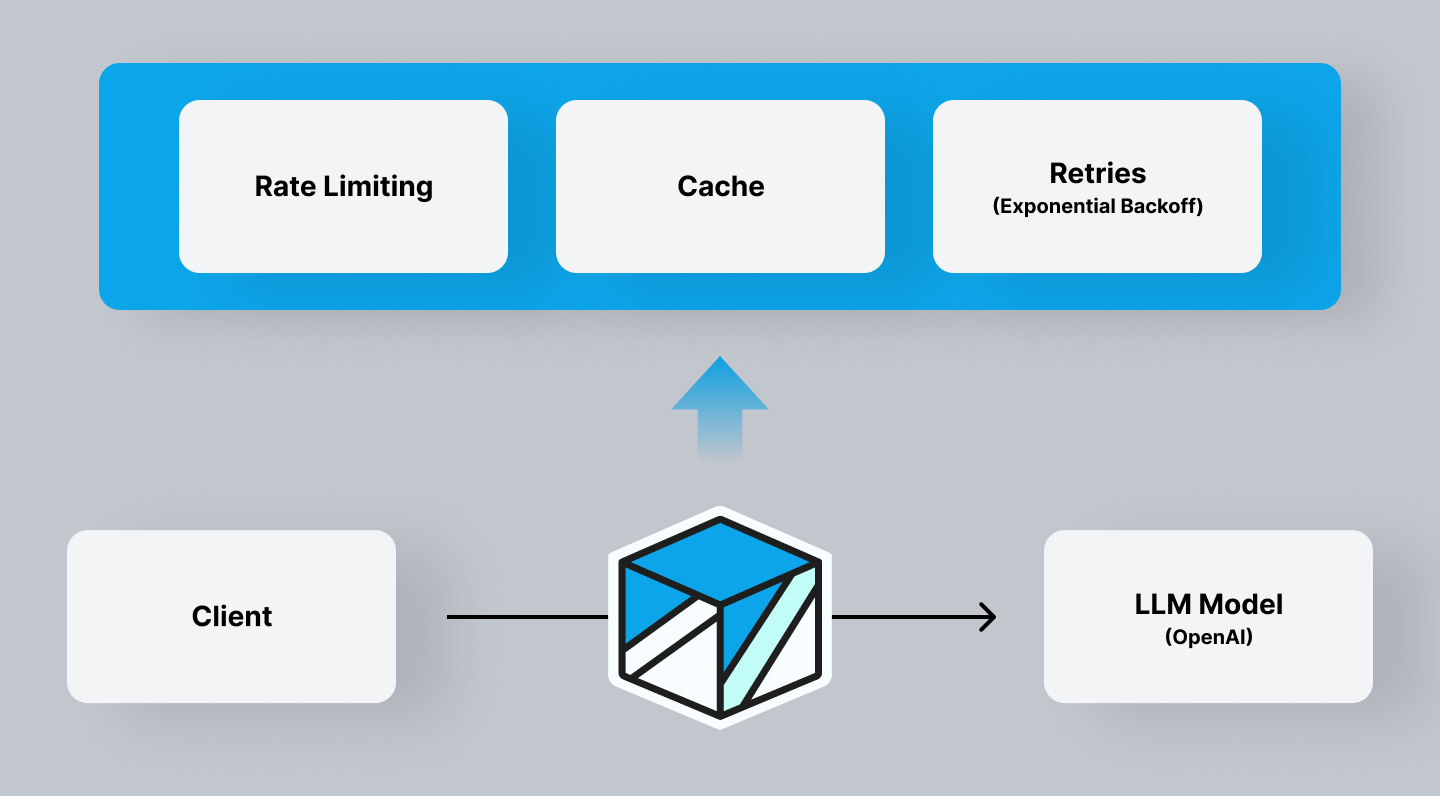

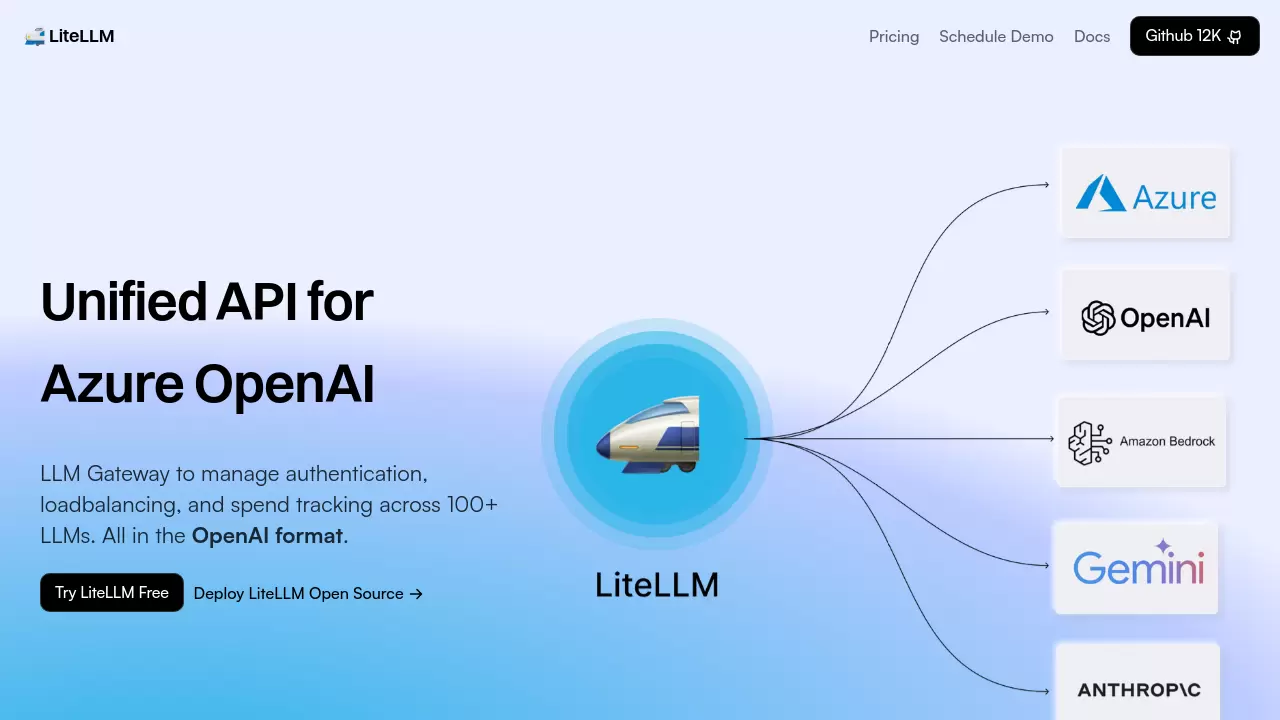

5. LiteLLM: Open-Source Flexibility and Cost Control

LiteLLM takes an open-source approach to AI gateway functionality, providing a Python-based proxy server that unifies access to hundreds of LLM APIs behind the OpenAI format.

Universal API Compatibility

The platform's strength lies in universal API compatibility, supporting major providers with advanced load balancing and retry logic. Cost management features provide basic spend tracking and budget limits, though without the sophistication of enterprise alternatives.
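Retry logic usually means retrying transient failures with exponential backoff and random jitter. This is a generic sketch, not LiteLLM's implementation; `TransientError` is a hypothetical stand-in for errors such as timeouts or HTTP 429 responses, and `sleep` is injectable so the delays can be checked without waiting.

```python
from __future__ import annotations

import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, 429s, 5xx)."""


def with_retries(call: Callable[[], T], max_attempts: int = 4, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep,
                 rng: random.Random | None = None) -> T:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng if rng is not None else random.Random()
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Full jitter: wait a random time between 0 and base_delay * 2^(attempt - 1).
            sleep(rng.uniform(0, base_delay * 2 ** (attempt - 1)))
    raise AssertionError("unreachable")


attempts = []
delays: list[float] = []


def flaky() -> str:
    attempts.append(1)
    if len(attempts) < 3:
        raise TransientError("503 from provider")
    return "ok"


result = with_retries(flaky, sleep=delays.append, rng=random.Random(0))
```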

Open-Source Advantages and Considerations

The open-source model provides transparency and customization flexibility. YAML-based configuration management enables infrastructure-as-code approaches, while Docker deployment options support basic production environments.

Best for Platform Teams and Cost-Conscious Organizations

Teams that value open-source transparency and want to maintain full control over their AI infrastructure.

Potential Considerations: LiteLLM has significant limitations for enterprise use. Without formal commercial backing, there is no enterprise support plan, no uptime SLA, and no dedicated escalation path. Users report frequent regressions between versions, edge-case bugs, and instability at scale, and its latency overhead can become a bottleneck for real-time applications. It also lacks advanced observability, security controls, and enterprise features beyond basic routing. Finally, updates can be slow and may skip lesser-used models and providers, which delays support for newer models and slows the development pipeline; in some cases, users have to raise GitHub issues or add support for new models themselves.

Best AI Gateway Comparison: Performance, Security, and Scalability

When evaluating AI gateways, three technical dimensions matter most:

Performance Characteristics: TrueFoundry's sub-5ms overhead represents best-in-class latency performance, critical for real-time applications and agent workflows. Helicone's 8ms is respectable but still significantly higher, while others introduce substantially more latency that can impact user experience.

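Security and Compliance: TrueFoundry (SOC 2 Type 2 and HIPAA) and Portkey (SOC 2, ISO 27001, HIPAA, and GDPR) pair formal compliance certifications with comprehensive access controls and audit capabilities, providing enterprise-grade security. The other solutions either lack formal compliance certifications or require complex configuration to reach similar security levels.

Scalability: TrueFoundry reports 350+ requests per second per CPU core and production deployments serving millions of daily requests, while LiteLLM users report instability at scale and Kong's per-request pricing can become prohibitive for high-volume workloads.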

How to Choose the Right AI Gateway

The optimal AI gateway selection depends on your specific requirements, existing infrastructure, and strategic priorities. Here's a practical framework:

Choose TrueFoundry if you need enterprise-grade compliance, extensive LLMOps capabilities, performance, and governance without compromising on any dimension. The platform particularly suits organizations managing multiple LLM providers with granular cost and access control requirements. The unified architecture with comprehensive MCP support and self-hosted model capabilities appeals to teams that want the most complete AI infrastructure management solution. TrueFoundry's sub-5ms latency and proven enterprise compliance make it ideal for mission-critical AI applications.

Choose Kong if you're already running Kong for traditional APIs and want to extend familiar operational patterns to AI workloads, despite the pricing complexity and higher costs. The hybrid approach works for organizations with complex service architectures, though be prepared for the learning curve and cost management challenges.

Choose Portkey if you need integrated basic LLMOps capabilities and can justify the enterprise pricing for sophisticated prompt management and governance tools. Consider the feature complexity and limited data export capabilities when evaluating.

Choose Helicone if performance and developer simplicity are your primary concerns, and you can accept the limitations in enterprise governance features. The approach suits teams building consumer-facing applications where enterprise compliance is not critical.

Choose LiteLLM if you have strong engineering capabilities to manage the open-source complexity and can accept the limitations around enterprise support, stability, and performance overhead. Be prepared for potential production issues and the need for internal maintenance.

The Future of AI Infrastructure

The AI gateway market continues evolving rapidly, with traditional API management vendors adding AI-specific features while AI-native solutions mature toward enterprise requirements. Three trends will shape the next generation:

Agentic AI Integration: As AI agents become more autonomous, agentic AI platforms and gateways will need sophisticated orchestration capabilities for multi-agent workflows, tool chaining, and complex reasoning processes. TrueFoundry's MCP Gateway positions it well for this evolution.

Multimodal Support: The expansion beyond text to images, audio, and video will require gateways that can handle diverse data types, manage varying processing costs, and optimize for different latency requirements.

Edge and Hybrid Deployment: Organizations will demand flexible deployment models that support on-premises, cloud, and edge environments while maintaining consistent governance and observability.

Conclusion

The enterprise AI gateway market represents a critical inflection point in AI infrastructure maturity. Teams that get this layer right will have sustainable competitive advantages in the AI-powered future. Those who don't will find themselves constantly fighting infrastructure problems instead of building innovative AI applications.

The choice you make today will significantly impact your ability to adapt as AI capabilities advance. While each solution has merits, TrueFoundry's combination of enterprise-grade performance, comprehensive compliance, and forward-thinking architecture provides the most complete foundation for scaling AI operations. The platform's sub-5ms latency, proven enterprise adoption, and unified approach to LLM management, MCP integration, and self-hosted model support offer the best balance of immediate value and future flexibility.

For teams ready to move beyond experimental AI projects toward production-scale deployments, the choice of gateway platform will determine operational efficiency, security posture, and strategic flexibility. The solutions profiled here represent the current state-of-the-art, but TrueFoundry's comprehensive approach and enterprise-first design make it the strongest choice for organizations serious about scaling AI infrastructure.

Ready to get started? The journey from AI demos to production systems doesn't have to be painful. With the right gateway choice and implementation strategy, you can build AI infrastructure that scales, stays secure, and keeps costs under control.
