Nexos AI vs TrueFoundry: Features & Performance Comparison

The AI landscape in 2025 is evolving at a breakneck pace, and businesses are increasingly relying on large language models to drive innovation, streamline operations, and deliver smarter customer experiences.

But with so many platforms promising to simplify model deployment, orchestration, and governance, choosing the right solution can be overwhelming. Two names rising to the top of enterprise AI discussions are Nexos AI and TrueFoundry. While both aim to help organizations manage multiple LLMs efficiently, they serve slightly different audiences and use cases.

Nexos AI focuses on centralized, cloud-first orchestration for rapid integration, whereas TrueFoundry emphasizes enterprise-grade control, scalability, and on-premises flexibility. Understanding their differences, strengths, and ideal scenarios is critical for companies that want to future-proof their AI strategy.

What is Nexos AI?

Managing dozens of AI models can quickly become chaotic. Each model comes with its own API, quirks, and billing. Nexos AI solves this problem by acting as a centralized control hub for all your AI models. It connects you to over 200 top-tier models through a single platform, eliminating the need to juggle multiple integrations.
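To make the "single platform" idea concrete, here is a minimal Python sketch of what a unified gateway does conceptually. It puts one call signature in front of many provider-specific adapters. This is not Nexos AI's API. The `UnifiedGateway` class, the model names and the stand-in adapters are all hypothetical, and no real provider is called.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical adapter type: takes a prompt, returns the model's text reply.
# Each real provider has its own SDK; adapters hide those differences.
Adapter = Callable[[str], str]


@dataclass
class UnifiedGateway:
    """Sketch of a single entry point in front of many model providers."""
    adapters: Dict[str, Adapter]

    def register(self, model: str, adapter: Adapter) -> None:
        self.adapters[model] = adapter

    def complete(self, model: str, prompt: str) -> str:
        # One call signature for every model; the gateway picks the adapter.
        if model not in self.adapters:
            raise KeyError(f"unknown model: {model}")
        return self.adapters[model](prompt)


# Stand-in adapters for illustration only; no real provider is called.
gateway = UnifiedGateway(adapters={})
gateway.register("provider-a/model-x", lambda p: f"[model-x] {p}")
gateway.register("provider-b/model-y", lambda p: f"[model-y] {p.upper()}")

print(gateway.complete("provider-a/model-x", "hello"))  # [model-x] hello
print(gateway.complete("provider-b/model-y", "hello"))  # [model-y] HELLO
```

Application code only ever calls `complete`. Adding or swapping a model becomes a registration change rather than a new integration.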

Key features:

- Unified AI Gateway: Centralizes access to over 200 large language models through a single, secure API, simplifying integration and management.

- AI Guardrails & Compliance Controls: Provides input and output filtering and permission settings to enforce responsible AI usage and prevent data leaks.

- Intelligent Caching & Cost Controls: Reduces latency and optimizes token usage with built-in caching while tracking usage to manage AI costs effectively.

- Full LLM Observability: Offers detailed logs and execution traces with configurable retention policies, allowing teams to monitor performance and usage trends.
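The caching and cost-control features can be illustrated with a small Python sketch. It combines an exact-match cache keyed on the model and prompt with a running per-model token tally. This is an assumption about the general technique, not Nexos AI's implementation. `CachingCostTracker` and `fake_model` are hypothetical, and tokens are approximated by word counts rather than a real tokenizer.

```python
import hashlib
from typing import Callable, Dict


class CachingCostTracker:
    """Exact-match response cache plus per-model token accounting (sketch).

    Assumption: tokens are approximated by whitespace-separated word counts;
    a real system would use each provider's tokenizer and price list.
    """

    def __init__(self, call_model: Callable[[str, str], str]):
        self.call_model = call_model  # hypothetical (model, prompt) -> reply
        self.cache: Dict[str, str] = {}
        self.tokens_used: Dict[str, int] = {}
        self.cache_hits = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # Identical (model, prompt) pairs map to the same cache entry.
        return hashlib.sha256(f"{model}\x00{prompt}".encode()).hexdigest()

    def complete(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key in self.cache:
            self.cache_hits += 1  # cache hit: no new tokens counted
            return self.cache[key]
        reply = self.call_model(model, prompt)
        self.cache[key] = reply
        approx_tokens = len(prompt.split()) + len(reply.split())
        self.tokens_used[model] = self.tokens_used.get(model, 0) + approx_tokens
        return reply


# Usage with a stand-in model that records how often it is really called.
calls = []

def fake_model(model: str, prompt: str) -> str:
    calls.append(prompt)
    return "four"

tracker = CachingCostTracker(fake_model)
tracker.complete("model-x", "what is two plus two")
tracker.complete("model-x", "what is two plus two")
print(len(calls), tracker.cache_hits, tracker.tokens_used)  # 1 1 {'model-x': 6}
```

The repeated prompt is answered from the cache, so only the first call reaches the model and adds to the token count. Production gateways may also use similarity-based caching and real pricing tables, which this sketch leaves out.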

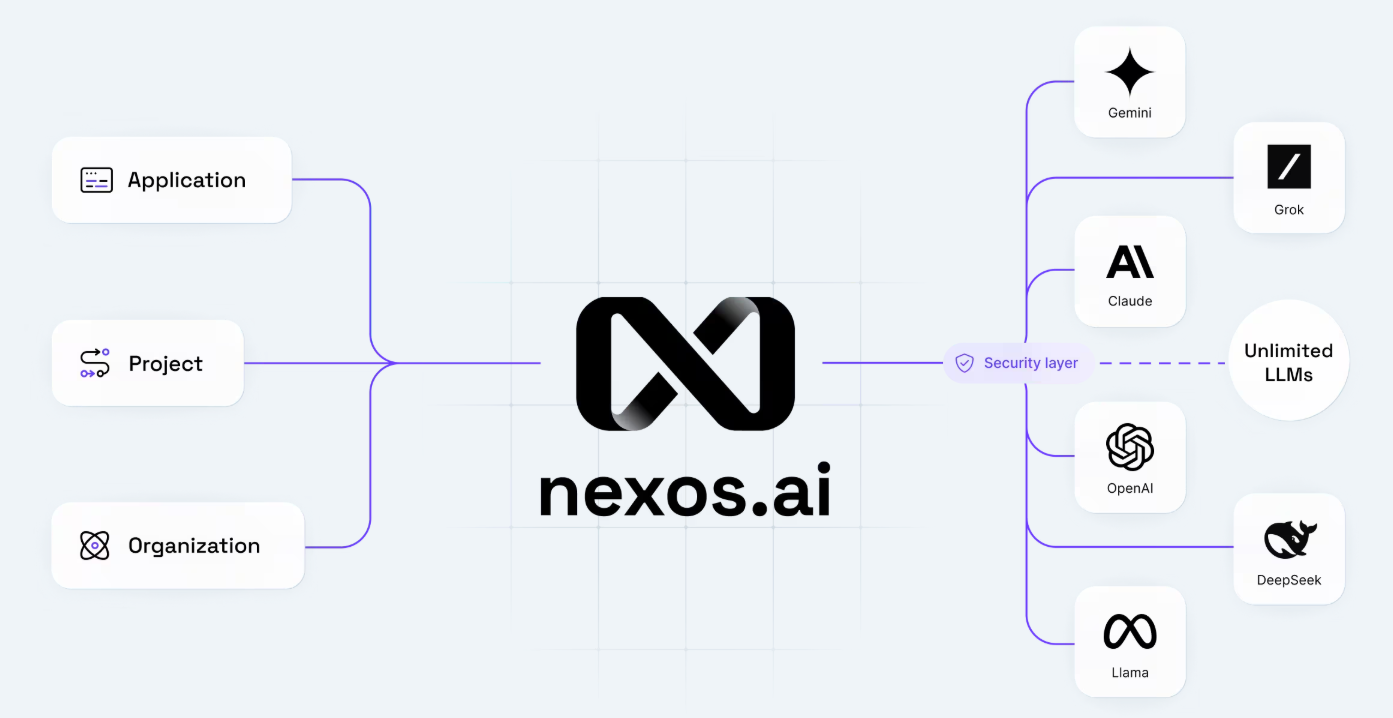

What is TrueFoundry?

Deploying AI at scale isn't just about plugging in models; it's about managing complexity, ensuring compliance, and maintaining performance. TrueFoundry is a Kubernetes-native platform built to simplify the deployment, inference, and scaling of AI and GenAI workloads across both cloud and on-premises environments.

TrueFoundry provides a unified interface to more than 250 large language models (LLMs), including OpenAI's GPT models, Anthropic's Claude, and Google's Gemini. It offers intelligent model routing, automatic failover, and geo-aware traffic distribution to keep services highly available and performant. The platform supports multimodal inputs (text, image, and audio) on compatible models and integrates with Model Context Protocol (MCP) servers for agent workflows.
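Automatic failover generally means trying a fallback provider when the preferred one errors or times out. The Python sketch below shows that pattern under simple assumptions. Providers are tried in a fixed priority order, and any exception triggers failover. The function and provider names are hypothetical and do not reflect TrueFoundry's actual routing logic. Geo-aware distribution could, for example, reorder the provider list by the caller's region before this loop runs.

```python
from typing import Callable, List, Sequence, Tuple

# (provider name, call function); the providers below are stand-ins.
Provider = Tuple[str, Callable[[str], str]]


class AllProvidersFailed(RuntimeError):
    """Raised when every provider in the list has failed."""


def complete_with_failover(providers: Sequence[Provider], prompt: str) -> Tuple[str, str]:
    """Try providers in priority order and return (provider_name, reply)."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # sketch: any error triggers failover
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


def flaky_provider(prompt: str) -> str:
    raise TimeoutError("upstream timed out")


def healthy_provider(prompt: str) -> str:
    return f"ok: {prompt}"


name, reply = complete_with_failover(
    [("primary", flaky_provider), ("backup", healthy_provider)], "ping"
)
print(name, reply)  # backup ok: ping
```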

Key features:

- Orchestrate with AI Gateway: TrueFoundry’s AI Gateway acts as a centralized hub for agent workflows. It manages memory, tool orchestration, and multi-step reasoning, allowing agents to plan actions, leverage external tools, and maintain context with full visibility and control.

- Build with MCP & Prompt Lifecycle Management: The platform includes an MCP (Model Context Protocol) and Agents Registry, offering a discoverable library of tools and APIs with schema validation and fine-grained access control. Combined with prompt lifecycle management, it lets teams version, test, and monitor prompts to keep agent behavior consistent and auditable.

- Deploy Any Model, Any Framework: TrueFoundry supports deploying any LLM or embedding model using optimized backends like vLLM, Triton, and TGI. Fine-tuning integrates directly into workflows, making it simple to train on proprietary data. It fully supports agents built on LangGraph, CrewAI, AutoGen, or custom frameworks.

- Enterprise-Grade Compliance and Observability: The platform runs in VPC, on-prem, hybrid, or air-gapped environments to ensure data stays secure. It supports SOC 2, HIPAA, and GDPR compliance, with SSO, RBAC, and immutable audit logging. Teams get full observability from prompt execution to GPU utilization, integrating with Grafana, Datadog, or Prometheus.

- Optimized for Scale and Cost: TrueFoundry includes built-in GPU orchestration, fractional GPU support, and real-time autoscaling. These help enterprises achieve higher utilization and lower costs, with reported gains of up to 80% in GPU cluster efficiency when running autonomous LLM agents.
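On Kubernetes, real-time autoscaling is commonly driven by the Horizontal Pod Autoscaler rule `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`. The Python sketch below applies that rule to a GPU utilization metric, with a tolerance band and min/max bounds. Whether TrueFoundry uses exactly this rule is an assumption. The function name, the defaults and the choice of GPU utilization as the metric are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """HPA-style replica calculation (sketch, hypothetical defaults).

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped to bounds.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 inference replicas at 90% GPU utilization against a 60% target
print(desired_replicas(4, 90, 60))  # 6
```

The tolerance band keeps small fluctuations from causing constant scale-up and scale-down. The bounds cap how much GPU capacity a single workload can claim.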

Nexos AI vs TrueFoundry: Technical Comparison

Choosing between Nexos AI and TrueFoundry isn’t just about features on paper; it’s about understanding how each platform performs in real-world, technical scenarios. From deployment flexibility to model serving, observability, and cost management, the differences can significantly impact your AI workflows.

The table below highlights eight key technical aspects, helping you see at a glance where each platform excels and which one aligns best with your enterprise requirements.

When to Use Nexos AI

Nexos AI excels when organizations need a centralized, cloud-first platform to manage multiple large language models without juggling separate APIs or provider SDKs. It simplifies orchestration, reduces operational overhead, and allows teams to focus on building AI-powered applications instead of managing infrastructure.

Organizations should consider Nexos AI in the following scenarios:

- Multi-Model Access: Nexos AI provides a unified gateway to over 200 LLMs, including OpenAI, Anthropic, and Google, enabling teams to route requests intelligently and compare model outputs seamlessly.

- Rapid Experimentation & Development: Teams can test new models or update workflows quickly without extensive integration work, accelerating R&D and product development cycles.

- Observability & Monitoring: Nexos AI tracks every query and response, providing detailed logs, execution traces, and usage metrics. This allows teams to monitor performance, detect anomalies, and optimize workflows across departments.

- Secure & Policy-Driven Workflows: The platform includes customizable guardrails for inputs and outputs, preventing sensitive data leaks and ensuring outputs align with internal policies or regulatory requirements.
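Input and output guardrails can be pictured as a filter applied on both sides of the model call. The Python sketch below redacts e-mail addresses and card-like digit sequences before the prompt is sent, and again before the reply is returned. The patterns, placeholder labels and function names are hypothetical and far simpler than a production guardrail. They only illustrate where the checks sit.

```python
import re
from typing import Callable, List, Tuple

# Illustrative patterns only; production guardrails use much more robust detectors.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits
}


def redact(text: str) -> Tuple[str, List[str]]:
    """Replace sensitive matches with placeholders; report which rules fired."""
    fired: List[str] = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            fired.append(label)
    return text, fired


def guarded_call(call_model: Callable[[str], str], prompt: str) -> str:
    # Input guardrail: scrub the prompt before it reaches the model provider.
    safe_prompt, _ = redact(prompt)
    reply = call_model(safe_prompt)
    # Output guardrail: scrub the reply before it reaches the user.
    safe_reply, _ = redact(reply)
    return safe_reply


print(redact("mail bob@corp.io, card 4111 1111 1111 1111"))
# ('mail [EMAIL], card [CARD]', ['EMAIL', 'CARD'])
```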

When to Use TrueFoundry

TrueFoundry excels when organizations need enterprise-grade control, scalability, and security for managing large language models and generative AI workloads. It is designed for teams that want to deploy, monitor, and optimize AI models at scale, whether in the cloud, on-premises, or in hybrid environments.

Organizations should consider TrueFoundry in the following scenarios:

- Complex AI Infrastructure: TrueFoundry’s Kubernetes-native architecture enables seamless deployment, autoscaling, and management of hundreds of AI models without manual orchestration.

- Scalable Model Serving & Inference: It supports optimized backends like vLLM and Triton, allowing teams to serve models efficiently, handle high throughput, and maintain low latency for production workloads.

- Observability & Performance Monitoring: TrueFoundry provides dashboards for token usage, latency, GPU utilization, and cost tracking. Teams can trace executions, debug issues, and ensure workloads run reliably across environments.

- Enterprise-Grade Security & Compliance: With Role-Based Access Control, audit logging, and VPC or on-prem deployment support, TrueFoundry ensures sensitive data remains secure and meets regulatory requirements.
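Role-based access control and audit logging work together. Every request is checked against the caller's role, and every decision is recorded. The Python sketch below shows that flow with a hypothetical role-to-permission map and an in-memory log. It is not TrueFoundry's API. A real deployment would take roles from SSO groups and write to tamper-evident storage rather than a Python list.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Dict, List, Set

# Hypothetical role -> permission mapping; real platforms usually derive roles from SSO/IdP groups.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"model:read"},
    "developer": {"model:read", "model:invoke"},
    "admin": {"model:read", "model:invoke", "model:deploy"},
}


@dataclass
class AuditLog:
    """Append-only list of JSON records (stand-in for tamper-evident storage)."""
    records: List[str] = field(default_factory=list)

    def append(self, **event) -> None:
        self.records.append(json.dumps({"ts": time.time(), **event}, sort_keys=True))


def authorize(user: str, role: str, permission: str, audit: AuditLog) -> bool:
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    # Every decision, allowed or denied, is recorded for later review.
    audit.append(user=user, role=role, permission=permission, allowed=allowed)
    return allowed


audit = AuditLog()
print(authorize("dana", "developer", "model:invoke", audit))  # True
print(authorize("dana", "developer", "model:deploy", audit))  # False
print(len(audit.records))  # 2
```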

Nexos AI vs TrueFoundry: Which is Best?

Choosing between Nexos AI and TrueFoundry ultimately comes down to your organization’s priorities, scale, and technical requirements. Both platforms deliver powerful AI orchestration, but they serve slightly different needs.

Pick Nexos AI if your focus is on rapid experimentation, cloud-native workflows, and centralized multi-model access. Its unified gateway simplifies connecting to over 200 LLMs, while intelligent caching, automated routing, and observability make it ideal for teams that need flexibility, speed, and cost-efficiency. Nexos AI works best when your team wants to streamline AI workflows without managing complex infrastructure or dealing with on-prem deployments.

Opt for TrueFoundry if your organization requires enterprise-grade scalability, compliance, and robust model deployment capabilities. TrueFoundry excels in managing high-volume production workloads with autoscaling, fine-tuned model serving, and GPU optimization. Its Kubernetes-native platform, advanced observability, and strong security features make it the preferred choice for regulated environments or enterprises with complex AI infrastructure.

Ultimately, the right choice depends on whether you value ease of use and cloud-first flexibility or full control, security, and scalable production-grade deployment. Understanding your team’s workflow, infrastructure needs, and AI strategy will point you to the platform that fits best.

Conclusion

Both Nexos AI and TrueFoundry offer powerful solutions for managing and deploying large language models, but they cater to different needs. Nexos AI shines for teams seeking cloud-native simplicity, multi-model orchestration, and rapid experimentation, while TrueFoundry stands out for enterprise-grade scalability, security, and complex production deployments.

Your choice should align with your organization’s infrastructure, workflow, and AI strategy. By understanding the strengths of each platform, you can select the one that maximizes efficiency, performance, and control, ensuring your AI initiatives run smoothly and deliver real business impact.
