What Is a Generative AI Gateway?

Over the last few years, generative AI has moved from research labs into the center of business and everyday applications. Large Language Models (LLMs) like GPT-4, Claude, and LLaMA have demonstrated remarkable capabilities—summarizing documents, generating software code, creating images, and even acting as conversational assistants. But with this rapid adoption comes a new challenge: how do enterprises manage, govern, and scale generative AI usage across multiple providers and teams, while ensuring security, compliance, and cost efficiency?

The answer lies in a concept that is quickly gaining momentum: the Generative AI Gateway.

What is a Generative AI Gateway?

A Generative AI Gateway is a middleware layer that sits between applications and generative AI services. It plays a role similar to an API gateway, which routes and secures calls to backend services, but it is designed for the specific needs of AI models. It centralizes governance, controls access, enforces security, and optimizes the use of AI models.

In simpler terms, it acts as a control tower for all AI traffic—deciding which model to call, how much usage to allow, how to handle risky responses, and how to log activities for compliance.

Whereas a traditional API gateway manages HTTP traffic, a generative AI gateway understands:

- Tokens, not just requests. LLM providers typically bill per input and output token, so the gateway meters token consumption and enforces token-based quotas and rate limits rather than counting requests alone.

- Sensitive outputs. LLMs can leak PII (personally identifiable information), hallucinate facts, or generate harmful content. The gateway can inspect, filter, or block such responses.

- Multi-provider routing. Instead of binding your app to one LLM provider, the gateway can switch between OpenAI, Anthropic, Hugging Face, or on-prem models.
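Because billing is per token, a gateway's limiter usually debits tokens rather than request counts. The sketch below is a minimal per-team token-bucket limiter, assuming the gateway already knows each request's prompt token count and its output cap (`max_output_tokens`). `TokenBucket` and `TokenRateLimiter` are hypothetical names, not part of any specific gateway product.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Token bucket whose unit is LLM tokens, not requests."""

    def __init__(self, capacity: int, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity              # max LLM tokens spendable in a burst
        self.refill_per_sec = refill_per_sec  # LLM tokens restored per second
        self.clock = clock
        self.available = float(capacity)
        self.last = clock()

    def try_consume(self, tokens: int) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.available = min(float(self.capacity),
                             self.available + (now - self.last) * self.refill_per_sec)
        self.last = now
        if tokens <= self.available:
            self.available -= tokens
            return True
        return False


class TokenRateLimiter:
    """One bucket per team (hypothetical helper, not a real gateway API)."""

    def __init__(self, capacity: int, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, team: str, prompt_tokens: int, max_output_tokens: int) -> bool:
        if team not in self.buckets:
            self.buckets[team] = TokenBucket(self.capacity, self.refill_per_sec, self.clock)
        # Reserve the worst case up front: prompt tokens plus the output cap.
        return self.buckets[team].try_consume(prompt_tokens + max_output_tokens)
```

Reserving the worst case keeps a team from overshooting its budget mid-response; a fuller implementation would refund the unused portion once the provider reports actual usage.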

A Real-Life Analogy: Airport Security for AI Traffic

To understand the role of a generative AI gateway, imagine an international airport. Every day, thousands of planes (AI requests) arrive from multiple airlines (AI providers), each carrying passengers (data) destined for the same country (enterprise applications). Before passengers can enter the country, they must pass through immigration and security checks. This is where the system ensures order, safety, and compliance.

Here’s how this analogy maps:

- Dangerous items are blocked (content filtering). Just as airport security prevents weapons or prohibited goods from entering, a generative AI gateway prevents sensitive data leaks, toxic language, or hallucinated outputs from flowing into enterprise applications.

- Each passenger is stamped with an entry quota (usage limits). Immigration officials control the number of days a traveler can stay. Similarly, the gateway enforces quotas—ensuring that no single user, team, or department exceeds their allocated AI usage.

- Travel logs are maintained (audit and compliance). Every passport is stamped, and passenger information is logged for future verification. Likewise, the gateway records every AI interaction for compliance, observability, and forensic audits.

But let’s extend the analogy further for clarity:

- Some passengers are VIPs or diplomats who get priority processing—this is like priority routing for mission-critical AI queries.

- Certain travelers may require extra screening if they come from high-risk areas—this resembles additional checks for prompts that could trigger harmful or non-compliant outputs.

- Immigration can redirect travelers to different terminals or destinations depending on their visa type—similar to the gateway routing requests to the most suitable model based on cost, performance, or accuracy needs.

- Airports also have duty-free shops and business lounges that provide enhanced services for select travelers. In the AI world, this could mean value-added services like semantic caching, content moderation, or bias reduction before responses are delivered to the user.

In essence, the generative AI gateway is like the airport’s security, customs, and immigration combined into one streamlined checkpoint. It ensures that regardless of the airline (AI provider) or the passenger (data), the entry into the enterprise ecosystem is safe, regulated, and optimized. Without such a system, the airport (enterprise AI adoption) would descend into chaos, with unchecked entries, security threats, and overwhelming traffic.

Why Enterprises Need a Generative AI Gateway

The demand for AI governance isn’t theoretical—it’s essential. Enterprises are under immense pressure to adopt AI responsibly. Without a gateway, generative AI adoption can spiral into chaos: uncontrolled costs, security breaches, regulatory violations, and inconsistent experiences.

Key Reasons Why a Generative AI Gateway Matters:

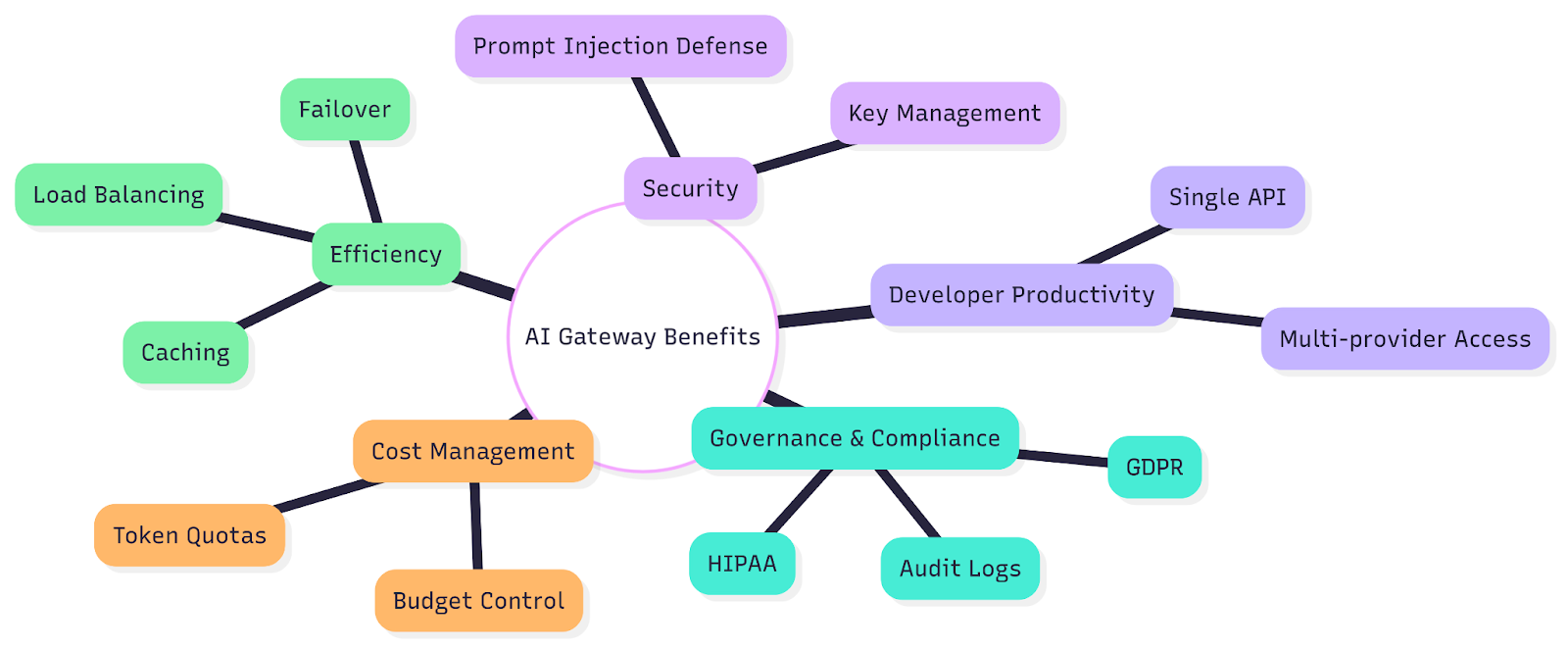

1. Governance & Compliance

- Enforce data policies and prevent leakage of sensitive information.

- Maintain audit logs for GDPR, HIPAA, and industry compliance.

2. Cost Management

- Monitor token usage across teams.

- Apply quotas to prevent runaway costs.

- Enable chargeback and showback models for business units.

3. Operational Efficiency

- Route requests to the right provider based on cost, latency, or accuracy.

- Cache frequent requests to reduce redundant API calls.

- Provide failover if one provider experiences downtime.

4. Security

- Centralize API key management.

- Detect and block prompt injection attacks.

- Mask or redact sensitive information in inputs and outputs.

5. Developer Productivity

- Provide a single entry point for multiple models.

- Allow self-service access while maintaining organizational guardrails.
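Several of the governance and security items above come down to inspecting text on its way in and out. As a minimal sketch, assuming simple regular expressions are an acceptable stand-in for a real PII detector, a gateway could mask email addresses and phone numbers in both the prompt and the model's reply. `redact`, `guarded_call`, and the patterns are illustrative and catch only a narrow slice of PII.

```python
import re
from typing import Callable, Dict, Tuple

# Illustrative patterns only; real PII detection needs much broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace each match with a [LABEL] placeholder and count what was masked."""
    counts: Dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


def guarded_call(prompt: str, call_model: Callable[[str], str]) -> str:
    """Mask PII before the prompt leaves, and again in the model's reply."""
    safe_prompt, _ = redact(prompt)
    reply = call_model(safe_prompt)  # call_model stands in for a hypothetical provider client
    safe_reply, _ = redact(reply)
    return safe_reply
```

The per-label counts are the kind of signal a gateway could write to its audit log without storing the sensitive values themselves.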

Why a Generative AI Gateway Is Key to Successful AI Adoption

If you're running a business and thinking about using AI tools like ChatGPT or Claude, you've probably realized it can get pretty messy pretty fast. That's where a generative AI gateway comes in handy. Think of it as a smart middleman that makes everything easier and safer.

One Place for Everything

Instead of having your developers learn how to connect to OpenAI, then Anthropic, then whatever new AI company pops up next week, they just connect to one place: the gateway. It's like having one remote control for all your TVs instead of juggling five different ones. This saves time and headaches, especially when new AI models come out every few months.

Pick the Right Tool for the Job

Not every task needs the most expensive, powerful AI model. Sometimes you need super accurate results for important legal work; other times you just need quick answers for customer service. With a gateway, you can easily switch between different AI models without changing your application code. It's like being able to choose between a sports car and a pickup truck depending on what you need to haul.
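Under the hood, picking the right tool can be as simple as a policy table that the gateway owns. This sketch assumes a made-up catalog (the model names, prices, and quality tiers are all hypothetical) and chooses the cheapest model that meets a task's minimum tier. The application passes only a task name, so swapping models means editing the table, not the app.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelOption:
    name: str                  # hypothetical model identifier
    cost_per_1k_tokens: float  # hypothetical price in USD
    quality: int               # hypothetical 1-5 quality tier


# Illustrative catalog; every value here is invented.
CATALOG: List[ModelOption] = [
    ModelOption("small-fast", 0.0005, 2),
    ModelOption("mid-general", 0.003, 3),
    ModelOption("large-accurate", 0.03, 5),
]

# Minimum quality tier each task type requires (assumed policy).
TASK_MIN_QUALITY = {"customer_support": 2, "summarization": 3, "legal_review": 5}


def pick_model(task: str, catalog: List[ModelOption] = CATALOG) -> ModelOption:
    """Return the cheapest model whose quality tier meets the task's minimum."""
    required = TASK_MIN_QUALITY[task]
    eligible = [m for m in catalog if m.quality >= required]
    if not eligible:
        raise ValueError(f"no model meets quality tier {required} for {task!r}")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)
```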

Keep Things Running When Stuff Breaks

AI services go down sometimes; it happens to everyone. A good gateway automatically switches to a backup when your main AI service is having problems, so your customers may not even notice. It's like having a backup generator that kicks in during a power outage.

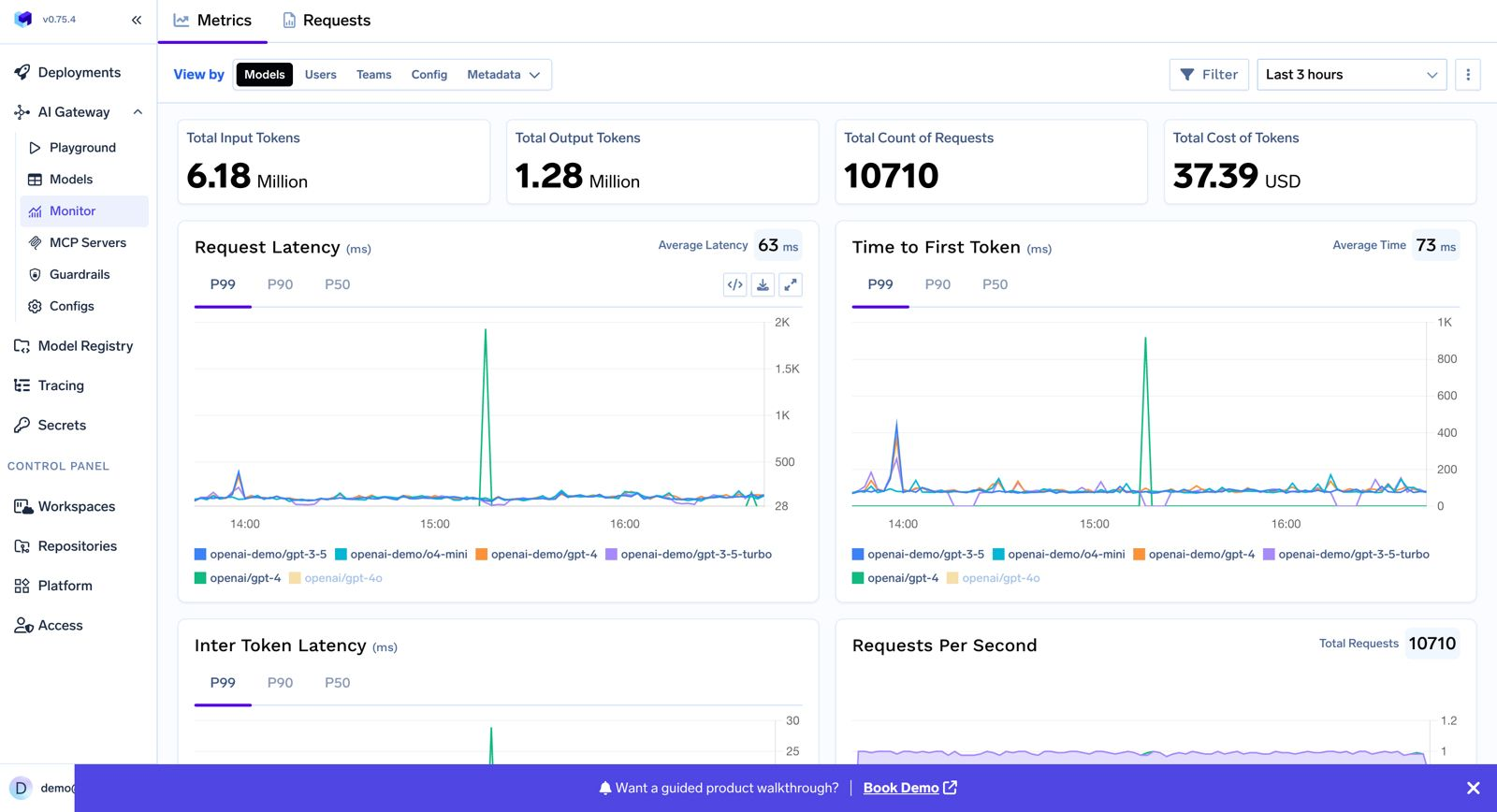

See What's Actually Happening

One big problem with AI is that it's hard to track who's using what and how much it's costing you. Gateways give you clear dashboards showing exactly how much each team is spending and what they're doing with AI. No more surprise bills at the end of the month.
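Per-team dashboards are typically built from usage records that the gateway logs for every call. Here is a minimal sketch of that aggregation, assuming each record carries `team`, `model`, `input_tokens`, and `output_tokens` fields and using invented per-1,000-token prices. The field names and prices are assumptions, not any provider's real rates.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical USD prices per 1,000 tokens as (input, output); real prices vary by provider.
PRICES: Dict[str, Tuple[float, float]] = {
    "model-a": (0.001, 0.002),
    "model-b": (0.01, 0.03),
}


def spend_by_team(records: Iterable[dict]) -> Dict[str, float]:
    """Sum the estimated cost of each logged call per team (for showback or chargeback)."""
    totals: Dict[str, float] = defaultdict(float)
    for r in records:
        in_price, out_price = PRICES[r["model"]]
        totals[r["team"]] += (r["input_tokens"] * in_price
                              + r["output_tokens"] * out_price) / 1000
    return dict(totals)
```

Pricing input and output tokens separately matters because many providers charge more for output tokens than for input tokens.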

Keep the AI in Line

AI can sometimes say weird or inappropriate things, or accidentally leak private information. A gateway acts like a filter, catching problematic responses before they reach your customers. It's like having a supervisor double-check everything before it goes out the door.

Control Your Spending

AI can get expensive fast if you're not careful. Gateways let you set spending limits for different teams or projects, so no one accidentally burns through your entire budget in a weekend. They also help reduce costs by avoiding duplicate requests and caching common responses.
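The simplest way to avoid duplicate requests is an exact-match cache. This sketch assumes a caller-supplied `call_model` function (hypothetical) and keys the cache on a hash of the model, prompt, and generation parameters, so a request with different settings, such as a different temperature, is not served someone else's answer.

```python
import hashlib
import json
from typing import Callable, Dict


class ResponseCache:
    """Exact-match cache: an identical (model, prompt, params) triple reuses the stored reply."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(model: str, prompt: str, params: dict) -> str:
        # sort_keys makes the key independent of parameter ordering.
        payload = json.dumps({"model": model, "prompt": prompt, "params": params},
                             sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, prompt: str, params: dict,
                    call_model: Callable[[str, str, dict], str]) -> str:
        k = self.key(model, prompt, params)
        if k in self._store:
            self.hits += 1
            return self._store[k]
        self.misses += 1
        reply = call_model(model, prompt, params)
        self._store[k] = reply
        return reply
```

Exact-match caching is safest with deterministic settings (for example, temperature 0); for sampled outputs, returning a cached reply changes behavior that callers may expect to vary.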

Stay Legal and Secure

If you're in healthcare, finance, or any regulated industry, you have strict rules about data privacy and security. Gateways help you follow these rules by managing access keys securely and keeping detailed logs of everything that happens. This makes audits much easier.

Let Developers Focus on Building Cool Stuff

Instead of spending time figuring out API keys and rate limits, your developers can focus on building features that actually matter to your business. The gateway handles all the boring technical stuff behind the scenes.

Avoid Getting Locked Into One Vendor

When you connect directly to one AI company's service, switching to a competitor later means rewriting a lot of code. A gateway keeps you flexible: you can easily try new models or switch providers without major headaches.

Go from Testing to Real Use

The biggest advantage might be helping you move from small experiments to actual business use. A gateway gives you the safety and control you need to let your whole company use AI, not just a few tech-savvy teams.

TrueFoundry's AI Gateway Architecture & Capabilities

Let’s explore how TrueFoundry implements this powerful concept through its rich suite of features:

Unified API Access & Broad Model Support

- Offers a single API endpoint to access 1000+ LLMs, including hosted and on-prem models.

- Vendor-agnostic: an OpenAI-compatible interface means minimal client changes and avoids lock-in to a single provider.

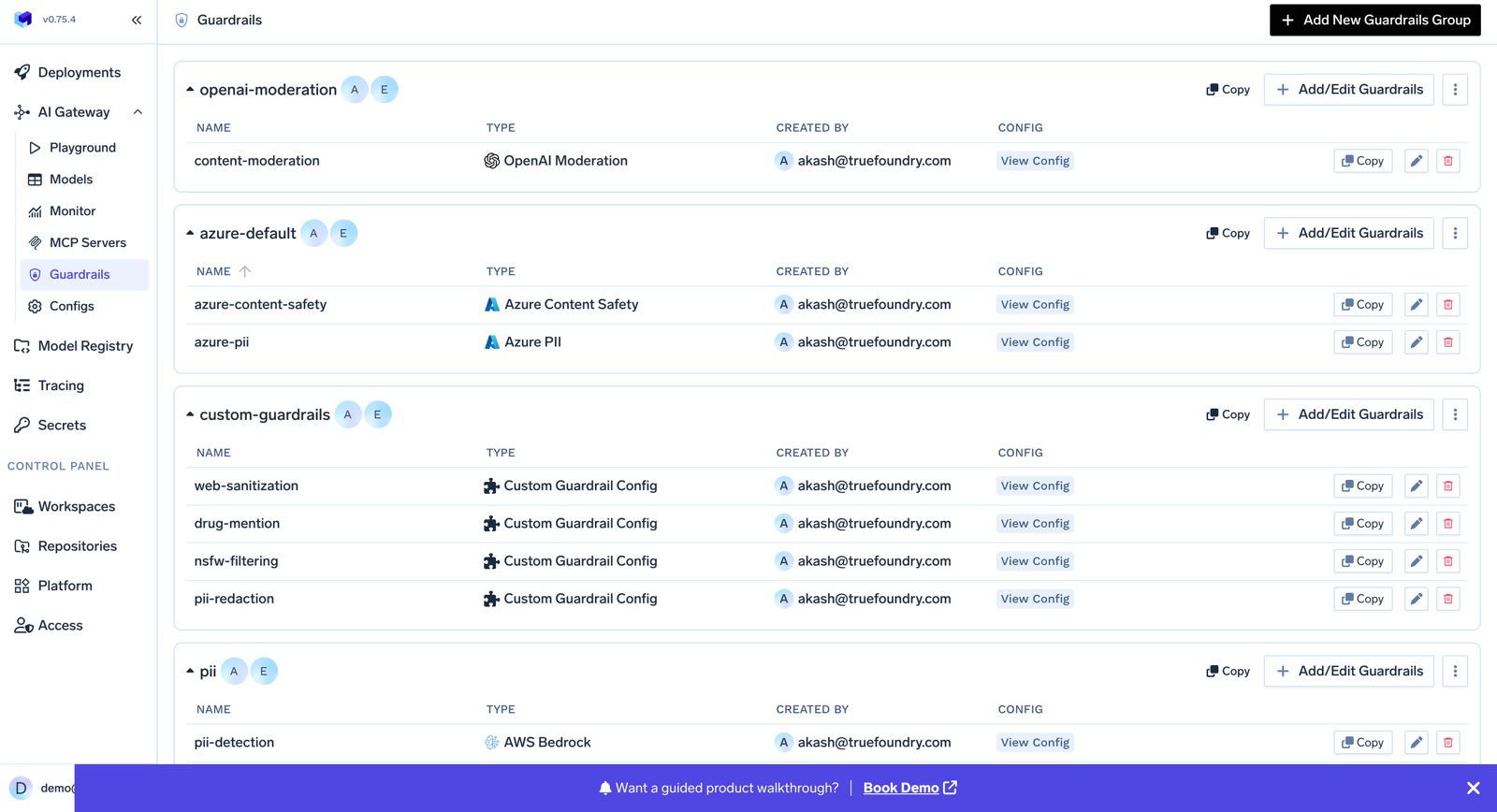

Enterprise-Grade Security & Governance

- Guardrails such as content filtering, hygiene checks, and PII protection help meet compliance standards like SOC 2, GDPR, and HIPAA.

- Access control options include API keys / Personal Access Tokens (PATs), Virtual Account Tokens (VATs), OAuth2, and role-based access management. (For more information, you can visit this link.)

Rate Limiting & Budget Controls

- Supports token- and request-based limits, configurable at user, team, model, or virtual account levels.

- Examples: limiting a user to 1,000 GPT-4 requests per day, or adjusting quotas by team or project.
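A per-user daily cap like the GPT-4 example maps naturally to a fixed-window counter keyed by user, model, and day. This is a minimal sketch assuming UTC calendar days as the window; `DailyRequestLimiter` is a hypothetical class and does not reflect TrueFoundry's actual configuration format.

```python
import datetime as dt
from collections import defaultdict
from typing import Callable, Dict, Tuple


def _utc_today() -> dt.date:
    return dt.datetime.now(dt.timezone.utc).date()


class DailyRequestLimiter:
    """Fixed-window limiter: at most limits[model] requests per user per UTC day."""

    def __init__(self, limits: Dict[str, int],
                 today: Callable[[], dt.date] = _utc_today) -> None:
        self.limits = limits  # e.g. {"gpt-4": 1000}; models not listed are unlimited
        self.today = today
        # Old windows are never evicted here; a real gateway would expire them.
        self.counts: Dict[Tuple[str, str, dt.date], int] = defaultdict(int)

    def allow(self, user: str, model: str) -> bool:
        limit = self.limits.get(model)
        if limit is None:
            return True
        key = (user, model, self.today())  # a new date starts a fresh window
        if self.counts[key] >= limit:
            return False
        self.counts[key] += 1
        return True
```

A fixed window is easy to reason about but allows a burst at the day boundary; a sliding window or token bucket smooths that out at the cost of more state.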

Load Balancing & Fallback

- Distributes traffic based on cost, latency, and availability.

- Automatic fallback on failures (HTTP 429/500 errors) to backup models, with parameter overrides such as temperature or token limits.

You can refer to this link if you want to know more about why we need load balancing.
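Here is a rough sketch of weighted routing with fallback, assuming static weights (a real gateway might derive them from cost, latency, and health signals) and a hypothetical `call(model, params)` client that raises `ProviderError` carrying the HTTP status. The retryable statuses follow the 429/500 examples above, and each target can override parameters such as temperature or token limits.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


class ProviderError(Exception):
    """Raised by a hypothetical provider client; carries the HTTP status code."""

    def __init__(self, status: int) -> None:
        super().__init__(f"provider returned HTTP {status}")
        self.status = status


@dataclass
class Target:
    model: str
    weight: float = 1.0
    # Per-target parameter overrides, e.g. a lower temperature on the backup.
    overrides: Dict[str, object] = field(default_factory=dict)


RETRYABLE_STATUSES = {429, 500}


def route(targets: List[Target], params: dict,
          call: Callable[[str, dict], str],
          rng: Optional[random.Random] = None) -> str:
    """Pick a primary target by weight, then fall back through the others on 429/500."""
    chooser = rng if rng is not None else random.Random()
    primary = chooser.choices(targets, weights=[t.weight for t in targets], k=1)[0]
    order = [primary] + [t for t in targets if t is not primary]
    last_error: Optional[ProviderError] = None
    for target in order:
        try:
            # Target overrides take precedence over the caller's parameters.
            return call(target.model, {**params, **target.overrides})
        except ProviderError as err:
            if err.status not in RETRYABLE_STATUSES:
                raise  # e.g. a 400 is a bad request; retrying elsewhere won't help
            last_error = err
    assert last_error is not None  # only reachable if every target failed
    raise last_error
```

Only transient statuses trigger fallback here; forwarding a malformed request to every backup would just multiply the cost of the same error.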

Observability, Logging & Metrics

- Telemetry via OpenTelemetry-compatible logging, usage tracking, and model performance dashboards.

- A prompt playground with versioning and traceability helps manage iterative prompt engineering.

Multimodal & Batch Processing

- Supports text, image, and audio inputs where compatible.

- Handles batch inference efficiently to process larger workloads.

Deployment Flexibility

- Can be deployed via Helm, in your own VPC, across AWS/GCP/Azure, on-prem, or air-gapped environments.

- Compatible with diverse inference engines (vLLM, Triton, SGLang, etc.) and supports autoscaling for self-hosted LLMs.

Future Directions of Generative AI Gateways

Generative AI gateways are still evolving, and the future looks promising. As enterprises push for greater trust, scale, and efficiency, gateways will take on even more sophisticated roles:

- Semantic Caching and Retrieval-Augmented Generation (RAG): Gateways will cache not just by exact request text but by semantic similarity, reducing redundant LLM queries and cutting costs while improving performance.

- Hallucination Detection and Fact Verification: Built-in fact-checking layers will validate responses against trusted databases or internal knowledge sources, minimizing the risk of misleading outputs.

- Federated AI Governance: In large enterprises with many AI teams, gateways will unify and enforce consistent policies across divisions, creating a shared foundation of trust and compliance.

- Edge AI Gateways: As on-device and private LLMs grow in capability, gateways will extend to edge deployments, powering low-latency, secure, and private AI interactions in industries like healthcare, finance, and manufacturing.
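To illustrate the semantic caching idea, here is a minimal sketch that returns a cached reply when a new prompt is similar enough to a stored one. It assumes a pluggable `embed` function; the `toy_embed` bag-of-words stand-in is purely lexical, so a real deployment would swap in an embedding model and a vector index. The 0.9 default threshold is an arbitrary assumption.

```python
import math
import re
from collections import Counter
from typing import Callable, List, Optional, Tuple


def toy_embed(text: str) -> Counter:
    """Stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Serve a stored reply when a new prompt is similar enough to an earlier one."""

    def __init__(self, threshold: float = 0.9,
                 embed: Callable[[str], Counter] = toy_embed) -> None:
        self.threshold = threshold  # assumed cutoff; would need tuning per workload
        self.embed = embed
        self.entries: List[Tuple[Counter, str]] = []

    def lookup(self, prompt: str) -> Optional[str]:
        query = self.embed(prompt)
        best_score, best_reply = 0.0, None
        # Linear scan for clarity; a real gateway would use a vector index.
        for vector, reply in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_reply = score, reply
        return best_reply if best_score >= self.threshold else None

    def store(self, prompt: str, reply: str) -> None:
        self.entries.append((self.embed(prompt), reply))
```

The threshold is the key design trade-off: set it too low and users receive answers to questions they did not ask; set it too high and the cache behaves like an exact-match cache.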

These advancements will make gateways more than just a control layer: they will become intelligent hubs that actively enhance outcomes, optimize spending, and help enforce compliance across the enterprise AI ecosystem.

Closing Thoughts

Generative AI has proven itself as more than just a technological novelty—it’s becoming the backbone of digital transformation across industries. From automating customer support to assisting in complex decision-making, the opportunities are endless. But as enterprises embrace this power, they face a paradox: the more value AI generates, the greater the risks of mismanagement, uncontrolled costs, and compliance failures.

This is where Generative AI Gateways emerge not just as a convenience, but as a strategic necessity. They act as the central nervous system of enterprise AI adoption—coordinating model usage, enforcing governance, managing security, and providing visibility into how AI is actually used at scale. Without such an infrastructure layer, organizations risk fragmentation, inefficiency, and exposure to significant reputational or financial harm.

Think about it this way: API gateways became indispensable when microservices took over enterprise architecture. Cloud management platforms became mandatory when businesses shifted from on-prem to hybrid cloud. Similarly, as enterprises transition into an AI-first era, AI gateways will be the linchpin of safe, scalable, and cost-effective adoption.

Over time, we’ll see these gateways evolve far beyond traffic routing and monitoring. They will embed intelligent orchestration—dynamically combining multiple models to produce verifiable, domain-specific, and bias-resistant results. They will become learning systems themselves, improving caching strategies, optimizing spend, and even self-tuning governance policies. And with the rise of edge AI, gateways will extend to new environments where speed, privacy, and autonomy matter just as much as accuracy.

Enterprises that invest early in robust generative AI gateway strategies won’t just gain efficiency; they’ll position themselves as leaders in trust, compliance, and innovation. Those that neglect them may find themselves overwhelmed by runaway costs, shadow AI projects, and regulatory scrutiny.

Built for Speed: ~10ms Latency, Even Under Load


- Handles 350+ RPS on just 1 vCPU — no tuning needed

- Production-ready with full enterprise support

TrueFoundry AI Gateway delivers ~3–4 ms latency, handles 350+ RPS on 1 vCPU, scales horizontally with ease, and is production-ready, while LiteLLM suffers from high latency, struggles beyond moderate RPS, lacks built-in scaling, and is best for light or prototype workloads.
