Enterprise AI Security with MCP Gateway & Runtime Guardrails

We hosted a webinar with the team at Palo Alto Networks, with 400+ people joining from different regions and functions. Our goal at TrueFoundry has always been to build infrastructure that supports your GenAI stack, and security is our next milestone. In this blog, we distill what we discussed and learned during the webinar into the key factors that will help you make decisions about scaling AI initiatives across your company. It’s a quick 6-7 minute read, and it also serves as a practical guide to AI security that you can share with security leaders, platform teams, and AI builders.

From packets to prompts: the new perimeter is context and identity

Traditional security assumed that if an adversary couldn’t get past your firewall, they couldn’t reach your sensitive data. Today, an engineer can paste snippets of source code into a chat to “fix a bug” and walk sensitive IP straight out the door without touching a firewall. In this world, context and identity become the new perimeter: who is asking, what are they asking for, and what data and tools are being pulled into that context?

It’s a fundamental shift in the threat model. Instead of blocking ports or hardening endpoints alone, we have to secure the language interface to systems and data, and everything that language can cause the system to do.

Why AI security feels different now

Four failure modes show up first for most teams:

- Prompt injection & context tampering: Hidden instructions embedded in support tickets, SharePoint pages, long chat histories, or retrieved documents get treated as valid context.

- Data leakage (exfiltration): Sensitive text, including PII, secrets, internal plans, and even training artifacts, can appear in prompts or outputs.

- Toxic/off-brand content: Generations that fail company policy or brand guidelines.

- Confident hallucinations: Plausible-sounding but wrong assertions that drive real decisions.

If you want to go deeper, the OWASP Top 10 for LLM Applications is a solid map. In practice, we have seen that these four areas are where most programs begin.

What attendees told us live

We ran quick polls during the session. The top concern, by a wide margin, was data leakage/exfiltration, followed by prompt injection and lack of observability into model and agent behavior. That aligns with what we see in enterprise rollouts: if you secure inputs and outputs first and then make every interaction traceable, you have covered most of what AI security needs.

The six pillars of safe AI adoption

Here’s the short checklist we’ve seen work across industries:

- Authentication & Access Control: Don’t collapse everything behind a single shared model key. Preserve user/service identity end-to-end. Rotate keys. Avoid shared tokens.

- Input guardrails: Catch prompt injection, context tampering, and untrusted sources before they reach the model.

- Output guardrails: Block PII leaks, sensitive data egress, and toxic/off-brand text. Enforce “answer only if grounded in approved sources” for regulated workflows.

- Full observability: Trace every request: user identity, tool calls, model choice, parameters, cost, and guardrail decisions. No more shadow usage.

- Cost control & loop breakers: Agents can spiral out. Impose per-user and per-app budgets, loop detection, and hard circuit breakers. Reduce the blast radius from “one bad prompt.”

- Supply chain checks: Only use models and connectors you trust. Open-source isn’t automatically safe—check what you’re pulling before it runs in prod.

Why MCP raises the stakes

Now, LLMs no longer just talk—they can act. With the Model Context Protocol (MCP), agents discover tools and perform actions across GitHub, Jira, Slack, cloud APIs, internal systems, and more. That makes MCP a huge accelerator for developer productivity and a new class of risk. A poorly scoped agent can close 500 tickets overnight, exfiltrate data, or delete a branch because a sneaky instruction slipped in during a retrieval step.

The risk shifts from “wrong answer” to “unauthorized action.” Tools multiply both value and risk, so your controls have to cover what agents can do, not just what they say.

What to prioritize in an AI Gateway

When MCP enters the picture, elevate your baseline in these areas:

- Authentication & Authorization for MCP servers (who are you, what can you do).

- Granular access control (tool and operation level).

- Guardrails (input/output and tool-use policies).

- Observability (trace, attribute, audit).

- Rate limiting & quotas (per-user/app loop breakers).

- Supply chain security (vetted servers, signed artifacts, pinned versions).

Let’s dig into some less-discussed factors

- Guardrails in the traffic path:

The gateway sits between agents and tools/models and applies guardrails on both input and output. You can scope policies per user, per app, per model, per team, or via metadata. Typical checks:

- Input: prompt injection, role escalation inside long conversations, malicious URLs/code in retrieved context.

- Output: DLP masking (SSNs/cards/tokens), toxicity and topic policies, grounding checks for regulated answers.

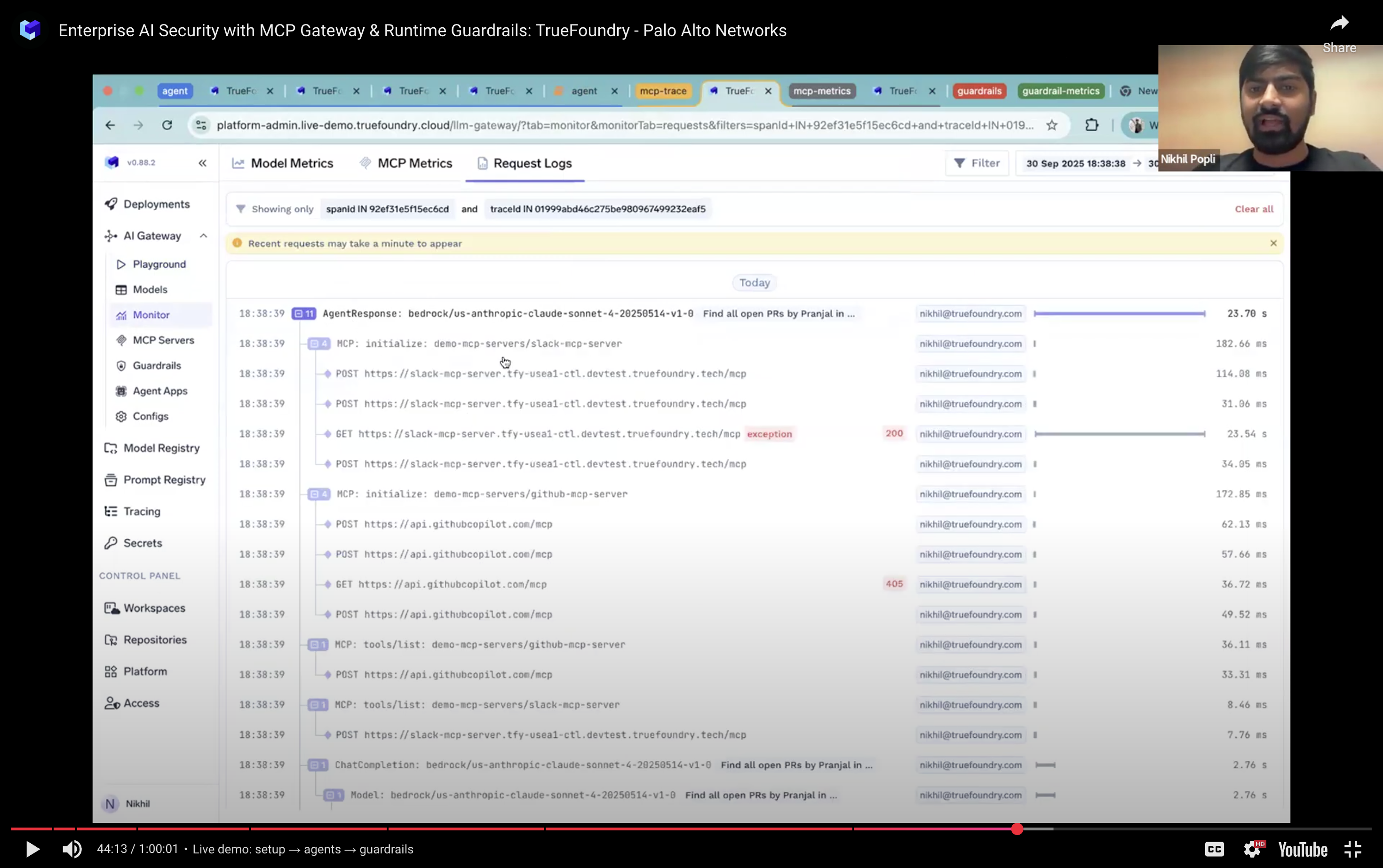
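To make the input/output split concrete, here is a minimal Python sketch of the two kinds of checks. The regex patterns, the phrase list, and the function names `check_input` and `mask_output` are hypothetical illustrations, not the gateway’s or Prisma AIRS’s actual detectors. Real prompt-injection detection relies on trained classifiers rather than keyword lists.

```python
import re

# Illustrative DLP patterns only; production detectors are far richer.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# 13-16 digits, optionally separated by single spaces or dashes between digits.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")
# Naive phrase list standing in for a real injection classifier.
INJECTION_PHRASES = (
    "ignore previous instructions",
    "ignore all prior instructions",
    "disregard the system prompt",
    "you are now",
)


def check_input(text: str) -> list[str]:
    """Return the input rules that fired for a prompt or retrieved chunk."""
    lowered = text.lower()
    return [f"injection:{p}" for p in INJECTION_PHRASES if p in lowered]


def mask_output(text: str) -> tuple[str, list[str]]:
    """Mask SSNs and card-like numbers; return masked text plus rules that fired."""
    hits = []
    if SSN_RE.search(text):
        hits.append("dlp:ssn")
        text = SSN_RE.sub("[SSN]", text)
    if CARD_RE.search(text):
        hits.append("dlp:card")
        text = CARD_RE.sub("[CARD]", text)
    return text, hits
```

A gateway would run `check_input` on the user prompt and on every retrieved chunk before the model call, and `mask_output` on the response before it reaches the user, logging the returned rule names as guardrail decisions.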

- Observability: trace, attribute, audit:

Without attribution and decision logs, you can’t investigate incidents or prove enforcement. The AI gateway emits end-to-end traces (identity, prompt metadata, model parameters, every tool call with params/results, guardrail rule hits/decisions, and costs). Use the dashboards to answer questions like:

- Which agent is hammering code_executor: a valid workload or a malicious prompt?

- Why did ask_bot latency jump to 20s: an API slowdown or a recursive loop?

- Why is one tool eating 80% of calls: is it business-critical or just over-privileged?
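A minimal sketch of how attributed trace records answer questions like these, assuming a hypothetical `TraceEvent` schema. The field and function names are illustrative, not the gateway’s actual trace format.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class TraceEvent:
    """One gateway trace record (hypothetical schema)."""
    user: str
    agent: str
    tool: str
    latency_ms: float
    cost_usd: float
    guardrail_hits: list[str] = field(default_factory=list)


def tool_call_share(events: list[TraceEvent]) -> dict[str, float]:
    """Fraction of all tool calls attributed to each tool."""
    counts = Counter(e.tool for e in events)
    total = sum(counts.values())
    return {tool: n / total for tool, n in counts.items()} if total else {}


def top_callers(events: list[TraceEvent], tool: str, n: int = 3) -> list[tuple[str, int]]:
    """Which agent/user pairs call a given tool most often."""
    counts = Counter(f"{e.agent}/{e.user}" for e in events if e.tool == tool)
    return counts.most_common(n)
```

Because every record carries both the agent and the human or service identity, “who is hammering code_executor?” becomes a one-line query instead of a log hunt.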

- Rate limiting & budgets: loop breakers by design:

Agents can spiral. Treat budgets and QPS as security controls:

- Per-user/app/tool quotas and per-minute caps to contain blast radius.

- Loop detection to trip a breaker on repeating patterns.

- Stricter caps for sensitive services (payments, customer data).
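The controls above can be sketched in a few lines of Python: a per-key token bucket for quotas and a breaker that trips when a session repeats the same tool call. Class names, parameters, and thresholds are hypothetical, chosen for illustration rather than taken from any product configuration.

```python
import time
from collections import defaultdict, deque


class TokenBucket:
    """Per-key quota: refills `rate` tokens per second, allows bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = defaultdict(lambda: capacity)  # each new key starts full
        self.last_seen = {}

    def allow(self, key: str) -> bool:
        now = self.clock()
        elapsed = now - self.last_seen.get(key, now)
        self.last_seen[key] = now
        self.tokens[key] = min(self.capacity, self.tokens[key] + elapsed * self.rate)
        if self.tokens[key] >= 1:
            self.tokens[key] -= 1
            return True
        return False


class LoopBreaker:
    """Trips when a session repeats the same tool call `threshold` times in its last `window` calls."""

    def __init__(self, window: int = 10, threshold: int = 3):
        self.threshold = threshold
        self.recent = defaultdict(lambda: deque(maxlen=window))

    def record(self, session: str, tool: str, args: str) -> bool:
        """Record a call; return True if the breaker should trip."""
        calls = self.recent[session]
        calls.append((tool, args))
        return calls.count((tool, args)) >= self.threshold
```

Stricter caps for sensitive services can be a second `TokenBucket` with a lower `rate`, keyed on the tool name (for example, a payments tool) instead of the user.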

- Supply-chain integrity for MCP and models:

If you don’t vet servers, a “Slack MCP” could be a malicious package quietly shipping out chat logs. Auto-updates can pull tampered versions. The governance answer is a vetted/curated registry, pinned versions, and signed artifacts—plus model scanning and red-team runs before production.
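Pinning and integrity checks can be sketched with the standard library. A curated registry entry records the exact approved version and the SHA-256 digest of its artifact, and the gateway refuses anything else. `PinnedServer` and `verify_artifact` are hypothetical names. A digest match proves the bytes are the ones you vetted, but it does not replace verifying a publisher’s signature with proper signing tooling.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class PinnedServer:
    """Curated registry entry: exact approved version plus SHA-256 of the vetted artifact."""
    name: str
    version: str
    sha256: str


def verify_artifact(entry: PinnedServer, version: str, artifact: bytes) -> None:
    """Raise ValueError unless this is the pinned version with the expected digest."""
    if version != entry.version:
        # Blocks silent auto-updates: a new version must be re-vetted and re-pinned.
        raise ValueError(f"{entry.name}: version {version} is not pinned (expected {entry.version})")
    digest = hashlib.sha256(artifact).hexdigest()
    if digest != entry.sha256:
        raise ValueError(f"{entry.name}: digest mismatch, refusing to load")
```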

How TrueFoundry and Palo Alto Networks help you secure your GenAI infrastructure

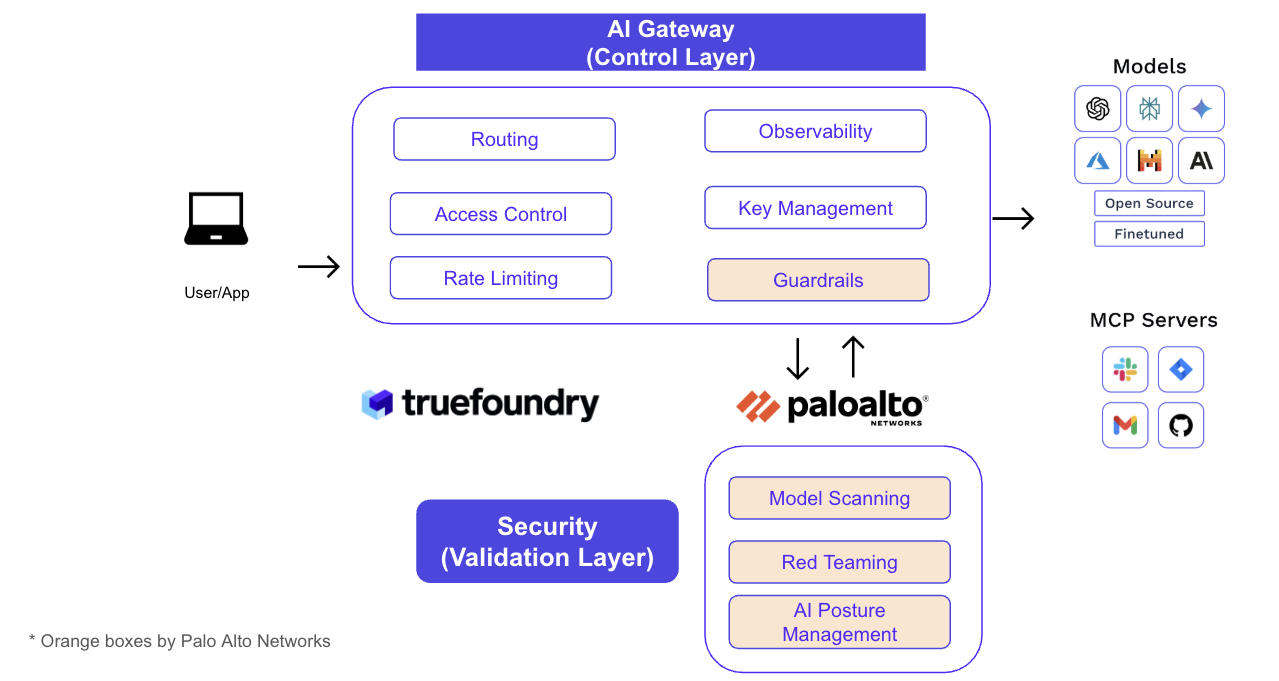

Agents are most useful when they can call tools to get real work done. MCP standardizes how agents discover and invoke those tools. But power demands control. Our recommended pattern is a two-layer design:

- AI Gateway (control layer): The central place for routing, access control, rate limiting, key management, observability, and guardrails. This is the single proxy layer that all agent/model/tool traffic flows through. It is also where you centralize MCP server auth modes (No Auth for demos, Header/Bearer, and OAuth for Slack/GitHub/Atlassian), so each team doesn’t have to solve auth over and over.

- Security (validation layer): Runtime inspection, AI posture management, model scanning, and red-teaming, delivered via Palo Alto Networks Prisma AIRS, integrated inline with the gateway.

But why split control and validation?

Putting control in one place gives platform teams a great developer experience (one endpoint, unified SDKs, self-serve onboarding). Putting validation in its own layer gives security teams deep hooks and evidence (guardrail verdicts, DLP masking, URL/code inspection, and posture checks) without blocking developer velocity.
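The split can be expressed as an interface boundary. In this minimal sketch, the control layer (`Gateway`) owns routing and calls a pluggable `Validator` on the way in and on the way out. `Validator`, `Verdict`, and `KeywordValidator` are hypothetical stand-ins and do not represent the Prisma AIRS API. The sketch fails closed: if the validator raises, the request is blocked.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Protocol


@dataclass
class Verdict:
    allowed: bool
    reason: str = ""
    masked_text: Optional[str] = None  # validator may return a DLP-masked version


class Validator(Protocol):
    """Validation-layer interface (hypothetical)."""

    def inspect(self, stage: str, text: str) -> Verdict: ...


class Gateway:
    """Control layer: routes the request and consults the validator before and after."""

    def __init__(self, validator: Validator, route: Callable[[str], str]):
        self.validator = validator
        self.route = route

    def _inspect(self, stage: str, text: str) -> Verdict:
        try:
            return self.validator.inspect(stage, text)
        except Exception as exc:  # fail closed if the validation layer raises (e.g. a timeout)
            return Verdict(False, f"validator error: {exc}")

    def handle(self, prompt: str) -> str:
        pre = self._inspect("input", prompt)
        if not pre.allowed:
            return f"blocked: {pre.reason}"
        response = self.route(prompt)  # model/tool call chosen by the control layer
        post = self._inspect("output", response)
        if not post.allowed:
            return f"blocked: {post.reason}"
        return post.masked_text if post.masked_text is not None else response


class KeywordValidator:
    """Toy validator for the example; a real security layer does far more."""

    def inspect(self, stage: str, text: str) -> Verdict:
        if stage == "input" and "ignore previous instructions" in text.lower():
            return Verdict(False, "prompt injection")
        return Verdict(True)
```

Failing closed is a design choice. A low-risk internal app might instead allow and log when validation is slow, which is the kind of trade-off per-application safety profiles are meant to capture.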

Three-layer authorization

Our CTO Abhishek walked through a simple model for MCP authorization within TrueFoundry's AI Gateway:

- Who are you? The gateway verifies the human or service identity (e.g., via your IdP token) at ingress.

- What can you do? The gateway enforces policy scoped to the specific MCP server and tool/operation (“read PRs”/“comment PRs,” but “no branch delete”).

- How do we prove it downstream? The gateway does token translation to exactly what the target server expects (swap to Slack/GitHub OAuth, or a short-lived header token).

This “3-layer authN/Z” pattern removes one-off secrets, keeps attribution, and prevents over-privileged bots from doing irreversible damage.

Example: A PR-review agent can list PRs and leave comments, but AI gateway policy denies branches.delete even if a prompt tries “also delete main after summarizing.” The denial—and the identity behind the attempt—are logged.
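Here is a minimal sketch of the three layers applied to that example. The token table, the `POLICY` mapping, operation names such as `pulls.comment`, and the vault dictionary are all hypothetical. A real gateway would validate a signed IdP token and fetch short-lived credentials from a secret store rather than a dict.

```python
from dataclasses import dataclass

# Hypothetical policy: allowed operations per role, per MCP server. Anything absent is denied.
POLICY = {
    "pr-review-agent": {"github": {"pulls.list", "pulls.comment"}},
}
AUDIT_LOG: list[dict] = []


@dataclass
class Identity:
    subject: str  # human or service identity
    role: str


def verify_identity(token: str) -> Identity:
    """Layer 1, who are you? Stub lookup standing in for IdP token validation."""
    known = {"tok-123": Identity("svc:pr-bot", "pr-review-agent")}
    if token not in known:
        raise PermissionError("unknown or invalid token")
    return known[token]


def authorize(identity: Identity, server: str, operation: str) -> bool:
    """Layer 2, what can you do? Deny by default and log every decision with the identity."""
    allowed = operation in POLICY.get(identity.role, {}).get(server, set())
    AUDIT_LOG.append({"subject": identity.subject, "server": server, "op": operation, "allowed": allowed})
    return allowed


def downstream_headers(server: str, vault: dict[str, str]) -> dict[str, str]:
    """Layer 3, prove it downstream: swap in the credential the target server expects."""
    return {"Authorization": f"Bearer {vault[server]}"}
```

The caller’s own token never reaches GitHub or Slack. The gateway translates it, so a leaked agent credential cannot be replayed against the downstream server.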

Virtual MCP servers

We also covered virtual MCP servers. In short, don’t hand agents the entire Jira/GitHub/Confluence catalogs. Compose a Virtual MCP server that exposes just what the workflow needs, for instance Jira.create_issue, GitHub.create_pr, and Confluence.search_docs, as a single Engineering MCP endpoint. You get cleaner prompts, faster approvals, and a smaller blast radius by design.
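A virtual MCP server is essentially an allowlist over upstream catalogs plus routing. This sketch assumes a hypothetical `UPSTREAM` registry and uses names of our own choosing (`compose_virtual_server`, `route_call`). It is not TrueFoundry’s implementation.

```python
# Hypothetical upstream catalogs; real servers expose many more tools.
UPSTREAM = {
    "Jira": {"create_issue", "close_issue", "delete_project", "search"},
    "GitHub": {"create_pr", "merge_pr", "delete_branch", "list_prs"},
    "Confluence": {"search_docs", "edit_page", "delete_page"},
}


def compose_virtual_server(name: str, selection: list[str]) -> dict:
    """Expose only the selected `Server.tool` names, checking each exists upstream."""
    for qualified in selection:
        server, _, tool = qualified.partition(".")
        if tool not in UPSTREAM.get(server, set()):
            raise ValueError(f"{qualified} is not offered by any registered server")
    return {"name": name, "tools": list(selection)}


def route_call(virtual: dict, qualified: str) -> tuple[str, str]:
    """Map a call on the virtual server to (upstream server, tool); reject anything not exposed."""
    if qualified not in virtual["tools"]:
        raise PermissionError(f"{qualified} is not exposed by {virtual['name']}")
    server, _, tool = qualified.partition(".")
    return server, tool
```

Destructive operations such as `GitHub.delete_branch` still exist upstream, but an agent connected to the Engineering endpoint never sees them and cannot call them.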

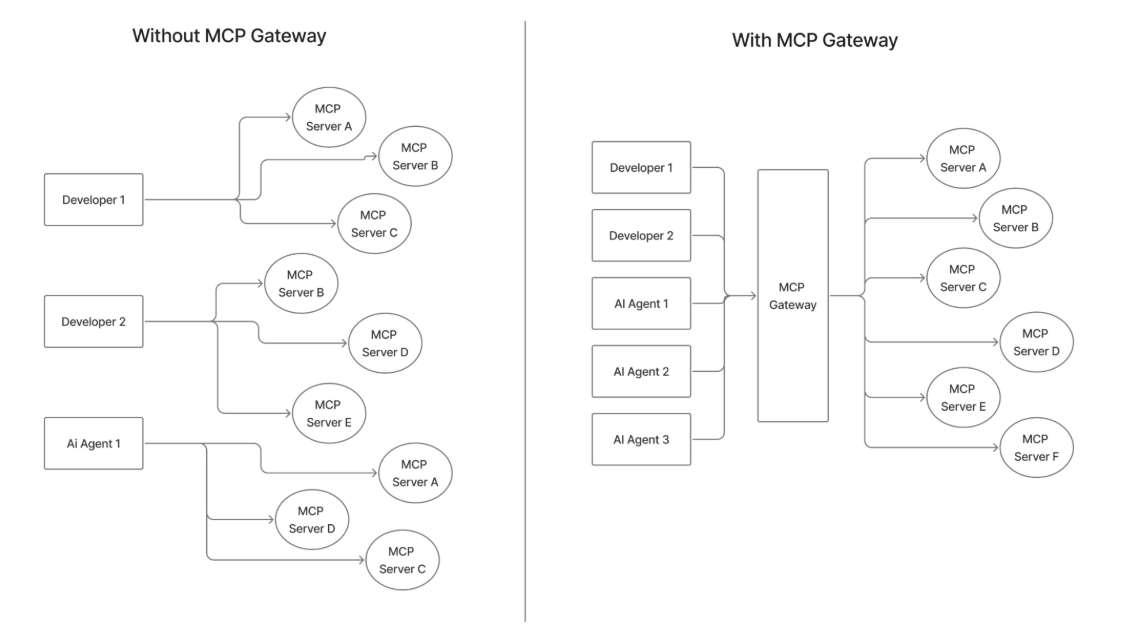

What the MCP gateway gives you

A well-designed MCP Gateway acts like a policy-aware switchboard between agents and tools:

- One place for discovery and access control: Register MCP servers once. Enforce who can see which tools.

- Tool-level permissions and curated registries: Present only the allowed tool surface per app or per team.

- Smart tool selection: Don’t pass a thousand tools into a single prompt context.

- Global rate limits and quotas: Contain loops and runaway costs.

- Unified tracing across tools and models: Attribute every action to a human or service identity, with evidence of what policy fired where.
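Smart tool selection can be approximated by ranking tool descriptions against the prompt and passing only the top few into context. The sketch below uses plain keyword overlap purely for illustration (a production router might use embeddings or a small classifier). `select_tools` and the sample catalog in the example are hypothetical.

```python
import re


def _words(text: str) -> set[str]:
    """Lowercase alphabetic tokens; underscores in tool names act as separators."""
    return set(re.findall(r"[a-z]+", text.lower()))


def select_tools(prompt: str, tools: dict[str, str], k: int = 3) -> list[str]:
    """Return up to k tool names whose name and description share the most words with the prompt."""
    query = _words(prompt)
    scored = [(len(query & _words(f"{name} {desc}")), name) for name, desc in tools.items()]
    scored = [(score, name) for score, name in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, ties by name
    return [name for _, name in scored[:k]]
```

For a prompt like “list open pull requests and post a message on Slack,” only a GitHub listing tool and a Slack posting tool would be forwarded, not the whole catalog.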

If you compare “no MCP gateway” vs. “with MCP gateway,” the difference is stark: fewer bespoke connections, central authentication flows, centralized credential management, enterprise-grade audit trails, and a vetted server catalog instead of a sprawl of unmanaged scripts.

How Palo Alto Prisma AIRS strengthens guardrails within the gateway layer

- Runtime security: Inspect prompts and outputs for prompt injection, malicious URLs or code, toxic content, agent-specific threats, and more. Enforce DLP policies and masking in real time.

- AI Security Posture Management (AI-SPM): Catch misconfigurations early and enforce best practices for model and agent services.

- Red teaming: Pentest AI-native workflows and measure policy effectiveness.

- Model scanning: Detect vulnerabilities inside model files themselves (deserialization, backdoors, malware).

- Agent security: Watch for tool misuse and communication poisoning across agents.

You can tune safety profiles per application to balance latency with protection, and all decisions are logged for audit purposes.
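A per-application profile can be as small as a list of checks, a latency budget, and a decision about what happens when that budget is exceeded. The check names, timeouts, and strict-by-default fallback below are hypothetical illustrations, not Prisma AIRS settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyProfile:
    checks: tuple[str, ...]
    timeout_ms: int    # latency budget for the validation call
    fail_closed: bool  # block (True) or allow-and-log (False) when the budget is exceeded


PROFILES = {
    "internal-chat": SafetyProfile(("prompt_injection", "toxicity"), timeout_ms=150, fail_closed=False),
    "customer-support": SafetyProfile(
        ("prompt_injection", "toxicity", "dlp_mask", "url_scan"), timeout_ms=400, fail_closed=True
    ),
}
STRICT_DEFAULT = SafetyProfile(
    ("prompt_injection", "toxicity", "dlp_mask", "url_scan", "code_scan"), timeout_ms=500, fail_closed=True
)


def profile_for(app: str) -> SafetyProfile:
    """Unregistered apps get the strictest profile rather than none."""
    return PROFILES.get(app, STRICT_DEFAULT)


def on_timeout(profile: SafetyProfile) -> str:
    """Action the gateway takes (and logs) when validation exceeds the profile's budget."""
    return "block" if profile.fail_closed else "allow_and_log"
```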

A quick look at the demo

We demonstrated an agent that lists open pull requests for a teammate and nudges them on Slack. The trace shows each step (tool calls, model reasoning, timing) and the guardrail decisions along the way. The dashboard highlights which tools consume the most calls, latency spikes, and any loop-like behavior. That’s the difference between hoping an agent is safe and knowing it is. You can watch the demo in the YouTube recording linked at the end of this post.

Questions we answered live

“Why not just use a normal API gateway?”

Because AI workloads add new requirements: token translation per tool, tool-level RBAC, input/output guardrails, rich auditing/traceability, and smart tool routing. A classic gateway knows nothing about prompts, traces, or model/tool semantics.

“Can we monitor existing tools like ChatGPT or cloud IDEs?”

If the tool lets you set a proxy or custom model endpoint, route it through the gateway and enforce guardrails/observability there. If it does not expose that control, you cannot add transparent inspection in the middle.

“How do we avoid harmful fine-tuning?”

Watch your data: exclude PII and secrets. Validate provenance for any base model you ingest. Scan model files for hidden behaviors before you train.
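A pre-training screen for the first point might look like the sketch below. The three detectors are illustrative assumptions: an email pattern, the `AKIA` prefix used by AWS access key IDs, and PEM private-key headers. They are far from exhaustive. In practice you would pair a screen like this with a dedicated DLP or secret scanner.

```python
import json
import re

# Illustrative detectors only; not a complete PII or secret taxonomy.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def screen_records(lines):
    """Split JSONL training records into (kept, rejected); rejected entries carry the rules that fired."""
    kept, rejected = [], []
    for line in lines:
        record = json.loads(line)
        text = json.dumps(record)  # scan every field, not just the prompt
        hits = [name for name, rx in PATTERNS.items() if rx.search(text)]
        if hits:
            rejected.append((record, hits))
        else:
            kept.append(record)
    return kept, rejected
```

Keeping the rejected records with their rule names, instead of silently dropping them, leaves an audit trail of what was excluded from the fine-tuning set and why.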

“Do developers get SDKs?”

Standard MCP clients work out of the box. Prisma AIRS exposes a server and SDK, and the gateway provides copy-paste snippets for Python/TypeScript and popular agent frameworks.

Watch the full session

If you missed it—or want your platform and security teams to catch up—here’s the recording:

YouTube: https://youtu.be/hWNV2v3C8SA

Try it and talk with us

We’re offering a one-month trial of the MCP Gateway—available as SaaS or self-hosted. If you want a 30-minute architecture review to calibrate costs, latency, and guardrail profiles, we’re happy to help. You’ll get:

- Free setup and onboarding

- A private Slack/Teams channel for support

- Access to observability dashboards and guardrail profiles tailored to your apps

Huge thanks to the Palo Alto Networks team for joining us and for pushing the state of AI security forward.
