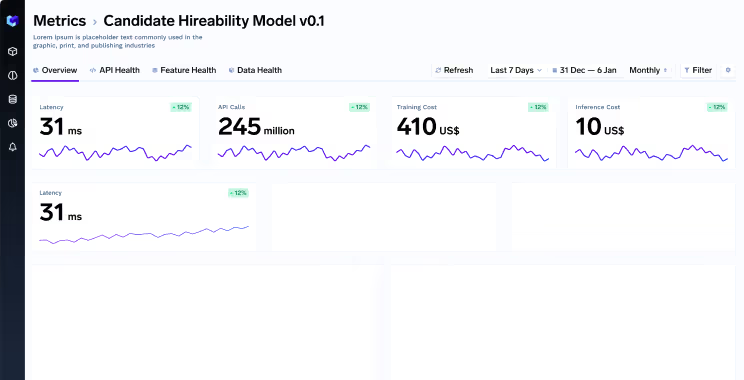

In this blog, we summarize the open-source LLMs that we have benchmarked. We measured each model's latency, cost, and requests per second, which should help you evaluate whether a given model is a good choice for your business requirements.

Deploying open-source Large Language Models (LLMs) at scale while keeping them reliable, low-latency, and cost-effective can be challenging. Drawing on our experience building LLM infrastructure and deploying it for our clients, we have compiled a list of the primary challenges that teams commonly encounter in this process.

This blog assumes an understanding of fine-tuning and gives only a very brief overview of LoRA. The focus here is on serving LoRA fine-tuned models, especially when you have many of them.

In this article, we will talk about how to productionize a question-answering bot on your docs. We will also deploy it in your own cloud environment and enable the use of open-source LLMs instead of OpenAI when data privacy and security are core requirements.

In this article, we discuss deploying the Falcon model on your own cloud. Falcon is a series of language models developed by the Technology Innovation Institute in Abu Dhabi and released under the Apache 2.0 license. Notably, Falcon-40B stands out as a truly open model that surpasses numerous closed-source models in its capabilities. This opens up opportunities for professionals, enthusiasts, and the industry to build a wide range of applications.
