Observability in AI Gateway

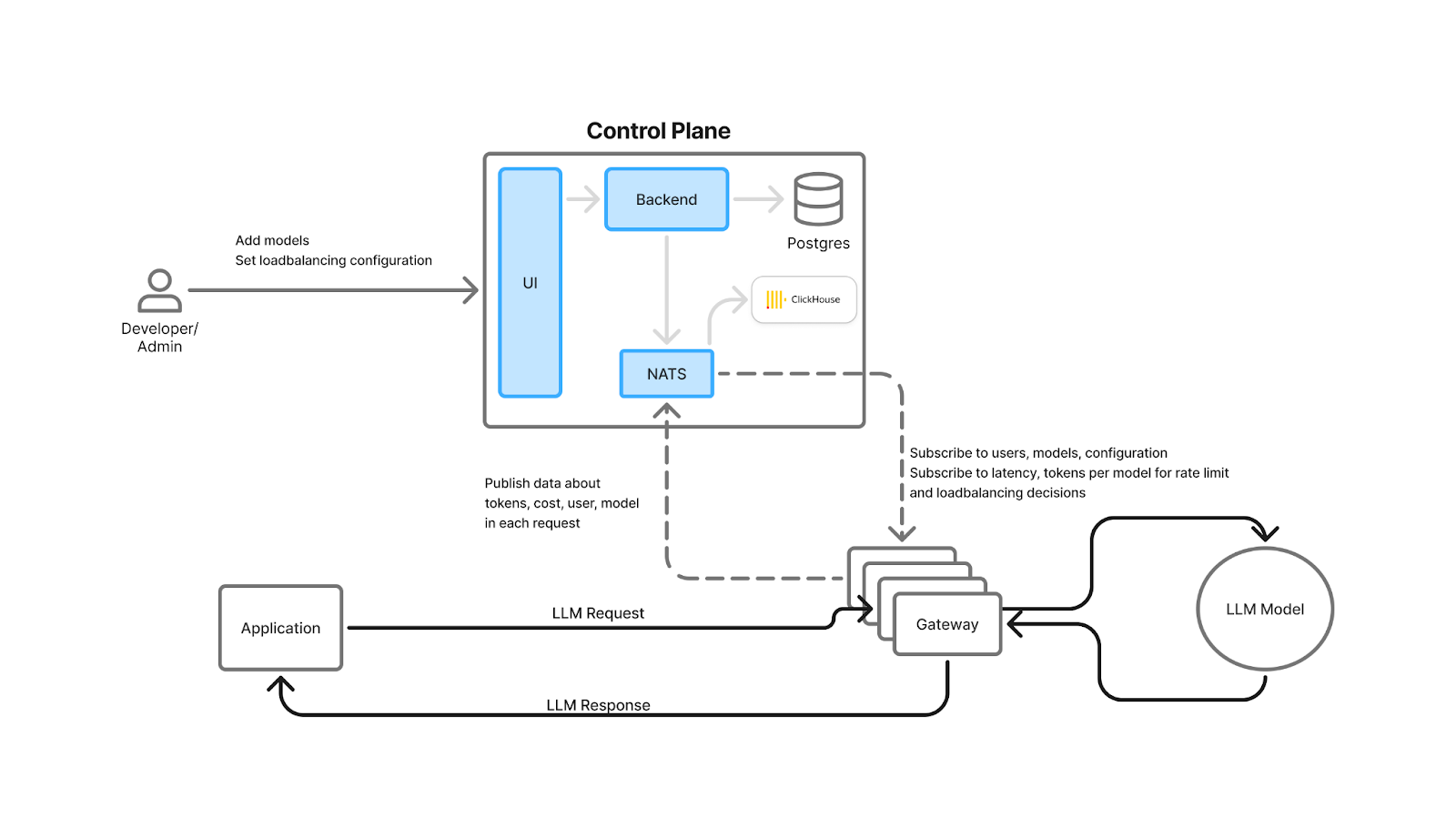

Gateways are becoming the operational control plane of GenAI systems. They unify traffic for third‑party APIs (OpenAI, Anthropic, Mistral, Bedrock) and self‑hosted models, enforce policy, and expose a single pane of glass for latency, errors, token consumption, and spend. That same choke point is the ideal place to capture traces, compute model‑level and user‑level analytics, and trigger guardrails and alerts—without adding latency to the request path.

Real organizations have learned this the hard way. Consider a support copilot serving thousands of agents. One afternoon, an innocuous prompt update increases output length by ~40%. Agent satisfaction falls as responses lag; finance notices the bill. With gateway observability, you would see p95 latency and output tokens climbing for the affected route, correlate it to the deployment or prompt version, and roll back—ideally with an automated alert set to catch it next time.

This post recaps what an AI Gateway is, why observability is critical, and the concrete metrics, dashboards, and workflows teams should put in place. We’ll also show how TrueFoundry’s AI Gateway ships the observability stack out of the box: unified analytics (latency, TTFT/ITL, errors), granular cost tracking, customer/user‑level breakdowns, healthy/failed routing visibility, and scalable, low‑overhead collection built into the architecture.

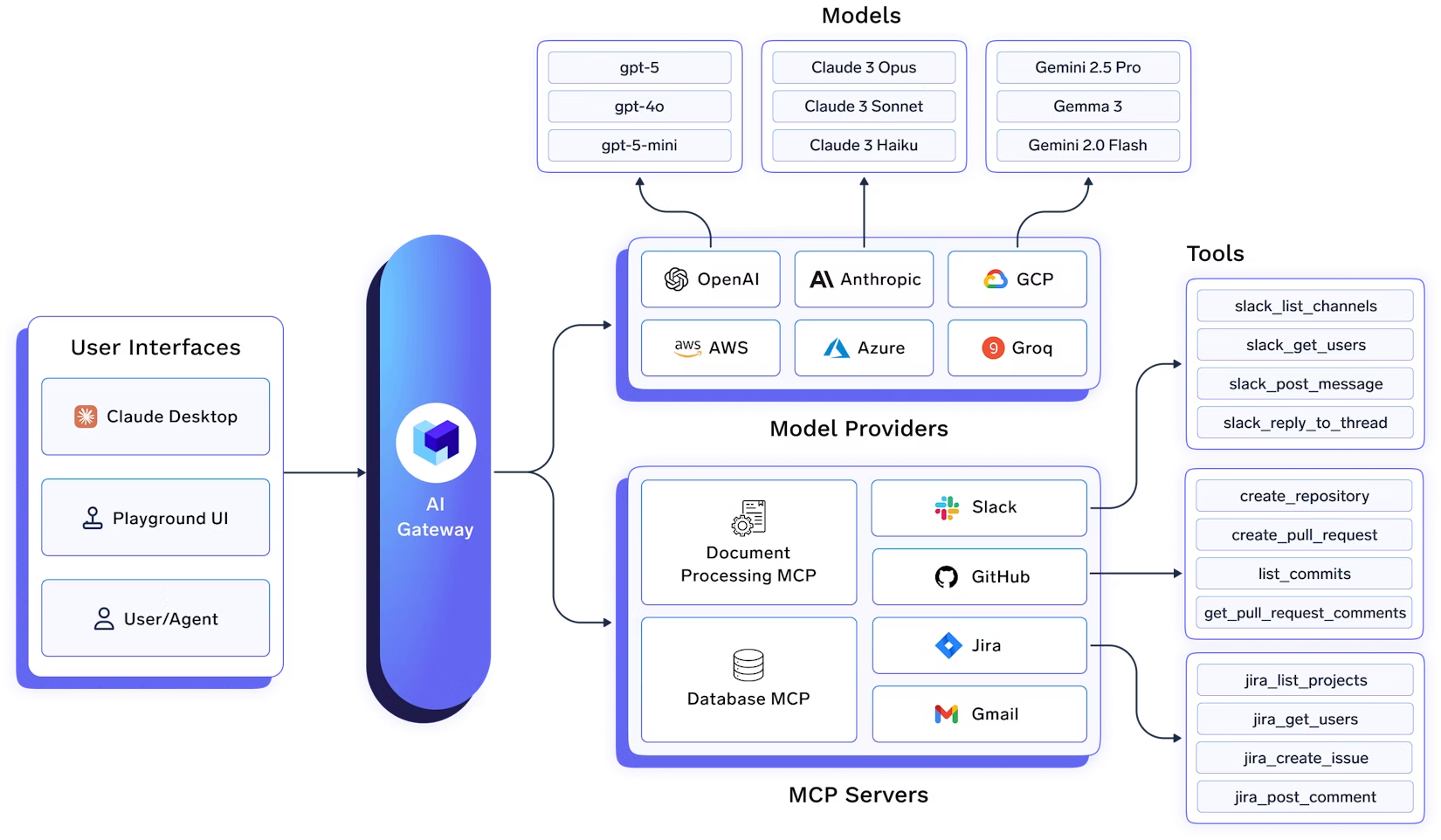

What Is AI Gateway?

An AI Gateway is a thin, high‑performance layer that proxies application requests to one or more LLM providers or self‑hosted models. It unifies APIs, centralizes authentication and RBAC (Role‑Based Access Control), applies rate limits and guardrails, performs load balancing and failover, and captures observability and cost data for every request. Think of it as the “ingress + policy + telemetry” layer for GenAI.

Operationally, modern gateways support weighted and latency‑based routing, health checks, and automatic fallbacks when a model or region is unhealthy—so requests continue even through provider hiccups. Because every request passes through the gateway, teams can compare providers by latency/cost, run A/B tests, and gradually shift traffic as conditions change.

TrueFoundry’s architecture is designed so these controls and metrics add minimal overhead: checks for auth, rate limiting, and load balancing are done in‑memory; logs/metrics are written asynchronously to a queue; and the request path avoids external calls (unless you opt into caching). The gateway is horizontally scalable and CPU‑bound, keeping end‑to‑end latency overhead to single‑digit milliseconds.

Why Observability Is Critical in AI Gateways

Performance & User Experience

LLM latency has three distinct components: time to first token (TTFT), inter‑token latency (ITL) for streaming, and total request latency. Each affects perceived UX differently. Gateways that track all three help you diagnose whether slowdowns come from provider queues, model compute, network, or prompt length, and choose the best routing strategy.
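All three signals can be derived from timestamps alone. Below is a minimal Python sketch, assuming you record when the request was sent and when each streamed chunk arrived. The names are hypothetical, and defining ITL as the mean gap between consecutive chunks is one common convention, not a specific gateway's formula.

```python
from dataclasses import dataclass


@dataclass
class StreamTimings:
    ttft_ms: float   # request sent -> first chunk received
    itl_ms: float    # mean gap between consecutive chunks
    total_ms: float  # request sent -> last chunk received


def stream_timings(request_start: float, chunk_times: list[float]) -> StreamTimings:
    """Derive TTFT, ITL, and total latency from wall-clock times in seconds.

    chunk_times holds the arrival time of each streamed chunk, in order.
    """
    if not chunk_times:
        raise ValueError("stream produced no chunks")
    ttft = chunk_times[0] - request_start
    total = chunk_times[-1] - request_start
    gaps = [later - earlier for earlier, later in zip(chunk_times, chunk_times[1:])]
    itl = sum(gaps) / len(gaps) if gaps else 0.0  # a single chunk has no gaps
    return StreamTimings(ttft * 1000, itl * 1000, total * 1000)


# A stream that waits 0.8 s for its first chunk, then emits one every 50 ms.
start = 100.0
arrivals = [start + 0.8 + 0.05 * i for i in range(5)]
print(stream_timings(start, arrivals))
```

A high TTFT with a normal ITL points at queueing before generation starts; a normal TTFT with a high ITL points at slow decoding, the "sticky stream" case in the examples later on.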

Cost Governance

Tokens are the new CPU cycles. A single prompt can fan out to multiple tools or retrieval steps, and costs accumulate across providers. Observability must attribute spend by model, provider, environment, application, tenant, and user and stay current with providers’ public pricing to avoid manual spreadsheets.

Reliability & Resilience

Production apps need guardrails against provider outages, throttling, and model regressions. Observability tied to health checks, 4xx/5xx code breakdowns, retry/fallback rates, and rate‑limit utilization lets you enforce SLOs and automatically fail over when performance deteriorates.

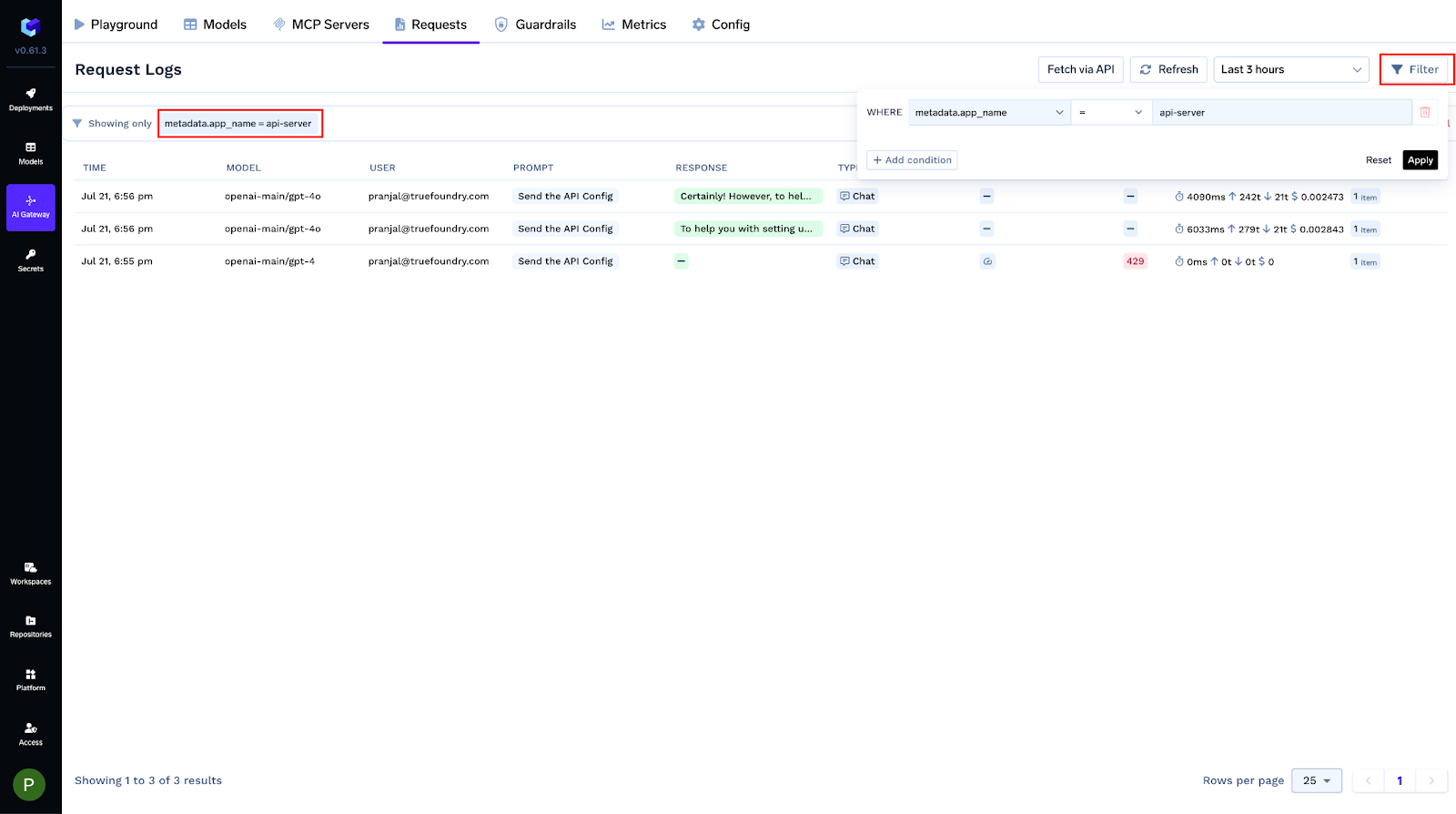

Compliance & Auditability

Enterprises need full request/response trails with access controls and PII/content moderation policies. A gateway centralizes this enforcement and logging so teams can prove who called which model, with what data, and what it returned—without sharing provider API keys broadly.

Operational Agility

Model quality, pricing, and quotas change frequently. Organizations that instrument gateways can compare providers head‑to‑head and shift traffic based on fresh latency/cost/error data—maintaining performance and margins as the market evolves.

External guidance echoes these needs: industry leaders emphasize AI observability for rapid response to drift, outages, and cost spikes; OpenAI and Azure recommend structured logging and exponential backoff for rate limits, which a gateway can standardize across apps.
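A gateway can apply this pattern once instead of every app reimplementing it. The sketch below shows the generic "full jitter" variant of exponential backoff in Python. It is not TrueFoundry's or any provider SDK's retry code; `RateLimited` is a hypothetical placeholder for your client's 429 error, and the delay parameters are illustrative.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical stand-in for the error your client raises on HTTP 429."""


def call_with_backoff(
    fn: Callable[[], T],
    max_retries: int = 5,
    base_s: float = 0.5,
    cap_s: float = 20.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on RateLimited with capped exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimited:
            if attempt == max_retries:
                raise  # retries exhausted: surface the 429 to the caller
            # The ceiling doubles per attempt (0.5 s, 1 s, 2 s, ...) up to cap_s;
            # sleeping a random fraction of it keeps many clients from retrying in lockstep.
            sleep(random.uniform(0, min(cap_s, base_s * 2 ** attempt)))
    raise AssertionError("unreachable")


# Usage: a call that is throttled twice, then succeeds.
calls = {"n": 0}


def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise RateLimited()
    return "ok"


waits: list[float] = []
print(call_with_backoff(flaky, sleep=waits.append), len(waits))  # ok 2
```

Injecting `sleep` keeps the example deterministic to test; in production you would leave the default.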

Key Observability Features in AI Gateway

Below are capabilities you should expect from a production‑grade AI Gateway—and that TrueFoundry provides natively.

- End‑to‑end request tracing

Capture inputs, outputs, metadata (model, provider, region), token counts, costs, latencies, errors, and streaming timings for every call, with correlation IDs. This turns black‑box interactions into traceable workflows.

- Latency analytics: total, TTFT, and ITL

Track p50/p95/p99 across routes and providers. TTFT pinpoints backend wait time; ITL highlights throughput for streaming UIs.

- Error code breakdowns & provider health

See 4xx vs 5xx, rate‑limit hits, timeouts, and provider‑specific error classes. Feed these into routing/fallback decisions.

- Granular cost tracking

Auto‑populate per‑token pricing from official provider rates; show cost per request, per 1K tokens, per model/provider, and per user/tenant/project.

- Rate‑limit telemetry

Enforce and observe token‑aware quotas (not just RPS), with dashboards for utilization, throttles, and drops by route or user.

- Routing visibility

Show which backend each request hit, why (weight vs latency), and whether fallback/retry occurred, plus comparative latency/cost charts to guide traffic shifts.

- User / Customer / Environment breakdowns

Slice metrics by API key, organization, workspace, or environment (dev/stage/prod) to identify heavy users, regressions, or runaway experiments.

- Alerting & SLOs

Configure alerts on latency, error rate, cost per request, or rate‑limit saturation; couple with automated fallbacks and budgets.

- Security & audit trails

Centralize API keys, apply RBAC, and retain immutable logs for compliance.
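As a starting point for alerting, a handful of SLO‑aligned thresholds can be evaluated against a metrics snapshot. The Python sketch below is hypothetical: the metric keys and threshold values are illustrative, and in practice such rules usually live in your alerting system (for example Grafana or Prometheus alert rules) rather than in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    metric: str       # key in the metrics snapshot, e.g. "p95_latency_ms"
    threshold: float  # fire when the observed value exceeds this


def firing_alerts(snapshot: dict[str, float], rules: list[AlertRule]) -> list[str]:
    """Return a message for every rule whose metric is above its threshold."""
    fired = []
    for rule in rules:
        value = snapshot.get(rule.metric)
        if value is not None and value > rule.threshold:
            fired.append(f"{rule.metric}={value:g} > {rule.threshold:g}")
    return fired


# Hypothetical thresholds for four SLO-aligned signals.
rules = [
    AlertRule("p95_latency_ms", 4000),
    AlertRule("error_rate", 0.02),
    AlertRule("cost_per_1k_tokens_usd", 0.01),
    AlertRule("rate_limit_utilization", 0.9),
]
snapshot = {"p95_latency_ms": 5200, "error_rate": 0.004,
            "cost_per_1k_tokens_usd": 0.012, "rate_limit_utilization": 0.55}
print(firing_alerts(snapshot, rules))
```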

Observability in AI Gateway with TrueFoundry

Here is how TrueFoundry bakes observability into the core request path and ships a full analytics stack out of the box—without slowing down production traffic.

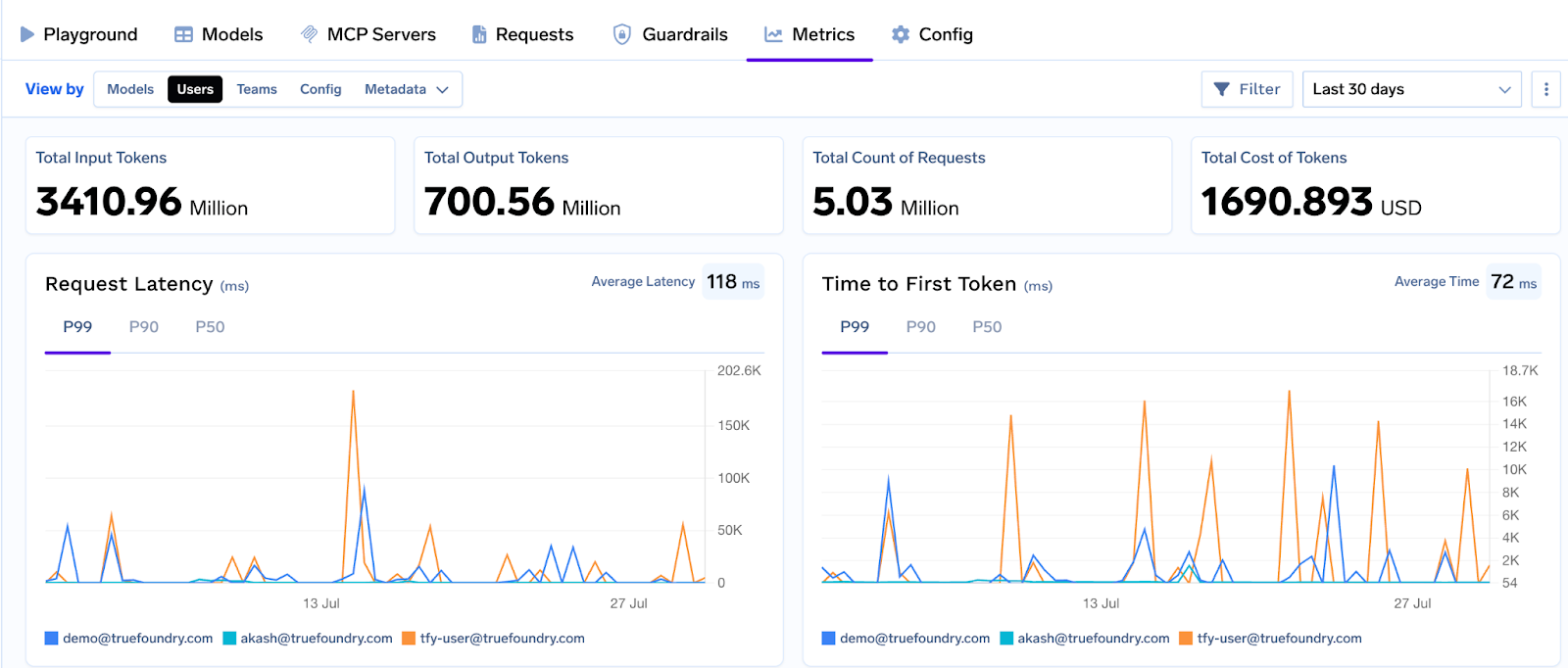

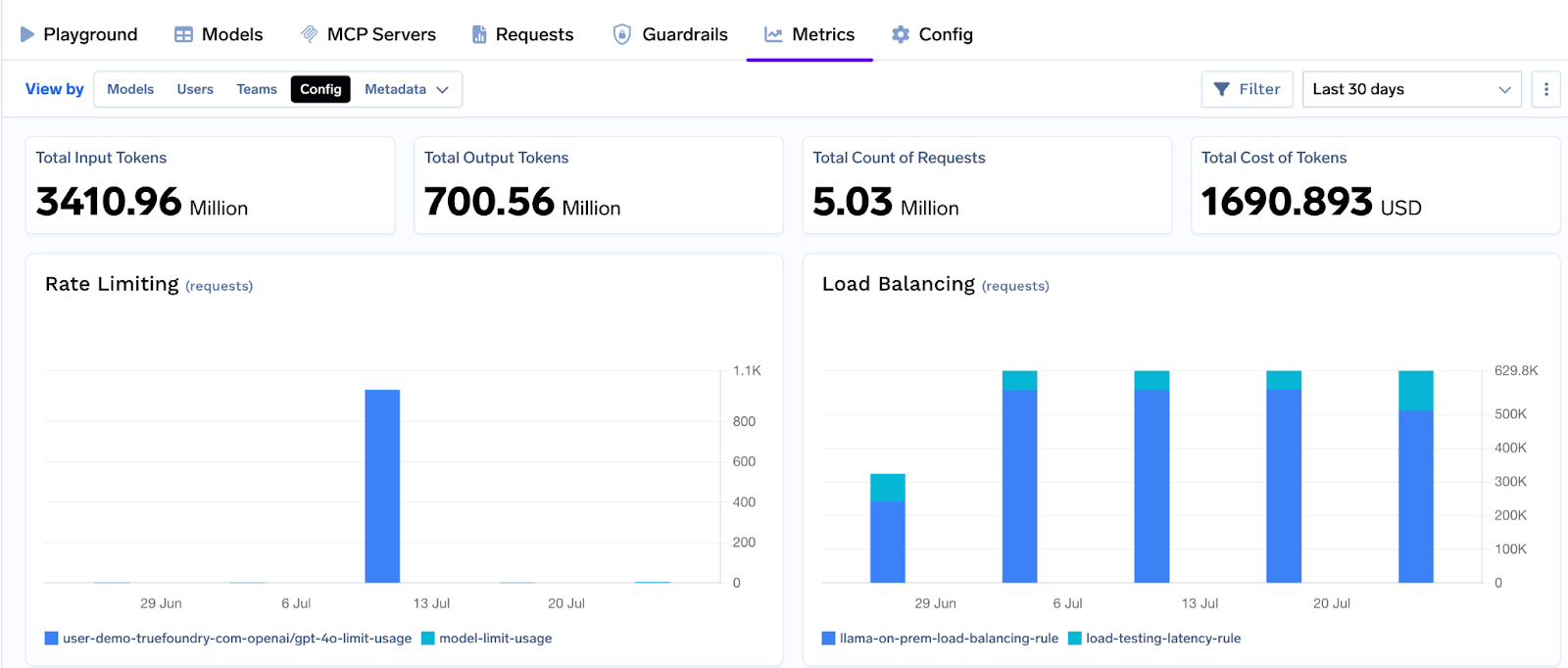

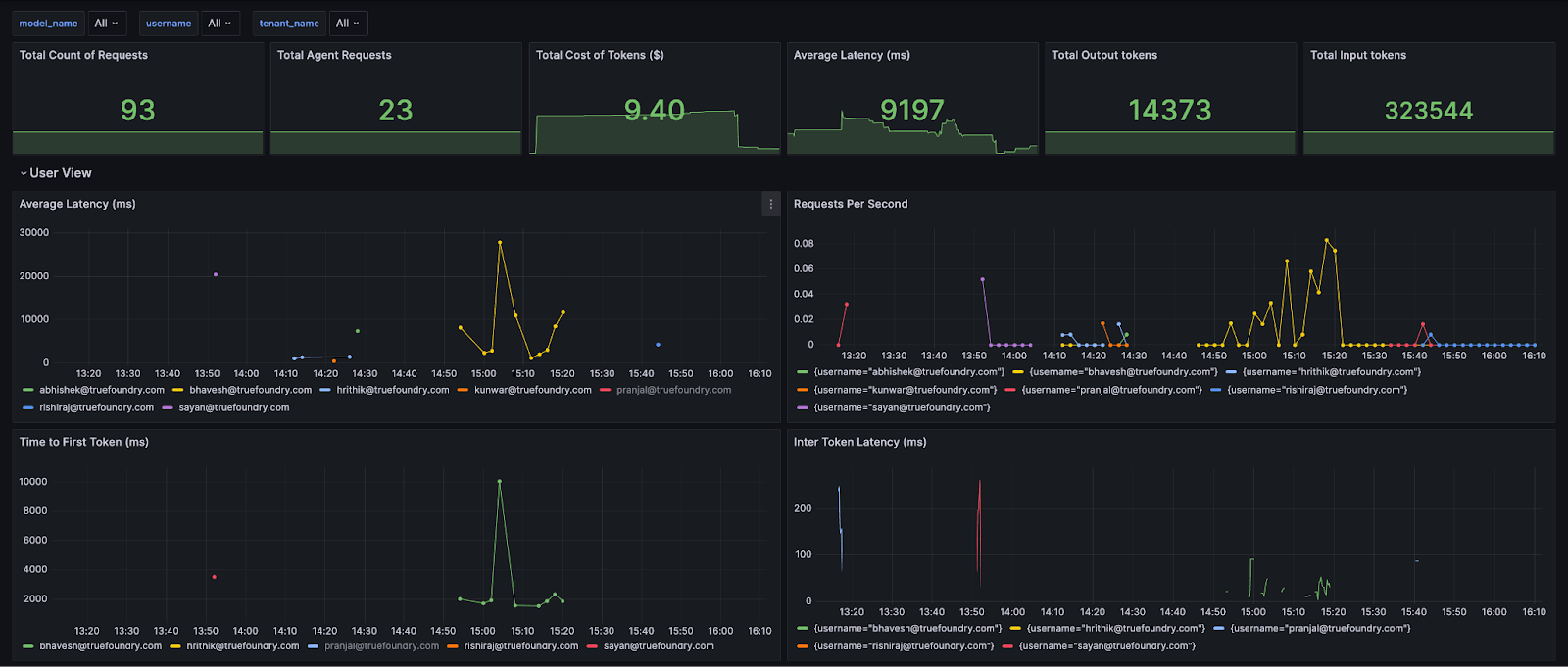

The Analytics dashboard exposes: Request Latency (p50/p95/p99), Time to First Token (TTFT/TTFS), Inter‑Token Latency (ITL), cost per model/provider, input/output tokens, error codes, and policy activity (rate‑limit, load‑balancing, fallbacks, guardrails, budgets). Views slice by model, user, team, ruleId, and custom metadata; you can also download raw CSVs.

Accurate, up‑to‑date cost accounting

Enable Public Cost to auto‑populate per‑token pricing from providers’ published rates (OpenAI, Anthropic, Bedrock, etc.). For negotiated or fine‑tuned models, set Private Cost with custom input/output token prices. Both flow into per‑request and aggregate cost analytics.
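Per‑request cost is token counts multiplied by per‑token prices. A minimal Python sketch, assuming prices quoted per million tokens (a common convention) and using hypothetical model names and prices, shows a private price taking precedence over the public one and how cost per 1K tokens is derived:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    input_per_1m_usd: float   # price per 1M input tokens
    output_per_1m_usd: float  # price per 1M output tokens


# Hypothetical price tables; real public rates come from the providers' pricing pages.
PUBLIC_PRICES = {"provider-a/model-x": TokenPrice(2.50, 10.00)}
PRIVATE_PRICES = {"provider-a/model-x": TokenPrice(2.00, 8.00)}  # e.g. a negotiated rate


def price_for(model: str) -> TokenPrice:
    # In this sketch a private (custom) price overrides the public one when present.
    if model in PRIVATE_PRICES:
        return PRIVATE_PRICES[model]
    return PUBLIC_PRICES[model]


def request_cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    p = price_for(model)
    return (input_tokens * p.input_per_1m_usd + output_tokens * p.output_per_1m_usd) / 1_000_000


def cost_per_1k_tokens_usd(cost_usd: float, input_tokens: int, output_tokens: int) -> float:
    return cost_usd / (input_tokens + output_tokens) * 1000


cost = request_cost_usd("provider-a/model-x", input_tokens=1200, output_tokens=400)
print(f"request: ${cost:.4f}, per 1K tokens: ${cost_per_1k_tokens_usd(cost, 1200, 400):.4f}")
```

Because output tokens are often priced several times higher than input tokens, a verbosity change alone can move spend noticeably.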

Customer, user, and project‑level insights

Attach business context (customer, feature, environment) and break down tokens, latency, and spend by any dimension—ideal for chargebacks, noisy‑neighbor detection, and prioritizing optimizations.
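Under the hood, such a breakdown is a group‑by over per‑request records. The sketch below assumes a hypothetical log shape (a metadata dict plus token and cost fields), not TrueFoundry's export format:

```python
from collections import defaultdict


def breakdown(records: list[dict], dimension: str) -> dict[str, dict]:
    """Aggregate requests, tokens, and spend by one metadata key (e.g. customer_id)."""
    totals: dict[str, dict] = defaultdict(lambda: {"requests": 0, "tokens": 0, "cost_usd": 0.0})
    for r in records:
        group = r["metadata"].get(dimension, "(unset)")  # untagged traffic stays visible
        t = totals[group]
        t["requests"] += 1
        t["tokens"] += r["input_tokens"] + r["output_tokens"]
        t["cost_usd"] += r["cost_usd"]
    return dict(totals)


logs = [
    {"metadata": {"customer_id": "acme-42"}, "input_tokens": 900, "output_tokens": 300, "cost_usd": 0.5},
    {"metadata": {"customer_id": "acme-42"}, "input_tokens": 100, "output_tokens": 50, "cost_usd": 0.25},
    {"metadata": {}, "input_tokens": 10, "output_tokens": 5, "cost_usd": 0.125},
]
print(breakdown(logs, "customer_id"))
```

Keeping an explicit "(unset)" bucket makes gaps in tagging visible instead of silently dropping that spend.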

Token‑aware rate limiting with observability

Define quotas by tokens or requests per minute/hour/day, scoped to users, models, or segments identified via metadata. Dashboards show utilization and throttles so you can right‑size limits and protect shared capacity.
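To illustrate why token quotas differ from request counts, here is a minimal fixed‑window limiter in Python that charges each request its token count against a per‑key budget and records throttles for telemetry. It is a hypothetical sketch, not the gateway's implementation: it assumes the token count is known up front (a real gateway must estimate output tokens or reconcile them after the response), and production limiters often use sliding windows or token buckets instead.

```python
import time
from collections import defaultdict
from typing import Callable


class TokenRateLimiter:
    """Fixed-window limiter that budgets LLM tokens (not requests) per key."""

    def __init__(self, tokens_per_window: int, window_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = tokens_per_window
        self.window_s = window_s
        self.clock = clock
        self.usage: dict[str, tuple[int, int]] = {}  # key -> (window index, tokens used)
        self.throttled: defaultdict[str, int] = defaultdict(int)  # key -> rejected count

    def _used(self, key: str) -> tuple[int, int]:
        window = int(self.clock() // self.window_s)
        start, used = self.usage.get(key, (window, 0))
        return window, (used if start == window else 0)  # new window: counter resets

    def try_consume(self, key: str, tokens: int) -> bool:
        window, used = self._used(key)
        if used + tokens > self.limit:
            self.throttled[key] += 1
            return False
        self.usage[key] = (window, used + tokens)
        return True

    def utilization(self, key: str) -> float:
        return self._used(key)[1] / self.limit


now = [0.0]  # fake clock so the example is deterministic
limiter = TokenRateLimiter(tokens_per_window=1000, clock=lambda: now[0])
print(limiter.try_consume("acme-42", 600), limiter.try_consume("acme-42", 500))  # True False
print(limiter.utilization("acme-42"), dict(limiter.throttled))  # 0.6 {'acme-42': 1}
```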

Load balancing, health, and failover visibility

Use weight‑based splits for experiments or latency‑based routing for steady‑state. Health checks mark backends unhealthy on error/latency thresholds and exclude them automatically. Fallback chains retry on failure, with spans and metrics that show which path was taken and its latency/cost impact.
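A minimal Python sketch of weight‑based selection with health exclusion and a fallback chain follows. The `Backend` type, backend names, and `route` function are hypothetical; the point is the control flow and the recorded path (the kind of information the gateway's spans and metrics expose), not its actual routing code. Latency‑based routing would replace the weighted pick with a choice driven by recent latency measurements.

```python
import random
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Backend:
    name: str
    weight: float = 0.0
    healthy: bool = True  # flipped by a separate health checker in this sketch


def pick_weighted(pool: list[Backend], rng: random.Random) -> Optional[Backend]:
    """Weighted random choice among healthy backends; unhealthy ones are skipped."""
    candidates = [b for b in pool if b.healthy and b.weight > 0]
    if not candidates:
        return None
    return rng.choices(candidates, weights=[b.weight for b in candidates], k=1)[0]


def route(pool: list[Backend], fallbacks: list[Backend],
          send: Callable[[Backend], str], rng: random.Random) -> tuple[str, list[str]]:
    """One weighted pick from the primary pool, then each healthy fallback in order.

    Returns (response, path) so the caller can log which backends were tried.
    """
    first = pick_weighted(pool, rng)
    order = ([first] if first is not None else []) + [b for b in fallbacks if b.healthy]
    path: list[str] = []
    for backend in order:
        path.append(backend.name)
        try:
            return send(backend), path
        except Exception:  # any failure moves on to the next backend in the chain
            continue
    raise RuntimeError(f"all backends failed: {path}")


primary_a = Backend("primary-a", weight=80)
primary_b = Backend("primary-b", weight=20, healthy=False)  # excluded by health check
fallback = Backend("fallback-c")


def send(backend: Backend) -> str:
    if backend.name == "primary-a":
        raise TimeoutError("simulated upstream timeout")
    return f"answer from {backend.name}"


print(route([primary_a, primary_b], [fallback], send, random.Random(0)))
# ('answer from fallback-c', ['primary-a', 'fallback-c'])
```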

Security, RBAC, and audit trails

Centralize provider keys, issue scoped access tokens, enforce RBAC, and retain immutable request/response logs for compliance, across both LLMs and MCP servers.

Logging Metadata Keys

You can tag every request with structured metadata via the X-TFY-METADATA header. Logged keys become queryable filters, Grafana labels, and conditions in gateway configs (rate limits, load balancing, fallbacks, guardrails). Values are strings (≤128 chars).

```
X-TFY-METADATA: {"tfy_log_request":"true","environment":"staging","feature":"countdown-bot","customer_id":"acme-42"}
```

Use this to isolate logs, group cost/latency by tenant or feature, and roll out policy changes safely to a subset of traffic.
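Client‑side, the header is just compact JSON. A small Python helper (hypothetical, not part of any TrueFoundry SDK) can enforce the string‑value and length rules before the request leaves your app; merge the returned dict into the headers of whatever HTTP client you already use:

```python
import json

MAX_VALUE_CHARS = 128  # value length limit stated above


def tfy_metadata_header(metadata: dict[str, str]) -> dict[str, str]:
    """Build the X-TFY-METADATA header, checking values are strings of <= 128 chars."""
    for key, value in metadata.items():
        if not isinstance(value, str):
            raise TypeError(f"value for {key!r} must be a string, got {type(value).__name__}")
        if len(value) > MAX_VALUE_CHARS:
            raise ValueError(f"value for {key!r} is longer than {MAX_VALUE_CHARS} chars")
    # Compact separators reproduce the header format shown above.
    return {"X-TFY-METADATA": json.dumps(metadata, separators=(",", ":"))}


headers = tfy_metadata_header(
    {"tfy_log_request": "true", "environment": "staging",
     "feature": "countdown-bot", "customer_id": "acme-42"}
)
print(headers["X-TFY-METADATA"])
```

Validating on the client means a bad tag fails fast in your code rather than surfacing later as missing labels in dashboards.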

Example — rate‑limit by metadata

```yaml
name: ratelimiting-config
type: gateway-rate-limiting-config
rules:
  - id: openai-gpt4-dev-env
    when:
      models: ["openai-main/gpt4"]
      metadata:
        env: dev
    limit_to: 1000
    unit: requests_per_day
```

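The same when/metadata matching pattern applies to load‑balancing and fallback rules. Note that this rule matches on the metadata key env, so it only applies to requests whose X-TFY-METADATA header includes "env":"dev"; the header example above uses a different key (environment).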
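To reason about which requests a rule like this catches, the matching can be sketched in Python. The semantics here (every listed condition must hold, and the first matching rule wins) are an assumption for illustration; check the TrueFoundry docs for the exact evaluation order and matching rules.

```python
from typing import Optional


def rule_matches(when: dict, model: str, metadata: dict[str, str]) -> bool:
    """Hypothetical matcher: the model must be listed (if models is given) and every
    metadata key/value in the rule must appear in the request's metadata."""
    models = when.get("models")
    if models and model not in models:
        return False
    return all(metadata.get(k) == v for k, v in when.get("metadata", {}).items())


def first_matching_rule(rules: list[dict], model: str,
                        metadata: dict[str, str]) -> Optional[dict]:
    # Assumes rules are evaluated in order and the first match applies.
    for rule in rules:
        if rule_matches(rule.get("when", {}), model, metadata):
            return rule
    return None


# The YAML rule above, as a Python dict.
rules = [{
    "id": "openai-gpt4-dev-env",
    "when": {"models": ["openai-main/gpt4"], "metadata": {"env": "dev"}},
    "limit_to": 1000,
    "unit": "requests_per_day",
}]
print(first_matching_rule(rules, "openai-main/gpt4", {"env": "dev", "customer_id": "acme-42"})["id"])
print(first_matching_rule(rules, "openai-main/gpt4", {"environment": "staging"}))  # None
```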

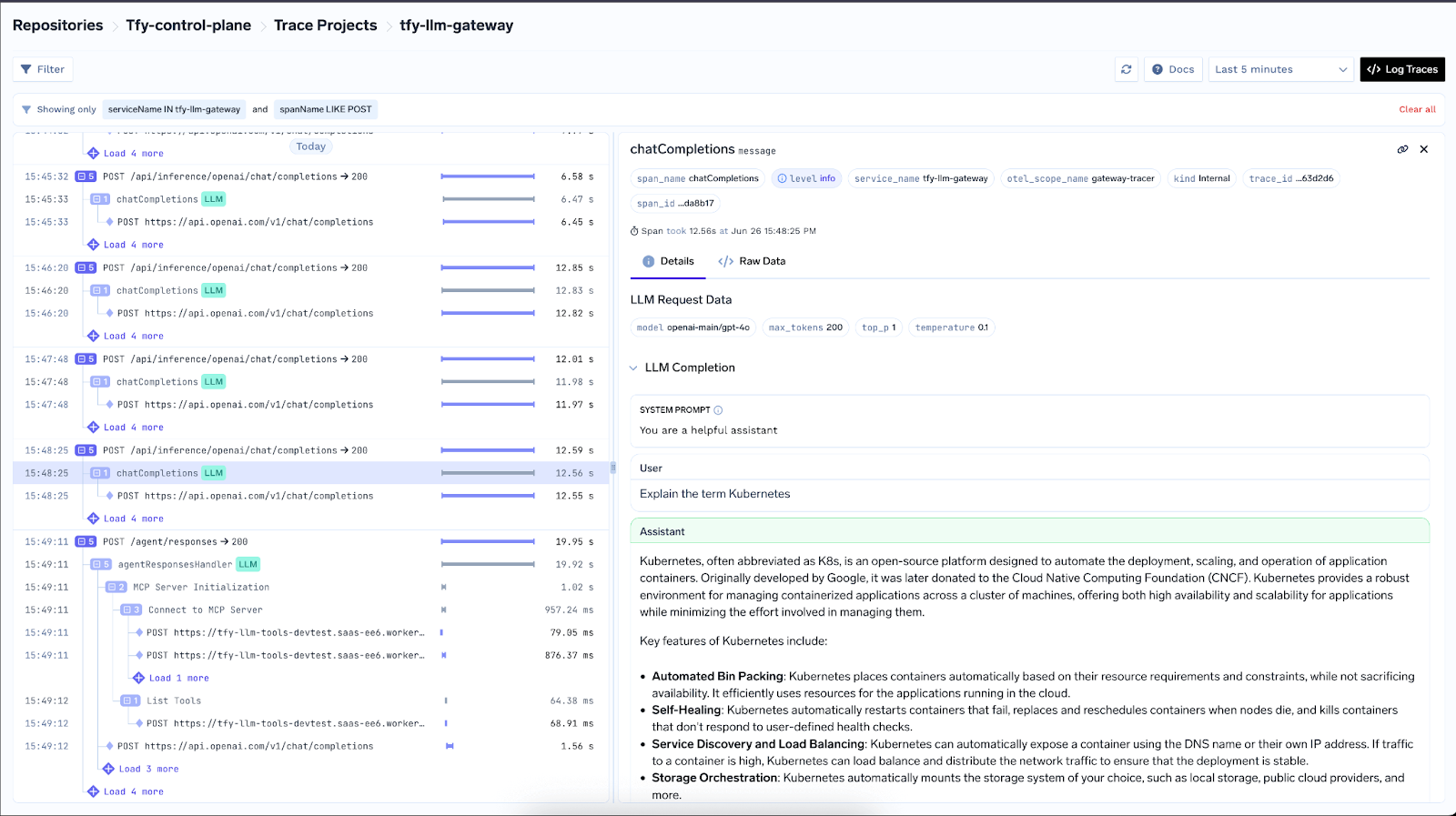

OpenTelemetry Tracing

The gateway is OpenTelemetry‑compliant. Turn on OTLP export and send traces to any backend (Tempo, Jaeger, Datadog/New Relic via Collector, TrueFoundry Tracing). Spans include genai attributes—model, tokens, TTFT, ITL, parameters, tool calls, errors—and detailed spans for rate limiting, load balancing, fallbacks, and MCP server/tool calls, letting you correlate provider behavior with app‑level spans.

Enable tracing

```
ENABLE_OTEL_TRACING="true"
OTEL_SERVICE_NAME=<your_service>
OTEL_EXPORTER_OTLP_TRACES_ENDPOINT="https://<otel-collector>/v1/traces"
OTEL_EXPORTER_OTLP_TRACES_HEADERS="Authorization=Bearer <token>"
```

Prometheus & Grafana Integration

Expose /metrics for Prometheus or push OTEL metrics by setting:

```
ENABLE_OTEL_METRICS="true"
OTEL_EXPORTER_OTLP_METRICS_ENDPOINT="https://<otlp-endpoint>/v1/metrics"
OTEL_EXPORTER_OTLP_METRICS_HEADERS="Authorization=Bearer <token>"
LLM_GATEWAY_METADATA_LOGGING_KEYS='["customer_id","request_type"]'
```

Metadata keys listed in LLM_GATEWAY_METADATA_LOGGING_KEYS become Prometheus labels llm_gateway_metadata_<key>, enabling per‑customer/per‑feature cost and latency charts. (Truefoundry Docs)

Key metric families (subset)

Tokens & cost: llm_gateway_input_tokens, llm_gateway_output_tokens, llm_gateway_request_cost.

Latency: llm_gateway_request_processing_ms, llm_gateway_first_token_latency_ms, llm_gateway_inter_token_latency_ms.

Errors: llm_gateway_request_model_inference_failure, llm_gateway_config_parsing_failures.

Policy activity: llm_gateway_rate_limit_requests_total, llm_gateway_load_balanced_requests_total, llm_gateway_fallback_requests_total, llm_gateway_budget_requests_total, llm_gateway_guardrails_requests_total.

Agent/MCP: llm_gateway_agent_request_duration_ms, llm_gateway_agent_llm_latency_ms, llm_gateway_agent_tool_latency_ms, llm_gateway_agent_tool_calls_total, llm_gateway_agent_mcp_connect_latency_ms, llm_gateway_agent_request_iteration_limit_reached_total.

A pre‑built Grafana dashboard JSON is published by TrueFoundry, organized into Model, User, Config, and MCP Invocation views. Add variables for your custom metadata, e.g.:

```
label_values(llm_gateway_input_tokens, llm_gateway_metadata_customer_id)
```
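Percentile panels are usually computed from histograms. If a latency metric is exported as a Prometheus histogram (an assumption here; check each metric's type in the docs), a Grafana panel would typically apply PromQL's histogram_quantile function to its bucket series. The Python sketch below mirrors that function's linear interpolation, which shows why p95 values are estimates that are only as precise as the bucket boundaries:

```python
import math


def histogram_quantile(q: float, buckets: list[tuple[float, float]]) -> float:
    """Estimate the q-quantile from cumulative (upper_bound, count) buckets.

    Mirrors PromQL's histogram_quantile interpolation: buckets are sorted by
    upper bound, cumulative, and end with +Inf; the lowest bucket starts at 0.
    """
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    prev_bound, prev_count = 0.0, 0.0
    for bound, count in buckets:
        if count >= rank:
            if math.isinf(bound):
                return prev_bound  # cannot interpolate into +Inf; use last finite bound
            in_bucket = count - prev_count
            if in_bucket == 0:
                return bound
            return prev_bound + (bound - prev_bound) * (rank - prev_count) / in_bucket
        prev_bound, prev_count = bound, count
    return prev_bound


# Cumulative request counts per latency bucket (ms): 90 of 100 requests took <= 1000 ms.
latency_buckets = [(100, 10), (500, 60), (1000, 90), (2000, 100), (math.inf, 100)]
print(histogram_quantile(0.50, latency_buckets))  # 420.0
print(histogram_quantile(0.95, latency_buckets))  # 1500.0
```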

MCP‑Aware Observability & Governance

Anthropic’s Model Context Protocol (MCP)—announced November 25, 2024—standardizes how assistants connect to tools, prompts, and resources. The ecosystem has accelerated through 2025 with many prebuilt servers (GitHub, Slack, Google Maps, Puppeteer, etc.).

TrueFoundry integrates MCP natively:

- MCP Registry: Central catalog of MCP servers (hosted or external), with discovery and metadata.

- Centralized auth: User‑scoped OAuth2, PAT (Personal Access Token) for users, and VAT (Virtual Account Token) for apps with least‑privilege access.

- RBAC & approvals: Restrict tools/servers by teams; support review/approval for sensitive actions.

- Agent playground & built‑in MCP client: Orchestrates the agent loop, streams progress (LLM messages, tool calls, tool results) to the UI.

- Observability: OTEL spans plus Prometheus agent/MCP metrics (tool counts, connect latency, iteration limits, per‑tool latency) and Grafana panels.

This makes the gateway the operational control plane for agentic workloads—unifying policy, auth, routing, and end‑to‑end visibility across both LLM calls and tool executions.
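The agent/MCP metrics listed earlier map onto a simple loop: iterate until the model answers, time every tool call, and record when the loop hits its iteration cap. The Python sketch below is hypothetical and is not TrueFoundry's MCP client; `llm_step` and the tool functions stand in for real model and MCP server calls.

```python
import time
from collections import defaultdict
from typing import Callable


def run_agent(llm_step: Callable, tools: dict[str, Callable], max_iterations: int = 5,
              clock: Callable[[], float] = time.perf_counter):
    """Minimal agent loop that records per-tool call counts and latency.

    llm_step(history) returns ("final", text) or ("tool", tool_name, kwargs).
    """
    stats = {"iterations": 0, "tool_calls": defaultdict(int),
             "tool_latency_ms": defaultdict(list), "iteration_limit_reached": False}
    history: list = []
    for _ in range(max_iterations):
        stats["iterations"] += 1
        action = llm_step(history)
        if action[0] == "final":
            return action[1], stats
        _, name, kwargs = action
        start = clock()
        result = tools[name](**kwargs)
        stats["tool_latency_ms"][name].append((clock() - start) * 1000)
        stats["tool_calls"][name] += 1
        history.append((name, kwargs, result))
    stats["iteration_limit_reached"] = True  # loop cut off without a final answer
    return None, stats


def scripted_llm(history):
    # Hypothetical model: call a tool once, then answer from its result.
    if not history:
        return ("tool", "lookup_order", {"order_id": "A-17"})
    return ("final", f"Order status: {history[-1][2]}")


answer, stats = run_agent(scripted_llm, {"lookup_order": lambda order_id: "shipped"})
print(answer, stats["iterations"], dict(stats["tool_calls"]))
```

A rising count of loops that hit the iteration cap is often the first sign of a tool returning unhelpful results or a prompt that never converges.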

Observability Metrics to Track

Below is a practical checklist. Each metric includes what it tells you, how to use it, and how TrueFoundry surfaces it.

1. Request Latency (p50/p95/p99)

- What: Total time from request received to final token (non‑streaming) or stream completion.

- Why: p95/p99 drive perceived snappiness and SLOs. Spikes often correlate with provider congestion, larger prompts/outputs, or fallbacks.

- TrueFoundry: Shown per model/provider with trends; combine with routing/fallback logs for root cause.

2. Time to First Token (TTFT)

- What: Delay before the first streamed token.

- Why: Dominant factor in UX for chat UIs; high TTFT suggests provider queueing or cold starts.

- TrueFoundry: First‑class metric in Analytics. Set alerts when TTFT exceeds thresholds for key routes.

3. Inter‑Token Latency (ITL)

- What: Average time between streamed tokens.

- Why: Indicates throughput; degraded ITL makes the stream feel “sticky” even if TTFT is good.

- TrueFoundry: Tracked for streaming responses to diagnose throughput regressions.

4. Success Rate & Error Codes

- What: 2xx vs 4xx/5xx; rate‑limit hits; timeouts.

- Why: Early signal of provider issues, bad prompts, or quota misconfiguration.

- TrueFoundry: Error code breakdowns and counts; pair with rate‑limit and routing metrics.

5. Token Usage (Input / Output / Total)

- What: Tokens per request and totals over time.

- Why: Detect runaway prompts or verbose outputs; normalize latency by tokens to compare models.

- TrueFoundry: Visualized by model/provider/user; correlate with latency and cost.

6. Cost per Request & Cost per 1K Tokens

- What: Dollar spend normalized by request and by token.

- Why: Compare providers fairly; enforce budgets and ROI.

- TrueFoundry: Auto‑priced using official provider rates; no manual upkeep.

7. Rate‑Limit Utilization & Throttles

- What: How close clients are to configured token/RPM ceilings; counts of throttled or delayed requests.

- Why: Right‑size quotas; protect shared capacity; avoid unexpected 429s.

- TrueFoundry: Token‑aware limits with dashboards and logs, plus guidance on choosing token‑based vs request‑based (RPS) limits.

8. Routing & Fallback Rates

- What: Distribution of traffic across backends; frequency of fallbacks/retries.

- Why: Validate A/B experiments, ensure stability during incidents, and quantify the cost/latency impact of failovers.

- TrueFoundry: Shows chosen backend and health; supports weight‑ and latency‑based routing and declarative fallback chains.

9. Provider Health Indicators

- What: Rolling latency, error, and success trends by provider/region/model.

- Why: Decide when to shift traffic proactively.

- TrueFoundry: Health checks mark backends unhealthy when thresholds are breached, and those backends are excluded from routing until they recover.

10. Prompt / Version Analytics

- What: Performance and cost by prompt or workflow version.

- Why: Detect regressions after prompt edits or model upgrades.

- TrueFoundry: Trace logs and analytics help pinpoint prompt‑level anomalies; pair them with alerts on latency spikes.

11. Compliance Signals

- What: PII or safety rule triggers, audit log coverage.

- Why: Enforce governance and prove compliance.

- TrueFoundry: RBAC, centralized keys, guardrails, and full request logs.
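Tagging requests with a prompt version (for example via the metadata header) makes before/after comparisons straightforward. A minimal Python sketch, assuming hypothetical log records carrying prompt_version, output_tokens, and latency_ms, and using a nearest‑rank p95 with an illustrative 20% threshold, flags a regression like the ~40% output‑length jump from the opening example:

```python
import math
from collections import defaultdict


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile."""
    ordered = sorted(values)
    return ordered[math.ceil(0.95 * len(ordered)) - 1]


def version_stats(records: list[dict]) -> dict[str, dict[str, float]]:
    grouped: dict[str, list[dict]] = defaultdict(list)
    for r in records:
        grouped[r["prompt_version"]].append(r)
    return {
        version: {
            "avg_output_tokens": sum(r["output_tokens"] for r in rows) / len(rows),
            "p95_latency_ms": p95([r["latency_ms"] for r in rows]),
        }
        for version, rows in grouped.items()
    }


def regressions(stats: dict, baseline: str, candidate: str,
                max_increase: float = 0.2) -> list[str]:
    """Metrics that grew by more than max_increase (20% by default) between versions."""
    flagged = []
    for metric, before in stats[baseline].items():
        after = stats[candidate][metric]
        if before > 0 and (after - before) / before > max_increase:
            flagged.append(metric)
    return flagged


records = (
    [{"prompt_version": "v1", "output_tokens": t, "latency_ms": ms}
     for t, ms in [(200, 1000), (220, 1100), (180, 900)]]
    + [{"prompt_version": "v2", "output_tokens": t, "latency_ms": ms}
       for t, ms in [(280, 1400), (300, 1500), (260, 1300)]]
)
print(regressions(version_stats(records), "v1", "v2"))  # ['avg_output_tokens', 'p95_latency_ms']
```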

Real‑World Examples

Scenario A — Support copilot budget spike

A prompt change increases output verbosity for enterprise customers. Symptoms: rising output tokens, higher p95 latency, and rising daily spend. Action with TrueFoundry: Analytics shows a jump in output tokens for the “support‑prod” environment and a cost surge for the primary model. You compare an alternate provider that shows lower TTFT and cheaper output tokens, shift 30% of traffic to it via weight‑based routing, and set an alert on “cost per conversation.”

Scenario B — Provider throttling during peak hour

At 10:00 IST, the rate of 429 (Too Many Requests) errors climbs sharply. Action with TrueFoundry: Rate‑limit dashboards confirm the throttling comes from the upstream provider. Fallback chains kick in, and routing shifts toward a healthier backend. You keep the user experience stable and later tune token quotas and backoff parameters.

Scenario C — Streaming UX feels “sticky”

Users report “the answer starts fast but then crawls.” Action with TrueFoundry: TTFT is fine, but ITL is elevated on the primary model. Latency‑based routing automatically prefers a provider with better streaming throughput; you also set an alert on ITL p95.

Scenario D — Multi‑tenant fairness

One customer’s batch job hogs tokens and slows everyone else. Action with TrueFoundry: Per‑customer token‑based rate limits enforce fair share and protect SLOs; analytics show utilization and rejected counts, which also supports upselling higher quotas.

Challenges and Considerations

- Capturing enough detail without hurting latency

Telemetry should be written asynchronously, and the hot path should avoid external calls. TrueFoundry’s design follows this principle so observability doesn’t become a bottleneck.

- Token vs request‑based controls

RPS alone is misleading for LLMs: a single long prompt can consume far more compute than many short ones. Prefer token‑aware limits and monitor utilization.

- Pricing drift and cost accuracy

Providers change prices and introduce new models frequently. Automating cost mapping to official rates keeps financial reporting correct.

- Multi‑provider consistency

Different vendors return different error codes, headers, and usage fields. A gateway should normalize these so your dashboards are apples‑to‑apples. (TrueFoundry unifies APIs and translates requests/responses to a common schema.)

- Alert fatigue

Start with a few SLO‑aligned alerts: p95 latency, error rate, cost per 1K tokens, and rate‑limit utilization. Expand as you learn normal baselines. Industry guides recommend targeted, high‑signal alerts over broad firehoses.

- Compliance & data retention

Decide what you log, how long you keep it, and who can access it. Centralized RBAC, token scoping, and audit logs are essential in regulated environments.

- Routing policies during incidents

Weighted splits are predictable; latency‑based routing is adaptive. Many teams use weight‑based routing for experiments and latency‑based routing with health checks for steady state, plus fallback chains for resilience. TrueFoundry supports both.

- Complementing application‑level tracing

If you already instrument spans in your app (tool calls, RAG steps), keep doing that. Use the gateway for uniform enforcement and provider analytics, and join the two datasets via correlation IDs.
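Joining the two views is an ordinary keyed join once both sides record the same ID. The Python sketch below assumes hypothetical record shapes and that your app propagates its correlation ID to the gateway (for example as a metadata value or via trace context); it is not a TrueFoundry export format.

```python
from collections import defaultdict


def join_on_correlation_id(app_spans: list[dict], gateway_logs: list[dict],
                           key: str = "correlation_id") -> list[dict]:
    """Inner-join app spans with gateway records sharing a correlation ID.

    Gateway fields are prefixed with "gateway_" so they don't overwrite app fields.
    """
    by_id: dict[str, list[dict]] = defaultdict(list)
    for log in gateway_logs:
        by_id[log[key]].append(log)
    joined = []
    for span in app_spans:
        for log in by_id.get(span[key], []):
            extra = {f"gateway_{k}": v for k, v in log.items() if k != key}
            joined.append({**span, **extra})
    return joined


spans = [
    {"correlation_id": "req-1", "step": "rag.retrieve", "duration_ms": 120},
    {"correlation_id": "req-2", "step": "llm.answer", "duration_ms": 2400},
]
gateway = [{"correlation_id": "req-2", "model": "provider-a/model-x",
            "ttft_ms": 900, "cost_usd": 0.004}]
for row in join_on_correlation_id(spans, gateway):
    print(row)
```

The joined rows let you see, for a single user request, how much of the app‑level step duration was spent waiting on the provider and what it cost.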


Conclusion

LLM applications are dynamic systems. Models evolve, providers change quotas and prices, prompts morph, and user behavior surprises you. The AI Gateway is where you can observe, control, and optimize all of it—if you collect the right signals and turn them into actions.

TrueFoundry’s AI Gateway gives you that operational command center. It captures latency (TTFT/ITL), tokens, cost, and errors with low overhead; enforces token‑aware rate limits, RBAC, and guardrails; and provides routing, health, and fallback visibility so you can keep experiences fast, reliable, and cost‑efficient. With granular customer/user analytics and automated, up‑to‑date cost attribution, teams can move from reactive firefighting to proactive optimization.

If you’re centralizing your GenAI stack—or untangling a sprawl of one‑off integrations—start by routing traffic through the gateway, turn on the dashboards above, and set a few SLO‑aligned alerts. You’ll gain the visibility to ship faster, contain costs, and keep your agents and users delighted.
