LangChain Integration with TrueFoundry

Supercharge Your LangChain Applications with TrueFoundry's Production-Ready Platform

LangChain has emerged as a powerful framework for building innovative applications powered by Large Language Models (LLMs). Its modularity and extensive integrations allow developers to create sophisticated conversational AI, data analysis tools, and more. However, deploying and managing these LangChain applications in a production environment introduces complexities around scalability, observability, cost management, and multi-LLM support.

This blog post will guide you through leveraging TrueFoundry with your LangChain projects to unlock a seamless and efficient development-to-production workflow.

Why Use TrueFoundry for Your LangChain Applications?

TrueFoundry addresses the critical challenges of running LangChain applications at scale:

- Unified LLM Access: Connect to various leading LLM providers (OpenAI, Anthropic, and more coming soon) through a single, consistent API endpoint, simplifying integrations and enabling easy experimentation.

- Effortless Model Deployment: Deploy and serve your LangChain-powered models with automatic scaling, ensuring high availability and optimal resource utilization without the Kubernetes complexity.

- Deep Observability with LLM Tracing: Gain unprecedented visibility into your LangChain application's LLM interactions. Monitor, debug, and optimize every step of your chains and agents in production.

- Cost and Performance Tracking: Keep a close eye on your LLM expenses and performance metrics across different models, enabling data-driven decisions for cost optimization and improved user experience.

- Production-Grade Reliability: Implement intelligent fallbacks and retry mechanisms effortlessly, ensuring your LangChain applications are resilient and dependable in real-world scenarios.

Quickstart: Connecting LangChain to TrueFoundry

Getting started with TrueFoundry and LangChain is straightforward because the TrueFoundry gateway is compatible with the OpenAI API signature. You integrate by pointing LangChain's ChatOpenAI client at the gateway instead of at OpenAI directly.
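To make "OpenAI-compatible" concrete: an OpenAI-style client sends a POST to `<base_url>/chat/completions` with a bearer token and a JSON body naming the model and the messages. The sketch below only builds such a request with the Python standard library and never sends it. The gateway URL and token are placeholders, and `build_chat_request` is a hypothetical helper written for this illustration, not part of TrueFoundry or LangChain.

```python
import json
import urllib.request


def build_chat_request(base_url: str, token: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values for illustration only.
request = build_chat_request(
    base_url="https://gateway.example.com/v1",
    token="YOUR_TRUEFOUNDRY_PERSONAL_ACCESS_TOKEN",
    model="openai-main/gpt-4o",
    prompt="Hello!",
)
print(request.full_url)
print(request.get_method())
```

In practice ChatOpenAI assembles this request for you; the point is only that switching to the gateway changes the base URL, the token, and the model identifier, while the request shape stays the same.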

Installation

First, ensure you have the LangChain OpenAI integration installed:

    pip install langchain-openai

Basic Setup

Connect your LangChain application to TrueFoundry's unified LLM gateway by updating your ChatOpenAI model:

    from langchain_openai import ChatOpenAI

    TRUEFOUNDRY_PAT = "YOUR_TRUEFOUNDRY_PERSONAL_ACCESS_TOKEN"
    TRUEFOUNDRY_BASE_URL = "YOUR_TRUEFOUNDRY_UNIFIED_ENDPOINT"

    # Point the OpenAI-compatible client at the TrueFoundry gateway.
    llm = ChatOpenAI(
        api_key=TRUEFOUNDRY_PAT,
        base_url=TRUEFOUNDRY_BASE_URL,
        model="openai-main/gpt-4o",
    )

    response = llm.invoke("What's the weather like today in Bengaluru?")
    print(response.content)

Key Points:

- Replace "YOUR_TRUEFOUNDRY_PERSONAL_ACCESS_TOKEN" with your actual TrueFoundry Personal Access Token (PAT).

- Set "YOUR_TRUEFOUNDRY_UNIFIED_ENDPOINT" to the base URL provided by your TrueFoundry setup.

- Use TrueFoundry's model naming convention: provider-main/model-name. For instance, openai-main/gpt-4o for OpenAI's GPT-4o.

With this minimal configuration, all requests made through the llm object will be automatically routed through your TrueFoundry AI Gateway, benefiting from authentication, load balancing, and comprehensive logging.
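Hardcoding a token is fine for a first test, but most projects will want to read it from the environment. The following is a minimal sketch of that pattern, not an official TrueFoundry convention: the variable names `TRUEFOUNDRY_API_KEY`, `TRUEFOUNDRY_BASE_URL`, and `TRUEFOUNDRY_MODEL`, and the helpers `gateway_settings` and `build_llm`, are assumptions made for this example.

```python
import os


def gateway_settings(default_model: str = "openai-main/gpt-4o") -> dict:
    """Read gateway credentials from the environment instead of source code.

    The environment variable names are hypothetical, chosen for this sketch.
    """
    required = ("TRUEFOUNDRY_API_KEY", "TRUEFOUNDRY_BASE_URL")
    missing = [name for name in required if not os.environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {
        "api_key": os.environ["TRUEFOUNDRY_API_KEY"],
        "base_url": os.environ["TRUEFOUNDRY_BASE_URL"],
        "model": os.environ.get("TRUEFOUNDRY_MODEL", default_model),
    }


def build_llm():
    """Create the ChatOpenAI client from the environment settings.

    The import is done lazily so gateway_settings() can be used and tested
    without langchain-openai installed.
    """
    from langchain_openai import ChatOpenAI

    return ChatOpenAI(**gateway_settings())
```

With the variables exported in your shell, `llm = build_llm()` yields a client configured the same way as in the snippet above, and switching models becomes a matter of changing `TRUEFOUNDRY_MODEL`.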

Seamless Integration with LangGraph

TrueFoundry also works with LangGraph, LangChain's framework for building multi-agent workflows. Configure the LLM nodes in your graph to use the same TrueFoundry-backed ChatOpenAI client, and their requests are routed through the gateway with the same logging and observability.

    from langchain_openai import ChatOpenAI
    from langgraph.graph import StateGraph, MessagesState
    from langchain_core.messages import HumanMessage

    TRUEFOUNDRY_PAT = "YOUR_TRUEFOUNDRY_PERSONAL_ACCESS_TOKEN"
    TRUEFOUNDRY_BASE_URL = "YOUR_TRUEFOUNDRY_UNIFIED_ENDPOINT"

    def call_model(state: MessagesState):
        # Each call goes through the TrueFoundry gateway.
        model = ChatOpenAI(
            api_key=TRUEFOUNDRY_PAT,
            base_url=TRUEFOUNDRY_BASE_URL,
            model="openai-main/gpt-4o",
        )
        response = model.invoke(state["messages"])
        # MessagesState appends the returned message to the history.
        return {"messages": [response]}

    # Single-node graph: "agent" is both the entry and the finish point.
    workflow = StateGraph(MessagesState)
    workflow.add_node("agent", call_model)
    workflow.set_entry_point("agent")
    workflow.set_finish_point("agent")
    app = workflow.compile()

    result = app.invoke({"messages": [HumanMessage(content="Tell me a short story.")]})
    print(result)

Unlocking Observability and Insights

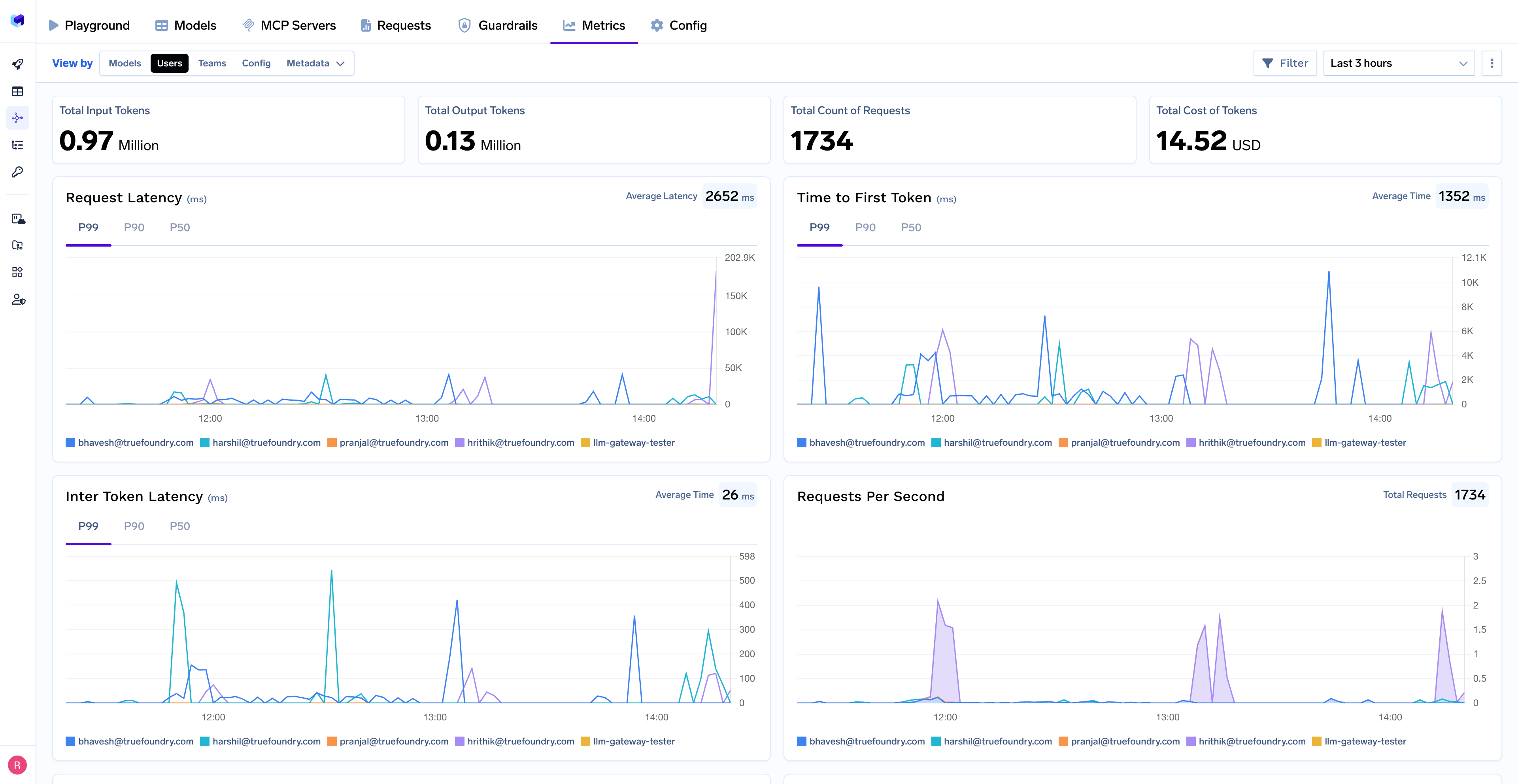

One of the most significant advantages of using TrueFoundry with LangChain is its built-in monitoring and observability capabilities. All requests flowing through the TrueFoundry AI Gateway are automatically:

- Logged: providing detailed insights into requests and responses.

- Traced: allowing you to follow the execution flow across your LangChain components.

- Monitored: tracking key performance indicators and costs.

Access your intuitive monitoring dashboard on the TrueFoundry platform to gain comprehensive visibility into your LangChain application's behavior in production. Explore request logs, analyze performance metrics like latency and token usage, track cost breakdowns by model, and identify error patterns for faster debugging.
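If you want a quick client-side cross-check of the latency and token figures shown in the dashboard, a small wrapper around `invoke` is enough. This is a sketch under assumptions, not a TrueFoundry API: `timed_invoke` and `FakeLLM` are hypothetical names, and it relies on the `usage_metadata` attribute that recent LangChain versions attach to chat responses, which may be missing for some providers or versions.

```python
import time
from types import SimpleNamespace


def timed_invoke(llm, prompt):
    """Invoke an LLM-like object and return (response, stats)."""
    start = time.perf_counter()
    response = llm.invoke(prompt)
    latency_ms = (time.perf_counter() - start) * 1000.0
    # usage_metadata may be absent or None depending on provider and version.
    usage = getattr(response, "usage_metadata", None) or {}
    stats = {
        "latency_ms": latency_ms,
        "input_tokens": usage.get("input_tokens"),
        "output_tokens": usage.get("output_tokens"),
        "total_tokens": usage.get("total_tokens"),
    }
    return response, stats


class FakeLLM:
    """Stand-in for ChatOpenAI so the sketch runs without network access."""

    def invoke(self, prompt):
        return SimpleNamespace(
            content="stub reply",
            usage_metadata={"input_tokens": 5, "output_tokens": 2, "total_tokens": 7},
        )


response, stats = timed_invoke(FakeLLM(), "Hello")
print(stats["total_tokens"])  # 7 for the stub above
```

Swapping `FakeLLM()` for the gateway-backed ChatOpenAI client gives per-call numbers measured from the client, which will include network time that gateway-side metrics do not.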

Getting Started with TrueFoundry and LangChain Today!

TrueFoundry provides the essential infrastructure and tools to take your innovative LangChain applications from development to production with confidence and efficiency. By simplifying LLM integration, automating deployments, and providing deep observability, TrueFoundry empowers your team to focus on building cutting-edge AI solutions rather than wrestling with infrastructure complexities.

Ready to experience the power of TrueFoundry for your LangChain projects?

- Visit the TrueFoundry website to learn more and sign up for a free trial.

- Explore the comprehensive TrueFoundry Documentation for detailed guides and API references.

- Dive deeper into LLM Tracing and LangGraph Tracing with TrueFoundry.

For any questions or support, please don't hesitate to reach out to the TrueFoundry team at support@truefoundry.com.

Unlock the full potential of your LangChain applications with TrueFoundry and build the future of AI, today!
