Building Low-Code AI Agents with Flowise on the TrueFoundry AI Gateway

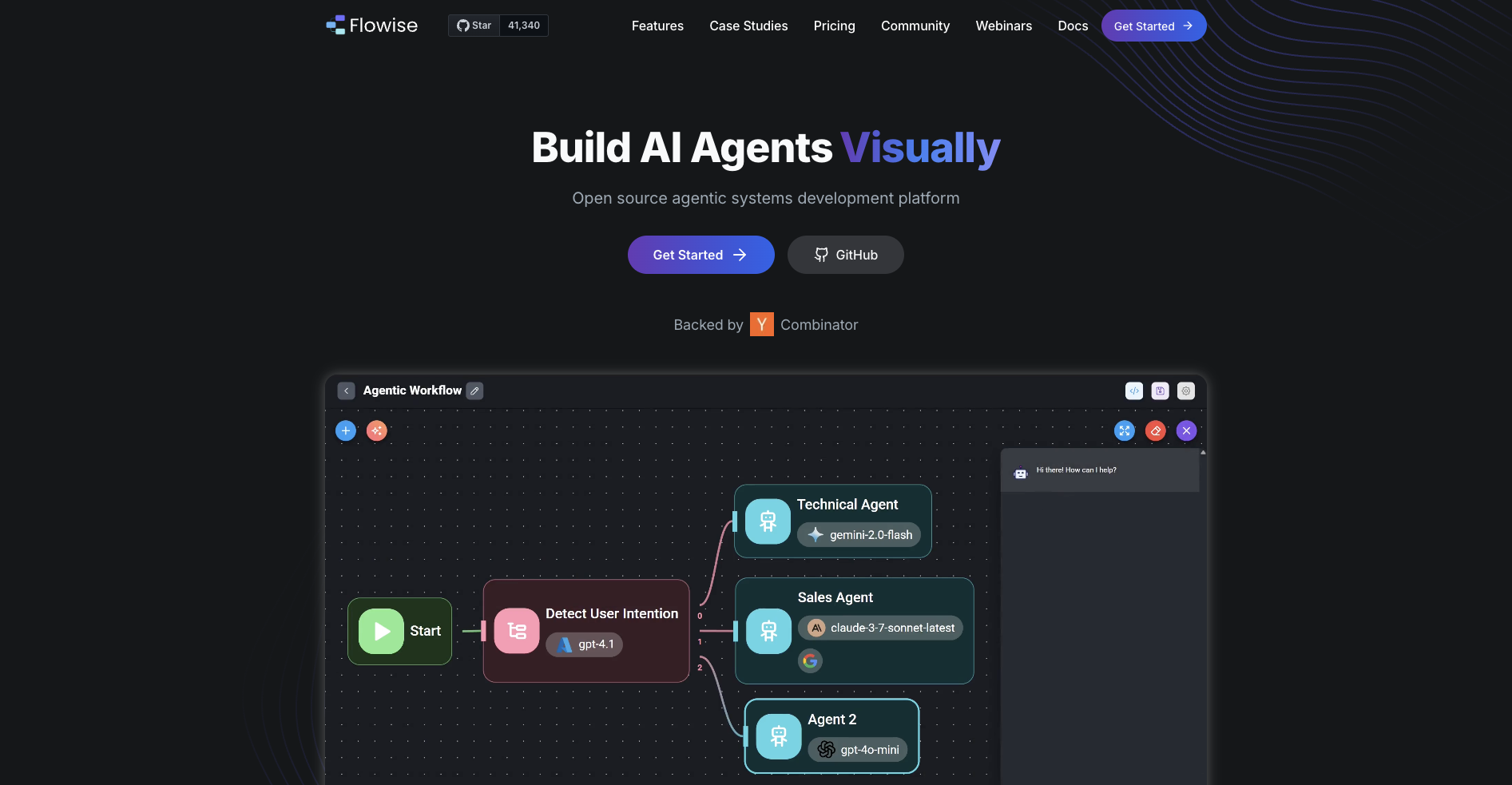

Low‑code builders like Flowise have surged because they’re fast. The drag‑and‑drop canvas lets data scientists and product managers link prompts, tools, vector search, and multi‑step agents - no Python needed. Proof‑of‑concepts ship in hours, not weeks. But when those prototypes start driving real value, the hidden tax shows up: every node calls a different model endpoint, each with its own API key, its own usage logs, and its own line on the company card. Multiply that across teams and experiments and no one can answer the basics: Who called what? How much did it cost? Was it safe?

This is exactly what the TrueFoundry AI Gateway solves. It processes over a million LLM calls a day for enterprises, applying project‑level authentication, per‑request cost controls, latency SLOs, and full audit trails - whether you’re using GPT‑5, Claude 4, Mistral, or an in‑house fine‑tuned model. Teams asked us to bring the same guardrails to Flowise agents, so this post explains why low‑code agents belong behind the Gateway and how to connect them in two short steps.

Why low-code agents belong behind the Gateway

- Centralized control: The TrueFoundry AI Gateway unifies governance for Flowise, LangChain apps, and custom microservices behind one OpenAI‑compatible endpoint.

- Flowise security and safety: Enforce role‑based access, PII redaction, policy checks, and audit logging at the edge. Safety isn’t a bolt‑on; it’s built into the request path.

- Flowise observability and tracing: Get unified traces, token counts, p50/p95 latency, and replay. Flowise tracing combined with Gateway logs makes debugging faster and more reliable.

- Flowise cost tracking: See per‑team spend, set budgets, cap runaway agents, and receive alerts before invoices spike.

- Flexibility: Swap providers (Anthropic, OpenAI, Mistral, Groq, vLLM) without changing the Flowise canvas. Your agents keep working; routing changes at the Gateway.

- Compliance ready: Automatic audit logs, policy enforcement, and data controls help you pass reviews without slowing down Flowise experimentation.
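To make the cost-tracking bullet concrete, here is a minimal sketch of how a per‑team budget cap with an early alert could behave. It is purely illustrative: the `BudgetTracker` class, the 80% alert threshold, and the team name are hypothetical and do not describe how the Gateway implements budgets internally.

```python
from collections import defaultdict


class BudgetTracker:
    """Toy per-team spend tracker (hypothetical, not the Gateway's actual logic)."""

    def __init__(self, caps_usd: dict[str, float], alert_ratio: float = 0.8):
        self.caps_usd = caps_usd        # hard cap per team, in USD
        self.alert_ratio = alert_ratio  # assumed fraction of the cap that triggers an alert
        self.spent_usd = defaultdict(float)

    def record(self, team: str, cost_usd: float) -> str:
        """Return 'blocked', 'alert', or 'ok' for a request of the given cost."""
        cap = self.caps_usd[team]
        if self.spent_usd[team] + cost_usd > cap:
            # The request would exceed the cap, so it is rejected and not counted.
            return "blocked"
        self.spent_usd[team] += cost_usd
        if self.spent_usd[team] >= self.alert_ratio * cap:
            return "alert"
        return "ok"


tracker = BudgetTracker({"search-team": 10.0})
for cost in (4.0, 5.0, 2.0):
    print(cost, tracker.record("search-team", cost))
```

The point of the sketch is the ordering: the cap is checked before spend is recorded, so a runaway agent is stopped at the request that would cross the limit rather than after the invoice arrives.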

That’s the same governance we already apply to mission‑critical traffic (>1M calls/day). Adding Flowise simply extends it to your low‑code experiments. For a quick look at TrueFoundry's AI Gateway, visit: Link

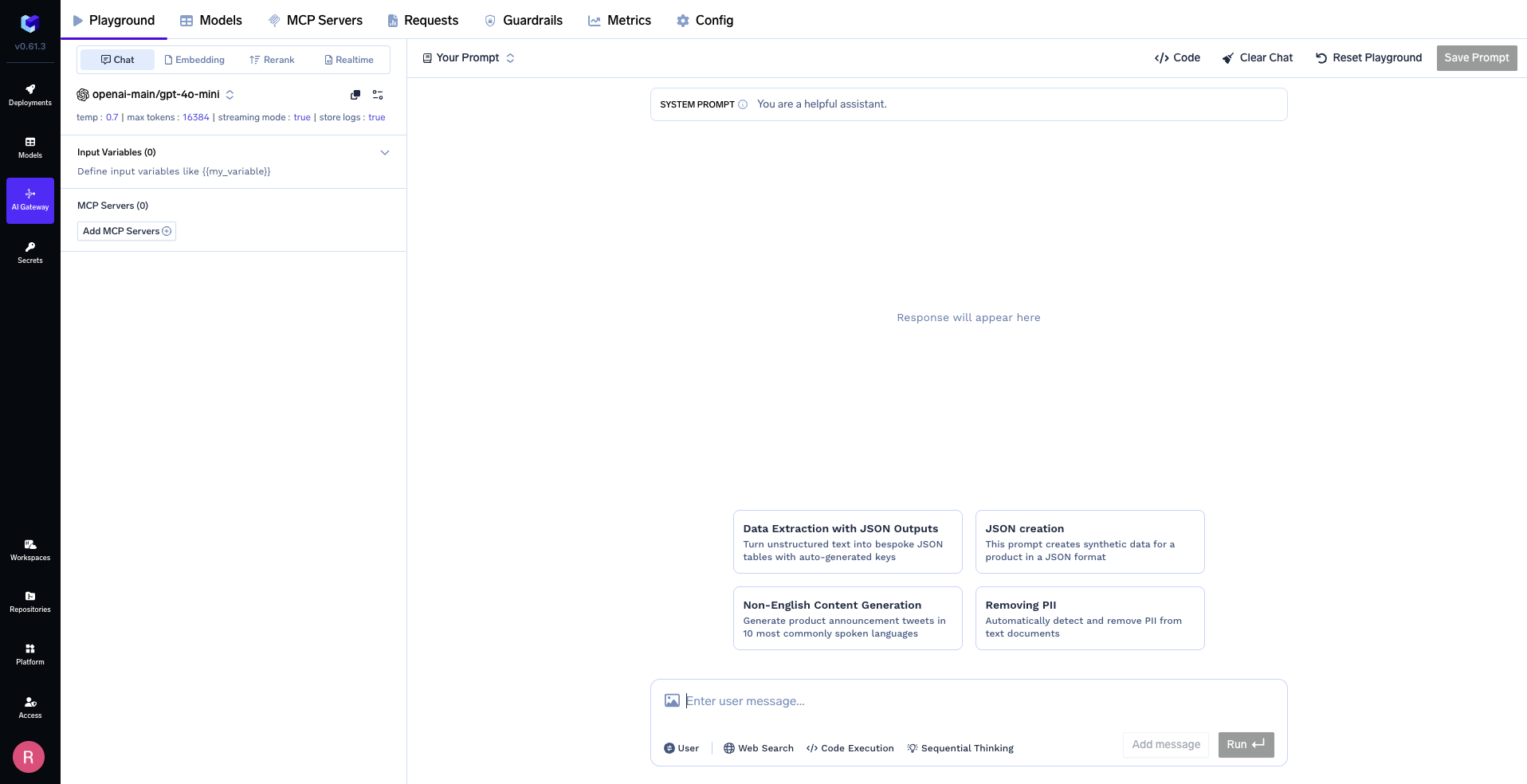

A quick look at the integration

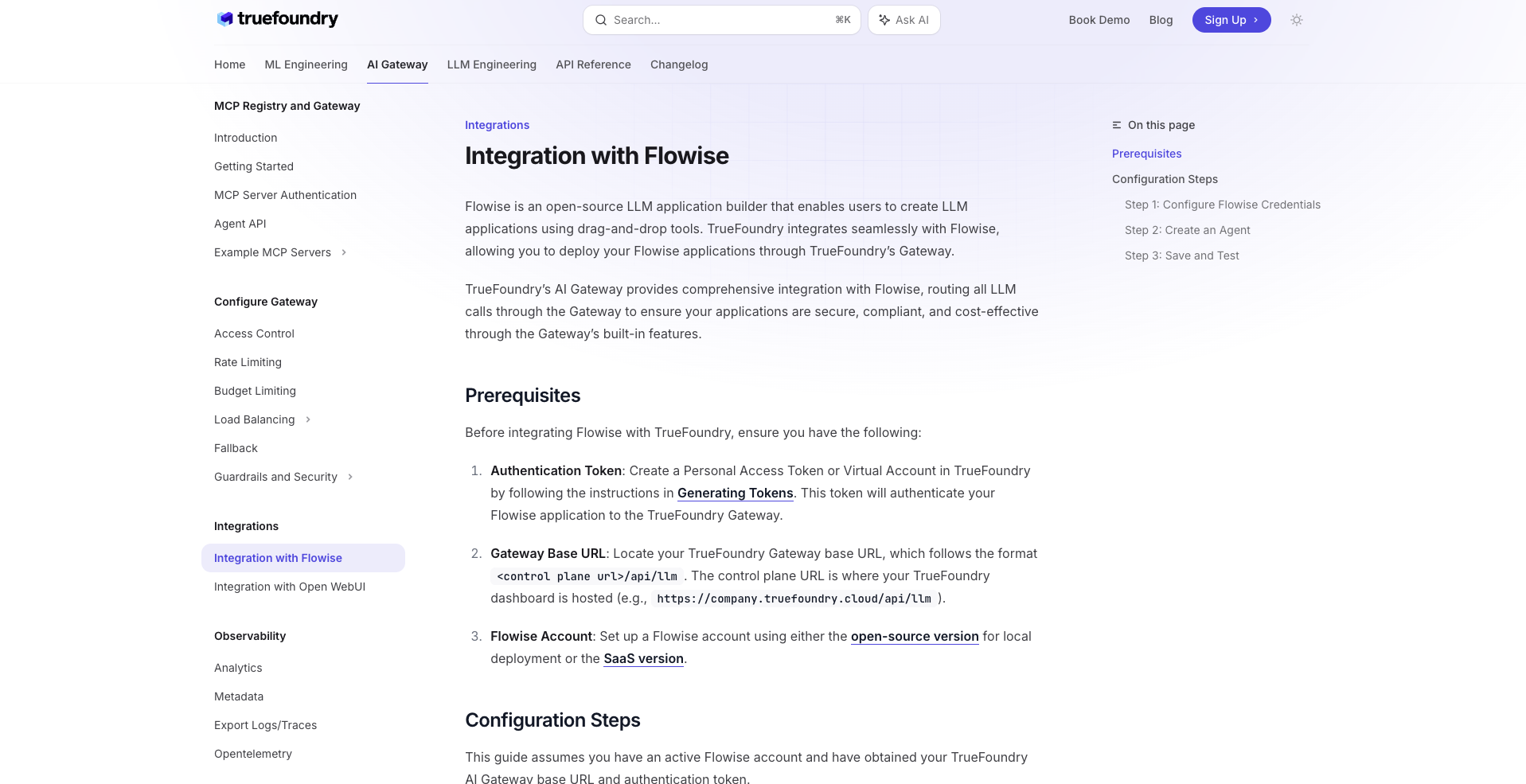

Prerequisites

- A Flowise deployment (self-hosted or SaaS)

- A TrueFoundry personal-access token

- Your Gateway base URL (https://<your-org>.truefoundry.cloud/api/llm)

Set-up in two short steps

- Paste your credentials into Flowise

  - Open Flowise → Credentials → choose “OpenAI Custom”.

  - Drop in the Gateway URL and your token; no wrapper code required.

- Build an agent and point it to your model

  - Inside the canvas, add an AgentFlow node, connect it from Start, and paste the model-ID you copied from the TrueFoundry dashboard. Click Save; every LLM call now flows through the Gateway.
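If you want to sanity-check the URL, token, and model ID outside Flowise, the request an OpenAI‑compatible client sends can be sketched roughly as follows. This is a sketch under stated assumptions: it assumes the Gateway serves the standard OpenAI `/chat/completions` route under the base URL from the prerequisites, and the `build_chat_request` helper and all placeholder values are hypothetical rather than taken from TrueFoundry's docs.

```python
import json
import urllib.request


def build_chat_request(base_url: str, token: str, model_id: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    # Assumption: the Gateway exposes the OpenAI-compatible /chat/completions route.
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model_id,  # the model ID copied from the TrueFoundry dashboard
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # personal-access token
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; substitute your own before sending.
req = build_chat_request(
    "https://example.truefoundry.cloud/api/llm",
    "my-token",
    "my-model-id",
    "Say hello",
)
print(req.get_method(), req.full_url)
# To send it with real values: urllib.request.urlopen(req)
```

Because the endpoint and token stay fixed, switching providers on the client side comes down to passing a different `model_id`.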

You can find a more detailed walk-through, complete with screenshots, in our docs.

What you gain out of the box

Once those two fields are filled, Flowise inherits everything the Gateway already does for production workloads:

- Unified observability – token counts, p50/p95 latency and full trace replay in one place.

- Cost governance – per-team spending caps and auto alerts for runaway agents.

- Vendor choice – swap Anthropic for Mistral by changing a single drop-down, no canvas edits.

- Security & compliance – violations blocked at the edge, audit logs stored automatically.
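For readers less familiar with the p50/p95 figures mentioned above, the sketch below shows how those percentiles can be derived from a list of per‑request latencies. The `latency_percentiles` helper and the sample data are hypothetical; the Gateway computes and displays these numbers for you, so this is only meant to show what they represent.

```python
import statistics


def latency_percentiles(latencies_ms: list[float]) -> dict[str, float]:
    """Return the median (p50) and 95th percentile (p95) of request latencies."""
    # With n=100, quantiles() returns 99 cut points; index 49 is p50, index 94 is p95.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94]}


# Made-up sample: most requests are fast, a few are slow.
sample = [120.0] * 90 + [900.0] * 10
print(latency_percentiles(sample))
```

p50 describes the typical request, while p95 exposes the slow tail that averages tend to hide, which is why the two are usually reported together.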

Want to get started? Sign up for a TrueFoundry account (no credit card required), follow the setup steps in our quick start guide, and you'll have a production-ready AI stack running in about 15 minutes. No complex migrations, no code rewrites - just better performance, security, and control for your AI applications.

Built for Speed: ~10ms Latency, Even Under Load

Blazingly fast way to build, track and deploy your models!

- Handles 350+ RPS on just 1 vCPU — no tuning needed

- Production-ready with full enterprise support

TrueFoundry AI Gateway delivers ~3–4 ms latency, handles 350+ RPS on 1 vCPU, scales horizontally with ease, and is production-ready. LiteLLM, by comparison, suffers from high latency, struggles beyond moderate RPS, lacks built-in scaling, and is best suited to light or prototype workloads.
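As a rough back-of-the-envelope aid, the throughput figure above can be turned into a capacity estimate. The sketch assumes throughput scales linearly with vCPUs and reserves 50% headroom; both are illustrative assumptions rather than measured behavior, and the `vcpus_needed` helper is hypothetical.

```python
import math

RPS_PER_VCPU = 350  # throughput figure quoted in the text


def vcpus_needed(peak_rps: float, headroom: float = 0.5) -> int:
    """Estimate vCPUs for a peak load, assuming linear scaling (an assumption)."""
    usable_rps = RPS_PER_VCPU * (1 - headroom)  # capacity left after reserving headroom
    return max(1, math.ceil(peak_rps / usable_rps))


# One million calls per day averages out to about 11.6 requests per second.
average_rps = 1_000_000 / 86_400
print(round(average_rps, 1), vcpus_needed(average_rps), vcpus_needed(1000))
```

Real traffic is bursty, so sizing against observed peak RPS rather than the daily average gives a safer estimate.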
